Chatbots in psychotherapy

Chatbots in therapy is an umbrella term for the use of machine-learning algorithms or other software that mimics human conversation to assist or replace humans in various aspects of therapy.

Artificial intelligence (AI) is intelligence demonstrated by machines, based on input data and algorithms alone. An AI system's primary goal is to perceive its environment and take actions that maximize its chances of achieving its goals.[1] Unlike human intelligence, AI can sometimes operate as a black box, offering little explanation of the reasoning behind its conclusions.

The primary aims of chatbots in therapy are (1) to analyze the relationships between the symptoms a patient exhibits and possible diagnoses, and (2) to act as a substitute for, or addition to, human therapists, given the current worldwide shortage of therapists. Companies are developing the technology to reduce therapists' workloads and to monitor patients more closely.

Because the use of chatbots in clinical therapy is still relatively new, it has raised a number of ethical concerns.

History

The idea of artificial intelligence stems from the study of mathematical logic and philosophy. The first theory to suggest that a machine can simulate any kind of formal reasoning is the Church–Turing thesis, proposed by Alonzo Church and Alan Turing. Since the 1950s, AI researchers have explored the idea that all human cognition can be reduced to algorithmic reasoning, and research has followed two main directions. The first is the creation of artificial neural networks, systems modeled on the biological brain. The second is symbolic AI (also known as GOFAI),[2] which represents problems in human-readable form and solves them with logic programming. Symbolic AI was the main focus from the 1950s to the 1990s, before its technical limitations shifted attention to subsymbolic AI.

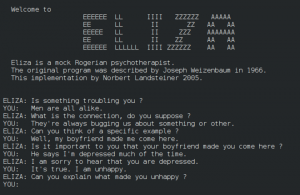

The first documented research on chatbots in psychotherapy is ELIZA,[3] a chatbot developed from 1964 to 1966 by Joseph Weizenbaum. ELIZA was created to act as a pseudo-Rogerian therapist that simulates human conversation. It was written in SLIP and ran primarily the DOCTOR script, which simulated the interactions Carl Rogers had with his patients, notably by repeating what the patient had said back to them. Although ELIZA was developed primarily to highlight the superficiality of interactions between computers and humans, and was not designed to make recommendations to patients, Weizenbaum observed that many users believed the program understood them.[4]

In the 1980s, psychotherapists began to investigate the clinical use of artificial intelligence,[6] primarily the possibility of using logic-based programming in quick intervention methods such as brief cognitive behavioral therapy (CBT). Rather than focusing on the underlying causes of mental health problems, this kind of therapy triggers and supports changes in behavior and addresses cognitive distortions.[7] However, technical limitations, such as the immaturity of logical systems and the lack of breakthroughs in artificial intelligence, together with reduced AI funding from the Strategic Computing Initiative, caused research in this field to stagnate until the mid-1990s, when the internet became accessible to the general public.

Currently, artificial intelligence is becoming increasingly widespread in psychotherapy, with developments focusing on data logging and building mental models of patients.

Development

The following information describes the general development of chatbots in psychotherapy usage, though exceptions may exist.

Three concepts are highlighted as the main considerations when building a chatbot: intentions (what the user wants, or the purpose of the chatbot), entities (the topic the user wants to talk about) and dialogue (the exact wording the bot uses).[8] Most psychologist-chatbots are similar in the latter two respects and differ mainly in their intentions. These chatbots can be separated into two main categories: chatbots built for psychological assessments, and chatbots built for help interventions.
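The three concepts can be illustrated with a toy rule-based pipeline. This is a hypothetical sketch, not how any particular product works: the intent names, keyword sets, entity vocabulary and reply wording are invented, and real platforms generally use trained classifiers rather than keyword overlap.

```python
import re
from typing import Optional

# Hypothetical intents, each identified by a set of trigger keywords.
INTENTS = {
    "book_appointment": {"appointment", "book", "schedule"},
    "report_mood": {"feel", "feeling", "mood"},
}

# Hypothetical entities: topics the user might want to talk about.
ENTITIES = {"sleep", "work", "family", "anxiety"}

# Dialogue: the exact wording the bot uses for each intent (None = not understood).
DIALOGUE = {
    "book_appointment": "Sure, when would you like to book your appointment?",
    "report_mood": "Thanks for sharing. Would you like to talk about {topic}?",
    None: "Sorry, I didn't understand that. Could you rephrase?",
}


def tokenize(text: str) -> list:
    return re.findall(r"[a-z']+", text.lower())


def detect_intent(tokens: list) -> Optional[str]:
    """Pick the intent whose keywords overlap most with the input tokens."""
    best, best_score = None, 0
    for intent, keywords in INTENTS.items():
        score = len(keywords.intersection(tokens))
        if score > best_score:
            best, best_score = intent, score
    return best


def extract_entities(tokens: list) -> list:
    """Return the known topic words mentioned in the input, in order."""
    return [t for t in tokens if t in ENTITIES]


def respond(text: str) -> str:
    tokens = tokenize(text)
    entities = extract_entities(tokens)
    topic = entities[0] if entities else "it"
    return DIALOGUE[detect_intent(tokens)].format(topic=topic)
```

For example, `respond("I feel anxious about work")` detects the `report_mood` intent and the entity `work`. Swapping in a different `INTENTS` table changes what the bot is for while leaving entity handling and dialogue wording untouched, which mirrors the observation that psychologist-chatbots differ mainly in their intentions.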

Turing Test

Given the interactive nature of therapy, most artificial intelligence tools developed for psychology are based on the Turing Test. The test was proposed in Alan Turing’s 1950 paper “Computing Machinery and Intelligence”, which asks the question “Can machines think?”[9]

The paper puts forward the idea that if a human cannot distinguish a machine’s answers from a human’s, the machine is effectively intelligent, regardless of how it reached its conclusions. This departs from earlier ideas that a machine must completely mimic human cognition to achieve intelligence, and emphasizes whether human cognitive capacity can be reproduced rather than the means of reproducing it. While chatbot-psychotherapists are not designed to fool people into thinking they are talking to another person, a “machine must be able to mimic a human being to build trust with the patient and for the patient to be willing to talk to the chatbot”,[10] particularly in the case of conversational chatbots.

Natural Language Processing

Chatbots rely on natural language processing (NLP) to generate their responses. Natural language processing is the programming of computers to understand human conversational language. Early tools such as ELIZA and AliceBot (short for Artificial Linguistic Internet Computer Entity) were mostly built on preconfigured templates hard-coded by humans. They had no framework for contextualizing input and struggled to follow a coherent conversation. Today, NLP models such as ELMo and BERT are used for feature extraction from large texts, while others such as GPT-2 are used to generate paragraphs of response text on a given topic. The prevalence of chatbots in different fields has also given rise to platforms built specifically for chatbot development, notable examples of which include IBM Watson, API.ai and Microsoft LUIS.
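To make “preconfigured templates with human hard-coding” concrete, the sketch below matches input against a fixed list of patterns containing a `*` wildcard, loosely in the spirit of the AIML pattern files used by AliceBot. It is not AIML syntax, and the patterns and replies are hypothetical. Because nothing is stored between calls, each reply depends only on the current input, which is the lack of context described above.

```python
import re

# Hypothetical hard-coded templates: (pattern, reply). "*" matches any text.
TEMPLATES = [
    ("HELLO*", "Hi there! How are you today?"),
    ("I NEED *", "Why do you need {0}?"),
    ("MY NAME IS *", "Nice to meet you, {0}."),
]
FALLBACK = "Please tell me more."


def _to_regex(pattern):
    """Turn a template into a case-insensitive regex with one group per '*'."""
    parts = [re.escape(part) for part in pattern.split("*")]
    return re.compile("^" + "(.*)".join(parts) + "$", re.IGNORECASE)


COMPILED = [(_to_regex(pattern), reply) for pattern, reply in TEMPLATES]


def respond(user_input):
    # Normalize whitespace and end punctuation; no earlier turns are consulted.
    text = " ".join(user_input.strip(" .!?").split())
    for regex, template in COMPILED:
        match = regex.match(text)
        if match:
            return template.format(*(group.strip() for group in match.groups()))
    return FALLBACK
```

After `respond("My name is Sam")`, asking `respond("What is my name?")` still returns the fallback, since no template covers the question and the earlier turn is never remembered.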

Types of chatbots

Depending on their purpose, therapeutic chatbots can be separated into three categories according to their style of dialogue and the kinds of user response they accept.

Option-based response

Option-based responses are common in chatbots built for psychological assessments or for logistical tasks such as scheduling appointments. The chatbot is either trained on or hard-coded with a pre-assessment interview that asks for demographic information about the patient, such as name, age, gender and occupation. These chatbots act more like virtual assistants, and users are explicitly told to use concise language so that the assistant can recognize entities more accurately.[12]
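A minimal sketch of such a pre-assessment interview, assuming a hypothetical fixed script: free text is accepted for the name, the age must be digits, and multiple-choice questions accept only a listed option, so a wordy answer such as “I am a student” is rejected and the question is asked again. The questions and options are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Question:
    key: str
    prompt: str
    options: list = field(default_factory=list)  # empty = free-text answer


# Hypothetical pre-assessment script collecting demographic information.
SCRIPT = [
    Question("name", "What is your name?"),
    Question("age", "How old are you? (digits only)"),
    Question("gender", "What is your gender?",
             ["female", "male", "non-binary", "prefer not to say"]),
    Question("occupation", "Which best describes your occupation?",
             ["student", "employed", "unemployed", "retired"]),
]


def validate(question, answer):
    """Return the normalized answer, or None if the bot cannot accept it."""
    answer = answer.strip()
    if question.key == "age":
        return int(answer) if answer.isdigit() else None
    if question.options:
        lowered = answer.lower()
        return lowered if lowered in question.options else None
    return answer or None


def run_interview(answers):
    """Ask each question in order, re-asking until a valid answer arrives.

    `answers` stands in for the user's replies; StopIteration is raised
    if they run out before every question has been answered.
    """
    replies = iter(answers)
    profile = {}
    for question in SCRIPT:
        while question.key not in profile:
            value = validate(question, next(replies))
            if value is not None:
                profile[question.key] = value
    return profile
```

Restricting answers to short, predictable values is what lets a simple bot fill each slot reliably, at the cost of rejecting natural phrasing.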

Sentinobot

Sentino API is a “data-driven, AI-powered service built to provide insights into one’s personality”,[13] developed in 2016. Its bot version accepts two kinds of input. Users can either type their own sentences starting with “I” (such as “I am an introvert”), or type the command “test me” to take a Big Five personality test (also known as OCEAN). Other inputs unrelated to personality assessment return one of a list of pre-coded responses. If the test is selected, users are given statements in groups of 30, to each of which they respond on a five-option scale: “disagree”, “slightly disagree”, “neutral”, “slightly agree” and “agree”.

The user is then sent a link to access their profile. Each group of 30 statements the user answers unlocks a new section of the profile. As of January 2022, there are four sections: “Big 5 Portrait”, “10 Factors of Big5”, “30 Facets” and “Adjective Cloud”.[14]
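As an illustration of how five-option answers can be turned into trait scores, the sketch below uses a common textbook approach: map each answer to 1 to 5, reverse-score negatively keyed statements, and average per trait. This is not Sentino’s documented method; the statements, their keying and the simple averaging are assumptions.

```python
# The five-option scale used in the test, mapped to numeric values.
SCALE = {
    "disagree": 1,
    "slightly disagree": 2,
    "neutral": 3,
    "slightly agree": 4,
    "agree": 5,
}

# Hypothetical items: (statement, trait, reverse_keyed).
ITEMS = [
    ("I am the life of the party.", "extraversion", False),
    ("I prefer to stay in the background.", "extraversion", True),
    ("I get stressed out easily.", "neuroticism", False),
    ("I am relaxed most of the time.", "neuroticism", True),
]


def score(responses):
    """Average each trait's item values, reverse-scoring negatively keyed items."""
    totals, counts = {}, {}
    for (_, trait, reverse), answer in zip(ITEMS, responses):
        value = SCALE[answer]
        if reverse:
            value = 6 - value  # 1<->5 and 2<->4; 3 stays 3
        totals[trait] = totals.get(trait, 0) + value
        counts[trait] = counts.get(trait, 0) + 1
    return {trait: totals[trait] / counts[trait] for trait in totals}
```

Reverse keying ensures that agreeing with “I prefer to stay in the background” lowers, rather than raises, the extraversion score.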

Unlike most AI psychotherapists, Sentinobot does not help with mental health intervention, and only serves as an alternative way to conduct a personality test.

Toolkit-based

Toolkit-based chatbots use brief CBT to focus on quick intervention. They follow a script that asks users about their mood and guides them toward a personal understanding of intervention methods. Although the dialogue takes the form of a chat, the chatbot’s primary goal is not to hold a conversation with the user. Instead, it emphasises quick check-ins and uses the information gathered to suggest an intervention by referring the user to a specific pre-made toolkit. The chatbot is accompanied by further text-based instructions or videos made by human psychotherapists. The purpose of these chatbots is not to build connections between humans and chatbots but to help users “learn skills to manage emotional resilience”.[15]
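The check-in-then-refer pattern can be sketched as a lookup from a self-reported mood rating and a chosen concern to a pre-made toolkit. The 1 to 5 rating range, the concern names and the toolkit titles are hypothetical and not taken from any particular app.

```python
from typing import Optional

# Hypothetical pre-made toolkits, keyed by the concern the user selects.
TOOLKITS = {
    "anxiety": "Breathing exercise (video)",
    "low mood": "Behavioral activation worksheet",
    "sleep": "Wind-down routine guide",
}
MAINTENANCE_TOOLKIT = "Gratitude journaling prompt"


def check_in(mood_rating: int, concern: Optional[str] = None) -> str:
    """Suggest a toolkit from a 1-5 mood rating and an optional concern."""
    if not 1 <= mood_rating <= 5:
        raise ValueError("mood_rating must be between 1 and 5")
    if mood_rating >= 4:
        # Good mood: offer a light, maintenance-style exercise.
        return MAINTENANCE_TOOLKIT
    # Lower mood: refer to the toolkit for the stated concern,
    # falling back to the low-mood toolkit if none matches.
    return TOOLKITS.get(concern, TOOLKITS["low mood"])
```

The bot never needs to understand a conversation here; two short answers are enough to pick which human-made material to show.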

Woebot

Woebot was released in 2017 by Woebot Health, a company founded by clinical research psychologist Alison Darcy. The bot describes itself less as a human replacement than as a “choose-your-own-adventure self help book”,[16] focusing on users’ goal setting, accountability, motivation, engagement and reflection on their mental health, and notes that the app is not a replacement for therapy. Each interaction in Woebot begins with a general mental health enquiry, to which users can respond either with simple options containing a few words or emojis, or with free text. Mood data is gathered through follow-up questions, after which Woebot presents a number of toolkits that teach users about cognitive distortions. The user tries to identify which cognitive distortions (if any) they are using, after which Woebot guides them in rephrasing their thoughts.

Woebot is primarily built on a decision tree and accepts options as input, but it also picks up keywords from free-text input to decide where the conversation should go next. Although the chatbot does not engage in open-ended conversation, it expresses empathy when it recognises keywords related to the user’s struggles (for example, responding “I’m sorry to hear that. That must be very tough for you.”), and its style of dialogue is modeled on “human clinical decision making and dynamics of social discourse”.
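A minimal sketch of this hybrid approach, assuming a hypothetical decision tree: each node offers fixed options, and free text is routed by keyword spotting, with an empathetic acknowledgement when a struggle-related keyword appears. The node names, keywords and prompts are invented (the empathy line reuses the example quoted above); this is not Woebot’s code.

```python
import re

# Hypothetical decision tree: each node has a prompt and option -> next node.
TREE = {
    "start": {"prompt": "How are you feeling today?",
              "options": {"good": "celebrate", "not great": "explore"}},
    "celebrate": {"prompt": "Great to hear! Want to note what went well?",
                  "options": {"yes": "end", "no": "end"}},
    "explore": {"prompt": "Would you like to look at a thought that's bothering you?",
                "options": {"yes": "distortions", "no": "end"}},
    "distortions": {"prompt": "Let's check that thought for cognitive distortions.",
                    "options": {}},
    "end": {"prompt": "Thanks for checking in. Talk soon!", "options": {}},
}

# Free-text keywords suggesting a struggle; these route to the "explore" node.
STRUGGLE_KEYWORDS = {"sad", "stressed", "anxious", "lonely", "exhausted"}
EMPATHY = "I'm sorry to hear that. That must be very tough for you."


def next_step(node, user_input):
    """Return (reply, next_node) for an option click or a free-text message."""
    options = TREE[node]["options"]
    choice = user_input.strip().lower()
    if choice in options:  # the user picked one of the offered options
        target = options[choice]
        return TREE[target]["prompt"], target
    words = set(re.findall(r"[a-z']+", choice))
    if words & STRUGGLE_KEYWORDS:  # keyword spotted in free text
        return EMPATHY + " " + TREE["explore"]["prompt"], "explore"
    # Unrecognized input: repeat the prompt and list the available options.
    return TREE[node]["prompt"] + " (" + " / ".join(options) + ")", node
```

Typing “I’m so stressed about exams” at the start node skips the option menu and lands on the same branch as choosing “not great”, preceded by the empathetic line.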

Conversational agents

Conversational chatbots imitate human speech and aim to act as a substitute for, or an addition to, a human therapist. These chatbots aim to foster a friendly relationship between the human and the bot, or to ease people who are more comfortable confiding in an avatar into therapy.

Eliza

Eliza was developed in the MIT Artificial Intelligence Laboratory by Joseph Weizenbaum and is widely considered the first chatbot. It was not made for clinical use, but its conversational style imitates Rogerian therapy, also known as person-centered therapy. In this type of therapy the client directs the conversation; the therapist does not try to interpret or judge anything, and only restates the client’s ideas to help clarify their thoughts.[17]

Eliza processes input using a basic natural language processing procedure: it looks for keywords, ranks them, and outputs a response associated with the topic of the highest-ranked keyword. For example, if the keyword “alike” is detected, Eliza categorizes the input as a conversation about similarity and responds “In what way?” Eliza can also break user input into small segments and rephrase them, either changing the grammatical subject to restate the idea (for example, the input “I am happy” might generate the response “You are happy”) or asking a probing question (such as “What makes you happy?”).[18]

By design, Eliza does not retain the context of the conversation; it processes each input only at a surface level.
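The keyword-ranking procedure above can be sketched in a few lines. This is a simplified reconstruction, not Weizenbaum’s DOCTOR script: apart from the “alike” to “In what way?” pair described above, the keyword ranks, decomposition patterns and replies are illustrative assumptions. Each call looks only at the current input, mirroring Eliza’s lack of conversational memory.

```python
import re

# Pronoun swaps used to turn the user's words back on them.
REFLECTIONS = {"i": "you", "am": "are", "my": "your", "me": "you",
               "you": "I", "your": "my"}

# Hypothetical rules: keyword -> (rank, decomposition regex, reassembly template).
RULES = {
    "alike": (10, r".*", "In what way?"),
    "i am": (5, r".*\bi am (.*)", "What makes you {0}?"),
    "because": (3, r".*\bbecause (.*)", "Is that the real reason?"),
}
DEFAULT = "Please go on."


def reflect(fragment):
    """Swap first- and second-person words, e.g. "my job" -> "your job"."""
    return " ".join(REFLECTIONS.get(word, word) for word in fragment.split())


def eliza(user_input):
    text = user_input.lower().strip(" .!?")
    # Find every keyword present, then try them from highest to lowest rank.
    found = [k for k in RULES if re.search(r"\b" + k + r"\b", text)]
    for keyword in sorted(found, key=lambda k: RULES[k][0], reverse=True):
        _, pattern, template = RULES[keyword]
        match = re.match(pattern, text)
        if match:
            return template.format(*(reflect(g) for g in match.groups()))
    return DEFAULT
```

For instance, `eliza("Men are all alike.")` returns “In what way?” and `eliza("I am happy")` returns “What makes you happy?”; input with no known keyword falls through to a neutral prompt.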

Ellie

Ellie, modelled on Eliza, was developed by the Institute for Creative Technologies at the University of Southern California.[19] It is designed to detect symptoms of depression and post-traumatic stress disorder (PTSD) through conversation with a patient, and acts as a “decision-support tool” that helps therapists make decisions about diagnosis and treatment.

Ellie gathers information from both the verbal and non-verbal content of the conversation, including body language, facial movements and intonation, and reacts accordingly using MultiSense[20] technology. Symptoms are identified by comparing the patient’s input with a database containing both civilian and military data.[21]
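As a rough illustration of comparing a patient’s signals against reference data, the sketch below flags features that deviate strongly from reference statistics using z-scores. Ellie’s actual models are not described here; the feature names, reference means and standard deviations, and the threshold of 2 are all invented, and a real system would rely on validated, trained classifiers.

```python
from dataclasses import dataclass


@dataclass
class Reference:
    mean: float
    std: float


# Hypothetical reference statistics drawn from a comparison database.
REFERENCE = {
    "smile_rate_per_min": Reference(mean=4.0, std=1.5),
    "gaze_down_fraction": Reference(mean=0.20, std=0.08),
    "pitch_variation_hz": Reference(mean=25.0, std=6.0),
}


def flag_deviations(features, threshold=2.0):
    """Return {feature: z-score} for features whose |z| meets the threshold."""
    flagged = {}
    for name, value in features.items():
        ref = REFERENCE.get(name)
        if ref is None:
            continue  # no reference data for this feature
        z = (value - ref.mean) / ref.std
        if abs(z) >= threshold:
            flagged[name] = round(z, 2)
    return flagged
```

Flagged features would only be inputs to a clinician’s judgment, consistent with Ellie’s role as a decision-support tool rather than a diagnostician.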

Replika

Replika was developed by Luka and started as a “companion friend”; it has since incorporated psychotherapy training toolkits. Replika is trained on a set of predetermined, moderated sentences, which are then run through GPT-3 and BERT models to generate new responses. Users create a feedback loop by reacting to Replika’s messages with upvotes or downvotes, emojis or direct text messages. Facial recognition and speech recognition are also implemented, so users can send Replika images and call it outside of texting.[22]
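The feedback loop can be illustrated with a toy reranker: candidate replies (from whatever generator is used) carry a base score, and accumulated upvotes and downvotes on a reply style shift future rankings. This is a hypothetical illustration rather than Replika’s actual pipeline; the style labels, base scores and feedback weight are assumptions.

```python
from collections import defaultdict


class FeedbackReranker:
    """Toy reranker: adjust candidate scores using accumulated user votes."""

    def __init__(self, feedback_weight=0.1):
        self.feedback_weight = feedback_weight
        self.votes = defaultdict(int)  # style label -> net votes (up minus down)

    def record(self, style, upvote):
        """Register one upvote (True) or downvote (False) for a reply style."""
        self.votes[style] += 1 if upvote else -1

    def rank(self, candidates):
        """Order (text, style, base_score) candidates best first; return texts."""
        def adjusted(candidate):
            _, style, base = candidate
            return base + self.feedback_weight * self.votes[style]
        return [text for text, _, _ in sorted(candidates, key=adjusted, reverse=True)]
```

Tracking votes per style rather than per exact sentence lets a single reaction influence many future replies, which is what makes the loop useful when generated responses rarely repeat word for word.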

Although Replika is advertised as a therapeutic tool on the App Store, its website and in-app persona describe it as a companion to the user, meant to be the user’s friend rather than a therapist.

Ethical concerns

The ELIZA effect

Crisis management of artificial intelligence

Data collection

Limitations

See also

References

1. Russell, S., & Norvig, P. (2009). Artificial Intelligence: A Modern Approach (3rd ed.). Upper Saddle River, NJ: Prentice Hall.

2. Haugeland, J. (1980). Psychology and computational architecture. Behavioral and Brain Sciences, 3(1), 138–139. https://doi.org/10.1017/s0140525x00002120

3. Weizenbaum, J. (1966). ELIZA: A computer program for the study of natural language communication between man and machine. Communications of the ACM, 9(1), 36–45. https://doi.org/10.1145/365153.365168

4. Weizenbaum, J. (1966). ELIZA: A computer program for the study of natural language communication between man and machine. Communications of the ACM, 9(1), 36–45. https://doi.org/10.1145/365153.365168

5. ELIZA conversation. (n.d.). [Photograph]. Wikimedia Commons. https://commons.wikimedia.org/wiki/File:ELIZA_conversation.png

6. Glomann, L., Hager, V., Lukas, C. A., & Berking, M. (2018). Patient-Centered Design of an e-Mental Health App. Advances in Intelligent Systems and Computing, 264–271. https://doi.org/10.1007/978-3-319-94229-2_25

7. Benjamin, C. L., Puleo, C. M., Settipani, C. A., Brodman, D. M., Edmunds, J. M., Cummings, C. M., & Kendall, P. C. (2011). History of Cognitive-Behavioral Therapy in Youth. Child and Adolescent Psychiatric Clinics of North America, 20(2), 179–189. https://doi.org/10.1016/j.chc.2011.01.011

8. Khan, R., & Das, A. (2017). Build Better Chatbots: A Complete Guide to Getting Started with Chatbots (1st ed.). New York: Apress.

9. Turing, A. M. (1950). Computing machinery and intelligence. Mind, LIX(236), 433–460. https://doi.org/10.1093/mind/lix.236.433

10. Romero, M., Casadevante, C., & Montoro, H. (2020). Cómo construir un psicólogo-chatbot [How to build a psychologist-chatbot]. Papeles del Psicólogo - Psychologist Papers, 41(1). https://doi.org/10.23923/pap.psicol2020.2920

11. Horev, R. (2018, November 17). BERT Explained: State of the art language model for NLP. Medium. https://towardsdatascience.com/bert-explained-state-of-the-art-language-model-for-nlp-f8b21a9b6270

12. Romero, M., Casadevante, C., & Montoro, H. (2020). Cómo construir un psicólogo-chatbot [How to build a psychologist-chatbot]. Papeles del Psicólogo - Psychologist Papers, 41(1). https://doi.org/10.23923/pap.psicol2020.2920

13. Sentino API - personality, big5, NLP, psychology analysis. (2016). Sentino. https://sentino.org/api

14. Sentino project - Explore yourself. (n.d.). Sentino Profile. https://sentino.org/profile

15. Wysa: Frequently asked questions. (n.d.). Wysa. https://www.wysa.io/faq

16. Fitzpatrick, K. K., Darcy, A., & Vierhile, M. (2017). Delivering Cognitive Behavior Therapy to Young Adults With Symptoms of Depression and Anxiety Using a Fully Automated Conversational Agent (Woebot): A Randomized Controlled Trial. JMIR Mental Health, 4(2), e19. https://doi.org/10.2196/mental.7785

17. Greenfield, D. P. (2004). Book Section: Essay and Review: Current Psychotherapies (Sixth Edition). The Journal of Psychiatry & Law, 32(4), 555–560. https://doi.org/10.1177/009318530403200411

18. Weizenbaum, J. (1966). ELIZA: A computer program for the study of natural language communication between man and machine. Communications of the ACM, 9(1), 36–45. https://doi.org/10.1145/365153.365168

19. Tweed, A. (n.d.). From Eliza to Ellie: the evolution of the AI Therapist. AbilityNet. https://abilitynet.org.uk/news-blogs/eliza-ellie-evolution-ai-therapist

20. MultiSense - VHToolkit. (n.d.). Institute for Creative Technologies Confluence. https://confluence.ict.usc.edu/display/VHTK/MultiSense

21. Robinson, A. (2015, September 17). Meet Ellie, the machine that can detect depression. The Guardian. https://www.theguardian.com/sustainable-business/2015/sep/17/ellie-machine-that-can-detect-depression

22. Gavrilov, D. (2021, October 21). Building a compassionate AI friend. Replika Blog. https://blog.replika.com/posts/building-a-compassionate-ai-friend