Algorithms

An algorithm is defined as a set of precise steps and distinct states used to express the detailed structure of a program or the order of events that occured in a system.[1] Algorithms are involved in many aspects of daily life and in complex computer science concepts. They often use repetition of operations to allow people and machines to execute tasks more efficiently by executing faster and using less resources such as memory. They can help turn systematic and tedious tasks into a faster, automated processes. Large companies particularly value robust algorithms because their infrastructure depends on efficiency to remain profitable on a massive scale.[2]

Processes like cooking a meal or reading a manual to assemble a new piece of furniture are examples of algorithms being used in everyday life.[3] Algorithms are grounded in logic. The increase in their logical complexity via advancements in technology and human effort have provided the foundations for technological concepts such as artificial intelligence and machine learning.[4] The influence of algorithms is pervasive and in computer science so it leads to an increase in ethical concerns - bias, privacy, and accountability.

Contents

History

The earliest known algorithms stem back to 1700 BC when the Egyptians created them for quick multiplication and division.[5] Since then, ancient Babylonians in 300 BC created algorithms to track farming and livestock using square roots. On a basic level, an algorithm is a system working through different iterations of a process.[6] Following this, steady advancement gave birth to fundamental mathematical algorithm families like algebra, shaping the field of mathematics with its all-purpose formulas. The man often accredited as “The Father of Algebra,” Muhammad ibn Mūsa al-Khwarizmī, was also the one who gave English the word “algorithm” around 850 AD, as he wrote a book Al-Khwarizmi on the Hindu Art of Reckoning, which in Latin translates to Algoritmi de Numero Indorum. The English word "algorithm" was adopted from this title.

Many educational trainings teach mathematical formulas, such as rudimentary multiplication and division methods,[7] that serve as connections between explicit algorithms and mathematics.

A myriad of other fundamental algorithms have been developed throughout history, ranging from pure mathematics to important computer science stalwarts, extending from ancient times up through modern day. The computer revolution led to algorithms that can filter and personalize results based on the user.

The Algorithm Timeline outlines the advancements in the development of the algorithm as well as a number of the well known algorithms developed from the Medieval Period until modern day.

Computation

Another cornerstone for algorithms relates to Alan Turing and his contributions to cognitive and computer science. Turing conceptualized the concept of cognition and designed ways to emulate human cognition with machines. This process turned the human thought process into mathematical algorithms and it led to the development of Turing Machines. It capitalized on these theoretical algorithms to perform unique functions and the development of computers so it contributes to the field of computer science.[8] As their name suggests, computers utilized specific rules or algorithms to compute and it is these machines (or sometimes people)[9] that most often relate to the concept of algorithms that is used today. With the advent of mechanical computers, the computer science field paved the way for algorithms to run the world as they do now by calculating and controlling an immense quantity of facets of daily life.

Advancements In Algorithms

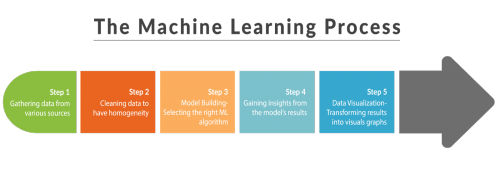

In the years following Alan Turing’s contributions, computer algorithms increased in magnitude and complexity. Advanced algorithms, such as artificial intelligence is defined as utilizing machine learning capabilities.[10] This level of algorithmic improvement provided the foundation for more technological advancement.

The machine learning process shown above describes how machine learning algorithms can provide more features and functionality to artificial intelligence.

Classifications

There are many different classifications of algorithms, some are more well-suited for particular families of computational problems than others. In many cases, the algorithm one chooses to make for a given problem will have tradeoffs dealing with time complexity and memory usage.

Recursion

A recursive algorithm is known as a function that calls itself forming a recursive relation with itself. It is an algorithmic approach in which one solves an issue by breaking a problem onto smaller and smaller sub-problems until the sub-problem becomes so small the answer is obvious, this is the base case. Then using the solution (base case) of this said sub-problem, one can find the solution of the sub-problem that invoked the call to itself. An example of recursive relationship is finding the factorial of a number n - which is n * factorial of n - 1. Eg. fact(3) = 3 * fact(2) and fact(2) = 2 * fact(1) where fact(1) is the base case since it is obvious the factorial of 1 is 1. Hence fact(2) = 2 * 1 = 2, so fact(3) = 3 * 2 = 6. A recursive function keeps using itself as an input until a base case is reached so it can stop and propagate back out the recursive stacks. A base case must be present, otherwise the recursive function will never stop calling itself. Because recursion incurs lots of function calls, it is one of the main source of stack overflow. Whereas, the program has to save more stacks than space available before even reaching the base case. As a result, some recursive algorithms are known as tail recursive in which recursive calls happens at the end of the function allowing only one stack to be saved. For more information on recursion read Recursion.

Serial, Parallel or Distributed

Serial algorithm is an algorithm in which calculation is done in sequential manners on one core, it follows a defined order in solving a problem. Parallel algorithms utilizes the fact that modern computers have more than one cores, so that computations that are not interdependent could be calculated on separate cores. This is referred to as multi-threaded algorithm where each thread is a series of executing commands and they are inter-weaved to ensure correct output without deadlock. A deadlock occurs when there are interdependence between more than one thread so that none of the threads can continue until one of the other threads continues. Parallel algorithm is important that it allows more than one program to run at the same time by leveraging a computer's available resources otherwise would not have been possible with serial algorithm. Finally, distributed algorithm is similar to parallel algorithm in that it allows multiple programs to run at once with the exception that instead of leveraging multiple cores in a single computer it leverages multiple computers that communicates through a computer network. Similar to how parallel algorithm builds on serial algorithm with the added complexity of synchronizing threads to prevent deadlock and ensure correct outputs, distributed algorithm builds on parallel algorithm with the added complexity of managing communication latency and defining order since it is impossible to synchronize every computer's clock in a distributed system without significant compromises. A distributed algorithm provides extra reliability in that data are stored in more than one location so that one failure would not result in loss of data and by doing computation in multiple computer it can potentially have even faster computational speed.

Deterministic vs Non-Deterministic

Deterministic algorithms are those that solve problems with exact precision and ordering so a given input would produce the same output every time. Non-deterministic algorithms either have data races or utilize certain randomization, so the same input could have a myriad of outputs. Non-deterministic algorithms can be represented by flowcharts, programming languages, and machine language programs. However, they vary in that they are capable of using a multiple-valued function whose values are the positive integers less than or equal to itself. In addition, all points of termination are labeled as successes or failures. The terminology "non-deterministic" does not imply randomness, of rather a kind of free will[11]

Exact vs Approximation

Some algorithms are implemented to solve for the exact solutions of a problem, whereas some problems are implemented for an approximation or heuristic. Approximation is important in which heuristics could provide an answer that is good enough such that the excessive computational time necessary to find the actual solution is not warranted since one would get minimal gains while expanding a great deal of resources. An example where an approximation is warranted is the traveling salesman's algorithm in which has computational complexity of O(n!), so an heuristic is necessary since some high values of n are not even possible to calculate.

Design Paradigms

Paradigm is also a good one to distinguish algorithms since they serve to solve different issues

Brute Force

A brute force algorithm is the most "naive" approach one can take in attempting to solve a particular problem. A solution is reached by searching through every single possible outcome before arriving at an answer. In terms of complexity or Big-O notation, brute force algorithms typically represent the highest order complexity compared to other potential solutions for a given problem. While brute force algorithms may not be considered the most efficient option for solving computational problems, they do offer reliability as well as a guarantee that a solution to a given problem will eventually be found.

Divide and Conquer

A divide and conquer algorithm divides a problem into smaller sub-problems then conquer each smaller problems before merging them together to solve the original problem. An example of divide and conquer is merge sort whereby a list is split into smaller sorted lists then merged together to sort the original list.

Dynamic Programming

Dynamic programming takes advantage of overlapping subproblems to more efficiently solve a larger computational problem. The algorithm first solves less complex subproblems and stores their solutions in memory. Then more complex problems will find these solutions using some method of lookup to find the solution and implement it in the more complex problem to find its own solution. The method of lookup enables solutions to be computed once and used multiple times[12]. This method reduces the time complexity from exponential to polynomial. Time complexities will be explained below using the Big-0 notation.

Backtracking

A backtracking algorithm is similar to brute force with the exception that as soon as it reaches a node where a solution could never be reached from said node on, it prunes all the subsequent node and backtracks to the closest node that has the possibility to be right.

Greedy Algorithm

An intuitive approach to design algorithms that don’t always yield the optimal solution. This approach required a collection of candidates, or options, in which the algorithm selects in order to satisfy a given predicate. Greedy algorithms can either favor the least element in the collection or the greatest in order to satisfy the predicate[13].

An example of a greedy algorithm may take the form of selecting coins to make change in a transaction. The collection includes the official coins of the U.S. currency (25 cents, 10 cents, 5 cents, and 1 cent) and the predicate would be to make change of 11 cents. The greedy algorithm will select the greatest of our collection to approach 11, but not past it. The algorithm will first select a dime, then a penny, then end when 11 cents has been made.

Complexity and Big-O Notation

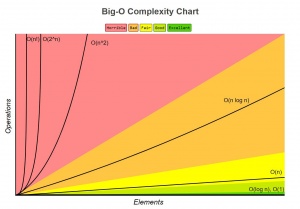

Since each computer has different computational time, it is illogical to measure the efficiency of an algorithm based on how fast it runs on a particular computer. However, using the fact that each operation must take some time to compute, measuring the efficiency of an algorithm is standardized by checking how well it grows with more inputs. In other words, computer scientists calculate how much does computational time increases with increasing inputs. Since this form of measurement merely intends to see how well an algorithm grows, the constants are left out since with high inputs these constants are negligible anyways. Big-O notation specifically describes the worst-case scenario and measures the time or space the algorithm uses[14]. Big-O notation can be broken down into order of growth algorithms such as O(1), O(N), and O(log N), with each notation representing different orders of growth. The later, log algorithms -commonly referred to as logarithms, are bit more complex than the rest, log algorithms take a median from a data set and compare it to a target value, the algorithm continues to halve the data as long as the median is higher or lower than the target value[14]. An algorithm with a higher Big-O is less efficient at large scales, for example O(N) runs slower than O(1).

Ethical Dilemmas

With the relevance of algorithms as well as their sheer magnitude, ethical dilemmas were bound to arise. There is a vast list of potential ethical issues relating to algorithms and computer science, including issues of privacy, data gathering, and bias.

Bias

Algorithms are designed by humans who are biased by the society they live in. Therefore, humans inherently code their own biases into algorithms.

Joy Buolamwini, a graduate computer science student at MIT, experienced an example of this. The facial recognition software she was working on was unable to detect her face, as she had a skin tone that had not been accounted for in the facial recognition algorithm. This is because the software had used machine learning with a dataset that was not diverse enough, and as a result, the algorithm did not recognize her face as it was supposed to.[15] Safiya Noble discusses instances of algorithmic search engines reinforcing racism in her book, "Algorithms of Oppression".[16] Bias like this occurs in countless algorithms, be it through insufficient machine learning data sets, or the algorithm developers own fault, among other reasons, and it has the potential to cause legitimate problems even outside the realm of ethics.

COMPAS is algorithm written to determine whether a criminal is likely to re-offend using information like age, gender, and previously committed crimes. Tests have found it to be more likely to incorrectly evaluate black people than white people because it has learned on historical criminal data, which has been influenced by our biased policing practices.[17]

Jerry Kaplan is a research affiliate at Stanford University’s Center on Democracy, Development and the Rule of Law at the Freeman Spogli Institute for International Studies, where he teaches “Social and Economic Impact of Artificial Intelligence.” According to Kaplan, algorithmic bias can even influence whether or not a person is sent to jail. A 2016 study conducted by ProPublica indicated that software designed to predict the likelihood an arrestee will re-offend incorrectly flagged black defendants twice as frequently as white defendants in a decision-support system widely used by judges. Ideally, predictive systems should be wholly impartial and therefore be agnostic to skin color. However, surprisingly, the program cannot give black and white defendants who are otherwise identical the same risk score, and simultaneously match the actual recidivism rates for these two groups. This is because black defendants are re-arrested at higher rates that their white counterparts (52% versus 39%), at least in part due to racial profiling, inequities in enforcement, and harsher treatment of black people within the justice system. [18]

Privacy And Data Gathering

The ethical issue of privacy is also highly relevant to the concept of algorithms. Information transparency [19] is an import point regarding these issues. In popular social media algorithms, user information is often probed without the knowledge of the individual, and this can lead to problems. It is often not transparent enough how these algorithms receive user data, resulting in often incorrect information which can affect both how a person is treated within social media, as well as how outside agents could view these individuals given false data. Algorithms can also often infringe on a user’s feelings of privacy, as data can be collected that a person would prefer to be private. Data brokers are in the business of collecting peoples in formation and selling it to anyone for a profit, like data brokers companies often have their own collection of data. In 2013, Yahoo was hacked, leading to the leak of data pertaining to approximately three billion users.[20] The information leaked contained data relating to usernames, passwords, as well as dates of birth. Privacy and data gathering are common ethical dilemmas relating to algorithms and are often not considered thoroughly enough by algorithm’s users.

The Filter Bubble

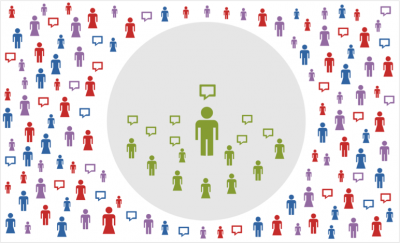

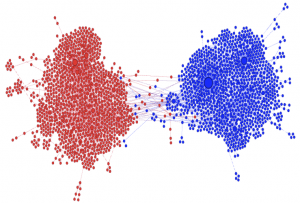

Algorithms can be used to filter results in order to prioritize items that the user might be interested in. On some platforms, like Amazon, people can find this filtering useful because of the useful shopping recommendations the algorithm can provide. However, in other scenarios, this algorithmic filtering can become a problem. For example, Facebook has an algorithm that re-orders the user's news feed. For a period of time, the technology company prioritized sponsored posts in their algorithm. This often prioritized news articles, but there was no certainty on whether these articles came from a reliable source, simply the fact that they were sponsored. Facebook also uses its technology to gather information about its users, like which political party they belong to. This combined with prioritizing news can create a Facebook feed filled with only one party's perspective. This phenomenon is called the filter bubble, which essentially creates a platform centered completely around its user's interests.

Many, like Eli Pariser, have questioned the ethical implications of the filter bubble. Pariser believes that filter bubbles are a problem because they prevent users from seeing perspectives that might challenge their own. Even worse, Pariser emphasizes that this filter bubble is invisible, meaning that the people in it do not realize that they are in it. [21] This creates a huge lack of awareness in the world, allowing people to stand by often uninformed opinions and creating separation, instead of collaboration, with users who have different beliefs.

Because of the issues Pariser outlined, Facebook decided to change their algorithm in order to prioritize posts from friends and family, in hopes of eliminating the effects of the potential filter bubble.

Filter Bubble in Politics

Another issue that these Filter Bubbles create are echo chambers; Facebook, in particular, filters out [political] content that one might disagree with, or simply not enjoy [22]. The more a user "likes" a particular type of content, similar content will continue to appear, and perhaps content that is even more extreme.

Agency And Accountability

Algorithms make "decisions" based on the steps they were designed to follow and the input they received. This can often lead to algorithms as autonomous agents[23], taking decision making responsibilities out of the hands of real people. Useful in terms of efficiency, these autonomous agents are capable of making decisions in a greater frequency than humans. Efficiency is what materializes the baseline for algorithm use in the first place.

From an ethical standpoint, this type of agency raises many complications, specifically regarding accountability. It is no secret that many aspects of life are run by algorithms. Even events like applying to jobs are often drastically effected by these processes. Information like age, race, status, along with other qualifications, are all fed to algorithms, which then take agency and decide who moves further along in the hiring process and who is left behind. Disregarding inherent biases in this specific scenario, this process still serves to reduce the input of real humans and decrease the number of decisions that they have to make, and what is left over is the fact that autonomous agents are making systematic decisions that have extraordinary impact on people's lives. While the results of the previous example may only culminate to the occasional disregard of a qualified applicant or resentful feelings, this same principle can be much more influential.

The Trolley Problem in Practice

Consider autonomous vehicles, or self-driving cars, for instance. These are highly advanced algorithms that are programmed to make split second decisions with the greatest possible accuracy. In the case of the well-known "Trolley Problem"[24], these agents are forced to make a decision jeopardizing one party or another. This decision can easily conclude in the injury or even death of individuals, all at the discretion of a mere program.

In situations like this, where one must be held responsible from the eyes of the law, society, or from an ethical viewpoint, the issue of accountability is raised. It is rarely feasible to simply attribute the culpability to the program itself, as it can not be reasonably held accountable in the same vein that a human might. While some may believe the responsibility then falls to the developer of the program making the decisions[25], and others may place the blame on the users or humans involved in the situation (e.g. the people who caused the autonomous vehicle to be placed in this situation... think jaywalkers or similar offenders), regardless, the ethical implications of such a circumstance are perplexing and wide-reaching, with sparsely an easy solution to be found.

These complications will continue to arise, especially as algorithms continue to make autonomous decisions at grander scales and rates. Determining responsibility for the decisions these agents make will continue to be a vexing process, and will no doubt shape in some form many of the advanced algorithms that will be developed in the coming years.

Intentions and Consequences

The ethical issues that are common in algorithm implementations can be both deliberate and unintentional. In addition to the examples of Buolamwini and Yahoo mentioned above, other cases where the algorithm's intent and outcomes differ are noted below.

YouTube Radicalization

Scholar and technosociologist Zeynep Tufekci has claimed that "YouTube may be one of the most powerful radicalizing instruments of the 21st century."[26] As YouTube algorithms aim maximize the amount of time that viewers spend watching, it inevitably discovered that the best way to do this was to show videos that slowly "up the stakes" of the subject being watched - from jogging to ultramarathons, from vegetarianism to veganism, from Trump speeches to white supremacist rants.[26] Thus, while the intention of Youtube is to keep viewers watching (and bring in advertisement money), they have unintentionally created a site that shows viewers more and more extreme content - contributing to radicalization. Such activity circles back to and produces filters and echo chambers.

References

- ↑ Cormen, Thomas H. et al. Introduction to Algorithms. MIT Press, 2009.

- ↑ Rastogi, Rajeev, and Kyuseok Shim. "Scalable algorithms for mining large databases." Tutorial notes of the fifth ACM SIGKDD international conference on Knowledge discovery and data mining. ACM, 1999.

- ↑ “What Are Algorithms?” The Economist, The Economist Newspaper, 29 Aug. 2017, www.economist.com/the-economist-explains/2017/08/29/what-are-algorithms.

- ↑ McClelland, Calum. “The Difference Between Artificial Intelligence, Machine Learning, and Deep Learning.” Medium, IoT For All, 4 Dec. 2017, medium.com/iotforall/the-difference-between-artificial-intelligence-machine-learning-and-deep-learning-3aa67bff5991.

- ↑ Stepanov, A. and Rose, D. From Mathematics to Generic Programming: The First Algorithm. Chapter 2. 2014. http://www.informit.com/articles/article.aspx?p=2264460

- ↑ “A Brief History of Algorithms (and Why It's so Important in Automation, Machine Learning, and Everyday Life).” e27, e27.co/brief-history-algorithms-important-automation-machine-learning-everyday-life-20161207/.

- ↑ Algorithms for Multiplying and Dividing Whole Numbers. www.ms.uky.edu/~rwalker/ma201/3.4.pdf.

- ↑ "Alan Turing: Algorithms, Computation, Machines.” Brooklyn Institute for Social Research, thebrooklyninstitute.com/items/courses/alan-turing-algorithms-computation-machines/.

- ↑ “Human Computers.” NASA, NASA, crgis.ndc.nasa.gov/historic/Human_Computers.

- ↑ “The History of Artificial Intelligence.” Science in the News, 28 Aug. 2017, sitn.hms.harvard.edu/flash/2017/history-artificial-intelligence/.

- ↑ " FLOYD, ROBERT W. “Non-Deterministic Algorithms.” Carnegie Institute of Technology , Nov. 1996, p. 1–17."

- ↑ “Dynamic Programming.” Wikipedia, Wikimedia Foundation, 15 Feb. 2019, en.wikipedia.org/wiki/Dynamic_programming.

- ↑ “Greedy Algorithms.” Brilliant Math & Science Wiki, brilliant.org/wiki/greedy-algorithm/.

- ↑ 14.0 14.1 Bell, Rob. “A Beginner's Guide to Big O Notation.” A Beginner's Guide to Big O Notation - Rob Bell, rob-bell.net/2009/06/a-beginners-guide-to-big-o-notation/.

- ↑ Buolamwini, Joy. “How I'm Fighting Bias in Algorithms – MIT Media Lab.” MIT Media Lab, www.media.mit.edu/posts/how-i-m-fighting-bias-in-algorithms/.

- ↑ Noble, Safiya "Algorithms of Opression" https://en.wikipedia.org/wiki/Algorithms_of_Oppression

- ↑ Pro Publica Larson, Mattu, Kirchner, Angwin, May 23, 2016

- ↑ Kaplan, J. (2018, December 17). Why your AI might be racist. Retrieved from https://www.washingtonpost.com/opinions/2018/12/17/why-your-ai-might-be-racist/?utm_term=.3017d6f2e958

- ↑ Turilli, Matteo, and Luciano Floridi. “The Ethics of Information Transparency.” Ethics and Information Technology, vol. 11, no. 2, 2009, pp. 105–112., doi:10.1007/s10676-009-9187-9.

- ↑ Griffin, Andrew. “Yahoo Admits It Accidentally Leaked the Personal Details of Half the People on Earth.” The Independent, Independent Digital News and Media, 4 Oct. 2017, www.independent.co.uk/life-style/gadgets-and-tech/news/yahoo-hack-details-personal-information-was-i-compromised-affected-leak-a7981671.html.

- ↑ Eli Pariser. “The Filter Bubble.” Penguin Books, 2012.

- ↑ https://theconversation.com/explainer-how-facebook-has-become-the-worlds-largest-echo-chamber-91024

- ↑ “Autonomous Agent.” Autonomous Agent - an Overview | ScienceDirect Topics, www.sciencedirect.com/topics/computer-science/autonomous-agent.

- ↑ Roff, Heather M. “The Folly of Trolleys: Ethical Challenges and Autonomous Vehicles.” Brookings, Brookings, 17 Dec. 2018, www.brookings.edu/research/the-folly-of-trolleys-ethical-challenges-and-autonomous-vehicles/.

- ↑ Martin, Kirsten. “Ethical Implications and Accountability of Algorithms.” SpringerLink, Springer Netherlands, 7 June 2018, link.springer.com/article/10.1007/s10551-018-3921-3.

- ↑ 26.0 26.1 [1] Tufekci, Zeynep. “YouTube, the Great Radicalizer.” The New York Times, The New York Times, 10 Mar. 2018, www.nytimes.com/2018/03/10/opinion/sunday/youtube-politics-radical.html.