Difference between revisions of "Project Green Light"

Revision as of 18:58, 6 February 2023

Project Green Light is a city-wide police surveillance system in Detroit, Michigan. The first public-private-community partnership of its kind,[1] Project Green Light Detroit uses real-time police monitoring with high-resolution (1080p) cameras whose images can be linked to the state of Michigan's facial recognition database, SNAP. The project aims to deter and reduce crime in the city, and the system is used in the pursuit of criminals.

History

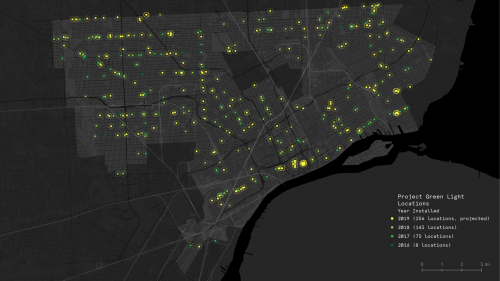

Project Green Light launched on January 1, 2016, with support from Detroit Mayor Mike Duggan. Eight gas stations in the city were recruited with the Detroit Police Department's assurance that the flashing green lights and cameras installed at each location would help identify suspects in any future crimes and help lower crime overall.[2] With continued governmental and state support, Project Green Light expanded on the justification that Detroit Police Department data showed a decrease in “criminal activity” at the original eight locations. The number of locations whose owners pay for Project Green Light infrastructure had grown to over 700 as of January 2021.

In 2017, the city of Detroit signed a contract with DataWorks Plus and began integrating its facial recognition software, FacePlus, into Project Green Light technologies.[3] FacePlus can automatically search any face that enters a camera's field of vision and compare it against a statewide database.

According to news reports from June 2020,[4] Project Green Light was allegedly used on crowds at Black Lives Matter protests, with supplemental facial recognition technology used to identify protestors who did not adhere to social distancing protocols, a violation that carries a fine of up to $1,000. Project Green Light images have also allegedly been used to help police identify and locate #BLM protestors whose immigration status was in question.[5]

Methodology

As a public-private partnership, Project Green Light is a paid service that Detroit businesses, residential complexes, schools, churches, and other institutions opt into voluntarily. Each participant pays for the installation of cameras, signage, decals, and green lights that identify it as part of the project. For businesses, these materials cost between $4,000 and $6,000.[6] The cameras are strategically placed to capture the license plates and faces of individuals at each location. Footage is available 24/7 and is monitored and virtually patrolled in real time by police officers, analysts, and volunteers. Much of this work is done at Detroit's Real-Time Crime Center (RTCC), which also launched in 2016 at an initial cost to the city of $8 million, with an additional $4 million for expansion in 2019.[7] The RTCC prioritizes Project Green Light affiliates over non-affiliates and works with government agencies such as the FBI and the Department of Homeland Security, as well as private partners including DTE and Rock Financial.[8] This purportedly enables a rapid Detroit Police Department response to situations in progress, which proponents cite in presenting Project Green Light as a highly effective technological solution to crime in high-risk areas.

Concerns

Civil Liberties

Since 1999, every person who obtains a state ID in Michigan has had their image added to the state's facial recognition database (SNAP). These images are used to profile people seen in Project Green Light video footage. City activists have objected to this practice, and their opposition culminated in a change to facial recognition policy that prohibits real-time surveillance and allows facial recognition only in cases of violent crime and home invasion. (Facial recognition - National League of Cities. (n.d.). Retrieved February 6, 2023, from https://www.nlc.org/wp-content/uploads/2021/04/NLC-Facial-Recognition-Report.pdf) Additionally, the use of real-time tracking, especially on mobile devices, has been challenged. Opponents of the project have objected that the technologies and methods in place violate the First, Fourth, and Fourteenth Amendments.

Surveillance of Black Bodies

A 2019 study by the National Institute of Standards and Technology (NIST) found that false positive rates in face recognition algorithms are higher for women than for men, and are highest for American Indian, African American, and East Asian individuals within domestic law enforcement image databases. (Grother, Patrick J., et al. “Part 3: Demographic Effects.” Face Recognition Vendor Test (FRVT). U.S. Dept. of Commerce, National Institute of Standards and Technology, 2019.) In general, facial recognition has been shown to be less accurate for women and darker-skinned individuals.[9] These demographic differentials mean that misidentification and wrongful accusation are much more likely for people who have historically been heavily surveilled and profiled, furthering the displacement of, and unjust discrimination against, people of color. Inaccuracies carry the risk of negative consequences for innocent civilians who are misidentified. A U.S. government study has shown that even the best facial recognition algorithms misidentify Black people at five to ten times the rate of white people. In Detroit, at least two individuals, Michael Oliver and Robert Williams, have been wrongly accused, through Project Green Light surveillance technologies, of involvement in crimes they had nothing to do with.

Sociologist Ruha Benjamin has described contemporary biometric surveillance as one of the technologies used to support racist policies and practices through the tracking and control of Black people throughout American history.[10] Surveillance of Black bodies has a long history that can be traced back to slavery. The “lantern laws” of the 18th century required Black people to illuminate themselves when passing white people in the street. The use of facial recognition on racial justice protestors recalls the COINTELPRO surveillance of Black activists and community organizers in the 1960s.

Video surveillance in Detroit, a city with an 80% Black population, has been theorized as a continuing legacy of oppression. Introducing this technology through Project Green Light makes this massive facial recognition experiment the first of its kind to be conducted on a concentrated population of Black individuals.

Security vs Safety

The idea that public security measures such as surveillance equate to public safety has led local governments to make problematic decisions whose outcomes many believe to be ineffective and unsafe for community members. Policies such as predictive policing may disproportionately affect marginalized (undocumented, formerly incarcerated, unhoused, poor, etc.) and minoritized (Asian, Black, Indigenous, Latinx) populations.[11]

Algorithmic Bias

Algorithmic bias refers to statistical errors, often rooted in poorly composed datasets, that produce an outcome for group A that differs from the outcome for groups B, C, or D. These outcomes generally perpetuate the legacies of exclusion, and of disproportionate advantage to some, that have shaped our legal structures today. Algorithmic biases are due in part to human social norms and ideologies that are reflected back to us in our sociotechnical systems. The datasets on which facial recognition systems are trained exhibit racial, gender, and age bias; if local governments wish to implement these systems for crime control, that bias calls for full transparency to citizens before a vote is taken.
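One way to make the idea of differing outcomes between groups concrete is to compare false positive rates group by group. The following is a minimal sketch in Python, not a description of how any deployed system is evaluated. The function names, the group labels "A" and "B", and the toy records are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """Compute the false positive rate for each group.

    `records` is an iterable of (group, predicted_match, actual_match)
    tuples. The false positive rate is the share of true non-matches
    that the system nevertheless reported as matches.
    """
    false_positives = defaultdict(int)
    actual_negatives = defaultdict(int)
    for group, predicted_match, actual_match in records:
        if not actual_match:
            actual_negatives[group] += 1
            if predicted_match:
                false_positives[group] += 1
    # Groups with no true non-matches are skipped to avoid dividing by zero.
    return {
        group: false_positives[group] / count
        for group, count in actual_negatives.items()
        if count > 0
    }


def disparity_ratio(rates, group, reference_group):
    """How many times higher one group's rate is than the reference group's."""
    return rates[group] / rates[reference_group]


# Hypothetical toy data: 100 true non-matches per group, with 1 false
# match reported for group A and 5 for group B.
records = (
    [("A", True, False)] * 1 + [("A", False, False)] * 99
    + [("B", True, False)] * 5 + [("B", False, False)] * 95
)

rates = false_positive_rate_by_group(records)
print(rates)
print(round(disparity_ratio(rates, "B", "A"), 2))
```

In this invented example the system is wrong about group B five times as often as about group A, even though both groups were searched the same number of times. A per-group breakdown of this kind is one form the transparency described above could take.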

See Also

References

1. Project Green Light Detroit. City of Detroit. (n.d.). Retrieved January 25, 2023, from https://detroitmi.gov/departments/police-department/project-green-light-detroit

2. Campbell, Eric T.; Howell, Shea; House, Gloria; & Petty, Tawana (eds.). (2019, August). Special Issue: Detroiters want to be seen, not watched. Riverwise. The Riverwise Collective.

3. A critical summary of Detroit’s Project Green Light and its greater context. (n.d.). Retrieved January 26, 2023, from https://detroitcommunitytech.org/system/tdf/librarypdfs/DCTP_PGL_Report.pdf?file=1%26type=node%26id=77%26force=

4. WXYZ-TV Detroit | Channel 7. (2020). Future of Facial Recognition Technology. Retrieved January 25, 2023, from https://www.youtube.com/watch?v=h-3_cTPqKI4

5. Race, policing, and Detroit's Project Green Light. UM ESC. (2020, June 25). Retrieved January 25, 2023, from https://esc.umich.edu/project-green-light/

6. Project Green Light Detroit. City of Detroit. (n.d.). Retrieved January 25, 2023, from https://detroitmi.gov/departments/police-department/project-green-light-detroit

7. Detroit real-time crime center. Atlas of Surveillance. (n.d.). Retrieved January 27, 2023, from https://atlasofsurveillance.org/real-time-crime-centers/detroit-real-time-crime-center

8. City of Detroit. Detroit Police Commissioners Meeting 04 19 2018. DetroitMI.gov. [Online] April 19, 2018. [Cited: June 5, 2019.] http://video.detroitmi.gov/CablecastPublicSite/show/5963?channel=3

9. Buolamwini, J., & Gebru, T. (2018, January 21). Gender shades: Intersectional accuracy disparities in commercial gender classification. PMLR. Retrieved January 27, 2023, from http://proceedings.mlr.press/v81/buolamwini18a.html

10. Benjamin, R. (2020). Race after technology: Abolitionist tools for the New Jim Code. Polity.

11. Shapiro, Aaron. Reform predictive policing. Nature. January 25, 2017, Vol. 541, pp. 458-460.