Gender bias in Wikipedia

In the context of Wikipedia, gender bias refers to the disproportionately low rate of content contributions from women and non-binary people on what is a heavily male-dominated site. Wikipedia is an online encyclopedia that distributes collaboratively compiled knowledge, and it is intended to be straightforward and accessible to a wide digital audience. Any individual with internet access can edit Wikipedia with minimal training. The site offers a neutral setting for users to contribute personal expertise to an established knowledge base [1]. However, among other biases, stark gender bias can be seen in Wikipedia's content and in the culture of Wikipedia editing. This gender imbalance raises several ethical concerns regarding value sensitive design, online harassment, and algorithmic bias.

Evidence of the Gender Gap

Differences in Wikipedia Editors

A study performed in 2008 by Wikipedia found that 15% of Wikipedia's contributors from the United States are women, while 13% of contributors worldwide are women [2][3]. Although other studies put the proportion of editors identifying as female at 23%, moderators and contributors remain predominantly male. [4] This lack of female voices can be a result of the elimination of women's perspectives from Wikipedia's content. Wikipedia has also struggled to retain female editors and contributors because of other cultural complications: a survey conducted in 2008 found that women report feeling less confident about their expertise and knowledge, feel less comfortable editing others' work, and react more negatively to feedback than men [5]. These findings illustrate the cultural and attitudinal differences that women and men experience when they edit articles online. Although these data pertain only to people who have already edited Wikipedia articles, researchers suggest that a lack of self-confidence could also be a reason why there are fewer women editors [5]. Because so few women contribute, it can be intimidating for prospective female editors to start editing in the first place, creating a feedback loop. [6]

Content Imbalances

Wikipedia's content reflects the perspectives and interests of its editors. Female and male editors differ in their interests, preferences, and the topics on which they choose to spend their time. With a smaller percentage of Wikipedia contributions coming from women, there may be an imbalance in attention to, and in unbiased reporting on, certain topics related to minority perspectives. [7] Wikipedia has fewer biographies of notable women than of their male counterparts. In 2014, Wikimedia, Wikipedia's parent foundation, evaluated all biographies in the English Wikipedia and discovered that 15% of them were about notable women across all fields of study and professions. [8] Many initiatives were created to combat this imbalance by organizing the mass editing and addition of female biographies across varying fields. Many people use Wikipedia and perceive it as an unbiased representation of knowledge, yet there is an implicit bias in how Wikipedia and its editors perceive and prioritize these topics. [9]

Women-specific Characterization

Wikipedia pages of notable women are more likely to include women-specific characterization through language and through links to other Wikipedia pages. A study that used natural language processing to investigate gender bias in Wikipedia's language found that the words most associated with male profiles were mainly about sports, while the words most associated with female profiles were mainly about arts, gender, or family. Other common themes found on Wikipedia pages of notable women are references to a spouse, primarily a husband, and to being "the first woman" in her field.[10] Other subtle linguistic differences include highlighting positive aspects of male biographies while highlighting negative aspects of female biographies. One study found that while some differences in the treatment of men and women may be expected due to social and historical biases, there are statistically significant differences in Wikipedia attitudes that can be attributed to Wikipedia editors. [11]

The patterns found by this analysis highlight the prevalence of stereotyping in Wikipedia. The pages of women are often characterized by one of two extremes: being the exception to the norm, or existing within predefined roles. This dichotomy leaves a gap: knowledge about successful women is confined to one of two narratives rather than presented robustly.[10]

Ethical Implications

Foundational Issues and Value Sensitive Design

Wikipedia was founded to reflect a culture that encourages honest, diplomatic thought and neutral points of view. [6] The foundational structure of Wikipedia allows any page to be edited with little policing, but a select group of editors closely observes popular, well-visited pages. Wikipedia was meant to reflect the neutrality of an encyclopedia; encyclopedias were originally developed to create a collective knowledge base. [6] The internet emerged at a time when the intersection of male-dominated areas (government, military, academia, and engineering) was at the center of culture.[4]

The combination of these factors shaped which human values Wikipedia's design incorporated. Value sensitive design is advocated in technological design processes because it strives to incorporate human values[12], and Wikipedia's design can be scrutinized for the nature of its inherent values. A value can refer to what a group of people find important in life.[12] Given that Wikipedia was established by men, the design integrated more masculine and male-oriented values due to its foundation in encyclopedias and male infrastructure. These values can conflict with those of users and editors whom the designers did not anticipate, creating ethical problems as to whose voices and opinions should be heard.

Algorithmic and User Bias

Wikipedia's governance is decentralized, which gives it flexibility, allows tasks to be delegated, and leaves room for growth.[13] This structure permits any user to edit Wikipedia pages and to form sub-groups of contributors that operate under their own guidelines. Contributors on Wikipedia apply their own knowledge, judgments, and prejudices when editing articles[14], and since the majority of Wikipedia editors are male, bias against women is prevalent.

This bias against women can take the form of transparent biases, such as blatant online harassment, or of opaque biases. [15] Other technical biases can be found in Wikipedia's design. One survey found that many potential women editors felt that Wikipedia's interface was complicated or that they lacked the time to experiment and learn how to use the site. [4]

This user bias is ingrained within Wikipedia's self-policing design, which encourages anyone to access, edit, or add information.[4] In Wikimedia's 2011 survey of women editors and contributors, many participants reported feeling less confident in their expertise and less comfortable editing others' work due to fear of conflict or disproportionately negative feedback.[2] Wikipedia's governance depends on emergent, community-generated social norms created by long-time editors on the site. [15] These norms have helped shape Wikipedia's culture through collective action and can be more powerful than explicit rules, but they can also reflect biases against specific groups of contributors, which causes a decline in participation from those groups.[13] While these biases may be unintentional, Wikipedia's structure and governance raise ethical concerns as to what can be done to improve the gender balance and prevent harm.

Harassment Online

Many women online have faced some form of aggression or harassment. Wikipedia's culture encourages active discussion between collaborators, though this approach to constructive criticism may cross into more harmful behavior in some cases. Wikimedia’s 2011 survey of female editors found that more than half of the sampled editors described getting into an argument with other editors on discussion pages, with around 12% of editors reporting inappropriate comments directed at or made about them.[4] In addition, women are more likely to be penalized or to face backlash when expressing anger, and more likely to be harshly judged for mistakes. [2] In a space where online altercations are commonplace and even encouraged, many women may recognize the bias they face due to cultural stereotypes surrounding critical discussion. Overall, many women find Wikipedia's culture to be too combative, sexualized, and misogynistic.[4]

Responsive Measures

To close the gender gap, Wikipedia would need a broader population of editors to broaden its topics and knowledge. [8] To attract more women contributors and editors, Wikipedia would need to make a deliberate effort.

Internally, Wikipedia's current self-policing system could be altered to maintain a space for women editors. This would require implementing a fuller security system that gauges a contributor's expertise, monitors for harassment or vandalism, and encourages training or education in multiple perspectives on knowledge sharing. [2]

The gender bias found in Wikipedia reflects the biases found in the secondary sources that Wikipedia relies on.[10] It is therefore important that other fields also recognize the gender biases they may face, so that the body of research Wikipedia editors draw from becomes more balanced.

WikiProject Women in Red

On Wikipedia, red links indicate that a page has not yet been created for that person or topic, and blue links indicate existing pages. In an effort to improve the gender balance on Wikipedia, the WikiProject Women in Red began its initiative to create pages for women whose names appear as red links (hence the name Women in Red).[16] This group began in 2015 and was co-founded by Rosie Stephenson-Goodknight.[16] From November 2014 to May 2017, the project facilitated the creation of more than 45,000 new pages about women.[17] The WikiProject publicizes its objective through social media platforms and by hosting edit-a-thons. It has partnered with WikiProject Women Scientists and the Wiki Education Foundation.[16] By September 2018, the share of biographies about women had increased to 17.79% from an original approximation of 15% in 2014.[17] This group demonstrates that with time and effort, improvement in the gender balance on Wikipedia can be made.

WikiProject Women Scientists

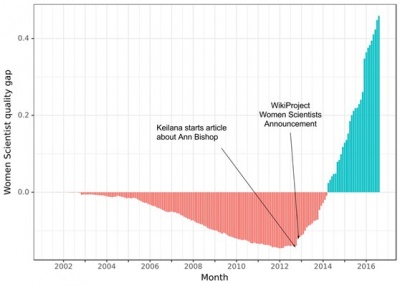

The WikiProject Women Scientists group was formed in 2012 and was co-founded by Emily Temple-Wood.[17] Its main goal is to improve the quality and quantity of Wikipedia pages on women in science. Temple-Wood edits under the alias "Keilana"[16] and has played a major role in the project's success. She has written more than 100 pages on women in science, and the project has attracted over 80 members.[16] The quality of pages on women in science has improved so much from this initiative that researchers have dubbed the phenomenon the "Keilana Effect".[17] Pages about women in science were observed between 2002 and 2013, before the project established itself, and their quality was found to be worse than that of the average page. After the initiative began, many of the pages became more than 40% better in quality than the average page, which is an immense jump from the project's starting point.[17]

More Efforts from the Wikimedia Foundation

Other External Efforts

- Wikid GRRLS: This project teaches online and research skills and encourages teenage girls in the Detroit area to participate in online discussion.

References

- [1] Zittrain, Jonathan. 2008. “The Lessons of Wikipedia,” in The Future of the Internet and How to Stop It. New Haven: Yale University Press, Chapter 6, pp. 127-48.

- [2] Nicole Torres “Why Do So Few Women Edit Wikipedia?” (Harvard Business Review, Gender, Jun 02 2016)

- [3] Noam Cohen “Define Gender Gap? Look Up Wikipedia’s Contributor List” (New York Times, Media, Jan 30, 2011)

- [4] Aysha Khan “The slow and steady battle to close Wikipedia’s dangerous gender gap” (Think Progress, Dec 15, 2016)

- [5] Torres, Nicole. “Why Do So Few Women Edit Wikipedia?” Harvard Business Review, 2 June 2016, hbr.org/2016/06/why-do-so-few-women-edit-wikipedia.

- [6] Emma Paling “Wikipedia’s Hostility to Women” (The Atlantic, Technology, Oct 21 2015)

- [7] Julia Bear, Benjamin Collier "Where are the Women in Wikipedia? Understanding the Different Psychological Experiences of Men and Women in Wikipedia" (Sex Roles: A Journal of Research, (2016) 74: 254)

- [8] Katherine Maher "Wikipedia is a mirror of the world’s gender biases" (Wikimedia Foundation, News, Oct 18, 2018)

- [9] Joseph Reagle, Lauren Rhue "Gender Bias in Wikipedia and Britannica" (International Journal of Communication, 5 (2011), 1138–1158)

- [10] Eduardo Graells-Garrido, et al. "First Women, Second Sex: Gender Bias in Wikipedia" (Cornell University, Computer Science, Social and Information Networks, 2015)

- [11] Claudia Wagner, Eduardo Graells-Garrido, David Garcia and Filippo Menczer "Women through the glass ceiling: gender asymmetries in Wikipedia" (EPJ Data Science, 2016, 5:5)

- [12] Friedman, B., "Value Sensitive Design and Information Systems", The Handbook of Information and Computer Ethics, 2008

- [13] Andrea Forte, Vanesa Larco & Amy Bruckman "Decentralization in Wikipedia Governance" (Journal of Management Information Systems, 26:1, 49-72, 2009)

- [14] Monica Hesse “History has a massive gender bias. We’ll settle for fixing Wikipedia.” (The Washington Post, Perspective, Feb 17 2019)

- [15] Philip Brey "Values in technology and disclosive computer ethics" (2010)

- [16] Vitulli, M., "Writing Women in Mathematics into Wikipedia", Notices of the AMS, March 2018

- [17] White, A., "The history of women in engineering on Wikipedia", Autumn 2018
