Discrimination in Algorithms

Discrimination in algorithms describes outcomes from computer systems “which are systematically less favorable to individuals within a particular group and where there is no relevant difference between groups that justifies such harms” [1]. Algorithms, which are instructions computers follow to accomplish some goal, are commonplace in the 21st century and can produce biased outcomes [1]. There are multiple causes of bias in algorithms, which can be classified into three groups: data creation bias, data analysis bias, and data evaluation bias. As a result of these biases, algorithms can create or perpetuate racism, sexism, and classism. To prevent these biases from a technical standpoint, it is important to monitor and analyze algorithms during data creation, data analysis, and data evaluation. More generally, increasing diversity and inclusion in companies can help mitigate discrimination from multiple angles.

Causes of Discrimination

Data Creation Bias

Sampling Bias

Sampling bias is a type of bias that occurs when a dataset is created by choosing certain types of instances over others [2]. This makes the dataset unrepresentative of the population. For example, a facial recognition algorithm that is fed more photos of light-skinned people than dark-skinned people will have a harder time recognizing dark-skinned people.
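One simple check for sampling bias is to compare each group's share of the dataset with its share of the population the model is meant to serve. The sketch below assumes the population shares are known in advance; the function name, group labels, and counts are hypothetical.

```python
from collections import Counter

def representation_ratios(sample_groups, population_shares):
    """Return (share in sample) / (share in population) for each group.

    A ratio well below 1.0 means the group is under-represented in the sample.
    """
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {
        group: (counts.get(group, 0) / total) / share
        for group, share in population_shares.items()
    }

# Hypothetical face dataset: 80 light-skinned and 20 dark-skinned photos,
# compared with an assumed 50/50 target population.
sample = ["light"] * 80 + ["dark"] * 20
ratios = representation_ratios(sample, {"light": 0.5, "dark": 0.5})
print(ratios)  # {'light': 1.6, 'dark': 0.4}
```

A ratio of 0.4 says dark-skinned faces appear at less than half the rate they would in a representative sample, which is the kind of imbalance described above.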

Measurement Bias

Measurement bias is a type of bias that occurs as a result of human error. It can occur when “proxies are used instead of true values” when creating datasets [2]. An example of this type of bias is a recidivism risk prediction tool that used prior arrests and the arrests of friends or family as proxy variables for riskiness or crime [3]. Minority communities are policed more heavily, so they have higher arrest rates. The tool would therefore conclude that minority communities are riskier, without taking into account the difference in how those communities are policed.
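The arithmetic behind this proxy problem can be sketched with hypothetical numbers: if two communities offend at the same true rate but one is policed twice as intensively, arrest rates will make it look twice as risky. The rates and the simple "arrest = offense × detection" model are assumptions for illustration only.

```python
def expected_arrest_rate(offense_rate, detection_rate):
    """Expected share of people arrested: only detected offenses become arrests."""
    return offense_rate * detection_rate

# Hypothetical rates: identical true behavior, unequal policing intensity.
true_offense_rate = 0.10
detection = {"heavily_policed": 0.6, "lightly_policed": 0.3}

arrest_rates = {
    community: expected_arrest_rate(true_offense_rate, d)
    for community, d in detection.items()
}

# Using arrests as a proxy for crime makes the heavily policed community look
# about twice as "risky", even though the true offense rates are equal.
ratio = arrest_rates["heavily_policed"] / arrest_rates["lightly_policed"]
print(arrest_rates, ratio)
```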

Label Bias

Label bias is a type of bias that occurs as a result of inconsistencies in the labeling process [2]. For example, one scientist might label an object a “stick” while another labels the same object a “twig”, rather than both using the same label. Label bias can also occur when scientists assign a label based on their subjective beliefs rather than an objective assessment.
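Inconsistent labeling can be measured before training. One common statistic, not named in the sources above, is Cohen's kappa, which compares how often two annotators agree with how often they would agree by chance. A minimal sketch with hypothetical labels, including a hypothetical synonym map that merges "twig" into "stick":

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Agreement expected if each annotator labeled at random
    # using their own label frequencies.
    categories = freq_a.keys() | freq_b.keys()
    expected = sum(freq_a[c] * freq_b[c] for c in categories) / n**2
    if expected == 1:
        return 1.0
    return (observed - expected) / (1 - expected)

# Hypothetical: annotator B writes "twig" where annotator A writes "stick".
a = ["stick", "stick", "leaf", "leaf", "stick", "leaf"]
b = ["twig", "twig", "leaf", "leaf", "stick", "leaf"]
print(round(cohens_kappa(a, b), 3))  # 0.5

canonical = {"twig": "stick"}  # hypothetical synonym map
b_fixed = [canonical.get(label, label) for label in b]
print(cohens_kappa(a, b_fixed))  # 1.0
```

A kappa well below 1.0 flags that annotators are not using labels consistently; agreeing on a shared vocabulary before labeling removes this particular source of disagreement.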

Omitted Variable Bias

Omitted variable bias is a type of bias that occurs when one or more important variables are left out of a dataset [4]. For example, consider a model designed to predict the percentage of customers who will stop subscribing to a service. Many users then cancel their subscriptions without any warning from the model, because a new competitor in the market offers the same product for half the price. The model was not prepared for the competitor's arrival, so the competitor is considered an omitted variable [3].
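In regression terms, omitted variable bias means that the coefficient on an included variable absorbs part of the effect of a correlated variable that was left out. The sketch below uses hypothetical, noise-free data generated by y = 2·x1 + 3·x2, where x2 is correlated with x1. Regressing y on x1 alone gives a slope of 4.7 instead of the true 2.

```python
from statistics import mean

def ols_slope(x, y):
    """Slope of the least-squares line y = a + b*x."""
    mx, my = mean(x), mean(y)
    cov = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    var = sum((xi - mx) ** 2 for xi in x)
    return cov / var

# Hypothetical data: x2 moves together with x1.
x1 = [0, 1, 2, 3, 4]
x2 = [0, 2, 1, 3, 4]
y = [2 * a + 3 * b for a, b in zip(x1, x2)]  # true effect of x1 is 2

biased = ols_slope(x1, y)  # x2 is omitted from the model
print(biased)  # 4.7
```

The extra 2.7 equals the omitted coefficient (3) times the slope of x2 on x1 (0.9), so the model credits x1 with an effect that really belongs to x2.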

Historical Bias

Historical bias is a type of bias that occurs when already existing bias in the world seeps into the dataset even when it is perfectly sampled and selected [3]. An example of this type of bias can be found when doing an image search for women CEOs. The search results show fewer women CEO images “due to the fact that only 5% of Fortune 500 CEOs are woman," so the search results are more biased toward male CEOs [5]. The image search results were reflecting reality, but whether algorithms should reflect this reality is a debated issue.

Historical bias can occur when people from privileged groups create most of the datasets and algorithms [6]. The resulting datasets may be biased, or relevant data may never be collected at all. Because the demographics of data science are not representative of the population as a whole (“Google’s Board of Directors is 82% white men” and “Facebook’s Board is 78% male and 89% white”), a small group of people is building algorithms for the whole world, which creates risk [6].

Data Analysis Bias

Sample Selection Bias

Sample selection bias is a type of bias that occurs when the data selected for analysis is not representative of the population being analyzed. For example, consider a study of the effect of motherhood on wages. If the study is restricted to women who are already employed, the measured effect will be biased as a result of “conditioning on employed women” [2].
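A toy illustration shows how conditioning on employment can hide a real effect. The wages (in thousands) and the rule that a mother works only if her wage offer reaches 35 are hypothetical assumptions; non-mothers are assumed to be all employed.

```python
from statistics import mean

# Hypothetical annual wages in thousands.
non_mothers = [30, 40, 50, 60]          # all employed
mothers_potential = [25, 35, 45, 55]    # each offered 5 less: a true penalty of 5

def employed(wage, reservation_wage=35):
    """Assumed participation rule: a mother works only if the offer reaches 35."""
    return wage >= reservation_wage

true_gap = mean(mothers_potential) - mean(non_mothers)

# Restricting the study to employed women drops low-wage mothers.
observed_mothers = [w for w in mothers_potential if employed(w)]
observed_gap = mean(observed_mothers) - mean(non_mothers)

print(true_gap, observed_gap)  # -5 0
```

The mothers with the lowest wage offers drop out of the sample, so the employed mothers who remain look as well paid as non-mothers and the penalty disappears from the measurement.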

Confounding Bias

Confounding bias is a type of bias that occurs when an algorithm learns the wrong relations, or misses relevant ones, because it does not consider all the relevant information [2]. For example, a graduate school may state that admissions are based solely on grade point average (GPA). However, other factors, such as the ability to afford tutoring, may influence GPA and consequently admission rates, and those factors may themselves depend on factors such as race. As a result, “spurious relations between inputs and outputs are introduced,” which can lead to bias [2].
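The following sketch illustrates the mechanism with hypothetical numbers. The admissions rule looks only at GPA, but GPA depends on access to tutoring, which in this toy population differs by group. The GPA model, the 3.5 cutoff, and the group sizes are all assumptions.

```python
def gpa(tutored, base=3.2, tutoring_boost=0.5):
    """Hypothetical GPA model: same base ability, plus a boost if tutored."""
    return base + (tutoring_boost if tutored else 0.0)

def admit(gpa_value, cutoff=3.5):
    """The stated policy: admit on GPA alone."""
    return gpa_value >= cutoff

# (group, tutored) pairs: group A has more access to tutoring than group B.
applicants = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 3 + [("B", False)] * 7
)

def admission_rate(group):
    members = [tutored for g, tutored in applicants if g == group]
    return sum(admit(gpa(t)) for t in members) / len(members)

print(admission_rate("A"), admission_rate("B"))  # 0.8 0.3
```

Although group membership never enters the admissions rule, the admission rates differ by group exactly as tutoring access does, because tutoring drives GPA.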

Design-Related Bias

Design-related bias is a type of bias that occurs as a result of limitations or constraints put on algorithms such as computational power [3]. This type of bias includes presentation and ranking bias.

Presentation bias is a type of bias that occurs as a result of how information is presented. For example, users can only click on content that they see on the Web, so the content they see gets clicks and everything else gets none. The clicked content may then be treated as what users prefer, when users may simply not have seen the rest of the information [3].

Ranking bias is a type of bias that occurs because of the order in which results are ranked [3]. The highest-ranked results attract more clicks and are therefore seen as more relevant. This bias affects search engines [7].
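Presentation and ranking bias are often modeled with a simple "examination" assumption (not taken from the sources above): a result is clicked only if the user both looks at it and finds it relevant, and the chance of being looked at falls with rank. The examination probabilities below are hypothetical. The sketch shows two equally relevant results getting different click-through rates purely because of position, and how dividing by the assumed examination probability (inverse propensity weighting) recovers the same relevance for both.

```python
# Hypothetical probability that a user looks at each rank position.
EXAMINE_PROB = {1: 0.9, 2: 0.6, 3: 0.3}

def expected_ctr(relevance, rank):
    """Click-through rate under the examination model: P(seen) * P(relevant)."""
    return EXAMINE_PROB[rank] * relevance

def debiased_relevance(observed_ctr, rank):
    """Inverse propensity weighting: undo the effect of position."""
    return observed_ctr / EXAMINE_PROB[rank]

# Two results that are equally relevant but shown at different ranks.
ctr_top = expected_ctr(0.5, rank=1)     # 0.45
ctr_third = expected_ctr(0.5, rank=3)   # 0.15
print(ctr_top, ctr_third)
print(debiased_relevance(ctr_top, 1), debiased_relevance(ctr_third, 3))
```

In practice the examination probabilities are not known and must themselves be estimated, so this correction is only as good as that estimate.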

Aggregation Bias

Aggregation bias is a type of bias that occurs when conclusions drawn from a population are wrongly applied to individuals [3]. An example of this type of bias is seen in clinical aid tools. HbA1c levels, which are widely used to diagnose and monitor diabetes, differ across genders and ethnicities [8]. An algorithm that ignores these differences would not be accurate for all ethnic and gender groups, even if those groups were represented equally in the training data. Assuming that an individual's HbA1c level follows that of the diabetic population as a whole would therefore result in aggregation bias, because HbA1c levels differ across the ethnic and gender subgroups within that population.

Simpson’s paradox is a type of aggregation bias that occurs during the analysis of heterogeneous data [9]. The paradox arises when “an association observed in aggregated data disappears or reverses when the same data is disaggregated into its underlying subgroups” [9]. An example of this paradox occurred during a gender bias lawsuit against UC Berkeley [10]. The aggregate admissions data seemed to show a bias against women. When the data was separated and analyzed by department, however, female applicants had the same, and sometimes even higher, admission rates. The paradox arose because women tended to apply to departments with lower admission rates for both genders.
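The reversal can be reproduced with a small hypothetical dataset (these are not the Berkeley figures). Women have the higher admission rate in each department, but because most women apply to the department that rejects most applicants, their overall rate is lower.

```python
# Hypothetical admissions data: department -> group -> (applicants, admitted).
data = {
    "X": {"men": (80, 60), "women": (20, 16)},  # high admission rate
    "Y": {"men": (20, 4), "women": (80, 20)},   # low admission rate
}

def rate(applied, admitted):
    return admitted / applied

# Disaggregated: compare groups within each department.
for dept, groups in data.items():
    print(dept, {g: rate(*counts) for g, counts in groups.items()})

def aggregate(group):
    """Pool all departments before computing the admission rate."""
    applied = sum(d[group][0] for d in data.values())
    admitted = sum(d[group][1] for d in data.values())
    return rate(applied, admitted)

print("overall", {"men": aggregate("men"), "women": aggregate("women")})
```

Within departments women are admitted at 80% and 25% versus 75% and 20% for men, yet overall women are admitted at 36% versus 64%.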

Data Evaluation Bias

Sample Treatment Bias

Sample treatment bias is a type of bias that occurs when the test sets used to evaluate an algorithm are biased [2]. An example is showing an advertisement only to a specific group of viewers, such as those who speak a certain language [2]. The observed results would not be representative of the population as a whole, since the algorithm was tested only on that specific group of people.
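A test set drawn from one group can make a model look better or worse than it is for everyone else. Reporting metrics per group, as in this sketch with hypothetical evaluation results, exposes the gap.

```python
# Hypothetical evaluation records: (viewer_language, prediction_was_correct).
results = (
    [("en", True)] * 8 + [("en", False)] * 2
    + [("es", True)] * 4 + [("es", False)] * 6
)

def accuracy(rows):
    return sum(correct for _, correct in rows) / len(rows)

english_only = [r for r in results if r[0] == "en"]
print("English-only test set:", accuracy(english_only))  # 0.8
print("All viewers:", accuracy(results))                   # 0.6

# Per-group breakdown shows where the model actually underperforms.
for lang in sorted({lang for lang, _ in results}):
    print(lang, accuracy([r for r in results if r[0] == lang]))
```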

Impact

Racism

In 2018, facial-analysis software could not detect Joy Buolamwini’s face. Buolamwini, a dark-skinned Black woman, was a graduate student at MIT working on a class project that used the software [6]. The software could detect the faces of her light-skinned coworkers but not hers. When Buolamwini put on a white mask, the software was able to detect the mask’s facial features. Buolamwini learned that the software had been tested on a dataset that contained “78 percent male faces and 84 percent white faces” [6]. Because the algorithm was trained on biased data, darker-skinned women were “forty-four times more likely to be misclassified than lighter-skinned males” [6].

Most hospitals use predictive algorithms to decide how to allocate resources. An algorithm “designed to predict the cost of care as a proxy for health needs” consistently gave Black and white patients the same risk score even when the Black patient was far sicker than the white patient [11]. This was because providers spent much less on Black patients’ care overall than on white patients’ care [11]. The algorithm was trained on historical data, and that history included “segregated hospital facilities, racist medical curricula, and unequal insurance structures, among other factors” [11]. As a result, the algorithm consistently rated Black patients as costing less because history had valued Black patients less.

In 2014, Latanya Sweeney found that online searches for African-American names were more likely than searches for white names to return ads related to arrest records [1]. She also found that African Americans were targeted with higher-interest credit cards and other financial products even when they had backgrounds similar to those of their white counterparts [12]. A website marketing the “centennial celebration of an all-black fraternity” consistently received ads for arrest records or for high-interest credit cards [12].

The Correctional Offender Management Profiling for Alternative Sanctions (COMPAS) algorithm is used to determine whether defendants should be detained or released on bail [1]. The algorithm was found to be biased against Black defendants. It produced a risk score, based on “arrest records, defendant demographics, and other variables,” that estimated the likelihood of committing a future crime or offense [1]. Black defendants received higher risk scores than white defendants who were equally likely to reoffend, which resulted in longer periods of detention while awaiting trial [1].

Amazon used an algorithm to determine which neighborhoods to exclude from same-day Prime delivery [13]. The data included in the algorithm was “whether a particular zip code had a sufficient number of Prime members, was near a warehouse, and had sufficient people willing to deliver to that zip code” [13]. While none of these data points is exclusionary on its own, the result was the “exclusion of poor, predominantly African-American neighborhoods, transforming these data points into proxies for racial classification” [1].

Sexism

Princeton University researchers used machine learning software to analyze words [1]. They found that the words “women” and “girl” were more likely to be “associated with the arts instead of science and math, which were most likely connected to males” [1]. The algorithm had picked up human gender biases and incorporated them into what it learned. If these learned associations were used as part of a search-engine ranking algorithm, they could perpetuate and reinforce existing gender biases [1].

In 2012, Target used a pregnancy detection model to send coupons to women who were possibly pregnant in order to increase its profits [6]. A father was outraged that his teenage daughter was receiving pregnancy coupons, but he later learned that she was pregnant. As a result of Target’s algorithm, the teenager had lost “control over information related to her own body and her health” [6].

In 2018, Amazon was creating an algorithm to screen job applicants. The resumes the algorithm was trained on came primarily from men. Because of this, the algorithm developed an even stronger preference for male applicants and “downgraded resumes with the word women and graduates of women’s colleges” [6]. The lack of representation in the dataset led to a biased algorithm.

Classism

An algorithm was developed to predict the risk of child abuse in any home, with the goal of removing children from abusive homes before the abuse even started [6]. Unlike poorer parents, wealthier parents have access to private health care and expensive mental health services, so less data is collected about them. Poorer parents have to rely on public resources, which makes it easy to collect data about them. The data collected for the algorithm included “records from child welfare services, drug and alcohol treatment programs, mental health services, Medicaid histories, and more” [6]. Because more data was available on poor parents, they were oversampled, and their children were more likely to be flagged as at risk of child abuse [6]. As a result, more poor parents had their children taken away from them.

In the UK, an algorithm was used to determine students' grades after the A-level exams were cancelled because of COVID-19 [15]. The problem was that the algorithm attached significant weight to the “previous averaged results of the school the student was attending” [15]. As a result, nearly all of the grade increases were “absorbed by private schools, which can charge upwards of £40,000 a year” [15].

Solutions

Technical

Solutions to Data Creation Bias

Before an algorithm is created, steps can be taken to moderate the data going into it. For example, if the data lacks representation, researchers can use machine learning or artificial intelligence techniques to re-weight the data [16]. This prevents underrepresented populations from having a smaller effect on the algorithm’s decisions and can help reveal labeling biases [16].
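One common way to re-weight data, assumed here since the source does not specify a method, is inverse-frequency weighting: each example gets a weight inversely proportional to the size of its group, so that every group contributes the same total weight to training. The group labels and the 80/20 split are hypothetical.

```python
from collections import Counter

def group_balancing_weights(groups):
    """Per-example weights so that every group contributes equal total weight.

    weight = n_examples / (n_groups * n_examples_in_group)
    """
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[g]) for g in groups]

groups = ["majority"] * 8 + ["minority"] * 2  # hypothetical 80/20 split
weights = group_balancing_weights(groups)

totals = Counter()
for g, w in zip(groups, weights):
    totals[g] += w

print(weights[0], weights[-1])  # 0.625 2.5
print(dict(totals))             # {'majority': 5.0, 'minority': 5.0}
```

Many scikit-learn estimators, such as `LogisticRegression`, accept such per-example weights through the `sample_weight` argument of `fit`.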

Solutions to Data Analysis Bias

To counteract bias during data analysis, companies should “incorporate fairness into the machine learning training task itself” by adding a penalty for undesired biases [16]. For example, an “in-processing mitigation strategy” could require that women be accepted at the same rate as men, or that Black men be accepted at the same rate as white men [16].
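A minimal sketch of such a penalty, assuming a model that outputs acceptance probabilities: the training objective adds λ times the squared gap in average predicted acceptance between two groups (a demographic-parity penalty). The choice of gap, the weight λ (`lam`), and the data are assumptions for illustration, not prescriptions from [16]. A training loop would minimize this objective instead of the plain loss.

```python
import math

def log_loss(y_true, p_pred, eps=1e-12):
    """Average binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)

def parity_gap(p_pred, groups, group_a, group_b):
    """Average predicted acceptance of group_a minus that of group_b."""
    def avg(g):
        vals = [p for p, grp in zip(p_pred, groups) if grp == g]
        return sum(vals) / len(vals)
    return avg(group_a) - avg(group_b)

def fair_objective(y_true, p_pred, groups, group_a, group_b, lam=1.0):
    """Accuracy term plus a penalty that grows as acceptance rates diverge."""
    gap = parity_gap(p_pred, groups, group_a, group_b)
    return log_loss(y_true, p_pred) + lam * gap ** 2

# Hypothetical labels, group memberships, and two candidate sets of predictions.
y = [1, 0, 1, 0]
groups = ["women", "women", "men", "men"]
balanced = [0.8, 0.2, 0.8, 0.2]  # same average acceptance for both groups
skewed = [0.6, 0.1, 0.9, 0.4]    # men accepted more on average

for name, p in [("balanced", balanced), ("skewed", skewed)]:
    print(name, parity_gap(p, groups, "women", "men"),
          fair_objective(y, p, groups, "women", "men", lam=5.0))
```

Setting `lam=0` recovers the ordinary loss; larger values trade some accuracy for more equal acceptance rates.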

Solutions to Data Evaluation Bias

To counteract biases during data evaluation, it is recommended to use post-processing algorithms. Post-processing algorithms “focus on reducing bias by working on the model output predictions” [16]. While this sounds simple, it can be challenging to maintain accuracy while reducing bias through this approach [16].
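One post-processing technique, assumed here for illustration since the source does not name one, leaves the trained model untouched and chooses a separate decision threshold for each group so that both groups are selected at the same rate. The scores and the 40% target rate are hypothetical.

```python
def threshold_for_rate(scores, rate):
    """Threshold that selects (about) the top `rate` fraction of scores; ties may select more."""
    ranked = sorted(scores, reverse=True)
    k = max(1, round(rate * len(ranked)))
    return ranked[k - 1]

def selection_rate(scores, threshold):
    return sum(s >= threshold for s in scores) / len(scores)

# Hypothetical model scores; group B's scores are shifted down.
scores = {
    "A": [0.9, 0.8, 0.75, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1],
    "B": [0.7, 0.6, 0.55, 0.5, 0.4, 0.3, 0.2, 0.15, 0.1, 0.05],
}

# A single shared threshold selects the two groups at very different rates.
print({g: selection_rate(s, 0.6) for g, s in scores.items()})  # {'A': 0.5, 'B': 0.2}

# Group-specific thresholds equalize the selection rate at 40%.
thresholds = {g: threshold_for_rate(s, 0.4) for g, s in scores.items()}
print(thresholds)  # {'A': 0.7, 'B': 0.5}
print({g: selection_rate(s, thresholds[g]) for g, s in scores.items()})  # {'A': 0.4, 'B': 0.4}
```

Because the model's scores are unchanged, any accuracy the scores carried is partly overridden by the new thresholds, which is where the trade-off between accuracy and bias reduction comes from.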

Diversity and Inclusion

The tech industry is dominated by white men, which allows biases to seep into algorithms. If those men were “specifically trained in structural oppression before building their data systems,” that could counteract the biases [6]. Additionally, incorporating diversity and inclusion “into the algorithm’s design can potentially vet the cultural inclusivity and sensitivity of the algorithms for various groups” [1]. Having a diverse group of people working with machines can help mitigate bias throughout the process [17]. If an algorithm shows signs of bias, it should be discontinued and reviewed to prevent further harm.

References

- [1] Lee, Nicol Turner, et al. “Algorithmic Bias Detection and Mitigation: Best Practices and Policies to Reduce Consumer Harms.” Brookings, 9 Mar. 2022, https://www.brookings.edu/research/algorithmic-bias-detection-and-mitigation-best-practices-and-policies-to-reduce-consumer-harms/.

- [2] Srinivasan, Ramya, et al. “Biases in AI Systems.” Communications of the ACM, 1 Aug. 2021, https://dl.acm.org/doi/10.1145/3464903.

- [3] Mehrabi, Ninareh, et al. “A Survey on Bias and Fairness in Machine Learning.” USC, 25 Jan. 2022, https://arxiv.org/abs/1908.09635.

- [4] Clarke, Kevin A. “The Phantom Menace: Omitted Variable Bias in Econometric Research.” Conflict Management and Peace Science, 2005.

- [5] Suresh, Harini, and John V. Guttag. “A Framework for Understanding Unintended Consequences of Machine Learning.” 2019.

- [6] D'Ignazio, Catherine, and Lauren F. Klein. Data Feminism. MIT Press, 2020.

- [7] Baeza-Yates, Ricardo. “Bias on the Web.” Communications of the ACM, vol. 61, May 2018.

- [8] Herman, William H. “Do Race and Ethnicity Impact Hemoglobin A1C Independent of Glycemia?” Journal of Diabetes Science and Technology, U.S. National Library of Medicine, 1 July 2009, https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2769981/.

- [9] Blyth, Colin R. “On Simpson’s Paradox and the Sure-Thing Principle.” Journal of the American Statistical Association, 1972.

- [10] Bickel, Peter J., et al. “Sex Bias in Graduate Admissions: Data from Berkeley.” Science, 1975.

- [11] Benjamin, Ruha. “Assessing Risk, Automating Racism.” Science, Oct. 2019, https://www.science.org/doi/full/10.1126/science.aaz3873.

- [12] Sweeney, Latanya, and Jinyan Zang. “How Appropriate Might Big Data Analytics Decisions Be When Placing Ads?” Federal Trade Commission Conference, Washington, DC, 15 Sept. 2014, https://www.ftc.gov/systems/files/documents/public_events/313371/bigdata-slides-sweeneyzang-9_15_14.pdf.

- [13] Lee, Nicol Turner. “Inclusion in Tech: How Diversity Benefits All Americans.” Subcommittee on Consumer Protection and Commerce, United States House Committee on Energy and Commerce, 2019, https://www.brookings.edu/testimonies/inclusion-in-tech-how-diversity-benefits-all-americans/.

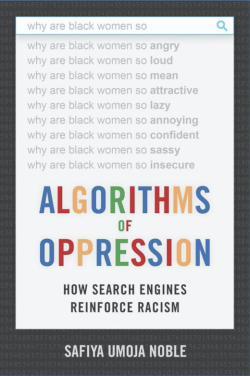

- [14] Noble, Safiya Umoja. Algorithms of Oppression: How Search Engines Reinforce Racism. New York University Press, 2018.

- [15] “The Ethics of Algorithms: Classism.” HECAT, 2020, https://hecat.eu/2020/08/24/the-ethics-of-algorithms-classism/.

- [16] Kelly, Sonja, and Mehrdad Mirpourian. “Algorithmic Bias, Financial Inclusion, and Gender.” Women's World Banking, Feb. 2021, http://www.womensworldbanking.org/wp-content/uploads/2021/02/2021_Algorithmic_Bias_Report.pdf.

- [17] Manyika, James, et al. “What Do We Do About the Biases in AI?” Harvard Business Review, 17 Nov. 2022, https://hbr.org/2019/10/what-do-we-do-about-the-biases-in-ai.