Content Moderation in Facebook

Content moderation is the process of screening content that users post online against a set of rules or guidelines to decide whether it is appropriate [2]. Facebook is a social media platform that allows people to connect with friends, family and communities of people who share common interests [3]. As with many other popular social media platforms, Facebook has developed an approach to moderate and control the type of content users see and engage with [4]. That approach has two main parts: AI moderators and human moderators. Both apply Facebook's community standards, which lay out the rules each post is expected to follow [5]. Although Facebook's moderation tactics have had success in reducing harmful content on its platform, the media mainly focuses on the instances where they have failed [6]. The ethics of Facebook’s content moderation approach have also been widely controversial, from the mental health struggles human moderators are forced to deal with [7] to questions about how the AI is trained to flag inappropriate content [8].

Overview/Background

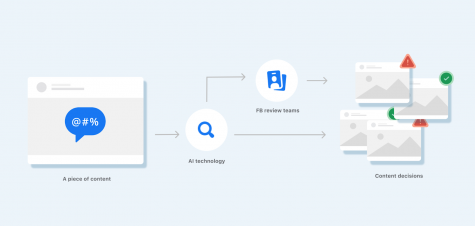

When it comes to content moderation, Facebook utilizes both AI moderators and human moderators. Posts that violate the community standards are deemed inappropriate; violations range from spam to hate speech to violent content [5]. Content that clearly violates the community standards is caught right away by the AI moderators. The human moderators are responsible for posts that the AI is not sure about, and their decisions are vital to improving the machine learning model the AI technology uses [9].

AI Moderators

Facebook filters all posts initially through its AI technology. Facebook’s AI technology starts with building machine learning (ML) models that have the ability to analyze the content of a post or recognize different items in a photo. These models are used to determine whether what the post contains fits within the community standards or if there is a need to take action on the post, such as removing it [5].

Sometimes the AI technology is unsure whether content violates the community standards, so it sends the content to the human review teams. Once the review teams make a decision, the AI technology learns from that decision; this is how the technology is trained and improves over time. Facebook’s community standards also change often to keep up with changes in social norms, language, and Facebook's products and services, which requires the content review process to keep evolving [5].
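The hand-off between the AI and the human review teams can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the threshold values, the `TriageSystem` class, and its method names are hypothetical and are not taken from Facebook's systems, which the sources do not describe at this level of detail.

```python
from dataclasses import dataclass, field

# Hypothetical thresholds; the sources do not publish real values.
REMOVE_THRESHOLD = 0.95
ALLOW_THRESHOLD = 0.05


@dataclass
class TriageSystem:
    """Toy human-in-the-loop triage: act when confident, escalate otherwise."""

    review_queue: list = field(default_factory=list)
    training_examples: list = field(default_factory=list)

    def route(self, post_id: str, violation_score: float) -> str:
        """Return the action for a post given a model score between 0 and 1."""
        if violation_score >= REMOVE_THRESHOLD:
            return "remove"
        if violation_score <= ALLOW_THRESHOLD:
            return "allow"
        # The model is unsure, so the post goes to a human reviewer.
        self.review_queue.append(post_id)
        return "human_review"

    def record_human_decision(self, post_id: str, violates: bool) -> None:
        """Store the reviewer's label so a later retraining run can use it."""
        self.review_queue.remove(post_id)
        self.training_examples.append((post_id, violates))


triage = TriageSystem()
print(triage.route("post-1", 0.99))  # confident violation
print(triage.route("post-2", 0.50))  # uncertain, escalated to a human
triage.record_human_decision("post-2", violates=True)
```

The stored `training_examples` stand in for the feedback loop: human decisions on uncertain posts become labeled data for the next version of the model.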

Until fairly recently, posts were reviewed in the order they were reported. Facebook says it wants the most important posts to be seen first, so it changed its machine learning algorithms to prioritize more severe or harmful posts [9]. Facebook has also reworked how it decides whether a post violates the community standards. It used to have separate classification systems that looked at individual parts of a post: the AI split a post up by content type and violation type, and many classifiers looked at photos and text separately. Facebook decided this was too disconnected and created a new approach. Now, Facebook says, its machine learning algorithm takes a holistic approach called Whole Post Integrity Embeddings (WPIE). WPIE was trained on a broad selection of violations, and Facebook reports that detection has greatly improved [10].
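The change from report-order review to severity-first review can be sketched with a priority queue. This is only an illustration under stated assumptions: the `ReviewQueue` class and the numeric severity scores are hypothetical, and the sources do not say how Facebook actually computes or combines its ranking signals.

```python
import heapq
from itertools import count


class ReviewQueue:
    """Toy review queue that serves the most severe reported post first."""

    def __init__(self):
        self._heap = []
        self._arrival = count()  # tie-breaker: earlier reports come first

    def report(self, post_id: str, severity: float) -> None:
        # heapq is a min-heap, so severity is negated to pop the highest first.
        heapq.heappush(self._heap, (-severity, next(self._arrival), post_id))

    def next_post(self) -> str:
        _, _, post_id = heapq.heappop(self._heap)
        return post_id


queue = ReviewQueue()
queue.report("spam-ad", severity=0.1)
queue.report("violent-threat", severity=0.9)
queue.report("second-spam-ad", severity=0.1)

print(queue.next_post())  # violent-threat
print(queue.next_post())  # spam-ad
print(queue.next_post())  # second-spam-ad
```

Posts with equal severity still come out in the order they were reported, so the older first-come, first-served behavior survives as the tie-breaking rule.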

Human Moderators

Although Facebook filters all posts through its AI technology initially, content is passed to human moderators when the AI technology decides that it requires further review. The human review teams take a closer look and decide whether or not to remove the post. In other words, for this content the human moderators get the final say in what users will see [5].

There are about 15,000 Facebook content moderators employed throughout the world [12]. Their main job is to sort through the AI-flagged posts and decide whether or not they violate the company’s guidelines [9]. Facebook has worked with many companies to help moderate content, including Cognizant, Accenture, Arvato, and Genpact [1]. Because these moderators technically work for third-party firms, they don't get the same benefits as Facebook's salaried employees [12].

Human moderators receive extremely low pay in comparison to Facebook's other employees. Moderators at Cognizant, one of the companies Facebook uses to provide moderators, earn only about $4 above the state's minimum wage. For example, Cognizant employees who work in Phoenix, Arizona make $28,800 per year, whereas the average Facebook employee has a total compensation of $240,000, more than eight times as much [7]. Many content moderators have expressed frustration with the low pay [13].

Facebook is one of the largest social media platforms: Q3 2022 data reported 2.96 billion monthly active users worldwide [14]. Because Facebook must serve so many users around the world, its human moderators are often overwhelmed. Many moderators have also described struggles with mental health as a result of the job, and many have reported that the work environment is stressful, dirty and unhealthy [1].

Self Moderation

Facebook additionally provides page manager tools that users can use to moderate their own pages. At a basic level, these control what types of posts other users can upload (photos, text, comments, or nothing). The permissions extend to banning users, hiding or deleting comments and posts, and blocking profanity or specific vocabulary from text posts. The affected posts may not violate Facebook's community standards; they are simply posts that, for whatever reason, the page manager does not want to see. While this option could be dangerous and create a biased or narrow-minded view of a topic, Facebook considers it important to give users the opportunity to moderate the content they see. Facebook also has a "Moderation Assist" feature that uses AI to censor individual accounts or posts based on their features, including accounts without a profile picture, accounts without friends or followers, posts that include links, and posts that contain custom keywords [15].
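The kinds of criteria listed for Moderation Assist can be pictured as a simple rule check. The sketch below is not Facebook's implementation or API: the `Comment` and `AssistCriteria` classes, their field names, and the link pattern are all hypothetical, chosen only to mirror the four criteria named above.

```python
import re
from dataclasses import dataclass

# Rough, hypothetical pattern for spotting links in text.
LINK_PATTERN = re.compile(r"https?://|www\.", re.IGNORECASE)


@dataclass
class Comment:
    text: str
    author_has_profile_picture: bool
    author_connection_count: int  # friends plus followers


@dataclass
class AssistCriteria:
    """Switches a page manager might turn on (names are assumptions)."""

    hide_without_profile_picture: bool = False
    hide_without_connections: bool = False
    hide_links: bool = False
    blocked_keywords: tuple = ()


def should_hide(comment: Comment, criteria: AssistCriteria) -> bool:
    """Return True if any enabled criterion matches the comment."""
    if criteria.hide_without_profile_picture and not comment.author_has_profile_picture:
        return True
    if criteria.hide_without_connections and comment.author_connection_count == 0:
        return True
    if criteria.hide_links and LINK_PATTERN.search(comment.text):
        return True
    lowered = comment.text.lower()
    return any(keyword.lower() in lowered for keyword in criteria.blocked_keywords)


criteria = AssistCriteria(hide_links=True, blocked_keywords=("giveaway",))
print(should_hide(Comment("Great post!", True, 12), criteria))
print(should_hide(Comment("Free GIVEAWAY here", True, 3), criteria))
```

Because each rule is independent, a page manager can enable any combination, and a comment is hidden as soon as one enabled rule matches.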

How Successful is Facebook’s Content Moderation Process?

More than three million posts are reported daily, by either users or the AI screening technology, as possible content guideline violations. In 2018, Mark Zuckerberg, the CEO of Facebook, stated that moderators “make the wrong call in more than one out of every 10 cases.” That equates to about 300,000 mistakes made every day. Whether one describes Facebook's content moderation as 10% inaccurate or 90% accurate depends on which side of the argument one wants to make [6].
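The 300,000 figure follows directly from the two numbers quoted above. A short worked calculation, treating both quoted figures as lower bounds (the constant and function names are chosen here for illustration only):

```python
# Figures quoted in the text; both are stated as lower bounds.
REPORTED_POSTS_PER_DAY = 3_000_000  # "over three million posts reported daily"
WRONG_CALLS_PER_TEN = 1             # "more than one out of every 10 cases"


def daily_mistakes(reported_posts: int, wrong_per_ten: int) -> int:
    """Estimate wrong calls per day using integer arithmetic."""
    return reported_posts * wrong_per_ten // 10


mistakes = daily_mistakes(REPORTED_POSTS_PER_DAY, WRONG_CALLS_PER_TEN)
correct = REPORTED_POSTS_PER_DAY - mistakes
print(f"{mistakes:,} wrong calls and {correct:,} correct calls per day")
# prints: 300,000 wrong calls and 2,700,000 correct calls per day
```

The same split yields both framings: 300,000 wrong calls is the 10% view, and 2,700,000 correct calls is the 90% view.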

AI Mistakes

Facebook has faced criticism of its content moderation process, specifically of its automated removal systems, its vague rules, and its unclear explanations of its decisions. The content moderation AI faces backlash for unreliable algorithms, vague standards and guidelines, an inability to take context into account, and a lack of proportionality [8].

In Facebook's defense, however, it is important to note that Facebook knows its AI algorithms are not perfect. Zuckerberg stated in February 2022 that the company's goal is to get its AI to a "human level" of intelligence, where it would be capable of learning, predicting, and acting on its own [17]. Facebook also constantly changes its guidelines to stay up to date with current times, and it is hard for the algorithm to change and update at the same pace [5].

Instances

Facebook is fairly successful in its content moderation efforts, with a success rate of 90% for human moderators [6] and over 95% for its AI technologies on violent and sexually inappropriate content in recent years [18]. In the media and news, however, negative situations caused by Facebook's content moderation are highlighted much more often. This could be because approximately 90% of all media news is negative [19]. As a result, it is challenging to find cases where Facebook is praised for its content moderation results and much easier to hear about negative instances [8].

Covid-19 (The Good & The Bad)

During the Covid-19 pandemic, misinformation spread about the disease and the vaccine [20]. Initially, Facebook did not want to restrict free speech. However, it realized that incorrect or misleading information was occasionally being shared, which resulted in policy changes such as linking to new CDC updates and removing factually incorrect posts [21].

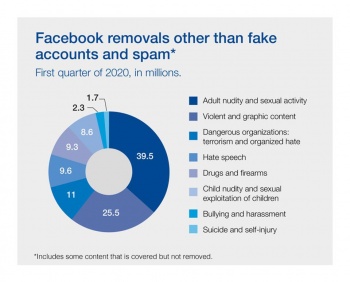

When the pandemic hit in March 2020, Facebook decided to send its human moderators home and rely only on AI. As a result, Facebook removed fewer posts containing child sexual abuse material and suicide and self-injury content. The drop in removals was not due to a decrease in those types of posts, but to the limited number of humans available to look at them. Facebook also stated that it did not feel comfortable having people review extremely graphic content at home [22].

Humans are not responsible for finding all child sexual abuse material: automated systems remove 97.5% of the posts of that type that appear on Facebook. But if human moderators aren't able to flag those types of posts, the AI system is unable to find them at scale. The Covid-19 pandemic showed Facebook how vital human moderators are to making its content moderation a success [22].

Even though Facebook had access to fewer human moderators due to Covid-19, it noted that it was able to improve in other areas through its AI technology. Examples include better proactive detection rates for hate speech, terrorism, and bullying and harassment content [23].

Language Gaps

Facebook reported that almost two-thirds of users use a language other than English [24]. Facebook's content moderation strategy has a hard time understanding and policing non-English content because its machine learning algorithms don’t fully understand the nuances of language. For example, Facebook is much less successful at moderating content in Arabic. One study done in 2020 found that Facebook's human and automated reviewers struggle to comprehend the different dialects used across the Middle East and North Africa. As a result, Facebook has wrongfully censored harmless posts as promoting terrorism and has left up hateful speech that should have been removed. Users in various countries, such as Afghanistan, have also reported that they can’t easily report problematic content because Facebook has not translated its community standards into their country’s official languages [25].

Facebook has relied heavily on artificial intelligence filters that commonly make mistakes, which leads to “a lot of false positives and a media backlash.” This reliance is due to the limited number of moderators who are skilled in those languages [26].

Throughout all of this, Facebook has made it known that they are aware of the inaccuracies of their content moderation system and are constantly working hard to improve. Their goals are to enhance the algorithms and enlist more moderators from less-represented countries. They are aware that there is not one clear strategy to fix this issue and are proactively working to better their approach [27].

Removal of Content That's Not a Violation of Community Standards

There have been many cases where Facebook's algorithms removed content that had been flagged but did not actually violate the community standards. Each time, Facebook acknowledged its mistake and changed its policies to improve [8].

An example of this occurred during the #MeToo movement, when many women shared stories on Facebook of their experiences with sexual abuse, harassment, and assault that technically violated Facebook’s rules. Initially, Facebook flagged these posts because of those violations. However, the Facebook policy team decided those stories were important for people to be able to share. Facebook relied more heavily on human moderators for posts like these and wrote new rules or tweaked existing ones to make enforcement consistent [28].

Ethical Concerns

Mental Health & Unfair Treatment of Human Moderators

One of the biggest ethical concerns about Facebook’s content moderation process is the treatment of its human moderators, who are reported to receive unfair wages, work unfair hours, and be left to deal with many mental health issues [6].

Former Facebook moderators have come forward to say that they have struggled with PTSD and trauma from some of the content they were forced to engage with [30]. One former moderator, Josh Sklar, has stated that Facebook was always changing its policies and guidelines and never gave moderators a specific quota; in other words, he saw the work as extremely mismanaged. He also expressed great frustration with the type of content he was reviewing (such as child sexual abuse material). He cited that as his main reason for quitting and hopes that Facebook will put more emphasis on the mental health of these moderators in the future [31]. A current employee, Isabella Plunkett, states that she signed an NDA when she was hired, so she is unable to talk to her family and friends about what she sees. She also noted that she does not know how much longer she can continue to do content moderation for Facebook because of the strain on her mental health. She describes the support as insufficient and notes that the wellness coaches are not qualified psychiatrists [32].

Facebook uses content moderation provider companies from around the world, and one of its largest recently decided to stop working with Facebook. A TIME investigation found low pay, trauma and alleged union-busting surrounding the company [13]. Although Facebook has stated that it will work to address the poor workplace conditions of its content moderators, human moderators continue to complain about them [33].

Moderation Rules

Facebook constantly gets attacked from all sides. Conservatives, liberals, governments, news organizations, and human rights organizations all argue that Facebook is supporting something they are against. In order to be fair and avoid bias toward any particular group, Facebook relies on its community standards (or moderation rules). These rules cover the different types of content users may see [28].

This breakdown of moderation rules can be seen as unethical because it forces posts into classifications. Forced classification can be dangerous because items may not fit neatly into the available boxes, which creates an imbalance [34]. In Facebook's case, classifications can cause the incorrect removal of some posts [8]. However, Facebook's AI algorithms rely on classification systems to sort posts effectively [10], so it is tricky to find the right balance.

One example is the removal of images of breastfeeding mothers and of mastectomy scars displayed by cancer survivors, which were flagged as sexual imagery. Facebook's nudity policy has a disproportionate impact on female users, since posts showing male breasts are not a violation but posts showing female breasts are. Facebook did acknowledge this mistake and made an effort to create a more balanced approach [8].

References

- [1] Wong, Q. (2019, June). Facebook content moderation is an ugly business. Here's who does it. CNET. Retrieved from https://www.cnet.com/tech/mobile/facebook-content-moderation-is-an-ugly-business-heres-who-does-it/

- [2] Roberts, S. T. (2017). Content moderation. In Encyclopedia of Big Data. UCLA. Retrieved from https://escholarship.org/uc/item/7371c1hf

- [3] Facebook. Meta. Retrieved from https://about.meta.com/technologies/facebook-app/

- [4] Meta. Facebook community standards. Transparency Center. Retrieved from https://transparency.fb.com/policies/community-standards/

- [5] How does Facebook use artificial intelligence to moderate content? Facebook Help Center. Retrieved from https://www.facebook.com/help/1584908458516247

- [6] Barrett, P. M. (2020). Who Moderates the Social Media Giants? A Call to End Outsourcing. NYU Stern Center for Business and Human Rights.

- [7] Simon, S. & Bowman, E. (2019, March 2). Propaganda, hate speech, violence: The working lives of Facebook's content moderators. NPR. Retrieved from https://www.npr.org/2019/03/02/699663284/the-working-lives-of-facebooks-content-moderators

- [8] Hecht-Felella, L. & Patel, F. (2022, November 18). Facebook's content moderation rules are a mess. Brennan Center for Justice. Retrieved from https://www.brennancenter.org/our-work/analysis-opinion/facebooks-content-moderation-rules-are-mess

- [9] Vincent, J. (2020, November 13). Facebook is now using AI to sort content for quicker moderation. The Verge. Retrieved from https://www.theverge.com/2020/11/13/21562596/facebook-ai-moderation

- [10] New progress in using AI to detect harmful content. Meta AI. Retrieved from https://ai.facebook.com/blog/community-standards-report/

- [11] Shead, S. (2020, November 19). Facebook Moderators Say Company has risked their lives by forcing them back to the Office. CNBC. Retrieved from https://www.cnbc.com/2020/11/18/facebook-content-moderators-urge-mark-zuckerberg-to-let-them-work-remotely.html

- [12] Leskin, P. (2021, April 14). Facebook content moderator who quit reportedly wrote a blistering letter citing 'stress induced insomnia' among other 'trauma'. Business Insider. Retrieved from https://www.businessinsider.com/facebook-content-moderator-quit-with-blistering-letter-citing-trauma-2021-4

- [13] Perrigo, B. (2023, January 10). Facebook's partner in Africa Sama Quits content moderation. Time. Retrieved from https://time.com/6246018/facebook-sama-quits-content-moderation/

- [14] Facebook: quarterly number of MAU (monthly active users) worldwide 2008-2022. Statista. (2022, October 27). Retrieved from https://www.statista.com/statistics/264810/number-of-monthly-active-facebook-users-worldwide/#statisticContainer

- [15] Meta Business Help Center: Moderation. Meta. Retrieved from https://www.facebook.com/business/help/1323914937703529

- [16] Koetsier, J. (2021, June 30). Report: Facebook makes 300,000 content moderation mistakes every day. Forbes. Retrieved from https://www.forbes.com/sites/johnkoetsier/2020/06/09/300000-facebook-content-moderation-mistakes-daily-report-says/?sh=287ba53d54d0

- [17] Hays, K. Facebook is trying to advance AI to 'human levels' in order to build its metaverse. Business Insider. Retrieved from https://www.businessinsider.com/facebook-metaverse-challenges-include-ai-development-to-human-level-2022-2?r=US&IR=T

- [18] Bassett, C. (2022, January 17). Will AI take over content moderation? Mind Matters. Retrieved from https://mindmatters.ai/2022/01/will-ai-take-over-content-moderation/

- [19] Clayson, J. & McMahon, S. (2021, April 5). U.S. media offers negative picture of covid-19 pandemic, study finds. Here & Now. Retrieved from https://www.wbur.org/hereandnow/2021/04/05/news-media-negative-coronavirus

- [20] Yang, A., Shin, J., Zhou, A., Huang-Isherwood, K. M., Lee, E., Dong, C., Kim, H. M., Zhang, Y., Sun, J., Li, Y., Nan, Y., Zhen, L. & Liu, W. (2021, August 30). The battleground of COVID-19 vaccine misinformation on Facebook: Fact checkers vs. misinformation spreaders: HKS Misinformation Review. Misinformation Review. Retrieved from https://misinforeview.hks.harvard.edu/article/the-battleground-of-covid-19-vaccine-misinformation-on-facebook-fact-checkers-vs-misinformation-spreaders/

- [21] Krishnan, N., Gu, J., Tromble, R. & Abroms, L. C. (2021, December 15). Research note: Examining how various social media platforms have responded to COVID-19 misinformation: HKS Misinformation Review. Misinformation Review. Retrieved from https://misinforeview.hks.harvard.edu/article/research-note-examining-how-various-social-media-platforms-have-responded-to-covid-19-misinformation/

- [22] Lapowsky, I. (2020, August 12). How covid-19 helped - and hurt - facebook's fight against bad content. Protocol. Retrieved from https://www.protocol.com/covid-facebook-content-moderation

- [23] Rodriguez, S. (2020, August 11). Covid-19 slowed Facebook's moderation for suicide, self-injury and child exploitation content. CNBC. Retrieved from https://www.cnbc.com/2020/08/11/facebooks-content-moderation-was-impacted-by-covid-19.html

- [24] Introducing the first AI model that translates 100 languages without relying on English. Meta. (2021, March 24). Retrieved from https://about.fb.com/news/2020/10/first-multilingual-machine-translation-model/

- [25] Simonite, T. (2021, October 25). Facebook is everywhere; its moderation is nowhere close. Wired. Retrieved from https://www.wired.com/story/facebooks-global-reach-exceeds-linguistic-grasp/

- [26] Akram, F. & Debre, I. (2021, October 25). Facebook's language gaps let through hate-filled posts while blocking inoffensive content. Los Angeles Times. Retrieved from https://www.latimes.com/world-nation/story/2021-10-25/facebook-language-gap-poor-screening-content

- [27] Debre, I. (2021, October 25). Facebook's language gaps weaken screening of hate, terrorism. AP NEWS. Retrieved from https://apnews.com/article/the-facebook-papers-language-moderation-problems-392cb2d065f81980713f37384d07e61f

- [28] Koebler, J. & Cox, J. (2018, August 23). The Impossible Job: Inside Facebook’s Struggle to Moderate Two Billion People. VICE. Retrieved from https://www.vice.com/en/article/xwk9zd/how-facebook-content-moderation-works

- [29] Chen, A. (2014, October 23). The laborers who keep Dick Pics and beheadings out of your facebook feed. Wired. Retrieved from https://www.wired.com/2014/10/content-moderation/

- [30] Sklar, J. & Silverman, J. (2023, January 26). I was a Facebook content moderator. I quit in disgust. The New Republic. Retrieved from https://newrepublic.com/article/162379/facebook-content-moderation-josh-sklar-speech-censorship

- [31] Newton, C. (2019, February 25). The trauma floor: The secret lives of Facebook moderators in America. The Verge. Retrieved from https://www.theverge.com/2019/2/25/18229714/cognizant-facebook-content-moderator-interviews-trauma-working-conditions-arizona

- [32] Criddle, C. (2021, May 12). Facebook moderator: 'every day was a nightmare'. BBC News. Retrieved from https://www.bbc.com/news/technology-57088382

- [33] Messenger, H. & Simmons, K. (2021, May 11). Facebook content moderators say they receive little support, despite company promises. NBCNews.com. Retrieved from https://www.nbcnews.com/business/business-news/facebook-content-moderators-say-they-receive-little-support-despite-company-n1266891

- [34] Bowker, G. C. & Star, S. L. (2008). Introduction: To Classify is Human. In Sorting things out: Classification and its consequences (pp. 1–32). MIT Press.