YouTube recommendation algorithm


Revision as of 13:22, 17 March 2020

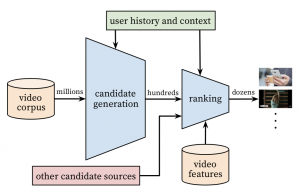

YouTube’s recommendation algorithm is the method by which new videos are recommended to users of the YouTube video-sharing platform. The algorithm is designed to present users with engaging new videos, and it generates recommendations based on both the user’s history of watched videos and the recorded tendencies of other users[1][2]. The algorithm uses deep learning to narrow millions of possible videos down to a smaller subset, which is then displayed to the user[3]. In October 2006, YouTube was bought by Google, but it continued to operate somewhat independently and without major changes to its recommendation algorithm[4].


Algorithm details

The recommendation algorithm consists of two neural networks: one for video candidate generation and one for ranking[5].

Candidate Generation

During candidate generation, the algorithm reads the user’s activity history (the list of previously watched videos) and selects a subset of a few hundred possible videos from a corpus of millions. This is done with a deep neural network trained on user histories and implicit feedback. Implicit feedback refers to signals such as the number of watches and whether users finished a video; explicit feedback, by contrast, consists of direct ratings such as thumbs up/down and survey responses, which are much sparser. Candidate generation is framed as a classification problem over the whole corpus: during training, a softmax classifier predicts which video the user will watch next, and at serving time a nearest neighbor search retrieves the highest-scoring videos. Specific inputs to the algorithm include users’ search history, watch history, geographic location, device used, gender, logged-in state, and age[6]. Furthermore, according to Google, “Training examples are generated from all YouTube watches (even those embedded on other sites) rather than just watches on the recommendations we produce. Otherwise, it would be very difficult for new content to surface and the recommender would be overly biased towards exploitation.”[7] All of these factors serve as inputs at different stages, depending on the context and goal, for example refreshing the home page versus returning results for a specific search. This phase passes a few hundred videos on to the ranking phase.
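The candidate-generation step can be sketched as a toy Python example. This is an illustration under stated assumptions, not YouTube’s code: the five video IDs and their three-dimensional embeddings are invented, the user is represented simply by averaging the embeddings of watched videos, already-watched videos are excluded from the results as an illustrative choice, and a brute-force search stands in for the approximate nearest neighbor index a production system would likely use. The helper names (`user_vector`, `softmax_probabilities`, `generate_candidates`) are hypothetical.

```python
import math

# Hypothetical toy corpus: video ID -> embedding vector.
# In a real system these embeddings would be learned; here they are fixed by hand.
VIDEO_EMBEDDINGS = {
    "v1": [0.9, 0.1, 0.0],
    "v2": [0.8, 0.2, 0.1],
    "v3": [0.0, 0.9, 0.3],
    "v4": [0.1, 0.1, 0.9],
    "v5": [0.7, 0.0, 0.2],
}


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def user_vector(watch_history):
    """Simplified user representation: the mean of the watched videos' embeddings."""
    vectors = [VIDEO_EMBEDDINGS[v] for v in watch_history]
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def softmax_probabilities(user_vec):
    """Training view: P(next watch = i | user) = exp(v_i . u) / sum_j exp(v_j . u)."""
    logits = {vid: dot(vec, user_vec) for vid, vec in VIDEO_EMBEDDINGS.items()}
    max_logit = max(logits.values())  # subtract the max for numerical stability
    exps = {vid: math.exp(logit - max_logit) for vid, logit in logits.items()}
    total = sum(exps.values())
    return {vid: e / total for vid, e in exps.items()}


def generate_candidates(watch_history, k):
    """Serving view: brute-force nearest neighbor search in dot-product space.

    Assumption for illustration: videos already in the history are skipped.
    """
    u = user_vector(watch_history)
    unseen = [vid for vid in VIDEO_EMBEDDINGS if vid not in watch_history]
    unseen.sort(key=lambda vid: dot(VIDEO_EMBEDDINGS[vid], u), reverse=True)
    return unseen[:k]
```

For a user whose history is `["v1"]`, `generate_candidates(["v1"], 2)` returns `["v2", "v5"]`. Because the softmax is monotonic in the dot product, ordering videos by softmax probability or by raw dot product gives the same result, which is why serving can skip the softmax and go straight to a nearest neighbor search.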

Ranking

During ranking, a score is assigned to each of the few hundred candidate videos using a much richer set of video features. These scores generally approximate expected user watch time and are produced by a weighted logistic regression. Features considered include the video thumbnail, the user’s previous interaction with the channel that posted the video, and whether the video has previously been recommended to the user. Watch time is used instead of click-through rate in order to avoid promoting “deceptive videos that the user does not complete (‘clickbait’).”[9] The list of videos is then sorted by score and shown on the user’s page.
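A toy version of the ranking step follows. It assumes the weighted logistic regression set-up described in the Google paper this section cites: clicked impressions are weighted by their watch time during training, unclicked impressions get unit weight, and at serving time the score is the odds e^(w·x + b), which under that weighting approximates expected watch time. The feature names (`channel_affinity`, `times_previously_shown`), weights, and bias are invented for illustration, and no training loop is shown; the helper names are hypothetical.

```python
import math

# Hypothetical learned parameters; names and values are illustrative only.
WEIGHTS = {"channel_affinity": 1.2, "times_previously_shown": -0.5}
BIAS = 0.3


def logit(features):
    """Linear part of the model: w . x + b."""
    return BIAS + sum(WEIGHTS[name] * value for name, value in features.items())


def training_weight(clicked, watch_seconds):
    """Clicked impressions are weighted by watch time; unclicked ones get weight 1."""
    return watch_seconds if clicked else 1.0


def weighted_log_loss(examples):
    """Weighted logistic loss over (features, clicked, watch_seconds) examples."""
    total = 0.0
    for features, clicked, watch_seconds in examples:
        p = 1.0 / (1.0 + math.exp(-logit(features)))  # sigmoid of the logit
        w = training_weight(clicked, watch_seconds)
        total += -w * (math.log(p) if clicked else math.log(1.0 - p))
    return total


def expected_watch_time_score(features):
    """Serving score: the odds e^(w . x + b), read as an expected-watch-time estimate."""
    return math.exp(logit(features))


def rank(candidates):
    """Sort candidate video IDs (dict: ID -> features) by descending score."""
    return sorted(candidates,
                  key=lambda vid: expected_watch_time_score(candidates[vid]),
                  reverse=True)
```

Under this weighting, a video that is clicked but abandoned after a few seconds contributes only a small weight during training, so the model has less incentive to favor clickbait than a model trained on clicks alone.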

Criticism

The YouTube algorithm has been scrutinized by users on other social media platforms, such as Reddit and Instagram, in the form of memes. These memes criticize the algorithm for recommending old or out-of-the-ordinary videos, and for disproportionately using “weird” watch history for new recommendations[10][11].

Ethical Concerns

Radicalization

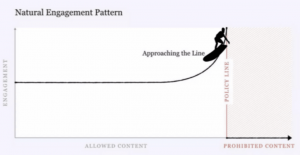

The YouTube recommendation algorithm has faced scrutiny for recommending radical videos based on innocuous user history. For example, watching videos of Donald Trump rallies led to one user being recommended white supremacist and Holocaust denial speeches. The same user, after watching videos about vegetarianism, was recommended videos about veganism[12]. These recommendations led Zeynep Tufekci, a writer for The New York Times, to assert that YouTube’s algorithm exploits a “natural human desire: to look ‘behind the curtain,’ to dig deeper into something that engages us”[13]. She cites Google’s bottom line as the cause: “YouTube leads viewers down a rabbit hole of extremism, while Google racks up the ad sales”[14]. Tufekci is not alone in condemning the Google and YouTube business model. Ben Popken, of NBC News, criticized YouTube for recommending extremist videos in order to capture as much of users’ time as possible and make more money. According to Guillaume Chaslot, a software engineer in artificial intelligence, the consequence of these recommendations, combined with YouTube’s large audience, is “gaslighting people to make them believe that everybody lies to them just for the sake of watch time”[15].

Mar Masson Maack commented on this effect, quoting Guillaume Chaslot: “divisive and sensational content is often recommended widely: conspiracy theories, fake news, flat-Earther videos, for example. Basically, the closer it stays [to] the edge of what’s allowed under YouTube’s policy, the more engagement it gets”[16].

Algorithm inputs and privacy

In order to make the recommendation algorithm as effective as possible, YouTube gathers a wide range of user data. According to Google’s Privacy & Terms, YouTube collects[17]:

- Your name and password

- Unique identifiers such as your browser, device, application you are using, device settings, operating system, mobile network information such as carrier and phone number, and Google/YouTube application version number

- Payment information

- Email address

- Content you create, upload, or receive from others, such as emails, photos, videos, documents, spreadsheets, and YouTube comments.

- Terms you search for

- Videos you watch

- Your interaction with content and ads

- Your voice and audio information (if you use audio features such as dictation)

- Purchase activity

- People with whom you communicate

- Activity on third-party sites that use Google services

- Chrome browser history

- Your geographical location

- Information about things near your device (Wi-Fi, cell towers, Bluetooth devices)

- Information about you available through public sources

- Information about you gathered by their marketing partners and advertisers

This information is stored and used for a variety of purposes, including customized search results, personalized ads, and improvements to Google’s software[18]. In September 2019, Google was fined $170 million for violating the Children’s Online Privacy Protection Act (COPPA)[19]. According to Makena Kelly, Google refused to acknowledge that portions of YouTube were specifically geared towards children, and as a result YouTube lacked appropriate privacy policies for children’s videos. After the COPPA case, YouTube introduced additional algorithms to identify content aimed at children, stating that it “will limit data collection and use on videos made for kids only to what is needed to support the operation of the service”[20].

Restriction of LGBTQ content

YouTube is currently being sued by a group of content creators on the grounds of “unlawful content regulation, distribution, and monetization practices that stigmatize, restrict, block, demonetize, and financially harm the LGBT Plaintiffs and the greater LGBT Community”[21][22]. According to these creators, the YouTube algorithm targets channels and videos containing keywords such as “gay,” “bisexual,” or “transgender”[23]. According to Tom Foremski, the issue is not that YouTube is purposefully discriminatory, but that it uses algorithms in place of human moderators in order to save money on labor: “Google's algorithms are not that smart -- especially when it comes to cultural and political issues where they can't discriminate between legitimate and harmful content. The software has no understanding of what it is viewing.”[24]

See Also

References

1. https://storage.googleapis.com/pub-tools-public-publication-data/pdf/45530.pdf

2. https://thenextweb.com/google/2019/06/14/youtube-recommendations-toxic-algorithm-google-ai/

3. https://storage.googleapis.com/pub-tools-public-publication-data/pdf/45530.pdf

4. https://en.wikipedia.org/wiki/History_of_YouTube

5. https://storage.googleapis.com/pub-tools-public-publication-data/pdf/45530.pdf

6. https://storage.googleapis.com/pub-tools-public-publication-data/pdf/45530.pdf

7. https://storage.googleapis.com/pub-tools-public-publication-data/pdf/45530.pdf

8. https://www.researchgate.net/profile/Massimo_Airoldi/publication/303096460_Follow_the_algorithm_An_exploratory_investigation_of_music_on_YouTube/links/5ca7483792851c64bd513531/Follow-the-algorithm-An-exploratory-investigation-of-music-on-YouTube.pdf

9. https://storage.googleapis.com/pub-tools-public-publication-data/pdf/45530.pdf

10. https://www.reddit.com/r/dankmemes/comments/b24how/first_of_all_the_youtube_algorithm/

11. https://knowyourmeme.com/photos/1513030-anthony-adams-rubbing-hands

12. https://coinse.io/assets/files/teaching/2019/cs489/Tufekci.pdf

13. https://coinse.io/assets/files/teaching/2019/cs489/Tufekci.pdf

14. https://coinse.io/assets/files/teaching/2019/cs489/Tufekci.pdf

15. https://www.nbcnews.com/tech/social-media/algorithms-take-over-youtube-s-recommendations-highlight-human-problem-n867596

16. https://thenextweb.com/google/2019/06/14/youtube-recommendations-toxic-algorithm-google-ai/

17. https://policies.google.com/privacy?hl=en

18. https://policies.google.com/privacy?hl=en

19. https://www.theverge.com/2019/9/4/20848949/google-ftc-youtube-child-privacy-violations-fine-170-milliion-coppa-ads

20. https://www.theverge.com/2019/9/4/20848949/google-ftc-youtube-child-privacy-violations-fine-170-milliion-coppa-ads

21. https://www.theverge.com/2019/8/14/20805283/lgbtq-youtuber-lawsuit-discrimination-alleged-video-recommendations-demonetization

22. https://www.zdnet.com/article/lgbtq-the-missing-letters-in-googles-youtube-alphabet-and-the-moral-struggle-of-algorithms/

23. https://www.theverge.com/2019/8/14/20805283/lgbtq-youtuber-lawsuit-discrimination-alleged-video-recommendations-demonetization

24. https://www.zdnet.com/article/lgbtq-the-missing-letters-in-googles-youtube-alphabet-and-the-moral-struggle-of-algorithms/