Tristan Harris

| Birthname | Tristan Harris |

| Date of Birth | 1984 |

| Birth Place | San Francisco, CA |

| Nationality | American |

| Occupation | Founder of the Center for Humane Technology |

| Biography | Information Ethicist |

Tristan Harris (self-pronounced “TRIST-ahn”)[1] is a former software engineer, computer scientist, psychologist, and prominent information ethicist.[2] He was featured in the 2020 Netflix documentary “The Social Dilemma,” which highlights the impact of social networking on people, and in which he speaks about his experiences working at Google.[3] He is the founder of the Center for Humane Technology, a nonprofit organization focused on the ethics of consumer technology.[4] TIME Magazine listed Harris in its 100 “Next Leaders Shaping the Future” and Rolling Stone Magazine named him in “25 People Shaping the World.”[5]

Early life and Education

Born in 1984,[6] Harris grew up during the Digital Age.[7] As a child, he was fascinated by magic, which contributed to his growing interest in technology and its influence on human behavior.[8] Harris attended Stanford University and graduated in 2006 with a degree in Computer Science.[9] During his time at Stanford, Harris interned at Apple and helped create the “Spotlight for Help” feature, designed to allow users to find apps, documents, and files on their Mac.[10] Fifteen years later, this feature appears as a magnifying glass in the top-right corner of current MacBooks.

In addition to his computer science classes, Harris took supplemental courses in behavioral economics, social psychology, behavior change, and habit formation. He began to focus on human behavior change while working at Professor BJ Fogg’s Stanford Persuasive Technology Lab. These supplemental studies inspired him to pursue a master's degree in the “psychology of behavior change,”[11] but he stopped out before completing the program.

While in school, Harris founded Apture, a service that allowed blogs and news sites to add facts or insights to their content. Harris developed Apture to address a "search leak" problem among online publications, in which users would leave a publication's content to look up a term from an article or blog post. Apture allowed users to bring up search results for any word in an article with a click, so they did not have to leave the current webpage.[12] The service was successful and was eventually acquired by Google, where Harris joined the team as a product manager.[2]

Work and Career

At Google, Harris started and headed Google’s “Reminder Assist” project, now used across Google products. It is an auto-completion engine to help users "remember things that they want to do."[11] While working as a product manager, Harris attended Burning Man, which he said "awakened him to question his beliefs."[13] Upon his return, he created a 144-page slide deck titled “A Call to Minimize Distraction & Respect Users’ Attention”[14] that discussed Big Tech's abuse of the attention economy. In the presentation, Harris conveys the severity of the issue: “Never before in history have the decisions of a handful of designers working at three companies (Google, Apple, and Facebook) had so much impact on how millions of people around the world spend their attention.”[13] He also suggested that big companies such as Google, Apple, and Facebook should “feel an enormous responsibility” to make sure society does not spend every second buried in a smartphone.[15] Harris sparked a conversation about the monopolization of attention by Big Tech. Although Harris shared the slideshow with only 10 people inside the company, it spread to 5,000 Google employees and even reached the CEO at the time, Larry Page. Thousands of employees applauded his statements and agreed with him. Despite the positive reception, Harris states that nothing changed: product roadmaps and requirements still had to be met.

Harris transitioned from Product Manager to Design Ethicist and Product Philosopher within Google. In this position, Harris influenced how the company handled information ethics. His primary job was to study how digital applications affect users' mental health and behavior.[2] Using findings from this study, Harris developed a framework for how technology could ethically steer the thoughts and actions of millions of people.[16] He also spent time trying to make Big Tech companies recognize the social implications of their products. The Atlantic referred to Harris as "the closest thing Silicon Valley has to a conscience."[13] However, Harris felt limited at Google and unable to enact change, prompting him to leave the company in 2013.[17] Harris left Google to co-found a 501(c)(3) nonprofit organization called "Time Well Spent," which is now known as the "Center for Humane Technology."[18] Through this organization, Harris wanted to mobilize support for an alternative approach at tech corporations, one centered on core values. Harris believes that human minds are easily controlled and that people's choices are not as free as they believe.[19]

Several epigrams have guided Harris in his effort to safeguard individuals’ freedom of choice, and he has tried to act on them. They include “I promise to use this day to the fullest, realizing it can never come back again” and “Do not open [with reference to Harris’ own laptop] without intention.”[1]

Center for Humane Technology

After leaving Google, Harris started Time Well Spent, a movement focused on promoting the use of technology with intention and care, in opposition to tech companies' goal of holding the user's attention for as long as possible.[20] Harris claims that technology is responsible for reduced attention spans, distraction, information overload, polarization, and social isolation, among other harms.[21] The movement gained so much attention that Harris went on to co-found the Center for Humane Technology with Aza Raskin and Randima Fernando. The Center for Humane Technology is a non-profit dedicated to shifting the digital infrastructure toward "humane technology that supports our well-being, democracy, and shared information environment."[22] Its work includes educating the public, supporting technologists through training workshops, and advocating for policy change to advance legislation addressing Big Tech.[23]

The Center for Humane Technology has been named one of Fast Company's most innovative companies for its work, which includes giving tech users tips to control their content consumption, such as charging their phone outside of their room and disabling app notifications.[24] These tips, and many others, are featured on the Center for Humane Technology's website under the title Take Control. The page emphasizes mindful consumption of content as well as consumption of content from multiple points of view. In an interview at the NYC Media Lab Summit in 2020, Harris recommended that users swap phones with someone who holds contrasting views and scroll through that person's Facebook feed. He calls this activity a "reality swap," in which users can see that, because of social media, people can consume entirely different information.[25]

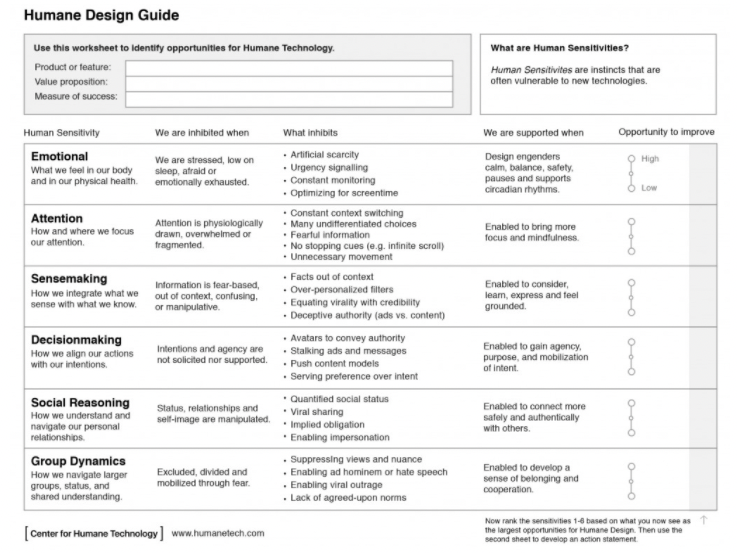

He also created the Humane Design Guide, which teaches designers how to identify opportunities to create humane technology.[21]

The Center for Humane Technology continues to expand its work. In 2021, it is launching a course called the Fundamentals of Humane Technology, developed to "explore the personal, societal, and practical challenges of being a humane technologist."[4]

Your Undivided Attention

Harris also co-hosts a podcast called “Your Undivided Attention” with Aza Raskin, produced by the Center for Humane Technology and available on Apple Podcasts.[5] The podcast explores how social media’s competition for attention leads to choice manipulation, the obscuring of “truth,” and the upsetting of the balance of real-world communities.[5][26] Episodes generally feature interviews with a wide range of guests, including anthropologists, researchers, activists, experts, cultural leaders, and faith-based leaders. Harris and Raskin aim not only to investigate and discuss remedies to complex ethical issues, but also to offer insight into human nature.[26] As of April 15, 2021, the series spans 33 episodes.[26]

Speaker Events and Calls to Action

Congress Hearings

In addition to his work at the Center for Humane Technology, Harris often travels across the country to spread his message, and he has spoken at two United States Congress hearings. At the first, held on June 25, 2019, and titled “Optimizing for Engagement: Understanding the Use of Persuasive Technology on Internet Platforms,” he testified about how technology giants are taking advantage of people’s attention.[27] The most recent, held on January 8, 2020, and titled “Americans at Risk: Manipulation and Deception in the Digital Age,” highlighted the urgency of legislation to curb Big Tech’s leverage over the public.[28]

TED Talks

How Better Tech Could Protect Us From Distraction

At the beginning of the Time Well Spent movement in 2014, Harris gave this TED Talk in Brussels. He suggests that technologies need to return the power of choice to the user. This could take the form of “pausing” our technologies, an idea commonly seen today in time limits on apps. He emphasizes that designers need to reengineer their platforms to make a net positive contribution to humanity, while users themselves need to demand this change.[29]

How a Handful of Tech Companies Control Billions of Minds Every Day

In this 2017 talk, Harris lists three radical steps that he believes society must take to counteract how these companies are hijacking our minds.[30]

- Transform our self-awareness: many people believe that they are not susceptible to persuasion.[31]

- Reform the way that these systems are inherently designed: for instance, one way to do this would be to replace comments on Facebook with a ‘Let’s Meet’ button. Harris presents this as a way to make our time unfragmented: instead of constant distraction, people would schedule a productive amount of time to discuss a topic with someone. Over time, this would shift society back towards face-to-face interactions as opposed to hiding behind the screens of these new technologies and platforms. It would also greatly reduce the polarization of people's views, because we could actually understand who we are talking to before we make a comment.[31]

- Transform business and increase accountability: social media companies monetize through corrupt advertising models. Business models should not rely on advertising that targets demographic groups and polarizes them. Instead, we should transition towards subscriptions and micropayments. Just as society is moving away from coal, tech giants need to make this transition for a more socially sustainable future.[31]

60 Minutes

Harris spoke with television personality Anderson Cooper on 60 Minutes about smartphones, apps, and social media from the perspective of a 'tech insider.' He compares smartphones to slot machines that instill destructive habits in users who are constantly looking for interactions on their devices. He spoke about his journey through Stanford, Google, and eventually to his current position as the founder of the Center for Humane Technology. His call to action is directed at tech insiders and developers, rather than users, asking them to consider the dangerous effects that their deliberately addictive platforms are having on users.[32] The episode also featured two others who work on related topics: Ramsay Brown, a programmer who applies neuroscience principles to "hack" users' brains and keep them on social media apps as long as possible, and Larry Rosen, a psychologist at California State University, Dominguez Hills, who studies technology's effect on anxiety levels.[32]

The Social Dilemma

Harris was the lead interview subject in the 2020 Netflix movie "The Social Dilemma." The film caused considerable controversy in Silicon Valley, with Facebook calling it a "conspiracy documentary". Neither Harris nor the Center for Humane Technology was involved in financing or creating the movie, but Harris has been an adamant defender of the documentary.[34] Harris claims that the film intended to highlight the business model in Big Tech that prioritizes engagement at the cost of giving each person their own (skewed) reality. Critics objected to what they saw as the film's suggestion that a group of leaders and developers secretly manipulates every world event to alter users' understanding of reality. Harris rebuts this, claiming that the critics misread what the film says. The film portrayed the AI behind social networking and information sharing platforms as three men in a futuristic "mission control center" who twist and turn knobs to manipulate users into performing desired actions. The three men represented the growth, engagement, and advertising goals of the AI. According to Harris, many critics were quick to "misunderstand" the characters in mission control as leaders in Big Tech, and much of the criticism of the film's message stems from this fundamental misunderstanding. The film also received backlash for its generalization that social media algorithms have directly caused a rise in self-harm among teenage girls, a rise in nationalism, and a potential rise in loneliness and withdrawal. Harris claims that the filmmakers had control over the movie's editing and are therefore to blame for this overgeneralization. However, he is firm in the belief that the algorithms he scrutinizes are the main factor causing these issues. Harris agrees that the movie is heavily dramatized and that this has muddled the main ideas expressed by the film.
Harris believes that the movie piqued global interest but recommends that viewers listen to his podcast for a clear-cut discussion of the issues presented.[35]

Criticism

An anonymous tech executive has criticized Harris, claiming that he misleads his audience into believing that tech companies are omnipotent entities with total control over a person’s actions. The executive argues that tech companies creating satisfying products to encourage continued usage is akin to restaurants striving to create good-tasting food so that patrons return, and in doing so challenges Harris’ criticisms of tech companies and implies that they are extreme.[36]

Another critic of Harris’ message claims that his solution to the problem of social media’s pervasive, domineering influence is self-contradictory, as it advocates for greater emphasis on using the same tools that helped to create the problem in the first place.[36]

References

- ↑ 1.0 1.1 Barber, P. (2020, October 15). Santa Rosa native Tristan Harris takes on Big Tech in Netflix documentary ’The Social Dilemma’. The Press Democrat. https://www.pressdemocrat.com/article/news/santa-rosa-native-tristan-harris-takes-on-big-tech/?sba=AAS

- ↑ 2.0 2.1 2.2 Bosker, Bianca. (2020, September 22) How Burning Man Inspired The Social Dilemma's Tristan Harris To Speak Out. Bustle. Retrieved 12 March 2021 from https://www.bustle.com/entertainment/who-is-tristan-harris-the-social-dilemma-subject-is-a-google-alum

- ↑ The Social Dilemma. (2020, September 09). Retrieved March 19, 2021 from https://www.netflix.com/title/81254224

- ↑ 4.0 4.1 For Technologists. (n.d.). Retrieved March 19, 2021 from https://www.humanetech.com/technologists

- ↑ 5.0 5.1 5.2 Harris, T. (n.d.). Tristan Harris. Tristan Harris. https://www.tristanharris.com/

- ↑ Tristan Harris. (2021, March 05). Retrieved March 19, 2021 from https://en.wikipedia.org/wiki/Tristan_Harris

- ↑ Hall, M. (2021, February 04). Facebook. Retrieved March 19, 2021 from https://www.britannica.com/topic/Facebook

- ↑ (n.d.). Retrieved 12 March 2021 from https://www.imdb.com/name/nm9038510/bio?ref_=nm_ov_bio_sm

- ↑ Lachenal, Jessica. (n.d.) Future Society. Retrieved 12 March 2021 from https://thefuturesociety.org/people/firstname-name-2/

- ↑ Use Spotlight On Your Mac. (2020, October 20). Retrieved March 19, 2021 from https://support.apple.com/en-gb/HT204014

- ↑ 11.0 11.1 Harris, Tristan. (n.d.) Retrieved 12 March 2021 from https://www.linkedin.com/in/tristanharris/.

- ↑ Rao, Leena. (24 August 2010). TechCrunch. Retrieved 6 April 2021.

- ↑ 13.0 13.1 13.2 Bosker, B. (2017, January 06). The Binge Breaker. Retrieved March 19, 2021 from https://www.theatlantic.com/magazine/archive/2016/11/the-binge-breaker/501122/

- ↑ Minimized Distractions Slide Deck by Tristan Harris. Retrieved 12 March 2021 from http://www.minimizedistraction.com/

- ↑ Haselton, Todd (2018, May 10). "Google employee warned in 2013 about five psychological weaknesses that could be used to hook users". CNBC. Retrieved 20 March 2021 from https://www.cnbc.com/2018/05/10/google-employee-tristan-harris-internal-2013-presentation-warnings.html

- ↑ Ted. (n.d.). Retrieved 12 March 2021 from https://www.ted.com/speakers/tristan_harris#:~:text=Prior%20to%20founding%20the%20new,hijack%20human%20thinking%20and%20action

- ↑ How Tech Hijacks Our Brains, Corrupts Culture, And What To Do Now. (2019, May 21). Retrieved March 19, 2021 from https://www.npr.org/2019/05/15/723671325/how-tech-hijacks-our-brains-corrupts-culture-and-what-to-do-now

- ↑ "Google's new focus on well-being started five years ago with this presentation". (2018, May 10). The Verge. Retrieved 20 March 2021 from https://www.theverge.com/2018/5/10/17333574/google-android-p-update-tristan-harris-design-ethics

- ↑ Lewis, Paul (2017, October 6). "'Our minds can be hijacked': the tech insiders who fear a smartphone dystopia". The Guardian. Retrieved 20 March 2021 from https://www.theguardian.com/technology/2017/oct/05/smartphone-addiction-silicon-valley-dystopia

- ↑ Staff, R. (2017, February 07). Full transcript: Time Well Spent Founder Tristan Harris on Recode Decode. Retrieved March 19, 2021 from https://www.vox.com/2017/2/7/14542504/recode-decode-transcript-time-well-spent-founder-tristan-harris

- ↑ 21.0 21.1 21.2 (2019, July 17). Retrieved 12 March 2021 from https://voicesofvr.com/787-ethical-design-of-humane-technology-with-tristan-harris

- ↑ Who We Are. (n.d.). Retrieved March 19, 2021 from https://www.humanetech.com/who-we-are

- ↑ Our Work. (n.d.). Retrieved March 19, 2021 from https://www.humanetech.com/who-we-are#work

- ↑ Fast Company. Retrieved 6 April 2021.

- ↑ Bloom, David. (7 October 2020). Tristan Harris And “The Social Dilemma:” Big Ideas To Fix Our Social Media Ills. Forbes. Retrieved 6 April 2021.

- ↑ 26.0 26.1 26.2 26.3 Your Undivided Attention. (n.d.). Center for Humane Technology. https://www.humanetech.com/podcast

- ↑ Optimizing for engagement: Understanding the use of persuasive technology on internet platforms. (2019, June 25). Retrieved March 19, 2021 from https://www.commerce.senate.gov/2019/6/optimizing-for-engagement-understanding-the-use-of-persuasive-technology-on-internet-platforms

- ↑ Hearing on "Americans at Risk: Manipulation and Deception in the Digital Age". (2020, January 14). Retrieved March 19, 2021 from https://energycommerce.house.gov/committee-activity/hearings/hearing-on-americans-at-risk-manipulation-and-deception-in-the-digital

- ↑ Harris, T. (n.d.). How better tech could protect us from distraction. Retrieved March 19, 2021 from https://www.ted.com/talks/tristan_harris_how_better_tech_could_protect_us_from_distraction/transcript#t-697877

- ↑ Harris, T. (n.d.). How a handful of tech companies control billions of minds every day. Retrieved March 19, 2021 from https://www.ted.com/talks/tristan_harris_how_a_handful_of_tech_companies_control_billions_of_minds_every_day

- ↑ 31.0 31.1 31.2 Abosch, Kevin. (2017, July 26). Retrieved 12 March 2021 from https://www.wired.com/story/our-minds-have-been-hijacked-by-our-phones-tristan-harris-wants-to-rescue-them/

- ↑ 32.0 32.1 Cooper, Anderson. (9 April 2017). What is "brain hacking"? Tech insiders on why you should care. CBS News. Retrieved 6 April 2021.

- ↑ https://m-gorynski.medium.com/the-unintentional-comedy-a-commentary-on-the-social-dilemma-cf5c7655d071

- ↑ Barber, P. (2020, October 23). Santa Rosa Native takes on big tech in DOCUMENTARY ʽthe Social Dilemma'. Retrieved April 20, 2021, from https://www.pressdemocrat.com/article/news/santa-rosa-native-tristan-harris-takes-on-big-tech/

- ↑ Kantrowitz, A. (2020, October 07). 'Social dilemma' STAR Tristan Harris responds to criticisms of the Film, Netflix's ALGORITHM, AND... Retrieved April 20, 2021, from https://onezero.medium.com/social-dilemma-star-tristan-harris-responds-to-criticisms-of-the-film-netflix-s-algorithm-and-e11c3bedd3eb

- ↑ 36.0 36.1 Newton, C. (2019, April 24). The leader of the Time Well Spent movement has a new crusade. The Verge. https://www.theverge.com/interface/2019/4/24/18513450/tristan-harris-downgrading-center-humane-tech