Difference between revisions of "Telehealth"

Revision as of 21:25, 28 January 2022

Telehealth, often called telemedicine, refers to the systems that enable the delivery and facilitation of health and health-related services between patients and healthcare providers, including diagnosis, monitoring, advice, reminders, education, intervention, and remote admissions. Many such systems are designed to allow healthcare professionals to provide care for patients without, or alongside, an in-person office visit. Telehealth is conducted primarily online, using computers, tablets, smartphones, and other smart devices. Such devices allow patients to exchange messages with their doctors via email, video chat, secure messaging, and secure file exchange. This digital information allows doctors to actively manage health care from afar.

Telehealth began as a means to provide medical support when face-to-face interaction with providers was made difficult by distance, cost, or the unavailability of suitable transport. Providers seek to improve access to care for those living in remote and underserved areas. Further use cases include patient immobility caused by coma, paralysis, or emergency.

Telehealth also generally covers services beyond diagnosis and monitoring, such as advice and reminders. Its scope further extends to a multitude of non-clinical services, such as preventive and promotive components and campaigns. Also included is tele-education for patients or care providers through distance learning, meetings, supervision, and presentations.

Goals of telehealth include decreasing physician visit frequency, decreasing healthcare cost, decreasing mortality rates, and decreasing healthcare disparities amongst communities.

History

Inequality in access to good healthcare across social classes, age groups, geographies, and other demographics has existed since the advent of healthcare.

Before the advent of the telephone in 1876, those seeking remote medical attention and advice would send representatives or telegrams to convey information on symptoms and ailments. Given this information, doctors and representatives would relay diagnoses and treatment options home. The need to communicate health information rapidly was underscored throughout the American Civil War: telegraphs were used to aid in the care of wounded soldiers and to deliver mortality information from the front line to the White House and beyond. As early as 1879, doctors recognized the potential of remote patient care to avoid unnecessary house and hospital visits.

Modern telecommunication emerged following the commercialization of Alexander Graham Bell's telephone. With the ever-growing prevalence of wired communication, new relationships between patients and doctors began to form. Patients were now able to describe health issues to their providers in real time.

By 1905, doctors were able to use the telephone to transmit and monitor cardiac sounds and rhythms, increasing the utility of telehealth. Radio systems on ships were able to transmit patient information over long distances to doctors far from conflict zones. Video transmission of health information was pioneered in the mid-20th century, vastly improving professionals' ability to witness and understand the scope of each case.

The ability to care for astronauts aboard Apollo 11 and other space flights was extremely important to the National Aeronautics and Space Administration. Neil Armstrong, Buzz Aldrin, and other astronauts routinely reported key health information to Mission Control doctors.

Over subsequent decades, technologists enabled telehealth professionals to transmit additional medical data, such as X-rays, stethoscope audio files, electrocardiograms, and many other medical tests, over long distances. At this point, providers began veering from their initial motivations of supporting rural and hard-to-reach populations and responding to medical emergencies. The digitization of medical records, and the wider ease of access it brought, was understood to improve care for all. Additionally, it was hypothesized that cost savings from forgoing physical records could be passed on to consumers. Instant access to current and prior images using computers could also speed up the dissemination of information and assist with rapid and accurate diagnoses.

Internet use further increased participation in telehealth and rapidly altered practices: audio and images could be instantly interpreted. Customized hardware and software were created to increase the ability of carers to interpret a patient's symptoms and state of mind. The increasing ability of various software to exchange information has both inhibited and enabled increasing standardization. New technologies aim to decrease the effect of being unable to physically interact with and touch patients.

Types

Telehealth is an umbrella term for a variety of services provided to patients in all conditions. Care typically falls into four different categories: condition management, counseling and mental health therapy, patient education, and prescription refills. Specialized care is also often available.

Condition Management

While patients still require in-person consultation to gather data, including lab tests and X-rays, some clinics and practitioners provide virtual appointments and other methods of communication that enable patients to see their doctor and/or nurse routinely. Such appointments allow for ongoing and active care when a visit is not necessary, not possible, and/or not desired (as seen in the COVID-19 pandemic).

Ethical Implications

First Mover Advantages

A future after an artificial superintelligence has undergone an intelligence explosion is known as a "post-singularity" future, and by its nature may be impossible to predict. Bostrom claims that as machines get smarter, they don't just score better on intelligence exams, but instead grow more capable of accomplishing their goals by exerting their will upon the world. Bostrom breaks these abilities down into several categories in which artificial superintelligences may be significantly better than humans, and therefore better able to accomplish their goals than any human. These include cognitive self-improvement, which would allow the agent to make itself even more intelligent; strategizing, which would allow it to better achieve distant goals and overcome intelligent opposition; social manipulation, which would allow it to enlist human assistance and persuade states and other organizational actors; technological research, which would allow it to explore and realize alternative strategies; and economic productivity, which would allow it to acquire more resources to further its goals.[1]

As a result, an artificial superintelligence would be more capable of accomplishing its goals than any human, so whoever dictated these goals would have a possibly insurmountable first-mover advantage. Furthermore, this artificial superintelligence could also be used to suppress the development of any competing artificial superintelligences, as these would likely compete with the original for the resources needed to accomplish its goals. As such, the race to develop the first artificial superintelligence is in fact a race for total superiority of influence.[1] Groups competing for this title will likely consist of governments, tech firms, and black-market groups [citation needed]. Who solves the problem first could prove consequential, especially if the artificial superintelligence is developed by an ethically unscrupulous group. In this case, ASI could be harmful.

Applied Ethics

Additionally, if ASI can help humans become immortal, is this ethical? In a sense, ASI and humans would be playing God, which leaves people divided about the idea. Furthermore, there are serious implications if people become immortal. In a world with no death rate but still a birth rate, living conditions and other species around us could be seriously affected. Could this lead to overpopulation? Or would ASI provide a solution to this? What if ASI concludes that certain humans or species pose a threat to society as a whole, and its solution is to eliminate that group? What if we don't like the answers that ASI has for humanity?

Bias and AI Risk

An artificial superintelligence could also face issues with bias. Because any artificial superintelligence would need to be programmed by humans initially, it is extremely unlikely that one would be created without some level of bias. Almost all human records, including medical, housing, criminal, historical, and educational records, have some degree of bias against minorities [citation needed]. This is caused by human flaws and failures, and the question remains whether an artificial superintelligence would act to further these shortcomings or fix humanity's mistakes. As stated, experts don't know what to expect from an artificial superintelligence. Nonetheless, humans will endow any future artificial superintelligence with a vast library of bias-filled information from both the present and the past. While we don't know how an artificial superintelligence would act on this knowledge, it could motivate biased actions.

The biggest source of bias in the actualization of artificial superintelligence, and one with potentially existential consequences for humanity, is the specific nature of a superintelligent agent's goal. In principle, this bias is desirable, as the artificial superintelligence must have some goal to pursue; otherwise neither it nor we may benefit from its superintelligence being put to any purpose. Furthermore, this bias is also necessary: by the orthogonality thesis, "intelligence and final goals are orthogonal axes along which possible agents may freely vary".[2] Therefore, since the selection of a goal is independent of the level of intelligence, a human designer must ultimately make a biased decision as to what goal the artificial superintelligence will pursue. However, for several important reasons, this decision is supremely important from an ethical perspective, and could dictate the fate of the entire human species.

First, as mentioned previously, the ability of an artificial superintelligence to pursue its goals would be unmatched by any human, just as humanity's ability to pursue its goals cannot be thwarted by an ant or a beetle. In the event that the artificial superintelligence's goal was actively harmful to humans, such as that of a military agent, the results for human life would be disastrous. However, an artificial superintelligence's goal need not be explicitly malicious to have similar catastrophic effects on human life and wellbeing. Even an agent with a goal completely neutral regarding humans poses an existential threat to humanity. This is due to instrumental convergence, which is the tendency of goal-seeking agents to converge on specific subgoals regardless of the nature of their terminal goals. These are described by computer scientist Steve Omohundro as self-preservation, meaning that an agent will attempt to secure its ability to continue existing, so as to ensure its goals are accomplished; goal-content integrity, meaning that an agent will protect itself against having its goals changed; resource acquisition, meaning that an agent will seek out more resources to better accomplish its goals; cognitive enhancement, meaning that an agent will attempt to improve itself to better accomplish its goals; and technological perfection, meaning that an agent will research new technology to accomplish its goals better or more efficiently.[3][1]

An artificial agent that is neutral towards humans, or doesn't consider them an important part of its goals, will very likely still pose an existential threat to humans because of its instrumental goals. For example, an artificial superintelligence might launch a preemptive first strike against humans, calculating that we might be a threat to its future self-preservation or that we might try to change its goals to be more human-friendly. Alternatively, consider Bostrom's thought experiment regarding an artificial superintelligence whose only goal is the production of more paperclips. As the superintelligence has no care for humans in its goal, it has no problem with breaking down first the entire Earth, and then the entire universe, into raw materials for more paperclips.[1] Consider the analogous case of a human construction project that destroys an anthill: it's not that the construction workers hate the ants, they just don't care about them, and the ants are in the way of accomplishing a human goal. As AI researcher Eliezer Yudkowsky puts it, "The AI neither hates you, nor loves you, but you are made out of atoms that it can use for something else."[4]

On the other hand, Bostrom posits a future in which artificial superintelligence does have a human-aligned goal, a so-called Friendly AI, and is able to assist humanity in claiming its "cosmic endowment." In such a scenario, human flourishing could spread across the cosmos, reaching between 6×10^18 and 2×10^20 stars with relativistic probes before cosmic expansion puts the rest of the universe beyond our reach, providing habitats and resources for between 10^35 and 10^54 human beings. What's at stake in choosing the correct goal for an artificial superintelligence is not merely the extinction of the human species, but the potential happiness of up to 10^54 human lives spread among the stars.[1]

Media

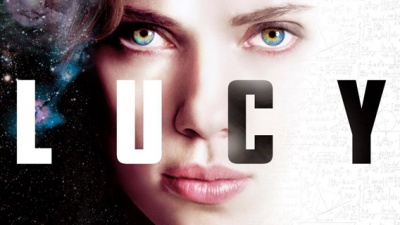

Lucy (film)

Lucy is a 2014 science-fiction drama film starring Scarlett Johansson. The plot follows Lucy, a woman who is exposed to a cognitive enhancement drug. Immediately following her exposure, Lucy begins to gain enhanced mental and physical abilities such as telepathy and telekinesis. In order to prevent her body from disintegrating due to cellular degeneration, Lucy must continue taking more of the drug. The additional doses further increase Lucy's cerebral capacity well beyond that of a normal human being, which gifts her with telekinetic and time-travel abilities. Her emotions are dulled and she grows more stoic and robotic. Her body begins to change into a black, nanomachine-like substance that spreads over the computers and electronic objects in her lab. Eventually, Lucy transforms into a complex supercomputer and becomes an all-knowing entity, far beyond the intellectual capacity of any human being. She reaches 100% of her cerebral capacity, transcends this plane of existence, and enters into the spacetime continuum. She leaves behind her knowledge on a superintelligent flash drive so that humans may learn from her insight about the universe.[5]

The story of Lucy can be likened to the concept of artificial superintelligence. Lucy is transformed into an all-knowing supercomputer with intelligence much greater than any human's. Although she is not artificial, but rather a superintelligent human, she gains the ability to create solutions to problems that are unfathomable to the human mind. In a sense, Lucy loses her humanity and evolves into a machine with an intellect greater than that of any human.

References

- ↑ Reference named "Superintelligence"; no citation text was provided in the source.

- ↑ Armstrong, Stuart (January 2013). "General Purpose Intelligence: Arguing the Orthogonality Thesis". Analysis and Metaphysics. 12: 68-84. Retrieved April 27, 2019.

- ↑ Omohundro, Stephen M. (2007). "The Nature of Self-Improving Artificial Intelligence". Singularity Summit 2007. San Francisco. Retrieved April 27, 2019.

- ↑ Yudkowsky, Eliezer (2008). "Artificial intelligence as a positive and negative factor in global risk". Global Catastrophic Risks. Oxford University Press. pp. 303-333. Retrieved April 27, 2019.

- ↑ IMDb. https://www.imdb.com/title/tt2872732/. Retrieved April 20, 2019.

- https://nickbostrom.com/superintelligence.html

- The Singularity Is Near: When Humans Transcend Biology by Ray Kurzweil

- http://yudkowsky.net/