Difference between revisions of "Predictive Policing"

Revision as of 19:11, 18 April 2021

Predictive policing is the application of analytical techniques to data with the intent to prevent future crime by making statistical predictions. This data includes information about past crimes, the local environment, and other relevant information that could be used to make predictions regarding crime.[1] It is not a method for replacing traditional policing practices such as hotspot policing, intelligence-led policing, or problem-oriented policing. Rather, it is a way to assist conventional methods by applying the knowledge gained from algorithms and statistical techniques.[2] The use of predictive policing methods in law enforcement has received praise, but also criticism that it challenges civil rights and civil liberties. Critics also argue that its algorithms could exacerbate racial biases in the criminal justice system, as demonstrated through multiple U.S. studies.[3]

In Practice

Predictive policing practices can be divided into four categories: predicting crime, predicting offenders, predicting perpetrators' identities, and predicting victims of crime.[1] They can also be categorized as place-based, in which geographic predictions about crime are made, or person-based, in which predictions are made about individuals, victims, or social groups involved in crime.[4]

The Process of Predictive Policing

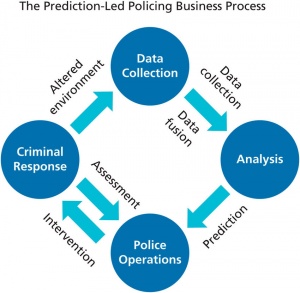

The practice of predictive policing occurs in a cycle consisting of four phases: data collection, analysis, police operations/intervention, and criminal/target response.[1][4] Data collection involves gathering crime data and regularly updating it to reflect current conditions.[4] The second phase, analysis, involves creating predictions of crime occurrences.[1] The third phase, police operations (or police interventions), involves acting on crime forecasts.[1] The fourth phase, criminal response, also called target response, refers to how offenders react to the implemented police interventions.[1] Examples of criminal responses include perpetrators stopping the crimes they are committing, changing the locations where they commit crimes, or changing the way in which they commit them. These responses make the current data unrepresentative of the new environmental conditions, and the cycle begins again.[1]

Efficacy

In 2011, TIME magazine named predictive policing one of the 50 best inventions of the year.[4] Since then, predictive policing practices have been implemented in various cities, including Los Angeles, Santa Cruz, and New York City, and the effects of these practices continue to be analyzed.[5]

Los Angeles Police Department

From 2011 to 2013, the Los Angeles Police Department conducted a randomized controlled trial of predictive policing in three divisions: the Foothill Division, the North Hollywood Division, and the Southwest Division.[6][7] The test group received leads produced by an algorithm on areas to target. The control group received leads produced by an analyst using current policing techniques. At the end of the study, officers using the algorithmic predictions had an average 7.4% drop in crime at the mean patrol level. The officers using predictions made by the analyst had an average 3.5% decrease in crime at the mean patrol level.[7] The difference in crime rates in these locations was statistically significant, but when results were adjusted for previous crime rates in these locations, arrests were lower or unchanged.[7]

New York City Police Department

In 2017, researchers analyzed the effectiveness of predictive policing methods since 2013, when the Domain Awareness System (DAS) was implemented in the NYPD.[2] As defined by the researchers, DAS “... is a citywide network of sensors, databases, devices, software, and infrastructure that informs decision making by delivering analytics and tailored information to officers' smartphones and precinct desktops.”[8] The evaluation showed that the overall crime index of New York decreased by 6%, but the researchers do not believe the decrease is entirely attributable to the use of DAS.[8] The DAS has generated an estimated $50 million in savings per year for the NYPD by increasing staff efficiency.[8]

Shreveport Police Department

RAND (Research and Development) researchers analyzed a predictive policing experiment in the Shreveport Police Department in Louisiana in 2012.[9] Three police divisions used the analytical predictive policing strategy, called "Predictive Intelligence Led Operational Targeting" (PILOT). The three control police divisions used existing policing strategies. There were no statistically significant differences in property crime levels between areas patrolled by the experimental police groups and those patrolled by the control police groups.[10]

Ethical Concerns

National organizations such as the ACLU and NAACP have issued statements of concern about the implications of predictive policing for civil rights and civil liberties, and about the possibility that algorithms could reinforce racial biases in the criminal justice system.[3][7][11] In 2016, the ACLU released a statement signed by various civil rights and research groups, including the Leadership Conference on Civil and Human Rights, Brennan Center for Justice, Center for Democracy & Technology, Center for Media Justice, Color of Change, and other special interest groups and organizations. The statement identified the following issues: "A lack of transparency about predictive policing systems prevents a meaningful, well-informed public debate", "Predictive policing systems ignore community needs", "Predictive policing systems threaten to undermine the constitutional rights of individuals", "Predictive technologies are primarily being used to intensify enforcement, rather than to meet human needs.", "Police could use predictive tools to anticipate which officers might engage in misconduct, but most departments have not done so", and "Predictive policing systems are failing to monitor their racial impact."[12]

The Fourth Amendment

The Fourth Amendment is the constitutional restriction on the U.S. government’s ability to obtain personal or private information. It protects citizens from unreasonable searches and seizures as determined through case law.[1] Law professor Fabio Arcila Jr. claims that predictive policing usage could “... fundamentally undermine the Fourth Amendment’s protections...”.[13] Predictive policing methods raise specific concerns about police-citizen encounters such as “investigative detentions, or stops, which must be preceded by reasonable, articulable suspicion of criminal activity.”[14] Andrew G. Ferguson, a law professor at the American University Washington College of Law, explains in a law review article that an encounter can “be predicated on the aggregation of specific and individualized, but otherwise noncriminal, factors.” He argues that otherwise noncriminal factors “might create a predictive composite that satisfied the reasonable suspicion standard” when combined with insights from predictive policing algorithms. Researchers Sarah Brayne, Alex Rosenblat, and Danah Boyd similarly note that “... predictive analytics may effectively make it easier to meet the reasonable suspicion standard in practice, thus justifying more police stops.”[4] Overpolicing specific areas raises ethical concerns that are discussed in the sections below.

Utilization of Biased Data in Algorithms

Predictive algorithms in policing are often based on previous arrest datasets, which is where bias can be introduced. A 2013 study by the New York Civil Liberties Union found that while black and Latino males between the ages of fourteen and twenty-four made up only 4.7 percent of the city’s population, they accounted for 40.6 percent of the stop-and-frisk checks by police.[15] This pattern of disproportionately policing minorities leads to a disproportionate number of minorities being charged with crimes. A key factor in how an algorithm estimates recidivism is past "involvement" with the police, which is one way racial bias can be introduced.[16] These algorithms can serve to perpetuate racial profiling. Social justice advocate Dorothy Roberts also argues that factors that go into these algorithms, such as incarceration history, level of education, neighborhood of residence, and membership in gangs or organized crime groups, can produce algorithms that recommend over-policing minority or low-income communities.[17] Further, a study of AI has found that policing algorithms can lack feedback loops and can target minority neighborhoods.[18]

Santa Cruz Police Department

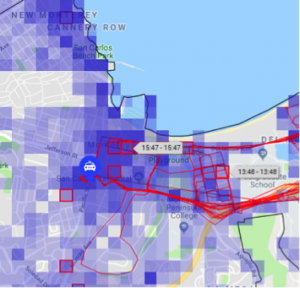

The Santa Cruz Police Department (SCPD) was among the first police departments to conduct predictive policing studies, in 2010.[19] The six-month study used a data-driven policing program that directed officers to hotspot locations before crimes occurred.[19] The program applied an earthquake aftershock algorithm to verified crime data to predict future crime within a designated location.[20] The patterns were derived from five years of crime data (between 1,200 and 2,000 counts) comprising assaults, burglaries, batteries, and other crimes.[20] PredPol, a California-based company, backed the Santa Cruz program.[21] Predictive policing initially led to a 19% drop in burglaries.[21] However, 10 years later, Santa Cruz became the first U.S. city to ban predictive policing technology.[22] Santa Cruz’s first African-American mayor, Justin Cummings, proposed the ban in 2019.[23] Cummings told the Thomson Reuters Foundation, “We have technology that could target people of color in our community - it’s technology that we don’t need.”[23] The Santa Cruz City Council prohibited the technology along with facial-recognition technologies.[22] Santa Cruz Police Chief Andy Mills said, “Predictive policing has been shown over time to put officers in conflict with communities rather than working with communities.”[22] Concerns that predictive policing practices disproportionately affect racial minorities were reignited by the summer 2020 Black Lives Matter protests over the death of George Floyd. The renewed push for police reform drew attention to the fact that PredPol used only three data points in its algorithm: crime type, location, and time/date.[22] These data points were determined to be incapable of making predictions that took officer-initiated bias into consideration.[22] The decision to ban predictive policing was backed by civil liberty and justice groups such as the ACLU of Northern California, the Electronic Frontier Foundation, and the NAACP of Santa Cruz.
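
The "earthquake aftershock" idea mentioned above can be illustrated with a self-exciting point process, in which each past crime temporarily raises the expected rate of further crimes nearby. The sketch below is only an illustration of that general idea and is not PredPol's actual model: the function name, the exponential decay kernel, and every parameter value are assumptions chosen for readability.

```python
import math


def aftershock_intensity(t, past_event_times, background=0.5, boost=0.3, decay=1.0):
    """Expected crime rate at time t for one location (hypothetical model).

    background: assumed constant baseline rate for the location.
    boost:      assumed extra expected crimes triggered by each past crime.
    decay:      assumed speed at which that extra risk fades over time.
    """
    rate = background
    for s in past_event_times:
        if s < t:  # only crimes that already happened can raise the rate
            rate += boost * decay * math.exp(-decay * (t - s))
    return rate


# Risk is elevated shortly after a crime at time 1.0 and fades back
# toward the baseline as time passes.
soon_after = aftershock_intensity(1.1, [1.0])
much_later = aftershock_intensity(5.0, [1.0])
print(soon_after > much_later > 0.5)
```

In a sketch like this, a location with a recent cluster of recorded crimes would be ranked as a higher-risk hotspot than one with the same baseline but no recent events.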

Oakland, California Study

A study applied the predictive algorithm PredPol to 2010 crime data from the city of Oakland, California, to predict daily crime expectations for 2011. The results directed police to target black communities at nearly twice the rate of white communities, despite public health data showing roughly equivalent drug usage across races.[24] Similar results were found when comparing low-income neighborhoods with higher-income neighborhoods. The researchers conducting the study predicted that a bias-amplifying feedback loop could be created when non-white and low-income areas are targeted and heavily policed, as suggested by the algorithm. The higher number of crime reports from those areas would be accounted for in future predictions, and minorities would face even higher concentrations of patrols.[24]
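
The feedback loop described above can be made concrete with a toy simulation. This is a deliberately simplified sketch, not the method used in the Oakland study: the patrol rule (always patrol the area with the most recorded crime), the assumption that crime is only recorded where police patrol, and all numbers are hypothetical.

```python
def simulate_feedback(recorded, true_rate, days):
    """Return recorded-crime counts per area after `days` of patrols.

    recorded:  initial recorded-crime counts per area (hypothetical).
    true_rate: actual daily crimes per area, identical in this example.
    Assumed rule: each day police patrol only the area with the most
    recorded crime, and crime is only recorded where they patrol.
    """
    recorded = list(recorded)  # copy so the caller's list is not modified
    for _ in range(days):
        target = max(range(len(recorded)), key=lambda i: recorded[i])
        recorded[target] += true_rate[target]
    return recorded


# Two areas with the same true crime rate; area 0 starts with one extra record.
print(simulate_feedback(recorded=[11, 10], true_rate=[5, 5], days=10))  # [61, 10]
```

Although both areas have the same underlying crime rate, the small initial difference in records grows into a large one, because new data is collected only where the earlier data sent the patrols.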

Broward County, Florida Study

Another study, performed in 2016 by ProPublica investigative journalists, compared actual outcomes with predictions made by an algorithm called COMPAS (Correctional Offender Management Profiling for Alternative Sanctions). COMPAS assigns a risk score to a convicted criminal to estimate whether they are likely to commit another offense (recidivate) in the next two years.[25] Over 7,000 risk scores of individuals arrested in 2013 and 2014 in Broward County, Florida were compared with the offenders’ actual outcomes. The journalists found that black defendants were almost twice as likely (45%) to be misclassified as high risk as white defendants (23%). Conversely, their analysis showed that white defendants were mislabeled as low risk almost twice as often (48%) as black defendants (28%).[26]
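
The two kinds of misclassification compared above correspond to the false positive rate (people labeled high risk who did not reoffend) and the false negative rate (people labeled low risk who did reoffend). The sketch below shows how such rates can be computed for one group. The function name and the records are made up for illustration and are not ProPublica's data or code; the function also assumes the group contains at least one person who reoffended and one who did not.

```python
def error_rates(records):
    """Return (false_positive_rate, false_negative_rate) for one group.

    records: list of (predicted_high_risk, reoffended) boolean pairs.
    """
    fp = sum(1 for pred, actual in records if pred and not actual)
    tn = sum(1 for pred, actual in records if not pred and not actual)
    fn = sum(1 for pred, actual in records if not pred and actual)
    tp = sum(1 for pred, actual in records if pred and actual)
    false_positive_rate = fp / (fp + tn)  # share of non-reoffenders labeled high risk
    false_negative_rate = fn / (fn + tp)  # share of reoffenders labeled low risk
    return false_positive_rate, false_negative_rate


# Made-up records: 4 people who did not reoffend, 4 who did.
toy_group = [
    (True, False), (True, False), (False, False), (False, False),
    (True, True), (True, True), (True, True), (False, True),
]
print(error_rates(toy_group))  # (0.5, 0.25)
```

Computing these two rates separately for each demographic group and comparing them is one way to check whether an algorithm's mistakes fall more heavily on one group than another.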

Smart Policing Initiative (SPI)

One of the most notable steps the United States Government has taken to advance predictive policing is its support of the Smart Policing Initiative (SPI). As stated by the Bureau of Justice Assistance, SPI “supports law enforcement agencies in building evidence-based, data-driven law enforcement tactics and strategies that are effective, efficient, and economical.”[27] In 2015, SPI received a boost when the Justice Department awarded the initiative over $2 million in funding for further research, development, and improvement of the technologies used in predictive policing. SPI started in just a few communities but has since grown to over 40.[28] The United States Government appears committed to SPI and is trying to leverage advanced technology and analytical tools to optimize policing despite backlash over ethical concerns.

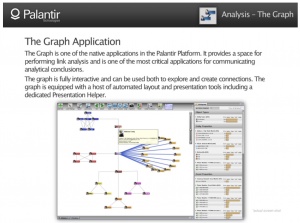

One of the government’s closest partners in SPI is Palantir, one of the largest data analytics companies in the world. Palantir offers law enforcement agencies an advanced database of information on virtually anybody. Many people oppose SPI because they regard it as an invasion of privacy and claim that the technology is inherently racist.[29] Activist Jacinta Gonzalez from the Los Angeles area says, “Palantir is not actually improving things. It's expanding the power that police have. And it’s minimizing the right that communities have to fight back, because many times, the surveillance is done in secretive ways.” Maria Velez, a criminologist at the University of Maryland, adds, “The focus of a data-driven surveillance system is to put a lot of innocent people in the system. And that means that many folks who end up in the Palantir system are predominantly poor people of color, and who have already been identified by the gaze of police.”[30] The government appears likely to leverage technology wherever possible, including in policing. How these technological advancements shape policing in the United States in the short and long term will depend on how the government balances public backlash against the potential to improve policing.

The Covid-19 Pandemic Results

Health and Safety

During the coronavirus pandemic of 2020 and 2021, health and safety threats posed by densely populated incarceration facilities resulted in a reduction in criminal arrests and the early release of many inmates. Many major cities chose to release non-violent offenders from jail in order to keep individuals safer. Research suggests that crime levels were not affected by the release of so many inmates, and that police departments did not have to spend more money or resources protecting civilians. This suggests that cities could keep fewer individuals locked up while maintaining standard safety levels for a community.[32]

Racial awareness in 2020

Predictive technology is meant to reduce costs for police forces by directing police surveillance to crime-heavy areas, but in practice this can justify extreme over-policing in struggling neighborhoods. Inequitable predictive policing technology brought more challenges to neighborhoods already suffering from poverty. Follow-up testing found that predictive algorithms did not save any money for police departments. Because predictive technology assigns individuals a score for their level of risk, it becomes a problematic assessment that removes the national notion of presumed innocence, even if the algorithm does not possess the same biases as a human officer.[33]

In May and June of 2020, following the death of George Floyd, the Black Lives Matter movement saw a wave of support, raising society's awareness of social justice issues. This wave included calls to defund the police. Major cities have begun to reduce unnecessary police department spending and technology. The city of Boston banned the use of facial recognition technology for criminal surveillance.[34]

The dangers of over-policing struggling neighborhoods are plentiful, but the most significant is a major loss of trust between police and communities. Over-policing also perpetuates racial biases among officers, who could choose to give some individuals warnings and then arrest someone else for the same crime.[35]

References

- ↑ 1.0 1.1 1.2 1.3 1.4 1.5 1.6 1.7 Perry, W., Mcinnis, B., Price, C., Smith, S., & Hollywood, J. (2013). Predictive Policing: The Role of Crime Forecasting in Law Enforcement Operations. DOI:10.7249/rr23. Retrieved from https://www.rand.org/pubs/research_reports/RR233.html

- ↑ 2.0 2.1 Meijer, A., & Wessels, M. (2019). Predictive Policing: Review of Benefits and Drawbacks. International Journal of Public Administration, 42(12), 1031-1039. DOI:10.1080/01900692.2019.1575664. Retrieved from https://www.tandfonline.com/doi/full/10.1080/01900692.2019.1575664

- ↑ 3.0 3.1 Lau, T. (2020, April 01). Predictive Policing Explained. Retrieved from https://www.brennancenter.org/our-work/research-reports/predictive-policing-explained

- ↑ 4.0 4.1 4.2 4.3 4.4 Brayne, S., Rosenblat, A., & Boyd, D. (2015). Predictive Policing. DATA & CIVIL RIGHTS: A NEW ERA OF POLICING AND JUSTICE. Retrieved from https://datacivilrights.org/pubs/2015-1027/Predictive_Policing.pdf.

- ↑ Friend, Z., M.P.P. (2013, April 09). Predictive Policing: Using Technology to Reduce Crime. Retrieved from https://leb.fbi.gov/articles/featured-articles/predictive-policing-using-technology-to-reduce-crime

- ↑ Mohler, G. O., Short, M. B., Malinowski, S., Johnson, M., Tita, G. E., Bertozzi, A. L., & Brantingham, P. J. (2015) Randomized Controlled Field Trials of Predictive Policing, Journal of the American Statistical Association, 110:512, 1399-1411, DOI: 10.1080/01621459.2015.1077710. Retrieved from https://www.tandfonline.com/doi/full/10.1080/01621459.2015.1077710

- ↑ 7.0 7.1 7.2 7.3 Brantingham, J., Valasik, M., & Mohler, G. O. (2018) Does Predictive Policing Lead to Biased Arrests? Results From a Randomized Controlled Trial, Statistics and Public Policy, 5:1, 1-6, DOI: 10.1080/2330443X.2018.1438940. Retrieved from https://www.tandfonline.com/doi/full/10.1080/2330443X.2018.1438940

- ↑ 8.0 8.1 8.2 Levine, E. S., Tisch, J. S., Tasso, A. S., & Joy, M. S. (2017). The New York City Police Department’s Domain Awareness System: 2016 Franz Edelman Award Finalists [Abstract]. Informs Journal on Applied Analytics, 47(1), 1-109. doi:10.1287/inte.2016.0860. Retrieved from https://www.researchgate.net/publication/312542282_The_New_York_City_Police_Department's_Domain_Awareness_System_2016_Franz_Edelman_Award_Finalists

- ↑ Hunt, P., Saunders, J., & Hollywood, J. S. (2014, July 01). Evaluation of the Shreveport Predictive Policing Experiment. Retrieved from https://www.rand.org/pubs/research_reports/RR531.html

- ↑ Moses, L. B., & Chan, J.(2018) Algorithmic prediction in policing: assumptions, evaluation, and accountability, Policing and Society, 28:7, 806-822, DOI: 10.1080/10439463.2016.1253695. Retrieved from https://www.tandfonline.com/doi/full/10.1080/10439463.2016.1253695

- ↑ Moravec, E. R. (2019, September 10). Do Algorithms Have a Place in Policing? Retrieved from https://www.theatlantic.com/politics/archive/2019/09/do-algorithms-have-place-policing/596851/

- ↑ Statement of Concern About Predictive Policing by ACLU and 16 Civil Rights Privacy, Racial Justice, and Technology Organizations. (n.d.). Retrieved from https://www.aclu.org/other/statement-concern-about-predictive-policing-aclu-and-16-civil-rights-privacy-racial-justice

- ↑ Arcila, F . (2013) Nuance, Technology, and the Fourth Amendment: A Response to Predictive Policing and Reasonable Suspicion, 63 Emory L. J. Online 2071. Retrieved from https://scholarlycommons.law.emory.edu/elj-online/30

- ↑ Ferguson, A. G. (2011). Crime Mapping and the Fourth Amendment: Redrawing “High-Crime Areas ”. Hastings Law Journal, 63(1), 179-232. Retrieved from https://repository.uchastings.edu/hastings_law_journal/vol63/iss1/4.

- ↑ Bellin, Jeffrey. “The Inverse Relationship between the Constitutionality and Effectiveness of New York City 'Stop and Frisk'.” SSRN, 25 Mar. 2014, Retrieved from papers.ssrn.com/sol3/papers.cfm?abstract_id=2413935.

- ↑ O'Neil, C. (2016, June 21). Weapons of Math Destruction. weaponsofmathdestructionbook.com. Retrieved from https://weaponsofmathdestructionbook.com/.

- ↑ Roediger, B. D., & Roberts, D. E. (2019, April 10). Digitizing the Carceral State. Harvard Law Review. Retrieved from https://harvardlawreview.org/2019/04/digitizing-the-carceral-state/.

- ↑ Where in the World is AI? Responsible & Unethical AI Examples. (n.d.). Retrieved from https://map.ai-global.org/.

- ↑ 19.0 19.1 Santa Cruz, C. (2012, March). The city of Santa Cruz. Retrieved from https://www.cityofsantacruz.com/government/city-departments/city-manager/community-relations/city-annual-report/march-2012-newsletter/predictive-policing

- ↑ 20.0 20.1 Friend, Z., M.P.P. (2013, April 09). Predictive Policing: Using Technology to Reduce Crime. Retrieved from https://leb.fbi.gov/articles/featured-articles/predictive-policing-using-technology-to-reduce-crime

- ↑ 21.0 21.1 Rey, E., & Rey, E. (2012, December 18). Predictive policing in Santa Cruz leads to 19 percent drop in burglaries. Retrieved from https://www.predpol.com/predictive-policing-in-santa-cruz-leads-to-19-percent-drop-in-burglaries/

- ↑ 22.0 22.1 22.2 22.3 22.4 Ibarra, N., & Sentinel, S. (2021, June 24). Santa Cruz, Calif., Bans predictive Policing Technology. Retrieved from https://www.govtech.com/public-safety/Santa-Cruz-Calif-Bans-Predictive-Policing-Technology.html

- ↑ 23.0 23.1 Asher-Schapiro, A. (2020, June 19). In a U.S. FIRST, Santa Cruz set to ban predictive policing technology. Retrieved from https://www.santacruztechbeat.com/2020/06/18/in-a-u-s-first-santa-cruz-set-to-ban-predictive-policing-technology/

- ↑ 24.0 24.1 Ehrenberg, R. (2017, March 8). Data-driven crime prediction fails to erase human bias. Retrieved from https://www.sciencenews.org/blog/science-the-public/data-driven-crime-prediction-fails-erase-human-bias

- ↑ Larson, J., Angwin, J., Mattu, S., & Kirchner, L. (2016, May 23). Machine Bias. Retrieved from https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing

- ↑ Angwin, J., Larson, J., Kirchner, L., & Mattu, S. (2016, May 23). How We Analyzed the COMPAS Recidivism Algorithm. Retrieved from https://www.propublica.org/article/how-we-analyzed-the-compas-recidivism-algorithm

- ↑ “Smart Policing Initiative.” Bureau of Justice Assistance, bja.ojp.gov/sites/g/files/xyckuh186/files/Publications/SmartPolicingFS.pdf.

- ↑ “Justice Department Announces More than $2 Million for Smart Policing Initiative.” The United States Department of Justice, 28 Sept. 2015, www.justice.gov/opa/pr/justice-department-announces-more-2-million-smart-policing-initiative.

- ↑ Hvistendahl, Mara. “How the LAPD and Palantir Use Data to Justify Racist Policing.” The Intercept, 30 Jan. 2021, theintercept.com/2021/01/30/lapd-palantir-data-driven-policing/.

- ↑ Haskins, Caroline. “Scars, Tattoos, And License Plates: This Is What Palantir And The LAPD Know About You.” BuzzFeed News, BuzzFeed News, 1 Oct. 2020, www.buzzfeednews.com/article/carolinehaskins1/training-documents-palantir-lapd.

- ↑ Haskins, Caroline. “Scars, Tattoos, And License Plates: This Is What Palantir And The LAPD Know About You.” BuzzFeed News, BuzzFeed News, 1 Oct. 2020, www.buzzfeednews.com/article/carolinehaskins1/training-documents-palantir-lapd.

- ↑ Jacob Kang-Brown Jasmine Heiss, & Heiss, J. (2020, April 01). The scale of the covid-19-related jail population decline. Retrieved April 09, 2021, from https://www.vera.org/publications/covid19-jail-population-decline

- ↑ Sassaman, H. (n.d.). Covid-19 proves it's time to abolish 'predictive' policing algorithms. Retrieved April 09, 2021, from https://www.wired.com/story/covid-19-proves-its-time-to-abolish-predictive-policing-algorithms/

- ↑ Jarmanning, A. (2020, June 24). Boston bans use of facial recognition Technology. it's the 2ND-LARGEST city to do so. Retrieved April 09, 2021, from https://www.wbur.org/news/2020/06/23/boston-facial-recognition-ban

- ↑ Sassaman, H. (n.d.). Covid-19 proves it's time to abolish 'predictive' policing algorithms. Retrieved April 09, 2021, from https://www.wired.com/story/covid-19-proves-its-time-to-abolish-predictive-policing-algorithms/