Difference between revisions of "Parental Controls"

m (Ponette moved page Role of Parents in Internet Censorship to Parental Controls: Prof. Pasquetto and I discussed new name.)


(98 intermediate revisions by 7 users not shown)


{{Nav-Bar|Topics#A}}<br>

'''Parental controls''' allow parents to control which websites their children can see, decide when their children have access to the internet, track their children's internet history, limit accessible content, block outgoing content, and more. <ref>J. D. Biersdorfer. [https://www.nytimes.com/2018/03/02/technology/personaltech/setting-up-parental-controls-on-pcs-and-macs.html "Tools to Keep Your Children in Line When They’re Online"] (2018, March 02). ''New York Times''. Retrieved April 01, 2021. </ref> <ref name=FTC> Federal Trade Commission. (2018, March 13). Parental controls. Retrieved April 06, 2021, from https://www.consumer.ftc.gov/articles/0029-parental-controls </ref> The introduction of parental control software has raised ethical concerns over the last decade. <ref name = who>Thierer, Adam. (2019). Parental Controls & Online Child Protection. The Progress & Freedom Foundation, 45-51. https://poseidon01.ssrn.com/delivery.php?ID=396031102089030101016075082091103088058033095009026094104027126098086093111106075097029031099028051096054088127017025008122107111073000085023006114113101117080113006077037031064068080020002099009101067004068104007107105022107078007111120115083101094&EXT=pdf&INDEX=TRUE</ref> Parental controls are most often used for children between the ages of 7 and 16, but parents with “very young children or older teens often have very little need for parental control technologies.” <ref name="who"/> Ethical concerns include a loss of trust between parents and children <ref> Managing Screen Time and Privacy | Could Parental Control Apps Do More Harm than Good? https://techden.com/blog/screen-time-privacy-parental-control-apps/ </ref> and a decreased sense of autonomy that reduces opportunities for self-directed learning. <ref> Controlling Parents – The Signs And Why They Are Harmful https://www.parentingforbrain.com/controlling-parents/ </ref> Additionally, various monitoring tools may be used with or without the child's consent. <ref name=FTC/>

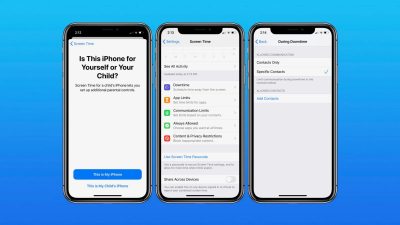

[[File:Phone.png|400px|thumbnail|Apple's built-in parental controls. <ref>amaysim. (2020, November 3). How to set up parental controls on your iPhone, iPad or Android device. amaysim. https://www.amaysim.com.au/blog/world-of-mobile/set-up-parental-controls-apple-android </ref>]]


==Overview==

Parental control software typically allows parents to customize internet permissions on their children's devices, or on their children's accounts on shared devices. <ref>The Business Insider. (2020, September 18). The best internet parental control systems. Newstex LLC. https://go-gale-com.proxy.lib.umich.edu/ps/i.do?p=STND&u=umuser&id=GALE%7CA635821966&v=2.1&it=r&sid=summon</ref> The account administrator, typically a parent, can change internet permissions for the entire household, while the accounts managed by the administrator cannot. This allows parents to establish rules for their children without having to enforce them in person.
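The administrator arrangement described above amounts to a simple permission check. The sketch below is a minimal illustration under assumed names: `Account`, `Household`, `set_blocked`, and the category labels are all hypothetical, and this is not how Windows or any other product actually stores household settings.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Account:
    name: str
    is_admin: bool = False


@dataclass
class Household:
    # Hypothetical model: child account name -> blocked content categories.
    blocked: dict[str, set[str]] = field(default_factory=dict)

    def set_blocked(self, actor: Account, child: str, categories: set[str]) -> None:
        # Only the administrator account may change household permissions.
        if not actor.is_admin:
            raise PermissionError(f"{actor.name} cannot change household permissions")
        self.blocked[child] = set(categories)


parent = Account("parent", is_admin=True)
kid = Account("kid")
home = Household()
home.set_blocked(parent, "kid", {"gambling"})  # allowed: parent is the administrator
try:
    home.set_blocked(kid, "kid", set())        # rejected: a child account
except PermissionError as err:
    print(err)  # kid cannot change household permissions
```

Keeping the check inside the one method that changes settings means a child account cannot loosen its own rules through any other path in this model.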

Parental control software has become more prevalent in recent years. For example, basic parental controls now come built into standard operating systems such as Windows. <ref>Microsoft. (n.d.). Parental consent and Microsoft child accounts. Microsoft. https://support.microsoft.com/en-us/account-billing/parental-consent-and-microsoft-child-accounts-c6951746-8ee5-8461-0809-fbd755cd902e</ref>

Parental control software works in three main ways:

* '''Complete disablement''' lets parents cut off their child's internet connection entirely. This can range from disabling Wi-Fi during a scheduled interval, such as bedtime, to turning off internet access indefinitely, for example as a punishment.

* '''Content blocking''' filters the content that children can see, and different accounts can have different age-appropriate settings. In larger households with several devices, this lets each child view content that the account administrator considers appropriate for that child's age.


* '''Monitoring''' gives parents access to their children's complete browsing history at any time. This lets parents see how their children navigate the internet without setting hard boundaries.
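The three modes above can be combined into one per-child policy check. This is a minimal sketch, assuming a hypothetical `ChildPolicy` with a nightly offline window, a suspension flag for indefinite disablement, a set of blocked categories, and a history log for monitoring. It also assumes each request already arrives labeled with a content category, which real products have to work out for themselves.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import time


@dataclass
class ChildPolicy:
    # Hypothetical per-child settings; real products expose their own options.
    offline_start: time                      # e.g. bedtime
    offline_end: time                        # e.g. morning
    blocked_categories: set[str] = field(default_factory=set)
    suspended: bool = False                  # indefinite disablement
    history: list[str] = field(default_factory=list)  # monitoring log

    def _in_offline_window(self, now: time) -> bool:
        # The window may wrap past midnight (for example 21:00 -> 07:00).
        if self.offline_start <= self.offline_end:
            return self.offline_start <= now < self.offline_end
        return now >= self.offline_start or now < self.offline_end

    def allow(self, url: str, category: str, now: time) -> bool:
        self.history.append(url)             # monitoring: log every attempt
        if self.suspended or self._in_offline_window(now):
            return False                     # complete disablement
        return category not in self.blocked_categories  # content blocking


kid = ChildPolicy(time(21, 0), time(7, 0), {"violence"})
print(kid.allow("https://example.org/news", "news", time(16, 30)))      # True
print(kid.allow("https://example.org/game", "violence", time(16, 30)))  # False
print(kid.allow("https://example.org/news", "news", time(22, 15)))      # False
print(kid.history)  # all three attempts are logged, including blocked ones
```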

==History==

Parental controls first emerged as content filters. <ref> (Clark, N). Content Filter Technology. Retrieved 1 April 2021, from https://www.britannica.com/technology/content-filter </ref> Content filters, also called internet filters, are software that assesses online content and blocks content containing specific words or images. Although the Internet was created to make information more accessible, open access to all kinds of information came to be seen as problematic because children could encounter obscene or offensive material. Content filters restrict what users may view on their computers by screening Web pages and e-mail messages for category-specific content. Such filters can be used by individuals, businesses, or even countries to regulate Internet usage. <ref> Web Content Filtering. Retrieved 1 April 2021, from https://www.webroot.com/us/en/resources/glossary/what-is-web-content-filtering </ref>
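Keyword screening of the kind described above can be sketched in a few lines. The category names and keyword lists are hypothetical placeholders, and whole-word matching on lowercased text is only one simple way to do it; commercial filters are considerably more sophisticated.

```python
from __future__ import annotations

import re

# Hypothetical category -> keyword lists. Real filters rely on much larger,
# curated lists and often on image analysis as well.
BLOCKLIST = {
    "gambling": {"casino", "poker", "sportsbook"},
    "violence": {"gore"},
}


def matched_categories(text: str) -> set[str]:
    """Return every category with at least one keyword appearing as a whole word."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return {category for category, keywords in BLOCKLIST.items() if words & keywords}


def is_blocked(text: str, blocked_categories: set[str]) -> bool:
    """Block the page if it matches any category this account has blocked."""
    return bool(matched_categories(text) & blocked_categories)


page = "Play online poker at our casino tonight!"
print(matched_categories(page))                            # {'gambling'}
print(is_blocked(page, {"gambling"}))                      # True
print(is_blocked("Homework help: algebra", {"gambling"}))  # False
```

Matching whole words rather than substrings avoids flagging innocent words that merely contain a keyword, at the cost of missing variants such as plurals or deliberate misspellings, which is one reason keyword filters are easy to evade.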

===Uses for Parental Control Software===

====Protection from Harmful Content====

The vastness of the internet places a heavy burden on parents trying to protect their children from harmful content. Because children may lack the skills to navigate online environments safely, parental controls can be a helpful tool to guide them. While the internet is an integral part of children's schooling, it also exposes them to potentially traumatic content they would not otherwise see. Parental control software lets parents control what content their children can access, even when they are not physically present to supervise. There is evidence that parents who are involved in their children’s internet use in some way are more likely to encourage safe internet practices. <ref>Gallego, Francisco, A. (2020, August). Parental monitoring and children's internet use: The role of information, control, and cues. ScienceDirect. https://www-sciencedirect-com.proxy.lib.umich.edu/science/article/pii/S0047272720300724?via%3Dihub</ref>

====Recommendations for Controlling Content for Children====

Third-party parental control services such as Bark, Qustodio, and Net Nanny allow parents to keep track of their children's devices. Prices for these services range from $50 to more than $100 for households with several children to monitor. These costs include 24/7 device monitoring and full visibility into how children use their devices. <ref>Knorr, Caroline. (2021, March). Parents' Ultimate Guide to Parental Controls. https://www.commonsensemedia.org/blog/parents-ultimate-guide-to-parental-controls#What%20are%20the%20best%20parental%20controls%20for%20setting%20limits%20and%20monitoring%20kids?</ref> However, these services can only monitor the accounts that parents know their children are using, and in some cases they need the account passwords in order to monitor activity. <ref>Orchilles, Jorge. (2010, April). Parental Control. https://www.sciencedirect.com/topics/computer-science/parental-control</ref>

====Health Benefits====

Parental controls can also help children live healthier lifestyles. A 2016 study found that about 59% of parents believed their children were addicted to their phones or other electronic devices. <ref> Teenage Cellphone Addiction https://www.psycom.net/cell-phone-internet-addiction </ref> As children receive smartphones at increasingly younger ages, it is important for parents to be able to limit their children's device usage in order to lower the chance that they become addicted to their phones later on. Addiction to phones and other electronic devices has several negative symptoms, ranging from psychological (anxiety and depression) to physical (eye strain and neck strain). <ref> Signs and Symptoms of Cell Phone Addiction https://www.psychguides.com/behavioral-disorders/cell-phone-addiction/signs-and-symptoms/ </ref> Less time spent on phones leads to more physical activity and more in-person social interaction, which makes for a more well-rounded lifestyle. The American Academy of Pediatrics found that limiting children's screen time improves their physical and mental health and helps them develop academically, creatively, and socially. <ref name=benefits> Miller, M. (2020, February 24). Benefits Of Limiting Screen Time For Children. Retrieved from https://web.magnushealth.com/insights/benefits-of-limiting-screen-time-for-children#:~:text=It%20is%20amazing%20to%20see,online%20and%20age%2Dinappropriate%20videos. </ref>

==Parental Control Apps==

[[File:Qustodio.jpeg|250px|thumbnail|An example of what information parents could view about their children's device usage with Qustodio. <ref name="spy"/>]]

Aside from the parental controls built into standard operating systems, parents can also download apps to set up restrictions and monitoring. Life360 is a popular app that gives parents access to their children's location at all times. It also offers driving reports, so parents can see whether their teenager is speeding. Life360 has been controversial, with some people even calling it the "Big Brother" of apps, and some teens have said it has ruined their relationships with their parents. <ref> Lenore Skenazy, Life 360 Should Be Called “Life Sentence 360”</ref> Net Nanny is an app that can block inappropriate content online and “can also record instant messaging programs, monitor Internet activity, and send daily email reports to parents.” <ref>Kanable, Rebecca. (2004, November). Policing Online: From Internet Safety to Employee Management and Parolee Monitoring, Technology Can Help. U.S. Department of Justice. https://www.ojp.gov/ncjrs/virtual-library/abstracts/policing-online-internet-safety-employee-management-and-parolee </ref>

Qustodio, for example, provides parents with activity summaries for each of their children. <ref name = spy>Teodosieva, Radina. (2015, October 16). Spy me, please! The University of Amsterdam. http://mastersofmedia.hum.uva.nl/blog/2015/10/16/spy-me-please-the-self-spying-app-that-you-need/ </ref> These summaries include total screen time, a breakdown of how much time was spent in each app, a list of the words the child searched for on the internet, and a tab alerting parents to potentially questionable activity. <ref name="spy"/>
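A summary like the one described above is essentially an aggregation over usage records. The sketch below assumes hypothetical inputs (a list of `(app, minutes)` sessions, a list of search strings, and a set of watch terms) and a hypothetical `summarize` function; it does not reflect Qustodio's actual data model or alerting logic.

```python
from collections import Counter


def summarize(sessions, searches, watch_terms):
    """Build an activity summary from hypothetical usage records.

    sessions: list of (app_name, minutes) pairs
    searches: list of search strings the child typed
    watch_terms: lowercase words that should raise an alert for the parent
    """
    per_app = Counter()
    for app, minutes in sessions:
        per_app[app] += minutes
    # Flag any search containing a watch term (simple substring check).
    alerts = [q for q in searches if any(t in q.lower() for t in watch_terms)]
    return {
        "total_minutes": sum(per_app.values()),
        "per_app": dict(per_app.most_common()),  # largest share of time first
        "searches": list(searches),
        "alerts": alerts,
    }


report = summarize(
    sessions=[("YouTube", 45), ("Messages", 20), ("YouTube", 30)],
    searches=["science fair ideas", "how to buy vape"],
    watch_terms={"vape"},
)
print(report["total_minutes"])  # 95
print(report["per_app"])        # {'YouTube': 75, 'Messages': 20}
print(report["alerts"])         # ['how to buy vape']
```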

A more invasive option is a keylogger service. A keylogger records every keystroke made on a device and sends the parent a file, usually by email, containing everything logged on the child's device. These services can also provide access to contact lists and internet search histories. <ref name=key> Reporter, S. (2019, November 29). Parenting benefits of keylogger program. Retrieved April 08, 2021, from https://www.sciencetimes.com/articles/24367/20191129/parenting-benefits-of-keylogger-program.htm </ref>

About “16% of parents report using parental control apps to monitor and restrict their teens’ mobile online activities,” and some parents are more likely than others to use these apps. <ref name = safety>Ghosh, Arup K, et al. (2018, April 26). A Matter of Control or Safety? Examining Parental Use of Technical Monitoring Apps on Teens’ Mobile Devices. Association for Computing Machinery Digital Library. Association for Computing Machinery. https://dl.acm.org/doi/pdf/10.1145/3173574.3173768 </ref> Two factors associated with higher rates of parental control app use are parents who are “low autonomy granting” and children who have been “victimized online” or have had “peer problems.” <ref name="safety"/>


==Bypassing Parental Control==

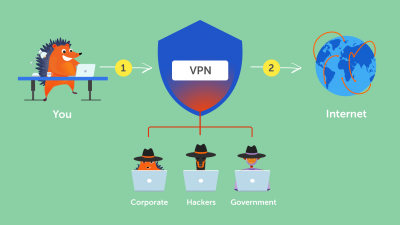

[[File:Vpn.png|400px|thumbnail|How a VPN acts as a middleman between the client and the host server. <ref>Namecheap. [https://www.namecheap.com/vpn/how-does-vpn-virtual-private-network-work/ "How does VPN work?"] (January 3, 2020). ''PC Mag''. Retrieved April 01, 2021. </ref>]]

While parental controls like Life360 are quite comprehensive, tech-savvy teens have found ways to get around some of them. Most software has bugs or loopholes that users can exploit to bypass the rules set for them. For example, on the iPhone, parents can set Screen Time limits for specific apps, such as limiting their child to two hours of iMessage per day. <ref>Lance Whitney. [https://www.pcmag.com/how-to/how-to-use-apples-screen-time-on-iphone-or-ipad#:~:text=Set%20App%20Limits,entire%20category%20or%20specific%20apps. "How to Use Apple's Screen Time on iPhone or iPad"] (January 3, 2020). ''PC Mag''. Retrieved April 01, 2021. </ref> However, kids have figured out that many apps have a built-in share function that can be used to send messages. This loophole lets them send messages even when the iMessage app is locked. <ref> Jellies App. [https://jelliesapp.com/blog/kids-bypassing-screen-time "Are Your Kids or Teens Unlocking Apple Screen Time Limits?"] (January 3, 2020). Retrieved April 01, 2021. </ref> Done carefully, this can leave parents unaware that their children have effectively removed their time limits altogether. Currently, parents have no way to fully close this loophole themselves; a complete fix would have to come from Apple's developers.
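One way to see how such a loophole can arise is to model limits that are keyed on app identity rather than on the activity itself. Apple does not publish how Screen Time attributes usage, so the simplified model below just assumes time is charged to whichever app is in the foreground; `AppLimits` and its methods are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict


class AppLimits:
    """Simplified per-app daily limit tracker (hypothetical, not Apple's Screen Time)."""

    def __init__(self, limits_minutes: dict[str, int]):
        self.limits = limits_minutes
        self.used: defaultdict[str, int] = defaultdict(int)

    def record(self, foreground_app: str, minutes: int) -> None:
        # In this model, time is charged to whichever app is in the foreground.
        self.used[foreground_app] += minutes

    def is_locked(self, app: str) -> bool:
        limit = self.limits.get(app)
        return limit is not None and self.used[app] >= limit


limits = AppLimits({"Messages": 120})  # two hours of messaging per day
limits.record("Messages", 120)
print(limits.is_locked("Messages"))    # True
# Messaging through another app's share sheet is charged to that app here,
# so the Messages limit never sees it and Photos has no limit at all.
limits.record("Photos", 15)
print(limits.is_locked("Messages"), limits.used["Messages"])  # True 120
print(limits.is_locked("Photos"))      # False
```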

Additionally, teens can download a VPN (virtual private network) to browse the internet freely despite these controls. A VPN creates an encrypted connection between your computer and a VPN server, which then relays your traffic to the server you are trying to reach. <ref>Mark Smirniotis. [https://www.nytimes.com/wirecutter/guides/what-is-a-vpn/ "What Is a VPN and What Can (and Can’t) It Do?"] (March 3, 2021). ''New York Times''. Retrieved April 01, 2021. </ref> In essence, even if a website is blocked by a teenager's parental controls, the VPN can send the request to another computer that has no parental controls, and that computer returns the requested content. The VPN becomes a middleman between the teenager and the blocked content. Again, there is no complete way to stop someone with a VPN from accessing a website. VPNs are primarily meant to protect people’s privacy, so they are built to be highly secure. <ref>David Pierce. [https://www.wsj.com/articles/why-you-need-a-vpnand-how-to-choose-the-right-one-1537294244 "Why You Need a VPN—and How to Choose the Right One"] (September 18, 2018). ''Wall Street Journal''. Retrieved April 01, 2021. </ref> The only sure way to prevent VPN use is to keep teenagers from installing a VPN in the first place, for example by monitoring which apps they download from the app store. In addition, most good VPNs charge a monthly fee, which creates a financial barrier to entry. <ref>Yael Grauer. [https://www.nytimes.com/wirecutter/reviews/best-vpn-service/ "The Best VPN Service"] (February 25, 2021). ''New York Times''. Retrieved April 01, 2021. </ref>
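The middleman effect can be illustrated with a toy network filter that, like a filter on a home router, can only inspect the outer destination of each connection. Everything here is hypothetical: the `Connection` type, the `filter_allows` function, the `.example` hostnames, and the list of known VPN endpoints. The last case shows why blocking VPNs by address only works for services already on the list.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Connection:
    outer_host: str          # what the filter can see
    inner_host: str | None   # real destination, hidden inside the VPN tunnel


BLOCKED_SITES = {"blocked-site.example"}
KNOWN_VPN_ENDPOINTS = {"vpn-provider.example"}  # hypothetical, never complete


def filter_allows(conn: Connection, block_vpns: bool) -> bool:
    # The filter only ever inspects outer_host; inner_host is encrypted.
    if conn.outer_host in BLOCKED_SITES:
        return False
    if block_vpns and conn.outer_host in KNOWN_VPN_ENDPOINTS:
        return False
    return True


direct = Connection("blocked-site.example", None)
tunneled = Connection("vpn-provider.example", "blocked-site.example")
print(filter_allows(direct, block_vpns=False))    # False
print(filter_allows(tunneled, block_vpns=False))  # True: the block is bypassed
print(filter_allows(tunneled, block_vpns=True))   # False, but only for listed VPNs
```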

A 2019 TikTok trend revealed how teens avoid punishment by hiding their true whereabouts or activities from their parents, using creative methods that avoid setting off the tracking app's triggers. One tactic is to leave their phone in a friend's mailbox so that their location matches where they said they would be, and then travel to their actual destination without the phone. Teens have also figured out cellular and Wi-Fi settings that restrict which of their activities the app reports to their parents. <ref name=tiktok> Meisenzahl, M. (2019, November 08). Teens are Finding sneaky and clever ways to outsmart their parents' location-tracking apps, and it's turning into a meme ON TIKTOK. Retrieved April 08, 2021, from https://www.businessinsider.com/life360-location-tracker-teens-tiktok-memes-tips-2019-11#as-more-teens-and-their-parents-use-life360-the-community-on-tiktok-has-made-a-meme-out-of-it-videos-are-set-to-the-song-fly-by-still-lonely-which-has-the-lyrics-life-360-in-it-2 </ref>

==Adoption==

About 32% of U.S. households have children, but not all of them use parental controls. <ref name="who"/> Parental controls are most often used for children between the ages of 7 and 16, but parents with “very young children or older teens often have very little need for parental control technologies.” <ref name="who"/> Other factors that influence whether a family chooses to use parental controls include aversion to these technologies, beliefs that they are ineffective, and “alternative methods of controlling media content and access in the home,” such as “household media rules.” <ref name="who"/> Sometimes parents choose not to deal with parental controls simply because they lack the energy. <ref name="who"/> However, parents who do choose to monitor their children’s technology use can do so in a variety of ways.


==Ethical Issues==

In academia, there is debate about whether parental control software leads to healthy outcomes. Some argue that greater parental involvement in children's device usage leads to better internet safety practices. Others contend that parental control software enables parenting behaviors that harm family dynamics and internet safety practices. However, there is consensus that the obstacles parents face when trying to protect their children from harmful content are largely shaped by how much information is easily accessible on the internet.

===Trust===

While some parents can harm their children by failing to teach them safe internet practices, research has shown that the reverse is also true. Because parental control software gives parents greater control over their children’s internet access, it can enable parents who already tend to be overcontrolling in their relationships with their children. This can break trust within families and leave children without their own experience of practicing safe internet habits. A study from the University of Central Florida found that two-thirds of teens' relationships with their parents soured after a parental control application was installed. <ref> Managing Screen Time and Privacy | Could Parental Control Apps Do More Harm than Good? https://techden.com/blog/screen-time-privacy-parental-control-apps/ </ref> One possible explanation is that parents may replace meaningful conversations about safe internet practices with parental control software.

Teens who bypass parental controls also shift the balance of trust between parents and their children. <ref name=tiktok/> Many of these children believe they are outsmarting their parents. Meanwhile, parents may become less alert to their children's safety because they trust the app to do its job. Because of some of these behaviors, such as leaving their phones behind, teens may be unable to contact their parents or emergency services if something goes wrong. The result is greater risk, the opposite of what parents intended when they installed the app.


===Independence===

As children enter adulthood, some have trouble adjusting to autonomy over their internet use after heavy supervision at home. <ref>Cetinkaya, L. (2019). The Relationship between Perceived Parental Control and Internet Addiction: A Cross-sectional study among Adolescents. Contemporary Educational Technology, 10(1), 55–74. https://doi.org/10.30935/cet.512531</ref> An important skill for children to develop is the ability to learn from their mistakes and solve problems on their own. One study suggested that some children whose parents used parental controls were less willing to approach their parents about problems they had run into, both online and offline, leaving them less able to solve problems by collaborating with others. <ref> Managing Screen Time and Privacy | Could Parental Control Apps Do More Harm than Good? https://techden.com/blog/screen-time-privacy-parental-control-apps/ </ref> Additionally, extensive research has examined the effects that overbearing parents can have on children. Children with controlling parents tend to have lower self-esteem, act out more, and perform worse academically. <ref> Controlling Parents – The Signs And Why They Are Harmful https://www.parentingforbrain.com/controlling-parents/ </ref> Because parental controls can enable controlling parenting, they should be used with great care.


==References==

<references/>


Latest revision as of 00:50, 9 April 2021

Parental controls allows parents to control what websites their children can see, determine at what times their children have access to the internet, track their children's internet history, limit accessible content, block outgoing content, and many other things. [1] [2] The introduction of parental control software has raised ethical concerns over the last decade. [3] Parental controls are most likely used between the ages of 7 and 16, but parents with “very young children or older teens often have very little need for parental control technologies.” [3] Ethical concerns include loss of trust between parents and children [4] and a decreased sense of autonomy that leads to reduced opportunities for self-directed learning. [5] Additionally, various monitoring tools may be used with or without the child's consent. [2]

Contents

Overview

Parental control software typically allows parents to customize internet permissions on their child's devices or accounts on shared devices. [7] The account administrator, typically a parent, can change internet permissions for the entire household, while accounts under the administrator do not have that capability. This allows parents to establish rules for their children without having to physically enforce them.

The parental control software has become more prevalent recently. For example, basic parental control software now comes with standard operating systems, such as Windows. [8]

There are three main ways that parental control software functions:

- Complete disablement of the internet allows parents to cut off their child's connection to wifi entirely during chosen time intervals. This can range from disabling their wifi during a scheduled time interval, such as at bedtime, to turn off their internet indefinitely, such as in instances of punishment.

- Content blocking focuses on filtering the content that children can see, and different accounts might have different age-appropriate preferences set. For larger households with several devices, this allows for different children to view age-appropriate content as determined by the account administrator.

- Monitoring means that parents can have access to the complete browsing history of their children at any time. This allows parents to monitor how their children navigate the internet without hard boundaries.

History

The emergence of parental controls was first known as a content filter. [9] Content filters are interchangeable with internet filter, which is a software that assesses and blocks online content that has specific words or images. Although the Internet was created to make information more accessible, open access to all different kinds of information was deemed to be problematic, in instances where children or younger age groups could potentially view obscene or offensive materials. Content filters restrict what users may view on their computer by screening Web pages and e-mail messages for category-specific content. Such filters can be used by individuals, businesses, or even countries in order to regulate Internet usage. [10]

Uses for Parental Control Software

Protection from Harmful Content

The vastness of the internet places a heavy burden on parents trying to protect their children from harmful content. Because children might not have the skills to successfully and safely navigate online environments, parental controls can be a helpful tool to guide them. While the internet is an integral part of children's schooling, the internet also makes available potentially traumatic content that these children would not otherwise see. Parental control software offers parents the ability to control what content their children have access to, even when they are not physically present to monitor them. There is evidence that parents who are involved in their children’s internet use in some way are more likely to encourage safe internet practices. [11]

Recommendations for Controlling Content for Children

There are various third-party parental control services such as Bark, Qustodio or NetNanny that allows people to keep track of the devices. Prices for these services range anywhere from $50 to more than $100 if there are several children to monitor. These costs include 24/7 device monitoring and full-range visibility into how they are using their devices. [12] However, these parental controls can only monitor certain accounts that they know the children are using. For some instances, the services need passwords in order to monitor activity. [13]

Health Benefits

Parental controls can also help one's child to live a healthier lifestyle. A study from 2016 found that about 59% of parents believed their children to be addicted to their cellular and/or electronic devices. [14] As children increasingly receive smartphones at younger and younger ages, it is important for parents to be able to limit their device usage so as to lower their children's chance of becoming addicted to their phone in the future. Addiction to cellular and other electronic devices has several negative symptoms. These symptoms range from psychological (anxiety and depression) to physical (eye strain and neck strain). [15] Less time spent on phones leads to increased physical activity and more legitimate social interaction, which makes for a more well-rounded lifestyle. The American Academy of Pediatrics found that limiting children's screentime improves their physical and mental health and they develop academically, creatively, and socially. [16]

Parental Control Apps

Aside from standard operating systems’ built-in parental controls, parents can also download apps to set up these restrictions/monitoring systems. Life360 is a popular app that parents can use to have access to their children's location at all times. The app also offers driving reports so you can see if your teenager is speeding or not. Life360 has been controversial with people even calling it the "Big Brother" of apps. Teens have even said it has ruined their relationship with their parents. [18]Net Nanny is an app that can block inappropriate content online and “can also record instant messaging programs, monitor Internet activity, and send daily email reports to parents.” [19]

One app called Qustodio provides parents with activity summaries for each of their children. [17] These summaries include total screen time, a breakdown of the screen time that shows how much time was spent on different apps, a list of words that the child searched on the internet, and a tab alerting parents to possibly questionable activity. [17]

Another, more invasive, option is Key logger services. A Key logger tracks all key strokes made on a device and provides a file to the parent, usually via email, of everything logged in the child's device. These services can include access to contact lists and internet search histories. [20]

About “16% of parents report using parental control apps to monitor and restrict their teens’ mobile online activities,” and some parents are more likely than others to download these apps. [21] Two factors that correspond to higher rates of parental control app usage are if the parents are “low autonomy granting” and if the child is being “victimized online” or has had “peer problems.” [21]

Bypassing Parental Control

While parental controls like Life360 are quite comprehensive, tech-savvy teens have found ways to bypass some of the controls. Most software has bugs that can be utilized by users to bypass the rules and regulations set for them. For example, on the iPhone parents can set screen time limits for certain apps like limiting two hours of iMessaging per day for their child. [23] However, what kids have figured out is that a lot of apps have a built-in share functionality that you can use to send messages through. This is a loophole to send messages even when the iMessage app is locked. [24] And if done properly, the parents can remain ignorant that their kids have found a way to seemingly extended their time limits to have no bounds. Currently, there are no solutions for parents to fully implement a solution to the issue. A complete solution would need to come from developers at Apple.

Additionally, teens can download VPN (Virtual private network) to bypass browsing the internet freely. A VPN can create an encrypted connection between your computer and the server you are trying to reach.[25] In essence, even though a teenager has parental controls for a certain website, the VPN can make a request to another computer that does not have parental controls, then that computer can give you the content you want. Creating a middle man between blocked content and teenager. Again there is really no complete solution to stopping someone from accessing a website once they have a VPN. The main use of VPNs is to protect people’s privacy, so they were built to be extremely secure. [26] The only sure way to prevent VPN use would just to prevent teenagers from downloading a VPN from the beginning. This can be done by monitoring the app store to prevent a VPN app from being downloaded in the first place. Additionally, most good VPNs have a monthly fee, so there is a financial barrier to entry. [27]

A 2019 TikTok trend revealed how teens avoid being punished when their parents might otherwise discover their true whereabouts or activities, using creative methods that keep tracking apps from raising any alerts. One tactic is leaving their phones in a friend's mailbox so the app shows them at the location they claimed, then traveling to their actual destination without a phone. Teens have also found cellular and Wi-Fi settings that limit which of their activities are visible to their parents through the app. [28]

Adoption

About 32% of U.S. households have children, but not all of them use parental controls. [3] Parental controls are most likely used between the ages of 7 and 16, but parents with “very young children or older teens often have very little need for parental control technologies.” [3] Other factors that influence whether a family chooses to use parental controls include aversion to these technologies, the belief that they are ineffective, and “alternative methods of controlling media content and access in the home,” such as “household media rules.” [3] Some parents may also choose not to deal with parental controls simply because they lack the energy. [3] However, parents who do choose to monitor their children’s technology use can do so in a variety of ways.

Ethical Issues

Researchers debate whether parental control software leads to healthy outcomes. Some argue that greater parental involvement in children's device use promotes better internet safety practices. Others contend that parental control software enables parental behaviors that harm family dynamics and internet safety practices. However, there is broad agreement that the obstacles parents face when trying to protect their children from harmful content are largely shaped by how much information is easily accessible on the internet.

Trust

While some parents may harm their children by failing to teach them safe internet practices, research suggests that too much control can also be harmful. Because it gives parents greater control over their children’s internet access, parental control software can enable parents who already tend to be overcontrolling in their relationships with their children. This can break trust within families and leave children without any experience of practicing safe internet habits on their own. A study from the University of Central Florida found that two-thirds of teens' relationships with their parents soured after a parental control application was installed. [29] One possible explanation is that some parents replace meaningful conversations about safe internet practices with parental control software.

Teens who bypass parental controls also shift the balance of trust between parents and their children. [28] Many of these teens believe they are outsmarting their parents. Meanwhile, parents may become less alert to their children's safety because they trust the app to do its job. Because some of these behaviors involve leaving their phones behind, teens may be unable to contact their parents or emergency services if something goes wrong. As a result, the apps can increase safety concerns when parents intended to reduce them.

Independence

As children enter adulthood, some have trouble adjusting to autonomy over their internet use after heavy supervision at home. [30] Children need to develop the ability to learn from their mistakes and solve problems on their own. One study suggested that some children whose parents used parental controls were less willing to approach their parents about problems they had run into, both online and offline, leaving them less able to solve problems by working with others. [31] Extensive research has also examined the effects that overbearing parents can have on children. Children with controlling parents show lower self-esteem, act out more, and have lower academic performance. [32] Because parental controls can encourage controlling parenting, they should be used with great care.

References

1. J. D. Biersdorfer. "Tools to Keep Your Children in Line When They’re Online" (2018, March 02). New York Times. Retrieved April 01, 2021.

2. Federal Trade Commission. (2018, March 13). Parental controls. Retrieved April 06, 2021, from https://www.consumer.ftc.gov/articles/0029-parental-controls

3. Thierer, Adam. (2019). Parental Controls & Online Child Protection. The Progress & Freedom Foundation, 45-51. https://poseidon01.ssrn.com/delivery.php?ID=396031102089030101016075082091103088058033095009026094104027126098086093111106075097029031099028051096054088127017025008122107111073000085023006114113101117080113006077037031064068080020002099009101067004068104007107105022107078007111120115083101094&EXT=pdf&INDEX=TRUE

4. Managing Screen Time and Privacy | Could Parental Control Apps Do More Harm than Good? https://techden.com/blog/screen-time-privacy-parental-control-apps/

5. Controlling Parents – The Signs And Why They Are Harmful. https://www.parentingforbrain.com/controlling-parents/

6. amaysim. (2020, November 3). How to set up parental controls on your iPhone, iPad or Android device. amaysim. https://www.amaysim.com.au/blog/world-of-mobile/set-up-parental-controls-apple-android

7. The Business Insider. (2020, September 18). The best internet parental control systems. Newstex LLC. https://go-gale-com.proxy.lib.umich.edu/ps/i.do?p=STND&u=umuser&id=GALE%7CA635821966&v=2.1&it=r&sid=summon

8. Microsoft. (n.d.). Parental consent and Microsoft child accounts. Microsoft. https://support.microsoft.com/en-us/account-billing/parental-consent-and-microsoft-child-accounts-c6951746-8ee5-8461-0809-fbd755cd902e

9. Clark, N. Content Filter Technology. Retrieved 1 April 2021, from https://www.britannica.com/technology/content-filter

10. Web Content Filtering. Retrieved 1 April 2021, from https://www.webroot.com/us/en/resources/glossary/what-is-web-content-filtering

11. Gallego, Francisco, A. (2020, August). Parental monitoring and children's internet use: The role of information, control, and cues. ScienceDirect. https://www-sciencedirect-com.proxy.lib.umich.edu/science/article/pii/S0047272720300724?via%3Dihub

12. Knorr, Caroline. (2021, March). Parents' Ultimate Guide to Parental Controls. https://www.commonsensemedia.org/blog/parents-ultimate-guide-to-parental-controls#What%20are%20the%20best%20parental%20controls%20for%20setting%20limits%20and%20monitoring%20kids?

13. Orchilles, Jorge. (2010, April). Parental Control. https://www.sciencedirect.com/topics/computer-science/parental-control

14. Teenage Cellphone Addiction. https://www.psycom.net/cell-phone-internet-addiction

15. Signs and Symptoms of Cell Phone Addiction. https://www.psychguides.com/behavioral-disorders/cell-phone-addiction/signs-and-symptoms/

16. Miller, M. (2020, February 24). Benefits Of Limiting Screen Time For Children. Retrieved from https://web.magnushealth.com/insights/benefits-of-limiting-screen-time-for-children#:~:text=It%20is%20amazing%20to%20see,online%20and%20age%2Dinappropriate%20videos.

17. Teodosieva, Radina. (2015, October 16). Spy me, please! The University of Amsterdam. http://mastersofmedia.hum.uva.nl/blog/2015/10/16/spy-me-please-the-self-spying-app-that-you-need/

18. Lenore Skenazy. Life 360 Should Be Called “Life Sentence 360”.

19. Kanable, Rebecca. (2004, November). Policing Online: From Internet Safety to Employee Management and Parolee Monitoring, Technology Can Help. U.S. Department of Justice. https://www.ojp.gov/ncjrs/virtual-library/abstracts/policing-online-internet-safety-employee-management-and-parolee

20. Reporter, S. (2019, November 29). Parenting benefits of keylogger program. Retrieved April 08, 2021, from https://www.sciencetimes.com/articles/24367/20191129/parenting-benefits-of-keylogger-program.htm

21. Ghosh, Arup K, et al. (2018, April 26). A Matter of Control or Safety? Examining Parental Use of Technical Monitoring Apps on Teens’ Mobile Devices. Association for Computing Machinery Digital Library. Association for Computing Machinery. https://dl.acm.org/doi/pdf/10.1145/3173574.3173768

22. Namecheap. "How does VPN work?" (January 3, 2020). PC Mag. Retrieved April 01, 2021.

23. Lance Whitney. "How to Use Apple's Screen Time on iPhone or iPad" (January 3, 2020). PC Mag. Retrieved April 01, 2021.

24. Jellies App. "Are Your Kids or Teens Unlocking Apple Screen Time Limits?" (January 3, 2020). Retrieved April 01, 2021.

25. Mark Smirniotis. "What Is a VPN and What Can (and Can’t) It Do?" (March 3, 2021). New York Times. Retrieved April 01, 2021.

26. David Pierce. "Why You Need a VPN—and How to Choose the Right One" (September 18, 2018). Wall Street Journal. Retrieved April 01, 2021.

27. Yael Grauer. "The Best VPN Service" (February 25, 2021). New York Times. Retrieved April 01, 2021.

28. Meisenzahl, M. (2019, November 08). Teens are finding sneaky and clever ways to outsmart their parents' location-tracking apps, and it's turning into a meme on TikTok. Retrieved April 08, 2021, from https://www.businessinsider.com/life360-location-tracker-teens-tiktok-memes-tips-2019-11#as-more-teens-and-their-parents-use-life360-the-community-on-tiktok-has-made-a-meme-out-of-it-videos-are-set-to-the-song-fly-by-still-lonely-which-has-the-lyrics-life-360-in-it-2

29. Managing Screen Time and Privacy | Could Parental Control Apps Do More Harm than Good? https://techden.com/blog/screen-time-privacy-parental-control-apps/

30. Cetinkaya, L. (2019). The Relationship between Perceived Parental Control and Internet Addiction: A Cross-sectional study among Adolescents. Contemporary Educational Technology, 10(1), 55–74. https://doi.org/10.30935/cet.512531

31. Managing Screen Time and Privacy | Could Parental Control Apps Do More Harm than Good? https://techden.com/blog/screen-time-privacy-parental-control-apps/

32. Controlling Parents – The Signs And Why They Are Harmful. https://www.parentingforbrain.com/controlling-parents/