Neurotechnology

Background

Noninvasive neurotechnologies, first defined by Jonathan Wolpaw in the 1990s, began gaining traction in the scientific community as researchers set out to explore their potential.[2] The underlying idea is older. Building on the work of many scientists who had contributed to the discovery of EEG and of electrical signals in the brain, Jacques Vidal published “Toward Direct Brain-Computer Communication” in 1973, describing how the brain could communicate with external devices.[3] These brain–computer interfaces (BCIs) work by detecting and interpreting neural activity.[4] Neural devices most commonly interpret brain patterns using electroencephalography (EEG) and functional magnetic resonance imaging (fMRI), because both are already widely used in medicine and carry little risk. EEG tracks the electrical potential between two electrodes, while fMRI monitors changes in blood flow.[5]

Since direct communication between a user's brain and a computer was first shown to be possible, the field has progressed rapidly, creating new ways for users to act with their minds without using their muscles and nerves. Neural devices now come in a variety of forms, including implants, monitoring devices that use electrodes attached to the scalp, and physical devices such as brain-powered limbs. Researchers in both the medical and entertainment fields are interested in harnessing neural devices. This interest has grown since studies such as one conducted by Davimar Borducchi found that neurotechnologies could help improve sports performance and academic success and might even offer military advantages.[6] The market for neurotechnology products was projected to reach $13.3 billion in 2022,[7] and as the market grows, so do the ethical discussions that accompany it.
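The Background notes that EEG tracks the electrical potential between two electrodes. As a minimal sketch of that idea (an illustration, not drawn from any cited source), the Python below forms one EEG channel as the sample-by-sample difference between two electrode recordings. The electrode values and the function name `bipolar_channel` are hypothetical. Real recordings also involve amplification, filtering, and artifact removal, and those steps are omitted here.

```python
from typing import List


def bipolar_channel(active_uv: List[float], reference_uv: List[float]) -> List[float]:
    """Return the potential difference (microvolts) between two electrodes.

    Both inputs are hypothetical recordings sampled at the same time points.
    """
    if len(active_uv) != len(reference_uv):
        raise ValueError("electrode recordings must have the same number of samples")
    # An EEG channel is the voltage at one electrode relative to another.
    return [a - r for a, r in zip(active_uv, reference_uv)]


# Illustrative (made-up) samples from two scalp electrodes, in microvolts.
electrode_a = [12.0, 15.5, 9.0, -3.0]
electrode_b = [10.0, 11.5, 10.0, -1.0]
print(bipolar_channel(electrode_a, electrode_b))  # [2.0, 4.0, -1.0, -2.0]
```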

Types

Given the wide variety of neurotechnologies, devices are classified into three main types according to how they interact with and interpret a user's brain waves.

Active

To use these devices, users “actively generate” brain signals to issue a specific command.[8]

Reactive

These devices interpret how a user's brain responds to “specific probe stimuli”,[9] and they control an application based on the brain activity evoked by those stimuli.[10]
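A reactive system can be illustrated with a selection task. Several options are each presented repeatedly while the user attends to one of them. The sketch below assumes, hypothetically, that each presentation has already been reduced to a single response amplitude. Averaging over repetitions reduces background noise, and the option with the largest average response is taken as the user's choice. The labels, the numbers, and the `select_target` function are illustrative only. Practical systems typically rely on trained classifiers rather than a simple maximum.

```python
from statistics import mean
from typing import Dict, List


def select_target(responses_by_stimulus: Dict[str, List[float]]) -> str:
    """Return the stimulus label with the largest average evoked response.

    Each list holds one (hypothetical) response amplitude per presentation
    of that stimulus; averaging over repetitions reduces background noise.
    """
    averages = {label: mean(values) for label, values in responses_by_stimulus.items()}
    return max(averages, key=averages.get)


# Illustrative amplitudes: the user is attending to "YES".
trials = {
    "YES": [4.1, 5.3, 4.8],
    "NO": [1.2, 0.7, 1.6],
    "HELP": [1.9, 2.2, 0.8],
}
print(select_target(trials))  # YES
```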

Passive

These devices read the user's brain activity, allowing them to recognize the user's emotions and mental state.[11] They are not used to control devices or applications. Instead, they improve the interaction between a user and a device by tracking the user's current state.[12]
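A passive system adapts an interaction to the user's state. To illustrate, the sketch below assumes that a hypothetical "workload index" between 0 and 1 has already been estimated from brain activity at regular intervals. It smooths the readings with an exponential moving average so that a single noisy reading does not flip the interface. When the smoothed value crosses a threshold, it switches to a simplified mode. The index, the 0.7 threshold, and the mode names are assumptions for illustration and are not part of the cited work.

```python
from typing import List, Optional


def smooth(readings: List[float], alpha: float = 0.5) -> List[float]:
    """Exponential moving average; alpha in (0, 1] weights the newest reading."""
    smoothed: List[float] = []
    level: Optional[float] = None
    for value in readings:
        level = value if level is None else alpha * value + (1 - alpha) * level
        smoothed.append(level)
    return smoothed


def adapt_interface(workload: List[float], high: float = 0.7, alpha: float = 0.5) -> List[str]:
    """Choose an interface mode per reading of a hypothetical workload index (0 to 1)."""
    return ["simplified" if level >= high else "normal" for level in smooth(workload, alpha)]


print(adapt_interface([0.2, 0.9, 0.95, 0.3]))
# ['normal', 'normal', 'simplified', 'normal']
```

Note that the single high reading at the second step does not switch modes, because smoothing blends it with the earlier low reading.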

Applications

Medical Devices

Assistive Applications

These devices assist users who have lost function by providing technological alternatives. Current assistive applications include neural devices that let users communicate through “mental typing” and auditory assistance for people with neurodegenerative diseases that affect communication. Newer possibilities include the ability to control wheelchairs and similar devices.[13]

Rehabilitative Applications

These devices promote neural plasticity, which over time can allow the user's own neural pathways to regain control over a limb. They work by detecting and interpreting the user's attempted or imagined movement. They then move the limb artificially, either with a robotic aid or by stimulating the muscles.[14]
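The detect-then-move cycle described above can be sketched as a simple loop. This is a minimal illustration under several assumptions. A hypothetical decoder already yields one movement-intention score per time step, and the 0.7 threshold is arbitrary. The `stimulate` callback stands in for whatever robotic aid or muscle stimulator a real system would drive. A short refractory period keeps one attempted movement from triggering repeated assistance.

```python
from typing import Callable, List


def run_assist_loop(
    intent_scores: List[float],
    stimulate: Callable[[int], None],
    threshold: float = 0.7,
    refractory_steps: int = 3,
) -> List[int]:
    """Trigger assistance when a decoded movement-intention score crosses a threshold.

    intent_scores comes from a hypothetical decoder (one score per time step);
    stimulate stands in for driving a robotic aid or a muscle stimulator.
    Returns the time steps at which assistance was triggered.
    """
    triggered: List[int] = []
    cooldown = 0
    for step, score in enumerate(intent_scores):
        if cooldown > 0:
            # Ignore input briefly so one attempt does not trigger repeated assistance.
            cooldown -= 1
            continue
        if score >= threshold:
            stimulate(step)
            triggered.append(step)
            cooldown = refractory_steps
    return triggered


events = run_assist_loop(
    [0.1, 0.4, 0.8, 0.9, 0.75, 0.2, 0.85],
    stimulate=lambda step: print(f"assist at step {step}"),
)
print(events)  # [2, 6]
```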

Civilian Devices

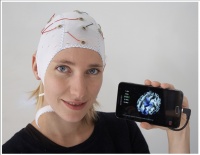

Direct to Consumer Products

Direct-to-consumer products (DCPs) are neural devices that a consumer can purchase without the involvement of a researcher or doctor.[15] A growing variety of neurotechnology devices, along with accompanying apps and accessories, are becoming available to the general public. DCPs range from gaming devices to products for self-tracking medical data and products that claim to improve “brain fitness and performance”. These devices usually rely on a Bluetooth connection between the neural device and a home computer or other device.[16]

Emerging Applications

As research in neurotechnology advances, new applications are being explored. Trending applications include “biofeedback, sleep control, treatment of learning disorders, functional and stroke rehabilitation, and the use of brain signals as biomarkers for diagnosis of diseases or their progression”.[17] More recent commercial applications include neuromarketing and defense.[18] The ability of neural devices to “induce cortical plasticity” has also led to the development of potential therapeutic applications. These include reducing seizures, treating ADD and ADHD, improving cognitive function in elderly patients, and managing pain.[19] These newer applications are reaching broader markets than traditional medical BCIs.[20]

Ethical Implications

Neurotechnology is a rapidly growing field that may offer innovative solutions to many societal problems. As it gains popularity and expands beyond clinical use, it raises many ethical questions. Ethical dilemmas can arise at various phases of the neurotechnology feedback cycle, so concerns in the field are numerous and varied.[21]

Privacy

Historically, security risks have been highest in the early phases of a technological innovation. At that stage, the technology lacks well-developed built-in security protocols, and legal structures are not yet prepared for the new issues it raises.[22] These concerns are grounded in past breaches of technological privacy, as “large corporations already provide third-party access, usually through customer’s unknowing approval, that infringes on patients’ right to privacy”.[23] Moreover, “technology innovates faster than the regulatory system can adapt, and disruptive technological advancements can make current privacy and security norms obsolete”.[24] Hackers could gain access to users' sensitive information, which poses a serious threat because individuals tend to value privacy and data protection highly.[25] Such private information could include financial, medical, and location data. It could be abused not only by criminals but also by employers, who might use it in hiring decisions and so discriminate against applicants. If neural information reveals that a patient is predisposed to certain disorders or diseases, should they, or could they, be obligated to share that information with their employer, insurance company, or anyone else?

Standard practices such as setting privacy preferences and choosing which data to share lose their effectiveness when users are unaware of what data is being extracted from them.[26] How neural data is used after it enters a system is often unclear to ordinary users, because the policies governing how companies share data lack transparency.[27] Devices marketed to the general public raise particular concerns. Consumer devices do not have to meet the stringent regulations that apply to many clinical devices, so direct-to-consumer companies can share large amounts of user data with third parties without their users' consent.[28] There is also concern that those in charge of neurotechnology development will not prioritize users' privacy over scientific research or profit. In a study led by Katherine MacDuffie, respondents from the general public were significantly more likely than members of the neural device industry to prioritize privacy. This finding bears on future advances in neurotechnology, because it raises the question of whether the beliefs of stakeholders, users, or researchers should be prioritized.[29]

Autonomy

Autonomy is a relevant ethical issue for neurotechnologies, because neural devices may complicate a user's ability to feel that they are acting and existing separately from their device and from others. Some users felt “unsure about the authenticity or authorship of their feelings and behaviors” when using neurotechnologies.[30] If a neural device is hacked, an attacker could alter the user's decisions or behavior.[31] The threat of lost agency may be greater in clinical settings, where patients with severe neurological disorders are vulnerable.[32] These threats matter because they cast doubt on whether the user actually intended to perform a given action.

Liability

Neurotechnologies may necessitate changes in legal systems, because the questions of agency they raise complicate questions of liability. Laws have changed in response to technological advances before, but neural devices are unlike many other forms of autonomous technology. Whereas other forms operate independently of user input, some neural devices are integrated into a user's cognitive system. It is therefore unclear who is responsible if a user of neurotechnology causes harm or damage. There are currently no clear guidelines on whether the manufacturer, the user, or the device is at fault.[33] Users themselves may be unsure whether they are responsible for their actions. A study led by Luke Bashford found that participants using an imitation brain–computer interface developed a false sense of control over a virtual hand, even though there was no causal connection between their device and the hand. Conversely, other users reported not feeling in control of their device when they actually were.[34] BCIs can move a user's body through brain activity rather than physical movement, which places these actions in uncharted legal territory. They may not qualify as “willed bodily movements and thus, as actions” under current legal definitions.[35]

Hacking

As neurotechnology becomes commonplace, it becomes possible to view or manipulate the cognitive information of its users.[36] Neurocrime exploits neural devices to access and alter cognitive information, much as an attacker might hack a computer.[37] This “brain hacking” can occur at different stages of the neurotechnology cycle. “Misusing neural devices for cybercriminal purposes may not only threaten the physical security of the users but also influence their behavior and alter their self-identification as persons.”[38] Such neurocrime could take various forms, including gaining control over a user's prostheses and uncovering sensitive information by reading a user's brain signals. A study led by Ivan Martinovic at the University of Oxford showed that it was possible to extract banking information, addresses, and other sensitive information through brain hacking. This type of hacking could compound problems of fraud, password theft, identity theft, and more.[39]

Matrix of Domination

“The matrix of domination works to uphold the undue privilege of dominant groups while unfairly oppressing minoritized groups.”[40] As new neurotechnologies are developed, their creators may shape the data and functionalities of the devices, which raises ethical concerns about biased devices. As with other technology products, the developers lack diversity, which is likely to introduce biases into the architecture of the devices. Because the technology will be developed principally by a select few, devices may (often inadvertently) attempt to correct “certain behaviors” in ways that unfairly target and oppress minority groups.[41] Conversely, some argue that neurotechnology could reduce injustice by giving lower socioeconomic groups more affordable access to beneficial products.[42] There is also concern that neurotechnologies could increase discrimination or social isolation. This could happen if data from neural devices that reveal signs of a user's cognitive impairments, disorders, or personality traits are unintentionally shared.[43] There is notable apprehension about using functional brain imaging to predict an individual's future behavior. For example, an employer might decide whether to hire a person based on brain activity suggesting that they are prone to combative behavior. Using brain activity to make assumptions about what people will do is controversial, because it may unfairly target people with conditions or disorders that they would not otherwise disclose.[44]

Distributive Justice

The allocation of improved health is always a social concern. In a society where neurotechnologies provide consumers with enhancement, rehabilitation, therapy, and recreation, access is unlikely to be equal for everyone.[45] The wealthier classes will likely have better access to these technologies, as they do to most innovations in healthcare.[46] This unequal distribution may widen existing divides between social classes and further the unequal access to information that often harms lower socioeconomic groups. The issue is especially relevant in countries with privatized healthcare systems, such as the United States, or when neural devices are distributed by private corporations. In those cases, poorer patients may be unable to purchase these devices, furthering a “monetary divide within the society”.[47] Similarly, in his book “The Ethics of Biomedical Enhancement”, Allen Buchanan argues that the public should not assume that neurotechnology developers will be inclined to distribute their products in ways that promote justice. They may instead distribute them in ways that result in “unjust exclusion or domination”.[48] There are therefore concerns that “those who already possess certain traits, attributes, and or resources will likely and quickly get even more”.[49]

Normality

The ability to enhance and change an individual's brain raises ethical questions about society's view of what is “normal” and “good”,[50] since expecting everyone to desire and display “normal” functioning requires a societal definition of what “normal” is and should be. Likewise, allowing people to change their mental, sensory, and physical abilities could shift societal norms. Writing in the journal Brain Communications, Shujhat Khan argues that this new ability to “enhance” oneself with neurotechnology may alter human nature, or what separates “healthy humans” from “animals”. These devices can change cognitive reasoning, the ability to make moral judgments, and emotional perception.[51] People with differently functioning brains or bodies often face discrimination, and there is concern that this may extend to neurotechnologies. As the field progresses and creates new kinds of discrimination, people may feel pressure to use neurotechnology for self-enhancement.[52] At an Oxford University symposium, Dr. Kevin FitzGerald highlighted the importance of knowing the truth about the capacities and limits of changing oneself. The process of obtaining patients' informed consent also raises concerns, as do the “ethical dilemmas that could occur as we respond to escalating socio-economic pressures” to use every technology at our disposal.[53] Such pressures may alter or damage aspects of the social and natural environment on which life depends.[54]

Identity

“Identity encompasses the memories, experiences, relationships, and values that create one’s sense of self.”[55] Whether a person can maintain a consistent identity while neurotechnology alters their brain activity is under ethical debate. Some users of neural devices have reported feeling that their movements and accomplishments are not their own, or that they feel artificial or robotic.[56] Emily Postan's research, posted on the Social Science Research Network, argues that monitored neural data can yield “self descriptors”, such as an indication that a user is a light sleeper. Such information can modify an individual's identity and sense of self, which may in turn alter their perceptions and actions.[57] Additionally, Dr. Michael Decker found that altering brain function through brain stimulation could cause unintended changes in mental states integral to a user's personality, thereby affecting their personal identity.[58] Brain stimulation through neural devices may also produce behavioral changes such as increased aggressiveness, quicker decision-making in high-conflict situations, and altered sexual behavior.[59] To address these concerns, Marcello Ienca proposes protecting a right to “psychological continuity”, which would safeguard the continuity of an individual's thoughts, preferences, and choices.[60]

Informed Consent

“Informed consent is regarded as a continuous agreement to go forward with a particular treatment modality”.[61] One of the largest anticipated applications of neurotechnology is treating impaired cognitive function. This complicates the task of ensuring that a patient fully understands the ramifications of treatment and can therefore give informed consent. Dr. James Giordano says that “ongoing research is needed to enable clinicians to communicate the relative values, benefits, and risks of particular treatments and to enable patients to make well-informed decisions.”[62] Even if a healthcare provider can ensure that a patient is aware of the potential consequences, it is debatable whether individuals should be able to opt into unnecessary treatments or applications of neurotechnology. This question could become relevant in contexts such as sports, where a user may feel pressured to consent to treatment in order to improve their motor performance.[63]

Public Perception

There are concerns that the media and the general public overestimate the capabilities of BCIs and other forms of neurotechnology. A study of fMRI coverage by Eric Racine and colleagues found that popular media overemphasizes the benefits and capabilities of neurotechnology, including its supposed ability to reveal a person's true character. Of the articles they studied, 67% did not mention the limitations of the technology, and 79% had a mostly optimistic tone. Neural devices are gaining commercial popularity, yet the public may not understand their limitations and risks. Problems may then arise if society assumes that neurotechnology can accurately reveal the “true self”, mistakenly believing that the “brain can’t lie”.[64] Additionally, a case study by Allyson Purcell-Davis found that “the initial results of scientific experiments conducted within laboratories were often projected as potential cures for those with neurodegenerative disease, without any further discussion of the cost of such procedures or how long it might be before they become available.”[65] The public's tendency to view neurotechnologies as powerful and astonishing could threaten people's ability to judge rationally whether using them is beneficial, given the potential risks.[66] Even representations intended to highlight the wide range of capabilities neurotechnology offers may have negative consequences. If the companies that distribute neural devices are not transparent about a device's outcomes, consumers are more likely to be misled.[67]

References

- [1] Institute of Electrical and Electronics Engineers (May 26, 2021). "Neurotechnologies: The Next Technology Frontier". Retrieved February 2, 2022.

- [2] Friedrich, Orsolya (2021). "Clinical Neurotechnology meets Artificial Intelligence". Retrieved January 25, 2022.

- [3] Friedrich, Orsolya (2021). "Clinical Neurotechnology meets Artificial Intelligence". Retrieved January 25, 2022.

- [4] Friedrich, Orsolya (2021). "Clinical Neurotechnology meets Artificial Intelligence". Retrieved January 25, 2022.

- [5] White, Susan (October 17, 2014). "The Promise of Neurotechnology in Clinical Translational Science". Retrieved February 5, 2022.

- [6] Borducchi, Davimar (November 30, 2016). "Transcranial Direct Current Stimulation Effects on Athletes’ Cognitive Performance: An Exploratory Proof of Concept Trial". Retrieved February 9, 2022.

- [7] Ienca, Marcello (September 2018). "Brain leaks and consumer neurotechnology". Retrieved February 6, 2022.

- [8] van Erp, J.B.F. (July 7, 2012). "Framework for BCIs in Multimodal Interaction and Multitask Environments". Retrieved February 2, 2022.

- [9] van Erp, J.B.F. (July 7, 2012). "Framework for BCIs in Multimodal Interaction and Multitask Environments". Retrieved February 2, 2022.

- [10] Zander, Thorsten O. (March 24, 2011). "Towards passive brain–computer interfaces: applying brain–computer interface technology to human–machine systems in general". Retrieved February 2, 2022.

- [11] Kawala-Sterniuk, Aleksandra (January 3, 2021). "Summary of over Fifty Years with Brain-Computer Interfaces—A Review". Retrieved February 2, 2022.

- [12] Zander, Thorsten O. (March 24, 2011). "Towards passive brain–computer interfaces: applying brain–computer interface technology to human–machine systems in general". Retrieved February 2, 2022.

- [13] Vlek, Rutger (June 2012). "Ethical Issues in Brain–Computer Interface Research, Development, and Dissemination". https://journals.lww.com/jnpt/FullText/2012/06000/Ethical_Issues_in_Brain_Computer_Interface.8.aspx. Retrieved January 26, 2022.

- [14] Vlek, Rutger (June 2012). "Ethical Issues in Brain–Computer Interface Research, Development, and Dissemination". https://journals.lww.com/jnpt/FullText/2012/06000/Ethical_Issues_in_Brain_Computer_Interface.8.aspx. Retrieved January 26, 2022.

- [15] Ienca, Marcello (October 13, 2019). "Direct-to-Consumer Neurotechnology: What Is It and What Is It for?". Retrieved February 6, 2022.

- [16] Ienca, Marcello (September 2018). "Brain leaks and consumer neurotechnology". Retrieved February 6, 2022.

- [17] Brunner, Peter (March 24, 2011). "Current trends in hardware and software for brain–computer interfaces (BCIs)". Retrieved January 26, 2022.

- [18] Brunner, Peter (March 24, 2011). "Current trends in hardware and software for brain–computer interfaces (BCIs)". Retrieved January 26, 2022.

- [19] Brunner, Peter (March 24, 2011). "Current trends in hardware and software for brain–computer interfaces (BCIs)". Retrieved January 26, 2022.

- [20] Brunner, Peter (March 24, 2011). "Current trends in hardware and software for brain–computer interfaces (BCIs)". Retrieved January 26, 2022.

- [21] Ienca, Marcello (April 16, 2016). "Hacking the brain: brain–computer interfacing technology and the ethics of neurosecurity". Retrieved January 24, 2022.

- [22] Ienca, Marcello (September 2018). "Brain leaks and consumer neurotechnology". Retrieved February 6, 2022.

- [23] Khan, Shujhat (September 16, 2019). "Transcending the brain: is there a cost to hacking the nervous system?". Retrieved February 9, 2022.

- [24] Ienca, Marcello (September 2018). "Brain leaks and consumer neurotechnology". Retrieved January 24, 2022.

- [25] Ienca, Marcello (April 16, 2016). "Hacking the brain: brain–computer interfacing technology and the ethics of neurosecurity". Retrieved January 24, 2022.

- [26] Ienca, Marcello (September 2018). "Brain leaks and consumer neurotechnology". Retrieved January 24, 2022.

- [27] Dasgupta, Ishan (2020). "Developments in Neuroethics and Bioethics". Retrieved February 9, 2022.

- [28] Ienca, Marcello (September 2018). "Brain leaks and consumer neurotechnology". Retrieved January 24, 2022.

- [29] MacDuffie, Katherine (March 31, 2021). "Neuroethics Inside and Out: A Comparative Survey of Neural Device Industry Representatives and the General Public on Ethical Issues and Principles in Neurotechnology". Retrieved February 9, 2022.

- [30] Goering, Sara (March 10, 2021). "Neurotechnology ethics and relational agency". Retrieved January 24, 2022.

- [31] Ienca, Marcello (April 16, 2016). "Hacking the brain: brain–computer interfacing technology and the ethics of neurosecurity". Retrieved January 24, 2022.

- [32] Ienca, Marcello (April 16, 2016). "Hacking the brain: brain–computer interfacing technology and the ethics of neurosecurity". Retrieved January 24, 2022.

- [33] Bublitz, Christoph (November 16, 2018). "Legal liabilities of BCI-users: Responsibility gaps at the intersection of mind and machine?". Retrieved February 9, 2022.

- [34] Bashford, Luke (June 15, 2016). "Ownership and Agency of an Independent Supernumerary Hand Induced by an Imitation Brain-Computer Interface". Retrieved February 9, 2022.

- [35] Bublitz, Christoph (November 16, 2018). "Legal liabilities of BCI-users: Responsibility gaps at the intersection of mind and machine?". Retrieved February 9, 2022.

- [36] Ienca, Marcello (April 16, 2016). "Hacking the brain: brain–computer interfacing technology and the ethics of neurosecurity". Retrieved January 24, 2022.

- [37] Ienca, Marcello (April 16, 2016). "Hacking the brain: brain–computer interfacing technology and the ethics of neurosecurity". Retrieved January 24, 2022.

- [38] Ienca, Marcello (April 16, 2016). "Hacking the brain: brain–computer interfacing technology and the ethics of neurosecurity". Retrieved January 24, 2022.

- [39] Ienca, Marcello (April 16, 2016). "Hacking the brain: brain–computer interfacing technology and the ethics of neurosecurity". Retrieved January 24, 2022.

- [40] D'Ignazio, Catherine (February 21, 2020). "Data Feminism". Retrieved February 6, 2022.

- [41] Gianfrancesco, Milena (November 2018). "Potential Biases in Machine Learning Algorithms Using Electronic Health Record Data". Retrieved February 9, 2022.

- [42] Fitz, Nicholas (2015). "The challenge of crafting policy for do-it-yourself brain stimulation". Retrieved February 9, 2022.

- [43] Ienca, Marcello (September 2018). "Brain leaks and consumer neurotechnology". Retrieved January 24, 2022.

- [44] Eaton, Margaret (April 2007). "Commercializing cognitive neurotechnology—the ethical terrain". Retrieved February 6, 2022.

- [45] Shook, John (December 5, 2014). "Cognitive enhancement kept within contexts: neuroethics and informed public policy". Retrieved January 25, 2022.

- [46] Wexler, Anna (January 18, 2019). "Oversight of direct-to-consumer neurotechnologies". Retrieved January 25, 2022.

- [47] Khan, Shujhat (September 16, 2019). "Transcending the brain: is there a cost to hacking the nervous system?". Retrieved February 9, 2022.

- [48] Buchanan, Allen (2011). "The Ethics of Biomedical Enhancement". Retrieved January 25, 2022.

- [49] Shook, John (December 5, 2014). "Cognitive enhancement kept within contexts: neuroethics and informed public policy". Retrieved January 25, 2022.

- [50] Shook, John (December 5, 2014). "Cognitive enhancement kept within contexts: neuroethics and informed public policy". Retrieved January 25, 2022.

- [51] Khan, Shujhat (September 16, 2019). "Transcending the brain: is there a cost to hacking the nervous system?". Retrieved February 9, 2022.

- [52] Yuste, Rafael (November 9, 2017). "Four ethical priorities for neurotechnologies and AI". Retrieved February 9, 2022.

- [53] Palchik, Guillermo (May 8, 2019). "Technology, Neuroscience & the Nature of Being: Considerations of Meaning, Morality and Transcendence Part I: The Paradox of Neurotechnology". Retrieved February 9, 2022.

- [54] Shook, John (December 5, 2014). "Cognitive enhancement kept within contexts: neuroethics and informed public policy". Retrieved January 25, 2022.

- [55] Psychology Today (2018). "Identity". Retrieved January 24, 2022.

- [56] Goering, Sara (March 10, 2021). "Neurotechnology ethics and relational agency". Retrieved January 24, 2022.

- [57] Postan, Emily (September 28, 2020). "Narrative Devices: Neurotechnologies, Information, and Self-Constitution". Retrieved February 1, 2022.

- [58] Decker, Michael (December 15, 2008). "Contacting the brain – aspects of a technology assessment of neural implants". Retrieved February 1, 2022.

- [59] Frank, Michael (November 23, 2007). "Hold Your Horses: Impulsivity, Deep Brain Stimulation, and Medication in Parkinsonism". Retrieved February 1, 2022.

- [60] Ienca, Marcello (April 26, 2017). "Towards new human rights in the age of neuroscience and neurotechnology". Retrieved February 1, 2022.

- [61] Khan, Shujhat (September 16, 2019). "Transcending the brain: is there a cost to hacking the nervous system?". Retrieved February 9, 2022.

- [62] Karwowski, Waldemar (2013). "Neuroadaptive Systems". Retrieved February 9, 2022.

- [63] Khan, Shujhat (September 16, 2019). "Transcending the brain: is there a cost to hacking the nervous system?". Retrieved February 9, 2022.

- [64] Racine, Eric (February 1, 2005). "fMRI in the public eye". Retrieved February 5, 2022.

- [65] Purcell-Davis, Allyson (April 21, 2015). "The Representations of Novel Neurotechnologies in Social Media". Retrieved February 5, 2022.

- [66] Giattino, Charles (January 2019). "The Seductive Allure of Artificial Intelligence-Powered Neurotechnology". Retrieved February 9, 2022.

- [67] Wexler, Anna (September 18, 2018). "Mind-Reading or Misleading? Assessing Direct-to-Consumer Electroencephalography (EEG) Devices Marketed for Wellness and Their Ethical and Regulatory Implications". Retrieved February 9, 2022.