Fact Checking

Fact-checking is the process of investigating a claim or piece of information in order to verify the facts. It has become increasingly relevant to the discussion of the role that major social media companies play as news sources. According to a 2018 survey, over two-thirds of Americans get at least some of their news from social media: 43% get news from Facebook, 21% from YouTube, and 12% from Twitter [1]. While checking the information you are exposed to (e.g. using reliable sources, researching the author(s), etc.) has always been part of the discussion, there is now debate over who is responsible for fact-checking information online. Is it the responsibility of users to ensure their information comes from a reliable source, or is it the responsibility of the platforms that share this information (e.g. Facebook, Twitter, Google)? Fact-checking is important because exposure to misinformation can greatly influence people’s opinions and, in turn, their actions.

There are different types of information on the internet that might be fact-checked. Misinformation is incorrect or misleading information[2]. What distinguishes misinformation is that it is spread by people who do not know that it is false. A commonly cited example is “fake news”, a term used to describe any type of false information, whether spread intentionally or unintentionally. Fake news often spreads because it is interesting and catches users' attention. Disinformation, by contrast, is false information spread by people who know that it is false[3].

History

Rise of Fact-Checking

The rise of the internet in the early 2000s allowed people to get their information from a wide variety of sources. People no longer had to rely on thoroughly edited newspapers or radio broadcasts for their news. The growth of online platforms raised many concerns, such as the concentration of power in the hands of a few large media companies and the growing likelihood of users getting trapped in echo chambers or filter bubbles. An echo chamber or filter bubble occurs when people become isolated from information that opposes their own perspectives and viewpoints. People tend to look for information that confirms their current beliefs, not information that challenges them. This means that either people need to check the information they are exposed to for accuracy, or the distributors of the information (e.g. social media platforms) need to take responsibility for monitoring the accuracy of what is posted on their sites or for notifying users that it might not be correct. Documents that Facebook released following the January 6th Capitol insurrection showed that misinformation shared by politicians is more damaging than misinformation coming from ordinary users[4]. Because of this, fact-checking becomes an especially prominent topic of conversation and controversy around political events, such as the 2016 and 2020 US Presidential elections and the January 6th, 2021 Capitol insurrection, and around public health issues.

2016 US Presidential Election

The term "fake news" surged in popularity during the 2016 US Presidential Election. After the election and Donald Trump's victory, there was much debate about the impact that social media as a news platform, and the spread of "fake news" and misinformation, had on the results. Research published in the Journal of Economic Perspectives in 2017, following the 2016 election, concluded that "fake news" stories were shared more frequently than mainstream news stories, that many people who were exposed to fake news reported that they believed it, and that most "fake news" stories favored Donald Trump over his competitor Hillary Clinton[5]. Additionally, Russian internet trolls worked extensively through Facebook's platform, creating accounts and pages that looked as if they belonged to Americans in order to sway public favor towards Trump[6]. This drove leaders in tech, government officials, and users of these platforms to look for a solution before the 2020 Presidential election.

January 6th Capitol Insurrection

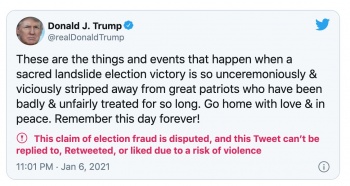

On January 6th, 2021, the US Congress assembled to confirm President-elect Joe Biden's victory over then-President Donald Trump. On this day nearly 2,000 right-wing activists breached the Capitol's walls and police barriers to raid the Capitol. The rioters were violent and defaced much of the building. After the event, officials looked into the factors that encouraged it and ultimately allowed the raid to happen. At the forefront of these factors were Donald Trump's many false tweets claiming that he had in fact won the election and that his loss was the result of voter fraud. These tweets motivated extremists to take matters into their own hands. After the insurrection, Facebook reported that it did not act forcefully enough against the Stop the Steal movement. User reports of "fake news" reached about 40,000 posts per hour, and the account reported most often for inciting violence was @realdonaldtrump, the President's. Facebook also maintained that the people to blame were those involved in the attack on the Capitol and those who encouraged it, not necessarily Facebook and other media platforms. After January 6th, Facebook removed content with the phrase ‘stop the steal’ under its Coordinating Harm policy and suspended Trump from its platforms. Mark Zuckerberg, Facebook's CEO, also said after the event that while Facebook did have a role in preventing the spread of information that incited violence, many possible mitigation tactics would produce too many "false positives" and stop people from engaging with its platform[7].

COVID-19 Pandemic

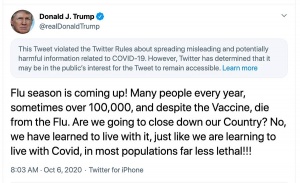

The COVID-19 pandemic has been a tension-filled time for many Americans. This is partly because official advice regarding COVID-19 keeps changing as officials learn more about the disease. Because of this, information regarding COVID-19 is very present in the media. Instances of disinformation, such as blaming racial groups, and of misinformation, such as false treatment remedies, have been spreading over the internet since the pandemic's start in 2019[8]. Facebook has publicly committed to connecting people with reliable COVID-19 information and to limiting the spread of COVID-19 hoaxes and misinformation. Facebook launched the COVID-19 Information Center, which is featured at the top of the News Feed on Facebook and shows educational pop-ups with information from the World Health Organization (WHO) and the Centers for Disease Control and Prevention (CDC). In terms of removing misinformation, Facebook has committed to removing COVID-19 information that could contribute to imminent physical harm, such as claims that physical distancing doesn't help prevent the spread of COVID[9]. For claims that don't contribute to physical harm, such as conspiracy theories, if the claims are proven false by Facebook's fact-checkers, the distribution of the post will be reduced and it will be accompanied by strong warning labels for those who come across it.

Responsibility of Platforms

Third parties such as major social media platforms and government officials are facing pressure to combat the spread of fake news and misinformation. The United States of America aims both to protect the First Amendment right to free speech and to ensure that its people have access to correct information. Until the last couple of years, users have generally borne the responsibility of fact-checking their news and evaluating it for truth. Events such as the 2016 presidential election have shown how fact-checking can sometimes be taken out of the user's hands because of trolls, bots, and the sheer amount of misinformation. There are ethical implications both in allowing the spread of misinformation and in infringing on people's right to free speech. Below is a cross analysis of different third parties' policies regarding fact-checking.

Platforms

Facebook is an online social media and social networking service founded in 2004 by Mark Zuckerberg, who announced in October 2021 that the company would be renamed 'Meta'. Meta is a technology conglomerate whose products include Facebook, Instagram, and WhatsApp. The policies outlined below apply to all Meta-owned applications, but Facebook is at the center of the discussion of fact-checking information.

Twitter is a social networking and microblogging platform founded in 2006 by Jack Dorsey. As of early 2022, its CEO is Parag Agrawal. 80% of Twitter's user base lies outside the U.S.[10]

YouTube is an online video sharing and social media platform owned by Google. It was founded in 2005. As of early 2022, its CEO is Susan Wojcicki.

Fact-Check Policies

Below is a summary of different platforms' attitudes and policies towards fact-checking:[11][12]

| Platform | Misinformation Policy | Examples |
|---|---|---|
| Facebook/Meta | Facebook generally identifies misinformation after it goes viral and after harm has been done. It refers the content to third-party fact-checkers before applying a label to posts. Punishments are threatened for repeat offenders. | October 6th, 2020: Facebook takes down Trump post comparing COVID-19 to the flu. |
| Twitter | There is no comprehensive misinformation policy. Twitter will label or remove manipulated media, or anything intended to misinform or interfere with elections or other civic processes. It categorizes tweets as misleading, disputed, or unverified before fact-checking. Exceptions have been made for key elected officials. | May 27th, 2020: Twitter fact-checks Trump post for the first time. |
| YouTube/Google | YouTube provides information from third-party fact-checkers on specific searches in some countries to give context and help users make their own informed decisions about the videos they watch on YouTube. Specific videos are not fact-checked[13]. | April 28th, 2020: YouTube begins showing fact-check panels in search results in the United States. |

Misinformation Policies

Establishing a political misinformation policy means that platforms acknowledge disinformation when it appears and potentially enforce penalties for content/users that frequently violate their guidelines.

- In 2016, Facebook partnered with a third-party fact-checking platform to implement a remove/reduce/inform policy. This means that ad content that is flagged as false is covered with a disclaimer and followed by a fact-check. Its reach (the total number of people who see the content) is also reduced by Facebook's algorithms. Users who attempt to share or post fact-checked information are shown a notification informing them that the information might not be correct. The scale of Meta’s platforms is currently too large for the remove/reduce/inform policy to be applied comprehensively. Elected officials and certain Facebook-approved candidates are exempt from this policy and have been linked to some of the greatest sources of misinformation on the site. Overall, Facebook has taken a very strong stance that social media shouldn't fact-check political speech[14].

- Twitter has established a fact-checking policy that applies to information that could alter confidence in democratic elections, but does not have a widespread fact-checking program. In May 2020, Twitter fact-checked a Trump tweet for the first time; the tweet concerned mail-in voting. Twitter followed by putting labels on certain of Trump's tweets that violated company policies[15]. It is also working on launching a program that will ask users who try to retweet articles they have not opened and read whether they are sure they want to retweet them[16].

- YouTube also has a fact-checking policy against information that could alter confidence in democratic elections. It has also introduced fact-check panels to debunk popular false claims (e.g. conspiracy theories). Overall, however, this policy has not been enforced.

Hate Speech

Enforcing guidelines on hate speech means removing content that is intended to intimidate or dehumanize individuals or groups, with the aim of reducing real-world violence. All major social media companies have policies against hate speech, but the enforcement and accuracy of those policies are inconsistent across platforms. Algorithms generally do not treat minority groups the same as those in the majority.

- After the 2016 election, Facebook implemented and improved its hate speech policies. Removing white nationalism, militarized social movements, and Holocaust denial from its platform has been a major goal for Facebook. However, as seen in the January 6th, 2021 Capitol insurrection, which was largely planned on Facebook, these policies have not been enforced well.

- Twitter adapted its hate speech policy to include tweets that target individuals or groups based on age, disability, disease, race, or ethnicity. As with Facebook, however, these policies are not well enforced.

- YouTube removed channels run by hate group leaders, but its recommendation algorithm still suggests racist and hateful videos. Studies have shown that YouTube's recommendation algorithms facilitate right-wing radicalization by encouraging users to “go down a rabbit hole” (it is easy to move from mainstream to extreme content in one sitting).

Anonymous Accounts

Reducing the number of anonymous accounts on platforms limits the ability of foreign or other bad actors to spread misinformation. This can take the form of requiring users to confirm their identity.

- Facebook requires authenticity for accounts and flags accounts they detect as fake.

- Twitter does not require accounts to represent humans. In 2014, Twitter revealed that 23 million of its accounts were "bots" and that nearly 45% of them were active Russian accounts[17].

- YouTube does not require accounts to be authentic. Google, however, considers anonymous accounts to be low quality, and they receive a low PageRank.

Recommendation Algorithms

Content recommendation algorithms are used to increase screen time. Making these algorithms transparent shifts more of the responsibility onto users.

- Facebook has provided very little transparency into the algorithms behind its News Feed.

- Twitter has provided very little transparency into the algorithms behind their top search results, ranked tweets, and who to follow.

- Google’s ranking algorithm has been extensively documented. YouTube, however, has provided very little transparency into its recommendation algorithms.

Hacked Content

All platforms have very strict policies banning the publication of hacked materials. Hacked materials are defined as stolen, forged, or manipulated documents.

Ethical Concerns of Fact-Checking

There are many ethical concerns both in fact-checking and in allowing the spread of misinformation on social media platforms.

1. Many claims are not as simple as a true or false answer. Information that is flagged to be fact-checked can be complicated and convoluted, and may not reduce to a simple true or false.

- People often see different things when looking at the same information. People's opinions can be shaped by their political affiliation, religious beliefs, and life experiences, among many other things. The outcome of a fact-check could therefore be affected by the background of the person or team doing the fact-checking.

- For instance, a political claim that is fact-checked by a partisan team might have a different result than one fact-checked by a bi-partisan team.

2. Fact-checking can infringe on social media as a platform that gives people a voice and free expression.

- In an interview with CNBC in 2017, Zuckerberg stated "But overall, including compared to some of the other companies, we try to be more on the side of giving people a voice and free expression."[18]

- Social media companies are not the “arbiter of truth”[19].

- Social media companies are not state actors and their platforms are not public forums, so speech on them is not fully protected by the First Amendment. This means that those who post on social media platforms do not have an unchecked right to free speech there.

- Langdon v. Google, Inc. created the precedent that if an advertisement is shown to be misleading or unlawful, a restriction on that speech is permissible but not mandatory[20].

3. Misinformation can incite violence, cause personal harm, and lead to voter suppression.

- Feiner v. People of State of New York set the precedent that speech that is considered an incitement to riot, and that creates a clear and present danger of causing a disturbance of the peace, is not protected by the First Amendment[21].

- Zuckerberg is firm in his commitment that "no one is allowed to use Facebook to cause violence or harm to themselves or to post misinformation that could lead to voter suppression."[22]

4. Social media companies need to maintain neutrality[23].

- If platforms removed articles because they did not agree with their claims, they would lose a large part of their customer base.

- Many major platforms are so popular because they have a diverse user base.

- Social media companies are a business. They aim to maximize their profits by attracting a large set of users.

Conclusion

To conclude, there are both positive and negative consequences to technology platforms creating and enforcing fact-checking policies rather than relying on users to fact-check their own information. This range of consequences is what has made fact-checking policies an ethical debate. The positive consequence of platforms such as Facebook, Twitter, and YouTube implementing fact-check policies is that users are less likely to be exposed to misinformation and "fake news". This can prevent users from getting trapped in echo chambers and filter bubbles, where they can easily develop extremist views that can become dangerous to both themselves and those around them. The saying "not everything you see on the internet is true" is now truer than ever because of the sheer amount of information online, which also makes it extremely difficult for users to discern what is true and what is not. The negative consequence of major platforms implementing fact-check policies is that users might feel their rights are being infringed upon. Freedom of speech and freedom of the press are values that the United States holds dear and that are crucial to what makes it a free country. People go on social media to be exposed to opposing views and diverse perspectives, and fact-checking reduces that to an extent.

References

1. Comparative Social Media Policy Analysis. Democrats, 27 Aug. 2021. Retrieved January 26, 2022.

2. “Fake News, Misinformation, & Fact-Checking”. Ohio University, 17 Oct. 2019.

3. “Fake News, Misinformation, & Fact-Checking”. Ohio University, 17 Oct. 2019.

4. Timberg, Craig, et al. “Inside Facebook, Jan. 6 Violence Fueled Anger, Regret over Missed Warning Signs”. The Washington Post, 29 Oct. 2021. Retrieved January 26, 2022.

5. Allcott, Hunt, and Matthew Gentzkow. “Social Media and Fake News in the 2016 Election”. Journal of Economic Perspectives, 2017. Retrieved January 26, 2022.

6. O'Sullivan, Donie, et al. “Not Stopping 'Stop the Steal:' Facebook Papers Paint Damning Picture of Company's Role in Insurrection”. CNN, 24 Oct. 2021. Available from: https://www.cnn.com/2021/10/22/business/january-6-insurrection-facebook-papers/index.html. Retrieved January 26, 2022.

7. Timberg, Craig, et al. “Inside Facebook, Jan. 6 Violence Fueled Anger, Regret over Missed Warning Signs”. The Washington Post, 29 Oct. 2021. Retrieved January 26, 2022.

8. Nyilasy, Greg. “Fake News in the Age of COVID-19”. University of Melbourne. Retrieved February 11, 2022.

9. Clegg, Nick. “Combating COVID-19 Information Across our Apps”. Meta, 25 March 2020. Retrieved February 11, 2022.

10. Barbaro, Michael, host. “Jack Dorsey on Twitter’s Mistakes”. The Daily, NYT, 7 Aug. 2020. Available from: https://www.nytimes.com/2020/08/07/podcasts/the-daily/Jack-dorsey-twitter-trump.html. Retrieved January 26, 2022.

11. Rich, Timothy S., et al. “Research Note: Does the Public Support Fact-Checking Social Media? It Depends Whom and How You Ask”. HKS Misinformation Review, 6 Feb. 2021.

12. Comparative Social Media Policy Analysis. Democrats, 27 Aug. 2021. Retrieved January 26, 2022.

13. “See Fact Checks in YouTube Search Results - YouTube Help”. Google.

14. Rich, Timothy S., et al. “Research Note: Does the Public Support Fact-Checking Social Media? It Depends Whom and How You Ask”. HKS Misinformation Review, 6 Feb. 2021.

15. Rich, Timothy S., et al. “Research Note: Does the Public Support Fact-Checking Social Media? It Depends Whom and How You Ask”. HKS Misinformation Review, 6 Feb. 2021.

16. Barbaro, Michael, host. “Jack Dorsey on Twitter’s Mistakes”. The Daily, NYT, 7 Aug. 2020. Retrieved January 26, 2022.

17. Dudley-Nicholson, Jennifer. “The Real Reasons Fake News Spreads on Social Media”. News.com.au, 26 June 2017.

18. Rodriguez, Salvador. “Mark Zuckerberg says social networks should not be fact-checking political speech”. CNBC, May 28, 2021. Retrieved February 11, 2022.

19. Rodriguez, Salvador. “Mark Zuckerberg says social networks should not be fact-checking political speech”. CNBC, May 28, 2021. Retrieved February 11, 2022.

20. Pinkus, Brett. “The Limits of Free Speech in Social Media”. UNT Dallas College of Law, April 26, 2021. Retrieved February 11, 2022.

21. Pinkus, Brett. “The Limits of Free Speech in Social Media”. UNT Dallas College of Law, April 26, 2021. Retrieved February 11, 2022.

22. Rodriguez, Salvador. “Mark Zuckerberg says social networks should not be fact-checking political speech”. CNBC, May 28, 2021. Retrieved February 11, 2022.

23. Davis, Courtney. “The Ethics of Making on the Handling the New York Post ‘October Surprise’”. Markkula Center for Applied Ethics at Santa Clara University, February 5, 2021. Retrieved February 11, 2022.