Facebook newsfeed curation

Facebook presents content to its users through personalized newsfeeds. The newsfeed is the home page that loads when a user logs into the application, and it contains a curated selection of posts and activity from the user's friends and followed pages. The newsfeed was added to the Facebook platform in 2006 and has since grown into the most prominent part of a user’s Facebook experience. Newsfeed curation is the process by which Facebook determines what content to present to users. It combines algorithmic curation with the user’s own curation, and each of these methods contributes to a personalized newsfeed intended to be interesting and engaging to that user. In addition to the personal content in the newsfeed, Facebook also exposes users to popular stories through the Trending section. Ethical controversies have arisen over potential biases among those who determine what content is shown to each user.


History & Importance

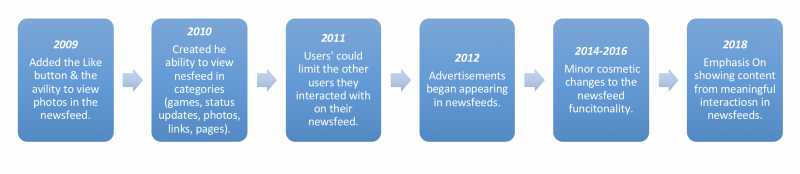

Facebook’s newsfeed model began in 2006 as a way of centralizing the profile updates of a user's friends.[1] In the following years, Facebook continued to update the capabilities, appearance, and purpose of the newsfeed.

Newsfeed Development

Newsfeed curation is the process of determining what information appears, and in what order, when a user logs into their account. Over the course of a day, a user could be exposed to about 1,500 posts, but a range of factors narrows this number down to around 300.[2] This narrowing is done through both algorithmic curation and self-curation.

Algorithmic Curation

Facebook originally used an algorithm called EdgeRank to organize and choose what content should appear on a given user’s newsfeed. The algorithm has since been updated to include more factors in choosing content. There are thousands of factors, but the most prominent are recency, post type (such as a photo, video, or status update), relationship to the poster,[1] and social rating. A post’s social rating combines how similar users have interacted with the post, the number of likes it has received, and the location where it was made. Together these factors produce a score, which is then used to determine which posts are included in a newsfeed. The end result is, in essence, a filter bubble of personalized results containing information that the algorithm deems interesting to the user.
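The ranking step described above can be illustrated with a minimal Python sketch. It is not Facebook's algorithm: the factor names come from the paragraph, but the post-type weights, the 24-hour half-life for recency, the way the social signals are combined, and every field name (`affinity`, `similar_user_engagement`, `location_boost`) are hypothetical choices made only to show how several signals can be folded into one score and used to cut a large pool of candidate posts down to a fixed number.

```python
import math
from dataclasses import dataclass

# Hypothetical weights per post type; Facebook's real values are not public.
TYPE_WEIGHTS = {"photo": 1.3, "video": 1.2, "status": 1.0, "link": 0.9}


@dataclass
class Post:
    post_id: str
    post_type: str                   # e.g. "photo", "video", "status"
    age_hours: float                 # time since the post was made
    affinity: float                  # 0..1, assumed closeness of viewer to poster
    likes: int                       # number of likes on the post
    similar_user_engagement: float   # 0..1, assumed share of similar users who interacted
    location_boost: float            # 0..1, assumed relevance of where it was posted


def social_rating(p: Post) -> float:
    # Combine the three social signals named in the text; log1p damps huge like counts.
    return p.similar_user_engagement + math.log1p(p.likes) / 10 + p.location_boost


def score(p: Post, half_life_hours: float = 24.0) -> float:
    # Recency halves every `half_life_hours` (an assumed decay curve).
    recency = 0.5 ** (p.age_hours / half_life_hours)
    type_weight = TYPE_WEIGHTS.get(p.post_type, 1.0)
    return recency * type_weight * (1 + p.affinity) * (1 + social_rating(p))


def curate(candidates: list[Post], limit: int = 300) -> list[Post]:
    # Rank every eligible post by score and keep only the top `limit`.
    return sorted(candidates, key=score, reverse=True)[:limit]


if __name__ == "__main__":
    fresh_photo = Post("a", "photo", 1, 0.9, 50, 0.5, 0.2)
    old_status = Post("b", "status", 48, 0.1, 2, 0.1, 0.0)
    print([p.post_id for p in curate([old_status, fresh_photo])])  # → ['a', 'b']
```

Multiplying the factors means a post that scores near zero on one signal (for example, a very old post) is pushed down regardless of the others; a weighted sum would be an equally plausible assumption.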

In 2014, Facebook hired a team of editors to assist in the curation process after receiving criticism that important news stories were missing from users’ feeds. These editors were paid to trawl newsfeed pages and compare the content they saw with top stories from a select list of 10 news sources.[3]

Self-Curation

Facebook’s algorithm relies on a set of predetermined factors to establish what content to include in a user’s newsfeed. These factors do not always accurately capture a user’s preferences. For this reason, Facebook has created several tools that let users curate their own newsfeed.

There are five main ways for users to express their preferences through self-curation.[4] The first is to prioritize whom a user wants to see at the top of their newsfeed. Users can prioritize up to 30 friends, so when these friends update their statuses, upload photos, or change information on their timelines, the updates appear at the top of the user's newsfeed. The next two methods are following and unfollowing other users. When a user follows another user, all of that user's posts and profile updates appear in the newsfeed. Conversely, when a user unfollows someone, that person's content no longer appears in the user's newsfeed.

There is also a feature to discover pages that may match a user’s interests. A user can like pages and receive updates from them in their newsfeed; these updates appear in the same format as content from friends. The last method of self-curation is to set preferences for the types of content a user wants to see, such as apps and games.

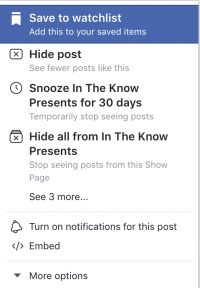

These five methods give Facebook’s algorithm general guidance on how to sort and adapt the content it displays. Since algorithms are not perfect, users can also give feedback on particular posts. If a user comes across a post they do not want to see again, they can click the three dots in the upper right corner of the post and choose to hide that post, snooze the poster, or unfollow the poster. Hiding a post removes it from the user’s newsfeed. Snoozing a friend means the user will not see content from them for 30 days,[5] and unfollowing a friend means the user will no longer get updates from them. A user has no way of knowing whether another user has snoozed or unfollowed them.
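The self-curation controls above can be modeled as a filter applied on top of an already ranked feed. The following Python sketch assumes that ordering; the 30-friend limit and the 30-day snooze come from the text, while the class and function names (`FeedPreferences`, `apply_preferences`) are hypothetical and do not correspond to any Facebook API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

MAX_PRIORITIZED = 30                 # prioritization limit stated in the text
SNOOZE_PERIOD = timedelta(days=30)   # snooze length stated in the text


@dataclass
class FeedItem:
    post_id: str
    author: str


@dataclass
class FeedPreferences:
    # Hypothetical container for one user's self-curation choices.
    prioritized: list[str] = field(default_factory=list)
    unfollowed: set[str] = field(default_factory=set)
    snoozed_until: dict[str, datetime] = field(default_factory=dict)
    hidden_posts: set[str] = field(default_factory=set)

    def prioritize(self, friend: str) -> None:
        if friend in self.prioritized:
            return
        if len(self.prioritized) >= MAX_PRIORITIZED:
            raise ValueError(f"at most {MAX_PRIORITIZED} friends can be prioritized")
        self.prioritized.append(friend)

    def snooze(self, friend: str, now: datetime) -> None:
        # Hide this friend's posts until the snooze period has elapsed.
        self.snoozed_until[friend] = now + SNOOZE_PERIOD


def apply_preferences(ranked: list[FeedItem], prefs: FeedPreferences,
                      now: datetime) -> list[FeedItem]:
    # Drop hidden posts, unfollowed authors, and authors whose snooze is still active.
    visible = [
        item for item in ranked
        if item.post_id not in prefs.hidden_posts
        and item.author not in prefs.unfollowed
        and prefs.snoozed_until.get(item.author, now) <= now
    ]
    # Stable sort: prioritized authors move to the top; otherwise keep the ranked order.
    return sorted(visible, key=lambda item: item.author not in prefs.prioritized)


if __name__ == "__main__":
    now = datetime(2018, 3, 1)
    prefs = FeedPreferences(unfollowed={"carol"}, hidden_posts={"p4"})
    prefs.prioritize("dave")
    prefs.snooze("erin", now)
    ranked = [FeedItem("p1", "alice"), FeedItem("p2", "carol"), FeedItem("p3", "dave"),
              FeedItem("p4", "alice"), FeedItem("p5", "erin")]
    print([i.post_id for i in apply_preferences(ranked, prefs, now)])  # → ['p3', 'p1']
```

Keeping the user's choices separate from the ranking function reflects the division described in this section: the algorithm scores posts, and self-curation then overrides that ordering for the people and posts the user has explicitly flagged.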

Trending

A user’s newsfeed is filled with posts and status updates from their friends and the pages they have liked. A large share of Facebook’s content, however, is news, much of which surfaces through the newsfeed's Trending section. Trending became part of the newsfeed in 2014 to help users discover interesting and relevant content circulating on Facebook.[6]

In 2016, Facebook’s Trending section began to rely on algorithms in order to “cover more topics and make it available to more people globally over time.”[7] The change was intended to make Facebook users aware of a wider range of news topics.

Ethical Concerns

Biased Content

Facebook’s newsfeed is a powerful tool for disseminating both personal content and advertising. With over 1 billion active users,[8] Facebook is considered one of the main sources of news. This reach raises the question of how Facebook should decide what information to display.

The programmers behind Facebook’s newsfeed curation algorithm are responsible for choosing how the algorithm responds to inputs. Philip Brey calls this view the embedded values approach: computers and computer programs are not neutral, because they inherently “promote or demote particular moral values and norms.”[9]

Facebook’s newsfeed curation, particularly in the Trending section, is not neutral: the curation algorithm reflects the moral biases of those who code it. This concern surfaced during the 2016 election season, when former Facebook workers reported that news and announcements about the Republican Party were often left out of the Trending section.[10] The exclusion of conservative news is attributed to the trending team not recognizing it as news, to personal bias, and to the absence of conservative outlets from the list of 10 trusted news sources against which trending stories are compared. Conservative news sources such as Fox or Newsmax.com were excluded from that comparison list. The biases of the trending team stem from its composition: millennials with a liberal, often Ivy League, education. This liberal educational background can shape, even subconsciously, their positions on national news and politics. As long as Facebook continues to hire from this demographic, its top news stories will reflect a skewed view of trending news. This raises moral and ethical dilemmas about the availability of information to Facebook users, since 63% of users consider Facebook to be a news source.[11]

2018 Facebook Hearing

In April 2018, Facebook founder and CEO Mark Zuckerberg was brought before Congress to address concerns about Facebook's privacy and security shortcomings. Lawmakers raised topics including user privacy controls, hiring practices, and the investigation into Russian influence over the 2016 US presidential election. Congress also questioned Zuckerberg on content and advertisement curation, and on Facebook's use of cookies or data from third-party applications to personalize advertisements and bolster security. It was suggested during the hearing that Facebook uses methods that breach user privacy, such as tapping microphones and collecting data from people who do not have Facebook accounts, in order to curate content for an optimal user experience. Zuckerberg denied that Facebook transgresses privacy guidelines and maintained that users are in control of their information.[12]

References

- Manjoo, Farhad. “Can Facebook Fix Its Own Worst Bug?” The New York Times Magazine, 25 April 2017, https://www.nytimes.com/2017/04/25/magazine/can-facebook-fix-its-own-worst-bug.html. Accessed 14 March 2018.

- Luckerson, Victor. “How a controversial feature grew into one of the most influential products on the Internet.” Time Magazine, 9 July 2015, http://time.com/collection-post/3950525/facebook-news-feed-algorithm/. Accessed 13 March 2018.

- Thielman, Sam. “Facebook news selection is in hands of editors not algorithms, documents show.” The Guardian, 12 May 2016, https://www.theguardian.com/technology/2016/may/12/facebook-trending-news-leaked-documents-editor-guidelines. Accessed 13 March 2018.

- Lua, Alfred. “How to Customize Your Facebook News Feed to Maximize Your Productivity.” Buffer Social, 30 May 2017, https://blog.bufferapp.com/customize-my-news-feed. Accessed 12 March 2018.

- Facebook. https://www.facebook.com.

- “Search FYI: An Update to Trending.” Facebook Newsroom, 26 August 2016, https://newsroom.fb.com/news/2016/08/search-fyi-an-update-to-trending/. Accessed 15 March 2018.

- Thielman, Sam. “Facebook fires trending team, and algorithm without humans goes crazy.” The Guardian, 29 August 2016, https://www.theguardian.com/technology/2016/aug/29/facebook-fires-trending-topics-team-algorithm. Accessed 10 March 2018.

- “Our Company: Stats.” Facebook Newsroom, https://newsroom.fb.com/company-info/. Accessed 22 March 2018.

- Brey, Philip. “Values in technology and disclosive computer ethics.” The Cambridge Handbook of Information and Computer Ethics, Chapter 3, p. 42.

- Nunez, Michael. “Former Facebook Workers: We Routinely Suppressed Conservative News.” Gizmodo, 9 May 2016, https://gizmodo.com/former-facebook-workers-we-routinely-suppressed-conser-1775461006. Accessed 15 March 2018.

- Herrman, John, and Mike Isaac. “Conservatives Accuse Facebook of Political Bias.” The New York Times, 10 May 2016, https://www.nytimes.com/2016/05/10/technology/conservatives-accuse-facebook-of-political-bias.html. Accessed 22 March 2018.

- The Washington Post, 11 April 2018, https://www.washingtonpost.com/news/the-switch/wp/2018/04/11/zuckerberg-facebook-hearing-congress-house-testimony/?noredirect=on&utm_term=.706c198b95e7