Ethical issues in Digital Assistants

Digital assistants can make people's daily activities easier by automating tasks. They are built into many of today's technologies; smartphones and smart speakers, for example, use digital voice assistants. An intelligent digital assistant can help with basic tasks such as creating meeting requests, reporting a sports score, and sharing the weather forecast [3]. In the US, 19.7% of adults use a smart speaker, which is one form of digital assistant [3]. However, numerous ethical concerns are tied to these devices [4]. One of the biggest concerns is privacy and who has access to the information the device collects. Other concerns involve the security of these devices, their role in reinforcing gender biases, and the unethical, sometimes dangerous, responses they give to common user questions [4]. Companies developing digital assistants have also been scrutinised for misusing the data the assistants collect.

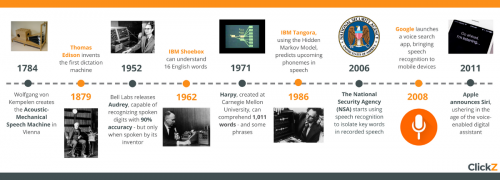

History of Digital Assistants

Humans started communicating with machines in the 1960s. One of the first natural language processing programs, Eliza, was developed by MIT professor Joseph Weizenbaum [5]. In the same decade, IBM developed the Shoebox, a voice-activated calculator that could recognize human speech. The next breakthrough came in the 1970s with “Harpy”, which understood around 1,000 spoken English words [6]. In the following decade, IBM built on the success of Shoebox and introduced “Tangora”, a voice-recognizing typewriter that could recognize around 20,000 English words [6]. IBM also developed the first virtual assistant, Simon. “Simon was a digital speech recognition technology that became a feature of the personal computer with IBM and Phillips” [6]. In later years, the first chatbots became active on the messaging platforms of the time, such as AIM and MSN Messenger. In 2011, Apple integrated a voice digital assistant, Siri, into the iPhone, which turned out to be a major breakthrough in how humans interact with digital assistants [6]. Siri was the first modern digital assistant installed on a smartphone. Google, Amazon, IBM, Microsoft, and other technology companies followed Apple and introduced their own digital assistants to the general public [6]. Amazon released Alexa together with the Echo in 2014, and Microsoft released Cortana in April of the same year [3].

Technical Aspects of Digital Assistants

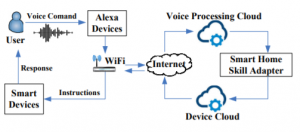

Digital assistants use machine learning algorithms, which are continuously improving [7]. The workings of a digital assistant can be broken down into three steps. The first step converts human speech into text that the computer program understands [8]. This step uses Natural Language Processing and Natural Language Understanding. The second step converts the text into an intent, so that the computer understands exactly what the user means by the voice or text command [8]. This typically requires the digital assistant to be connected to the internet. The third step converts the intent into an action [8]. In this step, the digital assistant sends the user’s request to its database, where it is broken down into smaller commands and compared against similar requests [9]. Once this process is complete, a response is sent back to the digital assistant, which communicates it to the user.

A common issue with digital assistants is inaccurate results. For example, if a user asks a digital assistant to play a certain genre of music and it plays a different genre, the user will generally tell it to stop immediately. This teaches the device that the response it received from its servers might be wrong. The feedback is passed on to the servers, where the machine learning algorithms are improved so that they respond more accurately to the user's commands [9].
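The three steps described above can be sketched in code. This is a minimal illustration under loud assumptions, not how any commercial assistant is implemented: the speech-to-text step is a stub that assumes the audio has already been transcribed, intent detection uses plain keyword matching in place of a trained language model, and the names `transcribe`, `detect_intent`, `perform_action`, `handle`, and `INTENT_KEYWORDS` are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical keyword table standing in for a trained intent model.
INTENT_KEYWORDS = {
    "get_weather": ("weather", "forecast"),
    "set_alarm": ("alarm", "wake me"),
    "play_music": ("play", "music"),
}


@dataclass
class Intent:
    name: str
    utterance: str


def transcribe(audio: str) -> str:
    """Step 1 (stub): speech to text.

    A real assistant runs speech recognition on audio. Here the "audio"
    is assumed to be transcribed already and is only normalized.
    """
    return audio.strip().lower()


def detect_intent(text: str) -> Intent:
    """Step 2: map the text to an intent using keyword matching."""
    for name, keywords in INTENT_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return Intent(name, text)
    return Intent("unknown", text)


def perform_action(intent: Intent) -> str:
    """Step 3: turn the intent into an action and a response for the user."""
    responses = {
        "get_weather": "Fetching the weather forecast.",
        "set_alarm": "Setting an alarm.",
        "play_music": "Playing music.",
    }
    return responses.get(intent.name, "Sorry, I did not understand that.")


def handle(audio: str) -> str:
    """Run all three steps in order."""
    return perform_action(detect_intent(transcribe(audio)))


if __name__ == "__main__":
    print(handle("What is the weather forecast today?"))
    print(handle("Tell me a joke"))
```

In a real assistant, steps two and three would normally run on the vendor's servers rather than on the device, which is why an internet connection is typically needed.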

Uses of a Digital Assistant

Digital assistants offer the user a variety of uses. Although each digital assistant may have its own set of functions and services, some uses that appear to be common across all of them are listed below:

- Find information online, such as weather details, the latest news, and sports scores

- Make reminders and set alarms

- Send messages and make calls

- Play music through streaming services such as Spotify and Apple Music

- Control smart devices such as smart lights and locks

- Provide translation of languages on the go

- Make restaurant reservations and book rides with services like Uber and Lyft

- Read notifications and allow you to respond to them if needed

- Act as a digital encyclopedia by looking up answers to questions

Ethical Concerns

Privacy

Most digital assistants are activated by voice. For example, the Amazon Echo, which runs Amazon Alexa, always listens for the word “Alexa”. This means that these devices are constantly listening to their surroundings, and they are often activated by words that sound similar to their wake words. “This situation raises privacy concerns for end-users who may have private communication that addresses their financial, their emotional, or their health issues recorded without their consent. Unintended voice recordings can also be the result of malicious voice commands.” [12]

Furthermore, a digital assistant is essentially a client that sends requests to a cloud server, which carries out the request for the user [13]. The companies that make these digital assistants have access to users' recordings [13]. Although users can delete these recordings from the cloud, doing so risks degrading their experience with the assistant. French sociologist Antonio A. Casilli states that it raises a significant privacy concern when digital assistant companies use their customers' recordings to further train their assistants and improve the accuracy of their results [14]. People are generally not aware of this practice. There are also newspaper reports claiming that Google employees listen to voice recordings picked up by the Google Home [15]. These recordings include conversations that should not have been recorded in the first place and might contain sensitive information [15]. Google has since issued many statements assuring users that it does not listen to their conversations and that recordings are made only to improve its assistants and the user experience [16].

Burger King took advantage of the Google Home wake word in a 2017 advertising campaign. The advertisement featured the line “OK Google, what is the Whopper burger?” [17]. The “OK Google” triggered Google Assistant, which read a description of the hamburger’s ingredients aloud from Wikipedia. When people became aware of what Burger King was trying to do, the Wikipedia entry for “Whopper burger” was changed to include inappropriate statements, such as listing cyanide as one of the ingredients [17]. Google responded quickly by making sure that the advertisement’s recording no longer triggered the device [18]. This incident is one example of how third parties can transform a private home into a public space by triggering digital assistants. The manipulation of these devices raises concerns for the rights, safety, and privacy of users.

In a video posted on YouTube, Alexa was asked “Are you connected to the CIA?”, which caused Alexa to shut off without answering the question [19]. This made many individuals worry that their information was being sent to others, potentially the CIA. The concern also stemmed from the fact that Alexa, like other digital assistants, is constantly listening to conversations [12]. Customers are questioning the ethical implications of a device that stores their personal conversations. To settle these controversies and doubts, an Amazon spokesperson told Forbes that "Amazon will not release customer information without a valid and binding legal demand properly served on us.” [20]

There is also a significant privacy concern for people who are merely in the vicinity of the digital assistant and are not its users [21]. For instance, a digital assistant may record conversations taking place in the background of the legitimate user, which may include personal details such as business dealings and private conversations.

Former employees and current executives of some of the big tech companies developing digital assistants have raised concerns about the data collected by these companies and whether collecting and using this data is ethically permissible. For instance, a former Amazon employee told the Guardian “Having worked at Amazon, and having seen how they used people’s data, I knew I couldn’t trust them”, after Alexa seemed to be repeating previous requests that had already been completed [22].

Security

Digital assistants can pose a serious security risk to their users. By their nature, they must be activated by voice commands. As a result, a TV, music player, or radio can cause the device to become active. Once active, they record everything and constantly communicate with their servers [23]. This can lead to the device eavesdropping and carrying out instructions based on what it hears, even though it was not directly spoken to. In one example, Amazon’s Alexa recorded part of a family's private conversation and sent it to a random person on their contact list [24]. Amazon released a statement following the incident saying that it was due to misinterpreted speech. Researchers at Northeastern University have found that some digital assistants activate by mistake up to 19 times each day [25].

Hackers and other malicious actors have exploited security flaws in digital assistants to gain valuable information. For example, they could place orders, send malicious messages, or leak credit card information. Routers and unsecured wireless networks are avenues that hackers have exploited to gain access to digital assistants, which allows them to carry out malicious activities and poses a security threat for users [26]. A newer technique uses inaudible ultrasonic waves to hack into digital assistants. These attacks are called “surfing attacks” [27]. Some digital assistants have introduced an authentication method that allows only registered users to activate the assistant. To get around it, hackers developed a “method called ‘phoneme morphing’, where the device is tricked into thinking a modified recording of an attacker’s voice is the device’s registered user” [13].

Digital assistants can be connected to other smart devices in the home, such as smart bulbs, alarms, locks, and security cameras. This may pose an additional security risk, as a hacker may gain access to these devices as well. For example, many smart locks can be locked and unlocked through an Alexa or a Google Home just by providing a PIN [28]. This PIN can be compromised in numerous ways, which could endanger the physical security of the user. Additionally, researchers have found a way to hack digital assistants using laser light [29]. The light can be directed at the device's microphone, and the device can mistake it for sound. Researchers at the University of Michigan successfully controlled a Google Home device from 230 feet away and were able to open doors and switch off the lights of a house, among other activities [29]. Although this attack requires many specific conditions to succeed, it raises a multitude of security concerns for the user.

Another security controversy arose when a 6-year-old ordered a $160 dollhouse and 4 pounds of cookies using Amazon’s Alexa. A TV news report on the story inadvertently set off some viewers’ Echo devices, which in turn tried to order dollhouses through Alexa [30]. Many Alexa owners feel that the devices may be a threat to their privacy because of their constant listening. People were also concerned that anyone with access to the device could maliciously place orders on Amazon. In response, an Amazon spokesperson told Fox News that security measures were in place to prevent accidental and malicious orders through Alexa [31]. For instance, “they must then confirm the purchase with a ‘yes’ response to purchase via voice. If users ask Alexa to order something by accident, they can simply say ‘no’ when asked to confirm.” [31] She also mentioned that users can manage their shopping settings in the Alexa app, such as turning off voice purchasing or requiring a confirmation code before every order [31].
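The safeguards described above (a spoken confirmation, an optional confirmation code, and the ability to turn voice purchasing off) can be illustrated with a small sketch. It is not Amazon's implementation and does not use any real Alexa API; `PurchaseSettings`, `confirm_purchase`, and the example code `"4321"` are hypothetical names and values chosen purely for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PurchaseSettings:
    """Hypothetical shopping settings, modeled on the options described above."""
    voice_purchasing_enabled: bool = True
    confirmation_code: Optional[str] = None  # None means no code is required


def confirm_purchase(settings: PurchaseSettings, reply: str,
                     spoken_code: Optional[str] = None) -> bool:
    """Return True only if every configured safeguard is satisfied."""
    if not settings.voice_purchasing_enabled:
        return False  # voice purchasing is turned off entirely
    if reply.strip().lower() != "yes":
        return False  # anything other than an explicit "yes" cancels the order
    if settings.confirmation_code is not None:
        # A code is required, so the spoken code must match exactly.
        return spoken_code == settings.confirmation_code
    return True


if __name__ == "__main__":
    settings = PurchaseSettings(confirmation_code="4321")
    print(confirm_purchase(settings, "yes"))          # no code spoken
    print(confirm_purchase(settings, "yes", "4321"))  # correct code spoken
```

In this sketch, a spoken "yes" alone cannot tell the account owner apart from a child or a television broadcast, which is the gap the optional confirmation code is meant to narrow.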

Reinforcing Gender Biases

“Women are expected to assume traditional ‘feminine’ qualities to be liked, but must simultaneously take on—and be penalized for—prescriptively ‘masculine’ qualities, like assertiveness, to be promoted. As a result, women are more likely to both offer and be asked to perform extra work, particularly administrative work—and these ‘non-promotable tasks’ are expected of women but deemed optional for men.” [32] Furthermore, women are perceived as caregivers, which is why there “seems to be a tendency to associate feminine voices with warm and tender figures.” [33]

As a result, digital assistants, especially voice assistants, have a female-sounding voice set as the default, which reinforces the gender biases in today’s society. Among the four big technology companies that make these digital assistants, Amazon's Alexa, Apple’s Siri, Microsoft’s Cortana, and Google’s Google Assistant all use a female-sounding voice. A research report by researchers at Stanford University says that users treat digital assistants according to the gender roles and stereotypes associated with their voices, which are either male or female sounding [34]. This also reflects how male-dominated teams view and think about women, often associating women with being subservient.

Research conducted at Harvard University says that every time we order a female-sounding digital assistant to carry out a task, be it ordering something online or booking a flight ticket, the stereotype of a woman as an assistant is strengthened [35].

Thus, by using feminine voices, digital assistants reinforce gender stereotypes. After receiving backlash from the general public, companies producing digital assistants have taken steps to promote diversity and inclusion. Amazon and Apple now give users the option to select the type of voice they would like their digital assistants to have [36]. This also keeps a default setting from influencing the selection.

Misuse of Data

The data collected by the manufacturers of digital assistants can be used for various purposes without the user's knowledge. One of the main concerns raised is that the sensitive data available to digital assistants can be accessed by the manufacturers or by third parties in order to provide certain functionalities [12]. Users generally do not know with whom this data is being shared, which is a breach of data-sharing privacy [21].

User data shared with malicious third parties can be used for criminal purposes, which raises what is termed criminal-intent privacy [21]. "Whatever data is collected by the VAs, the users are the data-owners and, therefore, have the right to decide whether they want to keep them for further processing or delete them. In the absence of such a scenario, it gives rise to user-rights privacy. Many times, before using a service, a user must agree to certain terms and conditions, often not realizing the extent to which their personal information can be collected and utilized, thereby breaching the user-consent privacy." [21]

Audio data is extremely valuable for inferring information. Various characteristics of the user, such as their body measurements, age, gender, personality traits, and physical health, can be inferred from their audio alone. “Additional sounds produced by the end-users (e.g., coughing and laughing) and background noises (e.g., pets or vehicles) provide further information." [37] This places more responsibility on the companies that collect this data to respect their customers' privacy, and not to share the data with third parties or use it in ways that negatively impact users.

Unethical Responses by Digital Assistants

Over the years, digital assistants have given unethical responses to some common user questions and carried out unwanted actions.

Kristin Livdahl’s 10-year-old daughter was practising physical challenges, such as rolling over while holding a foot or a shoe. After several of these, she asked Amazon’s Alexa for another challenge, and Alexa suggested the “Penny Challenge” [38]. The challenge involves plugging a charger halfway into an outlet so that the prongs are exposed, and then holding a penny against the exposed prongs. This often gives the person an electric shock, and it can also start fires and cause serious, lifelong injuries. The child was stopped immediately by her parents [39]. Amazon quickly issued an apology and made changes to make sure such things do not happen in the future [40]. In another incident, Alexa responded to a child who asked it to “play digger digger” with crude, abusive language relating to pornography [41]. These two incidents, together with the dollhouse incident in which the digital assistant ordered an expensive dollhouse, raised concerns among parents about having these devices in households with children.

In a domestic violence incident in New Mexico, Eduardo Barros asked his girlfriend whether she had called the police [42]. He asked her, “Did you call the sheriff?” The Amazon Alexa device in the same room interpreted this differently and called 911 [42]. After seeing the 911 call, Barros pushed his girlfriend to the ground and kicked her [43]. When the police arrived soon after, they arrested Barros and charged him with beating and threatening to kill his girlfriend [43]. CNN asked Amazon how this 911 call was possible, and a spokesperson responded that the Echo does not have the built-in capability to call the police [42]. She said that an Amazon Echo can only be used to call another Echo device or an Alexa app [42]. The device does not have the functionality to place a call over the public switched network, which is what happened in this incident [42]. However, the sheriff’s spokesperson said that the 911 caller could be heard saying, “Alexa call 911”. While in this scenario a device was able to help save a life, the device was reportedly not asked to call 911 but still ended up doing so based on the conversation it heard in the room. This raises the concern that the security functions of these devices are not strong enough. It also shows that digital assistants can misinterpret what is being said and carry out unwanted actions, which may have severe consequences.

References

- [1] Tulshan A.S., Dhage S.N. (2019) Survey on Virtual Assistant: Google Assistant, Siri, Cortana, Alexa. In: Thampi S., Marques O., Krishnan S., Li KC., Ciuonzo D., Kolekar M. (eds) Advances in Signal Processing and Intelligent Recognition Systems. SIRS 2018. Communications in Computer and Information Science, vol 968. Springer, Singapore. https://doi.org/10.1007/978-981-13-5758-9_17

- [2] Computer Hope. (2021, December 30). What is the Digital Assistant? Digital Assistant. https://www.computerhope.com/jargon/d/digital-assistant.htm

- [3] Ramos, D. (2018). Voice Assistants: How Artificial Intelligence Assistants Are Changing Our Lives Every Day. Smartsheet. https://www.smartsheet.com/voice-assistants-artificial-intelligence

- [4] Wilson, R., & Iftimie, I. (2021). Virtual assistants and privacy: An anticipatory ethical analysis. 2021 IEEE International Symposium on Technology and Society (ISTAS). https://doi.org/10.1109/istas52410.2021.9629164

- [5] Epstein, J., & Klinkenberg, W. (2001). From Eliza to Internet: a brief history of computerized assessment. Computers in Human Behavior, 17(3), 295–314. https://doi.org/10.1016/s0747-5632(01)00004-8

- [6] Afshar, V. (2021b, April 7). AI-powered virtual assistants and the future of work. ZDNet. https://www.zdnet.com/article/ai-powered-virtual-assistants-and-future-of-work/

- [7] Digital Assistants – Ultimate Guide. (n.d.). SYKES Digital. https://www.sykes.com/digital/learn-with-us/guides/digital-assistants-ultimate-guide/

- [8] How do digital voice assistants (e.g. Alexa, Siri) work? | USC Marshall. (2017, October 17). USC Marshall. https://www.marshall.usc.edu/blog/how-do-digital-voice-assistants-eg-alexa-siri-work

- [9] How Can Voice Assistants Understand Us? (2020, February 12). Wonderopolis. https://www.wonderopolis.org/wonder/how-can-voice-assistants-understand-us

- [10] Hoy, M. B. (2018). Alexa, Siri, Cortana, and More: An Introduction to Voice Assistants. Medical Reference Services Quarterly, 37(1), 81–88. https://doi.org/10.1080/02763869.2018.1404391

- [11] Smith, D. (2022, January 13). Roundup of every Alexa command you can give your Amazon Echo device now. CNET. https://www.cnet.com/home/smart-home/roundup-of-every-alexa-command-you-can-give-your-amazon-echo-device-now/

- [12] Hernández Acosta, L., & Reinhardt, D. (2022). A survey on privacy issues and solutions for Voice-controlled Digital Assistants. Pervasive and Mobile Computing, 80, 101523. https://doi.org/10.1016/j.pmcj.2021.101523

- [13] Bolton, T., Dargahi, T., Belguith, S., Al-Rakhami, M. S., & Sodhro, A. H. (2021). On the Security and Privacy Challenges of Virtual Assistants. Sensors, 21(7), 2312. https://doi.org/10.3390/s21072312

- [14] I. (2019, February 22). From personal data to artificial intelligence: who benefits from our clicking? I’MTech. https://imtech.wp.imt.fr/en/2019/02/22/from-personal-data-to-ai/

- [15] Flanders News. (2019, July 10). Google employees are eavesdropping, even in your living room, VRT NWS has discovered. Vrtnws.Be. https://www.vrt.be/vrtnws/en/2019/07/10/google-employees-are-eavesdropping-even-in-flemish-living-rooms/

- [16] Smith, D. (2020, August 9). Google’s privacy controls on recordings change. What that means for your Google Home. CNET. https://www.cnet.com/home/smart-home/googles-privacy-controls-on-recordings-changes-what-that-means-for-your-google-home/

- [17] Price, M. (2021, June 9). Watching Burger King’s Newest Ad Puts Your Privacy in Jeopardy. Fortune. https://fortune.com/2017/04/22/burger-king-google-home-ad-issue-wikipedia-whopper-commercial/

- [18] https://www.nytimes.com/2017/04/12/business/burger-king-tv-ad-google-home.html

- [19] https://fortune.com/2017/03/10/amazon-alexa-cia/

- [20] Brewster, T. (2017, February 24). Amazon Argues Alexa Speech Protected By First Amendment In Murder Trial Fight. Forbes. https://www.forbes.com/sites/thomasbrewster/2017/02/23/amazon-echo-alexa-murder-trial-first-amendment-rights/

- [21] Pal, D., Arpnikanondt, C., Razzaque, M. A., & Funilkul, S. (2020). To Trust or Not-Trust: Privacy Issues With Voice Assistants. IT Professional, 22(5), 46–53. https://doi.org/10.1109/mitp.2019.2958914

- [22] https://www.theguardian.com/technology/2019/oct/09/alexa-are-you-invading-my-privacy-the-dark-side-of-our-voice-assistants

- [23] A. (2021, May 27). Cybersecurity: How Safe Are Voice Assistants? Kiuwan. https://www.kiuwan.com/safety-voice-assistants/

- [24] Kim, E. (2018, May 25). Amazon Echo secretly recorded a family’s conversation and sent it to a random person on their contact list. CNBC. https://www.cnbc.com/2018/05/24/amazon-echo-recorded-conversation-sent-to-random-person-report.html

- [25] Cuthbertson, A. (2020, February 24). Smart speakers could accidentally record users up to 19 times per day, study reveals. The Independent. https://www.independent.co.uk/life-style/gadgets-and-tech/news/smart-speaker-recording-alexa-google-home-secret-a9354106.html

- [26] Lemos, B. R., Preimesberger, C., eWEEK Editors, Kerravala, Z., & Rash, W. (2021, February 2). How Voice-Activated Assistants Pose Security Threats in Home, Office. eWEEK. https://www.eweek.com/security/five-ways-digital-assistants-pose-security-threats-in-home-office/

- [27] https://source.wustl.edu/2020/02/surfing-attack-hacks-siri-google-with-ultrasonic-waves/

- [28] Wroclawski, B. D. (2018, May 10). Amazon Alexa Can Now Unlock Smart Locks. Consumer Reports. https://www.consumerreports.org/smart-locks/amazon-alexa-now-unlocks-smart-locks/

- [29] Telford, T. (2019, November 5). Hackers can hijack your iPhone or smart speaker with a simple laser pointer — even from outside your home. Washington Post. https://www.washingtonpost.com/business/2019/11/05/hackers-can-hijack-your-iphone-or-smart-speaker-with-simple-laser-pointer-even-outside-your-home/

- [30] Jennifer, J. (2017, January 5). 6-year-old Brooke Neitzel orders expensive dollhouse, cookies using Amazon’s voice-activated Echo Dot. CBS News. https://www.cbsnews.com/news/6-year-old-brooke-neitzel-orders-dollhouse-cookies-with-amazon-echo-dot-alexa/

- [31] https://www.foxnews.com/tech/tv-news-report-prompts-viewers-amazon-echo-devices-to-order-unwanted-dollhouses

- [32] https://www.brookings.edu/research/how-ai-bots-and-voice-assistants-reinforce-gender-bias/

- [33] Piper, Allison. (n.d.). Stereotyping femininity in disembodied virtual assistants. ProQuest Dissertations Publishing.

- [34] The Media Equation: How People Treat Computers, Television, and New Media Like Real People and Places (CSLI Lecture Notes S) by Byron Reeves (2003–01-29). (2022). Center for the Study of Language and Inf.

- [35] Stop Giving Digital Assistants Female Voices. (2016, June 23). The New Republic. https://newrepublic.com/article/134560/stop-giving-digital-assistants-female-voices

- [36] Griffin, A. (2021, April 1). Apple stop using ‘female’ or ‘male’ voice by default and allow users to choose how voice assistant speaks. The Independent. https://www.independent.co.uk/life-style/gadgets-and-tech/apple-siri-voice-change-default-b1825465.html

- [37] https://link.springer.com/chapter/10.1007%2F978-3-030-42504-3_16#citeas

- [38] BBC News. (2021, December 28). Alexa tells 10-year-old girl to touch live plug with penny. https://www.bbc.com/news/technology-59810383

- [39] Shead, S. (2021, December 29). Amazon’s Alexa assistant told a child to do a potentially lethal challenge. CNBC. https://www.cnbc.com/2021/12/29/amazons-alexa-told-a-child-to-do-a-potentially-lethal-challenge.html

- [40] Pundir, R. (2021, December 29). Alexa Endangers 10-Year-Old By Suggesting THIS Dangerous Challenge. The Blast. https://theblast.com/149614/amazons-alexa-messes-up-asks-10-year-old-to-do-dangerous-penny-challenge/

- [41] Michael, T. (2016, December 31). Shocking moment Amazon’s Alexa starts spouting crude porno messages to toddler. . . The Sun. https://www.thesun.co.uk/news/2509172/amazon-alexa-crude-porno-messages-toddler/

- [42] Hassan, C. C. (2017, July 11). Voice-activated device called 911 during attack, authorities say. CNN. https://edition.cnn.com/2017/07/10/us/alexa-calls-police-trnd/index.html

- [43] Collman, A. (2019, July 12). New Mexico authorities credited an Amazon Echo with calling 911 when a woman said her boyfriend was beating her — but Amazon said it wasn’t possible. Insider. https://www.insider.com/amazon-echo-911-call-new-mexico-domestic-disturbance-2019-7