Deepfake Detectors

A deepfake is a piece of fabricated media that has been altered or generated by deep learning algorithms, specifically deep neural networks (DNNs).[1] Deepfake technology has existed since the late 1990s and has been used with varying intentions in a wide range of disciplines and contexts, including, but not limited to, film, audio, pornography, and politics.[1] For about twenty years after its inception, deepfake technology was used almost exclusively in academic settings to study artificial intelligence and machine learning.[1] After the term "deepfake" was coined in 2017,[2] deepfake media became widely popular and a regular presence in mainstream media.[1] With so much attention, many began to worry about the applications of deepfake technology and looked for ways to prevent malicious uses.[3] As the production of deepfake media has increased rapidly, interest in deepfake detection technology has grown with it.[3]

How Deepfake Technology Works

Generative Adversarial Networks (GANs)

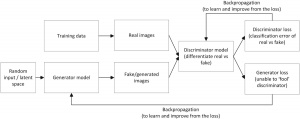

Deepfake technology applies Generative Adversarial Networks (GANs) to alter and manipulate various types of media, including photos, text, audio, and video.[4] As defined by researchers Andrei Kwok and Sharon Koh, “GANs is a machine learning innovation that simultaneously trains two competing models (neural networks), comprising a generator and a discriminator to synthesize images.” The generator attempts to mimic real content and produce realistic but fake images and videos, while the discriminator tries to tell the generated content apart from real content. Feedback from the discriminator pushes the generator to improve until the fake content is difficult to distinguish from the real content.[4]
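The adversarial training described above is usually framed as a two-player game: the discriminator D is trained to output a high probability for real samples and a low one for generated samples, while the generator G is trained to make D's output on generated samples high. The minimal Python sketch below is illustrative and not taken from the cited sources. It only computes the two losses from discriminator output probabilities (no networks are built or trained), and it assumes the commonly used "non-saturating" form of the generator loss. The function names and the `EPS` constant are hypothetical.

```python
import math
from typing import Sequence

# Hypothetical small constant that keeps log() finite when D outputs exactly 0 or 1.
EPS = 1e-12


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Binary cross-entropy loss for the discriminator.

    d_real: D's probabilities that real samples are real (D wants these near 1).
    d_fake: D's probabilities that generated samples are real (D wants these near 0).
    """
    real_term = -sum(math.log(max(p, EPS)) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(max(1.0 - p, EPS)) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: Sequence[float]) -> float:
    """Non-saturating generator loss: G is rewarded when D calls its output real."""
    return -sum(math.log(max(p, EPS)) for p in d_fake) / len(d_fake)


# A confident, correct discriminator has a low loss; the generator's loss is high.
print(discriminator_loss([0.99, 0.95], [0.02, 0.05]))
print(generator_loss([0.02, 0.05]))
```

When the discriminator can no longer separate real from fake and outputs 0.5 for every sample, the discriminator loss settles at 2 ln 2 and the generator loss at ln 2, which corresponds to the "difficult to distinguish" end state described above.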

Facial Manipulation and Swapping

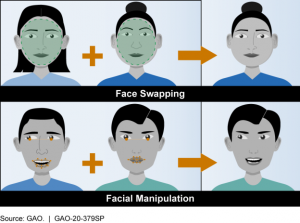

While it is unclear why deepfakes were originally invented, one of their first uses was overdubbing video so that a speaker's lip movements matched new audio.[1] Deepfake video synthesis techniques commonly fall into two main categories: face swapping and facial reenactment (also called facial manipulation).[5] In facial reenactment, the expressions of a person in newly filmed footage are imitated by the face in previously existing footage; in face swapping, a face is transformed and morphed onto another person's body in previously existing footage.[6] The technology relies on machine learning: the computer is given real images or video of a subject so that it can learn to create a fake version.[7] The algorithms that process this image data use spatial and material information to learn how to map facial movements from one person or image to another.[8]
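Before any learned model maps one face onto another, face-swap and reenactment pipelines commonly detect facial landmarks (eye corners, nose tip, mouth corners) and geometrically align the two faces. The sketch below illustrates only that alignment step, not the deep learning part. It assumes the landmark coordinates have already been extracted by some detector, fits a least-squares affine transform (some tools restrict this to a similarity transform), and uses hypothetical function names and sample coordinates.

```python
import numpy as np


def estimate_affine(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """Least-squares 2x3 affine matrix A such that dst ≈ A @ [x, y, 1]^T.

    src, dst: (N, 2) arrays of corresponding landmark coordinates, N >= 3.
    """
    if src.shape != dst.shape or src.shape[0] < 3:
        raise ValueError("need at least 3 matching (x, y) landmark pairs")
    ones = np.ones((src.shape[0], 1))
    x_h = np.hstack([src, ones])  # (N, 3) homogeneous source points
    # Solve x_h @ A.T ≈ dst for the (3, 2) matrix A.T in the least-squares sense.
    a_t, *_ = np.linalg.lstsq(x_h, dst, rcond=None)
    return a_t.T  # (2, 3)


def apply_affine(a: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Map (N, 2) points through the 2x3 affine matrix a."""
    ones = np.ones((pts.shape[0], 1))
    return np.hstack([pts, ones]) @ a.T


# Hypothetical landmarks: eyes, nose tip, and mouth corners of a source face.
source_landmarks = np.array(
    [[30.0, 40.0], [70.0, 40.0], [50.0, 60.0], [38.0, 80.0], [62.0, 80.0]]
)
# Pretend the target face is rotated, scaled, and shifted relative to the source.
target_landmarks = apply_affine(
    np.array([[1.1, -0.2, 5.0], [0.2, 1.1, -3.0]]), source_landmarks
)
print(np.round(estimate_affine(source_landmarks, target_landmarks), 3))
```

With five non-collinear landmarks and an exact affine relationship, least squares recovers the transform exactly; with real, noisy detections it returns the best fit, which is one reason pipelines use many landmarks rather than the minimum of three.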

Deepfake Applications

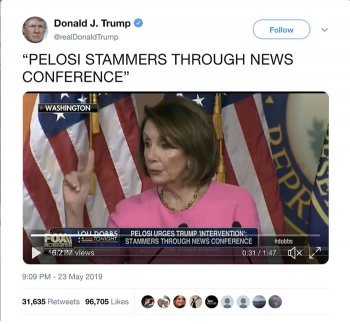

The mass spread of deepfakes can distort democratic discourse and erode trust in institutions, both of which are highly relevant to democratic elections. Deepfakes are a form of disinformation; however, campaign speech law does little to address the threat of deepfakes because election law is shaped by a compelling concern for protecting First Amendment rights.[9] This is especially true of deepfake parodies, since they are generally seen as a form of free expression. The law tolerates false campaign speech rather than risk infringing on free speech, out of fear that regulating campaign speech would itself become politicized.[10] Regardless of the legal position, deepfakes can still undermine trust in electoral outcomes: along with other kinds of false speech, they distort campaign results and threaten public trust in those results.

For instance, deepfakes could be used to deter people from voting by threatening to release deepfaked pornographic images. Even if it were unclear how many people had been deterred, once voters became aware of the tactic, trust in the integrity of election results could be eroded.[11] In another scenario, a deepfake is used to instill mistrust by falsely suggesting that a candidate cheated in a public debate, thereby calling into question the legitimacy of the political process. Overall, reputational harm and misattribution distort how voters perceive and understand candidates. Even if an individual viewer is aware of the manipulation, he or she may believe that others are not, which could further degrade that viewer's trust in the ability of others to make well-informed voting decisions.[12] Once voters are uncertain whether their peers are well informed, trust in democratic processes is undermined.

Advantages of Deepfake Technology

Although deepfakes are known for their harmful and negative impacts, researcher David Brumley has identified several beneficial uses. He notes that they can be used in language learning programs to generate sample sentences that help users learn different languages.[13] They could also be used in advertising to construct narratives and promote positive messages without using real people.[14] Even though such an advertisement would be fake, it could be perceived as real and still be effective with its viewers. Deepfakes have created artistic and innovative opportunities in the video game, robotics, and virtual reality industries, and they have been especially useful to the movie industry. Deepfake technology has helped filmmakers keep actors in their roles when those actors are unavailable, preserving a cohesive storyline.[15] Deepfakes have been used in blockbuster film series such as Star Wars and Fast and Furious, and the industry continues to find new creative applications for them.

Deepfake Detectors

As deepfake technology has become more accessible, it has become increasingly important to find ways to detect deepfake videos and images. Companies such as Facebook, Microsoft, and Google were among the first to begin research into deepfake detection. Researchers from the USC Information Sciences Institute developed a tool that focuses on subtle face and head movements and detects deepfake videos with 96% accuracy.[16] Microsoft started using a video authenticator technology that looks for artifacts such as greyscale pixels at the boundaries where the deepfaked face was pasted onto the original face. Microsoft used a large amount of data (including some from a Facebook face-swap database) to create the video authenticator.[17] Some technologies use what researchers call a soft biometric signature,[18] the characteristic mannerisms and speech patterns of a particular person, to show that a video does not actually depict that person. Because a person's mannerisms and facial expressions are very hard to mimic digitally, detection based on soft biometric signatures is currently quite accurate, with a reported detection rate of about 92%.[19] Deepfake detection is still a young field, and as deepfakes grow in popularity, the need for accurate deepfake detectors will grow as well.
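The soft biometric idea can be illustrated with a small sketch in the spirit of the approach described above: if a person's head and facial movements co-vary in characteristic ways, the pattern of correlations between those movements can serve as a signature. This is a simplified, hypothetical illustration, not the published method. It assumes per-frame movement measurements (for example head rotation or expression intensities) have already been extracted, and it replaces the trained classifier used in real systems with a simple distance-to-average threshold. The function names, the threshold, and the decision rule are all assumptions.

```python
import numpy as np


def soft_biometric_signature(features: np.ndarray) -> np.ndarray:
    """Pairwise Pearson correlations between per-frame feature tracks.

    features: (T, F) array with T video frames and F tracked measurements.
    Returns a vector of length F * (F - 1) / 2, one correlation per pair of tracks.
    """
    corr = np.corrcoef(features, rowvar=False)  # (F, F); columns are variables
    rows, cols = np.triu_indices(features.shape[1], k=1)  # upper triangle, no diagonal
    return corr[rows, cols]


def looks_like_person(clip_sig: np.ndarray, reference_sigs, threshold: float) -> bool:
    """Hypothetical decision rule: accept the clip as the person if its signature
    lies within `threshold` (Euclidean distance) of the mean signature computed
    from authentic reference clips of that person."""
    centroid = np.mean(reference_sigs, axis=0)
    return float(np.linalg.norm(clip_sig - centroid)) <= threshold


# Hypothetical data: 300 frames of 4 tracked measurements per clip.
rng = np.random.default_rng(0)
reference_clips = [rng.normal(size=(300, 4)) for _ in range(5)]
reference_sigs = [soft_biometric_signature(c) for c in reference_clips]
suspect_sig = soft_biometric_signature(rng.normal(size=(300, 4)))
print(looks_like_person(suspect_sig, reference_sigs, threshold=0.5))
```

A real detector would be trained on many authentic clips of the specific person and evaluated on known fakes to choose its decision boundary; the fixed threshold here only shows where that decision would be made.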

Ethical Concerns

Because open source tools for creating deepfakes are widely available, lowering the barriers in cost and technical expertise,[5] one of the most prominent threats posed by deepfake technology is to the trustworthiness of online information.[20] Since faces are intrinsically associated with identity, high-quality deepfake media can create the illusion of a person's presence and activities that never actually occurred. For example, deepfake technology can produce a humorous, pornographic, or political video of a person saying anything the creator chooses, without the consent of the person whose image and voice are involved.[21] This can lead to serious political, social, financial, and legal consequences.[21] While most deepfake scandals and incidents have centered on political leaders, actresses, comedians, and social media influencers, there is growing concern that this technology will be used for bullying, fake video evidence in courts, political sabotage, terrorist propaganda, blackmail, market manipulation, and fake news.[21] Nonetheless, researcher Mika Westerlund suggests that “...while deepfakes are a significant threat to our society, political system and business, they can be combated via legislation and regulation, corporate policies and voluntary action, education and training, as well as the development of technology for deepfake detection, content authentication, and deepfake prevention.”[21] In response to these worries, several companies, such as Facebook and Twitter, have banned deepfakes and made efforts to remove them from their platforms.[22]

Shortcomings in Deepfake Detection

In the future, concerns about deepfake technology may extend beyond widely known celebrities and political figures to private citizens, who could be targeted and may lack the funds or resources to clear their names.[23] Corporate scams also pose a threat, for example by imitating a CEO asking employees to send money.[24] Deepfake technology can also enable identity theft, which can in turn be used to commit financial fraud and other crimes.[25] While there has been tremendous progress in detecting deepfake media, detection technology remains far behind the technology used to create deepfakes.[26] Recognizing this, some companies that produce deepfake technology have issued ethics statements in order to be more transparent about the potential for misuse of their technology.[26] In one of these ethics statements, the deepfake technology company Descript admits that "the quality of generative media could increase at a rate that outpaces technology designed to detect it."[26] In that case, detection technology would continually lag behind generation. Descript also acknowledges that despite all the research underway to help detect deepfake media, it remains unclear how this issue will be resolved.[26] To minimize the spread and impact of deepfake media on society and individuals, consumers are urged to be critical of every piece of media they consume and to verify any information they find against other reliable sources before sharing it.[3][26]

References

- [1] Arnold. (2020, November 25). Deepfake History: When Was Deepfake Technology Invented? Deepfake Now. Retrieved April 1, 2021.

- [2] Somers, M. (2020, July 21). "Deepfakes, explained". MIT Sloan School of Management. Retrieved April 2, 2021.

- [3] Matt. (2020, December). "Detect DeepFakes: How to counteract misinformation created by AI". MIT Media Lab. Retrieved April 2, 2021.

- [4] Kwok, A. O., & Koh, S. G. (2020). Deepfake: A social construction of technology perspective. Current Issues in Tourism, 1-5. doi:10.1080/13683500.2020.1738357

- [5] Diakopoulos, N., & Johnson, D. (2020). Anticipating and Addressing the Ethical Implications of Deepfakes in the Context of Elections. SSRN Electronic Journal. doi:10.2139/ssrn.3474183

- [6] Howard, K. (2020, October 13). “Deconstructing Deepfakes-How Do They Work and What Are the Risks?” WatchBlog: Official Blog of the U.S. Government Accountability Office. blog.gao.gov/2020/10/20/deconstructing-deepfakes-how-do-they-work-and-what-are-the-risks/

- [7] NBC News. (2018, October 26). Deep Fakes: How They're Made And How They Can Be Detected | Mach | NBC News [Video]. YouTube. www.youtube.com/watch?v=C8FO0P2a3dA

- [8] NBC News. (2018, October 26). Deep Fakes: How They're Made And How They Can Be Detected | Mach | NBC News [Video]. YouTube. www.youtube.com/watch?v=C8FO0P2a3dA

- [9] Hasen, R. L. (2019). Deep Fakes, Bots, and Siloed Justices: American Election Law in a Post-Truth World. St. Louis University Law Review.

- [10] Marshall, W. P. (2004). False Campaign Speech and the First Amendment. University of Pennsylvania Law Review, 153.

- [11] Daniels, G. R. (2009). Voter Deception. Indiana Law Review, 43.

- [12] Chesney, R., & Citron, D. K. (2019). Deep Fakes: A Looming Challenge for Privacy, Democracy, and National Security. California Law Review, 107.

- [13] Johnson, A., & Grumbling, E. (2019). Implications of Artificial Intelligence for Cybersecurity: Proceedings of a Workshop (pp. 54–60). The National Academies Press. https://www.nap.edu/read/25488/chapter/7#60

- [14] Johnson, A., & Grumbling, E. (2019). Implications of Artificial Intelligence for Cybersecurity: Proceedings of a Workshop (pp. 54–60). The National Academies Press. https://www.nap.edu/read/25488/chapter/7#60

- [15] Howard, K. (2020, October 13). “Deconstructing Deepfakes-How Do They Work and What Are the Risks?” WatchBlog: Official Blog of the U.S. Government Accountability Office. blog.gao.gov/2020/10/20/deconstructing-deepfakes-how-do-they-work-and-what-are-the-risks/

- [16] Agarwal, S., & Farid, H. (2019). Protecting World Leaders Against Deep Fakes. The Computer Vision Foundation.

- [17] Kelion, L. (2020). Deepfake Detector Tool Unveiled by Microsoft.

- [18] Knight, W. (2019). A New Deepfake Detection Tool. MIT Technology Review.

- [19] Manke, K. (2019, June 20). "New technology helps media detect 'deepfakes'". University of California. Retrieved April 2, 2021.

- [20] Li, Y., Yang, X., Sun, P., Qi, H., & Lyu, S. (2020). Celeb-DF: A Large-Scale Challenging Dataset for DeepFake Forensics. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). doi:10.1109/cvpr42600.2020.00327

- [21] Westerlund, M. (2019). The Emergence of Deepfake Technology: A Review. Technology Innovation Management Review, 9(11), 39-52. doi:10.22215/timreview/1282

- [22] Banerjee, P. (2020, February 5). "After Facebook, Twitter moves to ban deepfakes on its platform". mint. Retrieved April 2, 2021.

- [23] Chen, A. (2019). Three Threats Posed by Deepfakes that Technology Won't Solve. MIT Technology Review.

- [24] Damiani, J. (2019, September 3). "A Voice Deepfake Was Used To Scam A CEO Out Of $243,000". Forbes. Retrieved April 2, 2021.

- [25] Johansen, A. G. (2020). Deepfakes: What they are and why they’re threatening. NortonLifeLock.

- [26] Descript. (2021). "Descript Ethics Statement". Descript. Retrieved April 2, 2021.