Deepfake

Deepfakes are artificially produced media that use a form of artificial intelligence called deep learning to create images, video, or audio of events that never happened. Media has been faked in the past with tools such as Photoshop, but the machine learning techniques behind deepfakes make them particularly deceptive. The use of deepfakes to alter what people appear to say in videos, to create pornographic content, and to commit financial fraud has prompted efforts to detect them more easily and to limit their use. Although deepfakes are best known for these harmful applications, they can also be used beneficially, for example in film editing and education.


Development

Overview

The development of deepfake technology grew out of progress in the fields of machine learning and computer vision. Computer vision enables computers to detect, identify, and process the contents of images, in a way loosely analogous to human vision, and then produce appropriate output. Machine learning gives systems the ability to learn and improve from data without being explicitly programmed for each task. The computer vision component of a deepfake system allows it to detect a human face and then reanimate it so that it appears to be somebody else. The machine learning component lets the system improve by measuring the errors in its output and adjusting to reduce them, so its results become more convincing as training continues. As the technology has developed, these two components have combined to produce today's deepfakes, which can be so convincing that it is sometimes impossible to tell whether they are real or fake.

Key People and Programs

The first software to fully automate facial reanimation was the Video Rewrite program, published in 1997. The program used machine learning techniques to make connections between the sounds produced by a video's subject and the shape of the subject's face.[1] This was a groundbreaking advance at the time, as no earlier program had successfully implemented facial reanimation in this way.

Although this technology was first established in 1997, the term deepfake was not coined until 2017. The term came from a Reddit user named "deepfakes", who was well known on the site for creating and sharing many of these edited videos. While he made many humorous videos, such as ones placing the face of Nicolas Cage onto other actors and actresses, he also created a significant number of videos in which the faces of celebrities were swapped onto the bodies of actresses in pornographic videos.[2]

Since 2017, multiple applications have been created and released to the general public. These applications, such as FakeApp, Faceswap, and DeepFaceLab, allow anyone to create deepfakes of whomever they choose. This is possible because the apps simply take in a gallery of photos that the user provides and use those images to create a deepfake. As a result, the user does not need any experience in software development or computer vision to create a deepfake; they only need to provide enough photos to the application. This simplicity and ease of use has increased the number of deepfakes being created, which amplifies their impact, both positive and negative.

Ethical Issues

Pornographic Uses

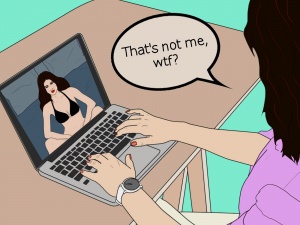

A large majority of the deepfakes currently on the internet are pornographic. As of September 2019, of the 15,000 deepfake videos found online, 96% were pornographic and 99% of those mapped faces of celebrities onto porn stars.[3] With the development of applications such as FakeApp, Faceswap, and DeepFaceLab, which give anyone the ability to create convincing deepfakes, people have begun to create fake pornographic videos of people they know in real life, such as friends, casual acquaintances, exes, and classmates, without their permission. This is a serious ethical issue, as these fake yet seemingly authentic videos can have a drastic effect on the lives of those portrayed in them. For private individuals in particular, such videos can ruin their reputation, their standing in the community, and ultimately their lives.

Fraudulent Uses

Financial fraud schemes have been carried out using audio deepfakes, which allow scammers to mimic the voices of trusted individuals. In March 2019, the chief of a UK subsidiary of a German energy firm paid nearly £200,000 into a Hungarian bank account after being phoned by a fraudster who mimicked the German CEO’s voice.[3] Separately, the cybersecurity firm Symantec has said it came across at least three cases of deepfake voice fraud used to trick companies into sending money to a fraudulent account, one of which resulted in losses of millions of dollars.[4]

Alteration of Video Transcripts

Deepfakes are also used to create fake news. In June 2019, a deepfake video was posted that falsely portrays Facebook CEO Mark Zuckerberg as saying, "Imagine this for a second: One man, with total control of billions of people's stolen data, all their secrets, their lives, their futures."[5] The video prompted widespread questioning of the control Facebook has over its users' data, even though the video was fake. Another example that received widespread attention is an altered video of President Barack Obama, circulated by researchers at the University of Washington to illustrate the harm that could result from deepfakes used with ill intent.[6] Videos like these are just the tip of the iceberg. In the future, an AI-produced video depicting a president saying or doing something inflammatory could sway an election, trigger violence in the streets, or spark an international armed conflict.[7]

Positive Uses

Film Editing

Deepfakes have been used in many positive ways in film and video. For example, in 2019 a UK-based health charity used deepfake technology to have David Beckham deliver an anti-malaria message in nine languages.[8] Similar face-replacement technology was used in the movie Furious 7. After Paul Walker died during production, the film crew digitally placed his face onto the body of his brother, who acted in his place to complete his remaining scenes.

Educational Applications

There are many possibilities for using deepfake technology in education, such as bringing historical figures back to life to make schooling more interactive and engaging. The company CereProc has used deepfake technology to create realistic audio of the speech that John F. Kennedy was scheduled to deliver in Dallas on the day he was assassinated in 1963. CereProc did so by analyzing audio of 831 speeches given by Kennedy, which it then split into 116,177 phonetic units.[6] The result was an audio clip that sounded as though JFK had actually delivered the speech.

Detection and Prevention

Because deepfakes can be used so deceptively, efforts to detect them are already under way. The US Defense Advanced Research Projects Agency (DARPA) has developed tools for catching deepfakes through a program called Media Forensics.[9] These tools look for subtle cues that a video is a deepfake, such as unnatural eye blinking, odd head movements, and abnormal eye color.

More recently, researchers from UC Berkeley and the University of Southern California have used existing tools to extract the face and head movements of individuals, then applied machine learning to learn the movements that are characteristic of each person. For example, the way somebody nods after saying a particular word, or the way they tilt their head between sentences, was not modeled by deepfake algorithms at the time. In testing, this method detected deepfakes with 92% accuracy.[7]

Although detection tools are becoming more accurate, deepfake systems can also be trained to evade forensic tools. As both technologies evolve, the contest between deepfake forgery and deepfake detection is likely to continue.
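The idea of learning a person's characteristic movements can be illustrated with a short Python sketch. This is a minimal illustration under stated assumptions, not the researchers' actual method: it assumes each clip has already been reduced to per-frame measurements (for example mouth opening, nod angle, and head tilt) by some face-tracking tool, summarizes each clip by the correlation between every pair of measurements, and flags a clip whose pattern strays too far from the average pattern of known-authentic clips of the same person. The class `MannerismProfile`, the chosen features, the distance threshold, and the `margin` and `min_tolerance` parameters are all hypothetical.

```python
import math
from itertools import combinations


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def clip_signature(frames):
    """Summarize a clip as the correlation between every pair of tracked features.

    `frames` is a list of per-frame feature lists, e.g. [mouth_open, nod, tilt];
    which features to track is an assumption of this sketch.
    """
    columns = list(zip(*frames))  # one sequence per feature
    return [pearson(columns[i], columns[j])
            for i, j in combinations(range(len(columns)), 2)]


class MannerismProfile:
    """Hypothetical per-person model built from known-authentic clips."""

    def __init__(self, authentic_clips, margin=1.5, min_tolerance=0.2):
        signatures = [clip_signature(clip) for clip in authentic_clips]
        # Average correlation pattern across the authentic clips.
        self.mean = [sum(values) / len(values) for values in zip(*signatures)]
        # Accept clips somewhat farther from the mean than any training clip was,
        # with a floor so a very consistent training set is not overly strict.
        spread = max(self._distance(sig) for sig in signatures)
        self.threshold = max(margin * spread, min_tolerance)

    def _distance(self, signature):
        # Euclidean distance between a clip's signature and the average pattern.
        return math.sqrt(sum((a - b) ** 2 for a, b in zip(signature, self.mean)))

    def looks_authentic(self, frames):
        """True if the clip's movement pattern matches this person's profile."""
        return self._distance(clip_signature(frames)) <= self.threshold
```

Correlations are used instead of raw measurements because they capture how a person's movements relate to one another (for instance, nodding whenever the mouth opens), which is the kind of personal habit described above, while staying insensitive to overall scale such as the person's distance from the camera.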

References

- [1] Bregler, C., Covell, M., & Slaney, M. (1997). Video Rewrite: Driving Visual Speech with Audio. Proceedings of the 24th Annual Conference on Computer Graphics and Interactive Techniques, 353–360.

- [2] Cole, S. (2018, January 24). We Are Truly Fucked: Everyone Is Making AI-Generated Fake Porn Now. Retrieved from https://www.vice.com/en_us/article/bjye8a/reddit-fake-porn-app-daisy-ridley

- [3] Sample, I. (2020, January 13). What are deepfakes – and how can you spot them? Retrieved from https://www.theguardian.com/technology/2020/jan/13/what-are-deepfakes-and-how-can-you-spot-them

- [4] Statt, N. (2019, September 5). Thieves are now using AI deepfakes to trick companies into sending them money. Retrieved from https://www.theverge.com/2019/9/5/20851248/deepfakes-ai-fake-audio-phone-calls-thieves-trick-companies-stealing-money

- [5] Metz, R., & O'Sullivan, D. (2019, June 12). A deepfake video of Mark Zuckerberg presents a new challenge for Facebook. Retrieved from https://www.cnn.com/2019/06/11/tech/zuckerberg-deepfake/index.html

- [6] Kalmykov, M. (2019, November 12). Positive Applications for Deepfake Technology. Retrieved March 26, 2020, from https://hackernoon.com/the-light-side-of-deepfakes-how-the-technology-can-be-used-for-good-4hr32pp

- [7] Knight, W. (2019, June 21). A new deepfake detection tool should keep world leaders safe-for now. Retrieved March 26, 2020, from https://www.technologyreview.com/s/613846/a-new-deepfake-detection-tool-should-keep-world-leaders-safefor-now/

- [8] Chandler, S. (2020, March 9). Why Deepfakes Are A Net Positive For Humanity. Retrieved March 26, 2020, from https://www.forbes.com/sites/simonchandler/2020/03/09/why-deepfakes-are-a-net-positive-for-humanity/#4da445602f84

- [9] Knight, W. (2018, August 9). The Defense Department has produced the first tools for catching deepfakes. Retrieved March 26, 2020, from https://www.technologyreview.com/s/611726/the-defense-department-has-produced-the-first-tools-for-catching-deepfakes/