Dark Patterns

Dark Patterns are design strategies used in websites and applications to steer users into actions they might not otherwise have taken.[1] Such actions include buying a product that was automatically added to their shopping cart, signing up for a subscription service, or posting a social media status without explicit permission.

Dark Patterns may hide information or reduce the visibility of an opt-out option in order to increase a company’s revenue or gain new users. They are widely considered the “dark side” of user experience (UX) design, the process of making a product usable, accessible, and pleasurable for customers, and refer primarily to design choices that may harm users for the benefit of the company or business.[2]

History

The name Dark Patterns was coined in 2010 by London-based UX designer Harry Brignull.[3] He created darkpatterns.org to publicly shame websites that employ Dark Patterns and to raise awareness about these practices.

In addition to the examples of Dark Patterns listed on the website, Brignull uses Twitter to let the public submit Dark Patterns by posting a screenshot and tagging @darkpatterns or using the hashtag #darkpattern.

Types of Dark Patterns

As of February 2017, there are eleven different types of Dark Patterns. Many of the listed examples combine two or more Dark Patterns.

Bait and Switch

Bait and switch occurs when a user sets out to do one thing, but a different, undesirable thing happens instead.

An example is Microsoft’s use of pop-up prompts asking users to upgrade to the Windows 10 operating system. Over time, these pop-ups became increasingly forceful and began employing dark patterns such as the bait and switch. The “X” in the upper right corner of the pop-up window, which typically means close or cancel, was changed so that clicking it started the Windows 10 update even though the user had not intended to upgrade.[4]

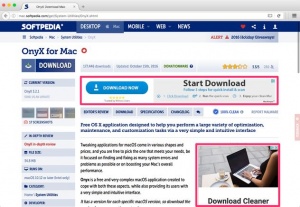

Disguised Ads

Advertisements are disguised to look like part of the website or application, typically as part of navigation or content, to encourage users to click on them.

An example is Softpedia, a software download site that earns revenue from advertising. Advertisements on this site often look like download buttons, causing users to click on the ads instead of the link for what they actually wanted to download.[5]

Forced Continuity

Forced continuity is when a free trial with a service ends and a user’s credit card is charged without a reminder. In some cases, it might be hard to cancel the membership.

An example is Affinion Group, an international loyalty program that works on customer relations for businesses. When buying a train ticket through Trainline (a partner ecommerce business), users are confronted with a disguised ad at the top of the confirmation page with a large “Continue” button, fooling users into clicking it. They are then taken to an external website that tries to convince them to provide their payment information in exchange for a voucher off their purchase. In doing so, the user is actually signing up for a Complete Savings membership, which is not clearly explained on the page. Users who do sign up may not realize it is a subscription service until the charges appear on their bank statements.[6]

Friend Spam

The website or application asks for a user’s email or social media permissions to find a user’s friends and sends the user’s contacts a message.

For example, when signing up for a LinkedIn account, the website encourages users to grant LinkedIn access to their email account contacts to grow their network on LinkedIn. If a user accepts, LinkedIn sends emails to all of a user’s contacts claiming to be from the user.[7]

Hidden Costs

The hidden costs pattern typically occurs in the checkout process, where some costs are not shown upfront. A user may find unexpected charges, such as delivery fees or taxes, at the very end of the checkout process, just before confirming an order. Because these costs appear only at the end, a user who has already invested a lot of time in the checkout process is less inclined to look for another service that sells the product.

For example, ProFlowers, a flower retailer in the United States, has a six-step checkout process. When a user adds something to their shopping cart, the cost of that item is upfront. After going through the checkout process and getting past the fifth step of entering payment information, the last step before confirming the order displays delivery and care and handling costs that were not mentioned prior to that step.[8]

Misdirection

Misdirection draws a user’s attention toward one part of the page or interface in order to distract from another part.

For example, the Australian low-cost airline jetstar.com pre-selects an airplane seat for the customer by default during the flight booking process, at a cost of $5. A user can skip seat selection at no extra cost, but the skip option is hidden at the bottom of the page, misdirecting the customer into thinking they should choose a seat.[9]

Price Comparison Prevention

The price comparison prevention Dark Pattern makes it hard for a user to compare the price of one item with another, and therefore harder to make an informed decision. This is common with online retailers that sell packaged products without showing the price per weight, making it hard to work out the unit price of items within a bundle.

An example is sainsburys.co.uk, an online grocery service. The company hides the price per weight, so users cannot work out whether it is cheaper to buy loose items or the packaged/bundled item.[10]
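The comparison that this pattern obscures is simple arithmetic once the weights are known. The following is a minimal sketch in Python, using hypothetical prices and weights that are not taken from any retailer, of how a unit price lets a shopper compare a loose item against a packaged one. The function name `unit_price` is an illustrative assumption.

```python
def unit_price(price: float, weight_kg: float) -> float:
    """Return the price per kilogram for an item."""
    if weight_kg <= 0:
        raise ValueError("weight_kg must be positive")
    return price / weight_kg


# Hypothetical figures for illustration only.
loose = unit_price(price=0.90, weight_kg=0.5)   # loose produce
bundle = unit_price(price=2.00, weight_kg=1.0)  # pre-packed bag

# Without a displayed price per weight, the shopper has to do this step by hand.
cheaper = "loose" if loose < bundle else "bundle"
print(f"loose: {loose:.2f}/kg, bundle: {bundle:.2f}/kg, cheaper: {cheaper}")
```

When a site omits the weight or the price per weight, one of the two inputs to this calculation is missing, which is what makes the comparison impractical.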

Privacy Zuckering

Privacy zuckering is a design pattern that gets users to publicly share more information about themselves than they intend to. It is named after Facebook CEO Mark Zuckerberg because older versions of Facebook made it easy for users to overshare their data by making privacy settings hard to find or access.

Now, this pattern typically occurs in the small print of a service’s Terms and Conditions, which gives the service permission to sell a user’s personal data, such as physical and mental health information, to data brokers. Data brokers then buy and resell the information to third parties.[11]

Roach Motel

The roach motel Dark Pattern makes it easy for a user to get into a particular situation but difficult to get out of it later.

An example is a ticket sales site like Ticketmaster, which by default sneaks a magazine subscription into a user’s shopping cart. If a user doesn’t notice this and proceeds, they are automatically charged. To get a rebate and unsubscribe, the user has to download a form and send the request by post.[12]

Sneak into Basket

The Dark Pattern “sneak into basket,” also known as “negative option billing” and “inertia selling,” appears when a website sneaks an additional item into a user’s shopping cart. This occurs most often via an opt-out radio button or checkbox on a preceding page.

An example of this Dark Pattern is GoDaddy.com, a domain name hosting site. When a user buys a domain name bundle (e.g. getting 3 domains and therefore saving 69%), the site automatically adds privacy protection at $7.99 per domain per year. When the user proceeds to checkout, it is revealed that two years of domain name registration for all purchased domains have been added to the basket.[13]

Trick Questions

The trick questions Dark Pattern occurs when a user is asked a question that at first glance seems to ask one thing, but upon closer reading means something else entirely. This often occurs when registering or signing up for a service.

An example is Currys PC World’s registration form, which presents two checkboxes: one that says “Please do not send me details of products and offers from Currys.co.uk” and, below that, “Please send me details of products and offers from third party organisations...” If the user doesn’t read carefully, they may check both boxes, assuming both are meant to unsubscribe them.[14]
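The effect of mixing an opt-out checkbox with an opt-in checkbox can be sketched in a few lines of Python. This is an illustration of the logic described above, not the retailer’s actual implementation; the class, field, and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MarketingChoices:
    # Hypothetical field names. Box 1 is phrased as an opt-OUT:
    # checked means "do not send".
    do_not_send_retailer_offers: bool
    # Box 2 is phrased as an opt-IN: checked means "send".
    send_third_party_offers: bool


def resulting_consent(choices: MarketingChoices) -> dict[str, bool]:
    """Translate the two checkbox states into what the user will receive."""
    return {
        "retailer_offers": not choices.do_not_send_retailer_offers,
        "third_party_offers": choices.send_third_party_offers,
    }


# A user who ticks both boxes, believing each one unsubscribes them.
print(resulting_consent(MarketingChoices(True, True)))
```

Because the two boxes have opposite polarity, ticking both opts the user out of the retailer’s offers but into third-party offers, which is the opposite of what a hurried reader expects.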

Ethical Implications

Misinformation (Floridi 78-79)

Trust

See Also

External Links

References

- [1] https://darkpatterns.org/

- [2] https://www.usertesting.com/blog/2015/10/01/dark-patterns-the-sinister-side-of-ux/

- [3] https://arstechnica.com/security/2016/07/dark-patterns-are-designed-to-trick-you-and-theyre-all-over-the-web/

- [4] https://darkpatterns.org/types-of-dark-pattern/bait-and-switch

- [5] https://darkpatterns.org/types-of-dark-pattern/disguised-ads

- [6] https://darkpatterns.org/types-of-dark-pattern/forced-continuity

- [7] https://darkpatterns.org/types-of-dark-pattern/friend-spam

- [8] https://darkpatterns.org/types-of-dark-pattern/hidden-costs

- [9] https://darkpatterns.org/types-of-dark-pattern/misdirection

- [10] https://darkpatterns.org/types-of-dark-pattern/price-comparison-prevention

- [11] https://darkpatterns.org/types-of-dark-pattern/privacy-zuckering

- [12] https://darkpatterns.org/types-of-dark-pattern/roach-motel

- [13] https://darkpatterns.org/types-of-dark-pattern/sneak-into-basket

- [14] https://darkpatterns.org/types-of-dark-pattern/trick-questions