Automatic gender recognition

Automatic gender recognition (AGR) is the use of computer algorithms to attempt to identify a person’s gender based on pictures, video, or audio recordings. Although it is a relatively new form of artificial intelligence, it has been praised for its potential to improve consumer marketing techniques tailored to individual users. At the same time, AGR has received a great deal of skepticism, because the algorithmic methods it relies on have a documented history of misidentifying and misgendering users, especially members of already marginalized communities such as transgender and non-binary people and people of color. Concerns have also been raised about user privacy and about the fact that, in most applications, users cannot know how AGR has gendered them or why. These issues have made AGR one of the most contested and controversial forms of artificial intelligence to date.


Background

Automatic gender recognition works by using machine learning to infer a user's gender. Through processes like computer vision and facial recognition, algorithms analyze pictures, video, and audio recordings, looking for key features such as facial hair, makeup, or the tone of a person’s voice, and compare them to previously collected data in which each example has already been labeled with a gender[1]. If the user exhibits enough characteristics that match previously recorded data of a specific gender, that user is labeled as that gender. For example, if many of the data points labeled 'male' have beards, and the user being labeled has a beard, the AGR system will likely classify that user as 'male'.
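As a rough illustration of the matching process described above, the following Python sketch uses a k-nearest-neighbour majority vote, one simple way of comparing a user to labeled examples. This technique is an assumption chosen for clarity, not the method used by any particular AGR product. The three binary features (has_beard, wears_makeup, low_voice), the training data, and the function names `hamming` and `classify` are all hypothetical. Because the only labels available are "male" and "female", the classifier returns one of those two values for every possible input.

```python
from collections import Counter

# Hypothetical, hand-made training data. Each example is a tuple of binary
# features (has_beard, wears_makeup, low_voice) paired with a gender label.
# Only two labels exist, mirroring the binary systems described in the text;
# real AGR systems learn their features from images or audio instead.
TRAINING_DATA = [
    ((1, 0, 1), "male"),
    ((1, 0, 0), "male"),
    ((0, 0, 1), "male"),
    ((0, 1, 0), "female"),
    ((0, 1, 1), "female"),
    ((0, 0, 0), "female"),
]


def hamming(a, b):
    """Count how many features differ between two feature tuples."""
    return sum(x != y for x, y in zip(a, b))


def classify(features, k=3):
    """Label a user by majority vote among the k most similar training examples."""
    # Sort the labeled examples by how few features they differ on.
    neighbours = sorted(TRAINING_DATA, key=lambda ex: hamming(ex[0], features))[:k]
    # Count the labels of the closest examples and return the most common one.
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


print(classify((1, 0, 0)))  # a bearded user with no makeup
```

With this toy data, the bearded user in the usage line is classified as "male", matching the beard example above. Because the label set is fixed to two values, there is no input for which the sketch can output any other gender, which is the structural limitation discussed under Transgender and non-binary people.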

Much like other forms of artificial intelligence, automatic gender recognition is increasingly being used to try to improve the user experience of interactive technology. Its primary use is in advertising for both online and offline shopping, where a user's inferred gender helps build up a user persona of what that user might like or want. In addition to gender, user personas might include age, race, where a user is from, and more.

Targeted Marketing

The primary current use of automatic gender recognition is targeted marketing. If a company or platform can determine a user's gender, it can use that information to show the user specific advertisements deemed to be popular with that gender. For example, at a restaurant in Norway, an advertising billboard crashed and revealed that it was using AGR to show advertisements for salad to women and pizza to men[2]. This type of targeted advertising is also common on social media, where platforms often ask users for their gender when creating a profile and then use that data to send them specific, personalized advertisements[3].

Ethical Issues

As with many new technologies, automatic gender recognition raises a number of ethical issues, largely centered on breaches of user privacy and the misgendering of users.

Misgendering

To misgender a person is to refer to them as a gender that does not match their gender identity, usually through pronouns. For example, a user who identifies as male and is referred to as “she” is being misgendered.

Transgender and non-binary people

Automatic gender recognition places two groups at particular risk: transgender (trans) people, whose gender identity differs from the sex they were assigned at birth, and non-binary people, whose gender identity exists outside of the gender binary.

The gender binary is the classification of gender as either male or female, and the belief that the sex a person is assigned at birth will align with the societally accepted corresponding gender identity, e.g. a person born with male genitalia will identify as a man[4].

Although inclusivity of all genders has increased in recent years, many companies and platforms still follow only the gender binary, including most applications of automatic gender recognition. This leaves trans users at a much higher risk of being misgendered, and users whose gender does not exist among the given options, as is the case for non-binary users, will necessarily be misgendered.

Studies have shown that misgendering of trans people increases feelings of social exclusion and oppression, creating an overall negative effect on their mental and physical well-being[1].

Whereas trans and non-binary people previously had to contend with being misgendered only in person, they must now also contend with it online, including in cases such as advertising, where they may not be able to tell how they are being gendered.

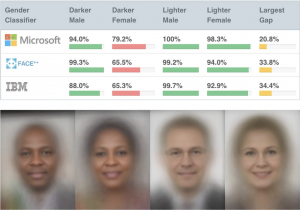

People of color

Foundational components of automatic gender recognition, such as computer vision and facial recognition, have been found to frequently mislabel the gender of people of color, especially women of color. One study, "Gender Shades", conducted by MIT computer scientist Joy Buolamwini, found that the commercial gender classification services it tested labeled lighter-skinned individuals correctly more often than darker-skinned individuals, and males correctly more often than females[5]. None of the services tested provided gender options outside of the gender binary. The same study found that, for women, the darker the skin tone, the closer the chance of being correctly gendered came to a coin toss[5].

This type of gender and racial bias in facial recognition can perpetuate longstanding stereotypes of darker-skinned people as more masculine. The historical Sapphire stereotype paints black women as aggressive, angry, and masculine[6]. Studies have shown that when black women are portrayed as masculine, the focus is on stereotypically negative aspects of masculinity, such as anger, rather than stereotypically positive ones, such as intelligence[7]. These stereotypes stem from a long history of oppression and exclusion of black women, and can lead black women to internalize them in harmful ways. Misgendering by automatic gender recognition can be seen as a further reinforcement of these negative stereotypes, one that carries them into the online world.

Privacy

Another major concern regarding automatic gender recognition is privacy. Many users go online to escape aspects of their offline lives, and having a computer assume their gender can harmfully reinforce something they may be trying to escape in the real world. This type of "informational privacy" is what philosophy professor David Shoemaker describes as the “control over the access to and presentation of information about one’s self-identity.”[8] Lacking this kind of control over what information a given algorithm knows about a user can be considered a breach of that privacy, and can be dangerous for users.

Automatic gender recognition may also be a breach of privacy from the viewpoint of technology philosopher Luciano Floridi, who states that to have privacy is to live “without having records that mummify your personal identity forever, taking away from you the power to form and mould who you are and can be.”[9] This can be especially important for transgender users, who may have been classified online in the past as a gender that is now incorrect.


References

- [1] Hamidi, Foad, and Stacy M. Branham. “Gender Recognition or Gender Reductionism?: The Social Implications of Embedded Gender Recognition Systems.” Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Association for Computing Machinery, 1 Apr. 2018, dl.acm.org/doi/10.1145/3173574.3173582.

- [2] Turton, William. “A Restaurant Used Facial Recognition to Show Men Ads for Pizza and Women Ads for Salad.” The Outline, 12 May 2017, theoutline.com/post/1528/this-pizza-billboard-used-facial-recognition-tech-to-show-women-ads-for-salad?zd=1&zi=kcmponzy.

- [3] Bivens, Rena, and Oliver L. Haimson. “Baking Gender Into Social Media Design: How Platforms Shape Categories for Users and Advertisers.” Social Media + Society, Oct. 2016, doi:10.1177/2056305116672486.

- [4] Newman, Tim. “Sex and Gender: Meanings, Definition, Identity, and Expression.” Medical News Today, MediLexicon International, 7 Feb. 2018, www.medicalnewstoday.com/articles/232363.

- [5] Buolamwini, Joy. “Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification.” Gender Shades, 2018, gendershades.org.

- [6] “Popular and Pervasive Stereotypes of African Americans.” National Museum of African American History and Culture, 19 July 2019, nmaahc.si.edu/blog-post/popular-and-pervasive-stereotypes-african-americans.

- [7] Kwate, Naa Oyo A., and Shatema Threadcraft. “Perceiving the Black Female Body: Race and Gender in Police Constructions of Body Weight.” Race and Social Problems, vol. 7, no. 3, 2015, pp. 213–226, doi:10.1007/s12552-015-9152-7.

- [8] Shoemaker, David W. “Self-Exposure and Exposure of the Self: Informational Privacy and the Presentation of Identity.” Ethics and Information Technology, vol. 12, no. 1, 2009, pp. 3–15, doi:10.1007/s10676-009-9186-x.

- [9] Floridi, Luciano. The 4th Revolution: How the Infosphere Is Reshaping Human Reality. Oxford University Press, 2016, p. 110.