Automated Resume Screening


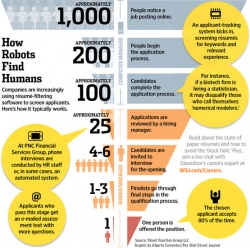

Figure: Steps in the hiring process using Automated Resume Screening [1].

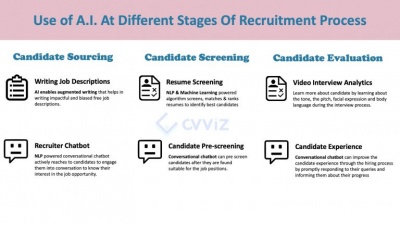


Revision as of 21:10, 11 February 2022

Automated Resume Screening refers to the use of machine learning algorithms and artificial intelligence (AI) to parse and extract information from applicant resumes. The technology can save companies and recruiters considerable time and other resources while helping them find the most suitable hires in large pools of candidates. Screening algorithms identify the skills and experience relevant to a job by matching an applicant's resume against keywords in the job description and requirements, and companies can use different variants of these algorithms according to their hiring criteria. Some companies use algorithms that simply match words between the job posting and the resume, while others place weights on particular past experiences and backgrounds. As a result, automated resume screening may introduce bias against underprivileged applicants who have less experience or less directly relevant backgrounds, leading to gender bias, racial bias, and circumstantial bias.


Algorithms for Resume Screening

With advances in machine learning, companies can customize, modify, and personalize algorithms to follow the specific criteria they are looking for in potential hires.

Some companies use algorithms that look for particular technical and interpersonal skills in applicants' resumes. These algorithms treat certain words as keywords to search for in new resumes. For example, a software company looking for developers with knowledge of C++ can search resumes for "C++" as a keyword, and a resume that contains it receives a higher score for fit with the role and the company. Similarly, if a company is hiring for a leadership position, the algorithm can look for words such as "lead", "led", and "President" and score resumes accordingly.
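The keyword approach described above can be sketched as a weighted word match. This is a minimal illustration, not any vendor's implementation: the keyword list and weights in `KEYWORD_WEIGHTS` are hypothetical, and each distinct keyword found in the resume simply adds its weight to the score.

```python
import re

# Hypothetical keyword weights for a C++ developer role with some leadership duties.
KEYWORD_WEIGHTS = {
    "c++": 3.0,
    "python": 1.0,
    "lead": 2.0,
    "led": 2.0,
    "president": 2.0,
}

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into tokens, keeping '+' and '#' so 'C++' and 'C#' survive."""
    return re.findall(r"[a-z0-9+#]+", text.lower())

def keyword_score(resume_text: str, weights: dict[str, float]) -> float:
    """Add the weight of every distinct keyword that appears in the resume."""
    tokens = set(tokenize(resume_text))
    return sum(weight for keyword, weight in weights.items() if keyword in tokens)

print(keyword_score("Led a team building trading systems in C++.", KEYWORD_WEIGHTS))
```

Here "Led" and "C++" match, so the resume scores 2.0 + 3.0. Exact token matching is what makes this approach brittle: a resume that says "headed the team" instead of "led" gets no credit.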

Companies looking for candidates with both skills and past experience can use algorithms that extract applicants' work history. Such algorithms parse the dates and years on a resume to calculate each applicant's length of work experience. This helps companies screen out applicants who do not meet the eligibility criteria for a job posting, reducing the applicant pool to only those of interest to the company.
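A rough sketch of how dates on a resume can be turned into years of experience. It assumes date ranges are written as "Mon YYYY - Mon YYYY" or "Mon YYYY - Present". That format, and the choice to merge overlapping jobs so concurrent roles are not double-counted, are illustrative assumptions. Real resume parsers must handle far more formats.

```python
import re
from datetime import date
from typing import Optional

# Month abbreviations mapped to 0-11.
MONTHS = {m: i for i, m in enumerate(
    ["jan", "feb", "mar", "apr", "may", "jun", "jul", "aug", "sep", "oct", "nov", "dec"])}

# Assumed format: "Mon YYYY - Mon YYYY" or "Mon YYYY - Present" (full month names also match).
DATE_RANGE = re.compile(
    r"([a-z]{3})[a-z]*\.?\s+(\d{4})\s*[-\u2013]\s*"
    r"(?:([a-z]{3})[a-z]*\.?\s+(\d{4})|(present))",
    re.IGNORECASE,
)

def month_index(mon: str, year: str) -> Optional[int]:
    """Months since year 0, or None if `mon` is not a month abbreviation."""
    m = MONTHS.get(mon.lower())
    return None if m is None else int(year) * 12 + m

def years_of_experience(resume_text: str, today: date) -> float:
    intervals = []
    for start_mon, start_yr, end_mon, end_yr, present in DATE_RANGE.findall(resume_text):
        start = month_index(start_mon, start_yr)
        end = today.year * 12 + today.month - 1 if present else month_index(end_mon, end_yr)
        if start is None or end is None or end < start:
            continue  # text that only looks like a date range
        intervals.append((start, end))

    # Merge overlapping positions so concurrent jobs are not double-counted.
    total_months = 0
    merged_start = merged_end = None
    for s, e in sorted(intervals):
        if merged_end is None or s > merged_end:
            if merged_end is not None:
                total_months += merged_end - merged_start
            merged_start, merged_end = s, e
        else:
            merged_end = max(merged_end, e)
    if merged_end is not None:
        total_months += merged_end - merged_start
    return round(total_months / 12, 1)
```

An eligibility rule such as "at least 3 years of experience" then becomes a simple comparison on the returned value.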

Algorithms can also be tuned to look for applicants whose experience closely matches the responsibilities of the role. These algorithms measure how closely an applicant's resume corresponds to the job description. They look not only for keywords present in both the resume and the job description but also at the context in which those words are used. Applicants whose past experience resembles the job's responsibilities then receive a better score than applicants with less relevant experience.
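One simple way to score how closely a resume matches a job description is TF-IDF weighting with cosine similarity. This is a swapped-in, deliberately simple technique, not necessarily what commercial screeners use. Word pairs (bigrams) are included to capture a little local context, such as "embedded systems" versus "systems" alone. Richer notions of context, such as learned text embeddings, are beyond this sketch.

```python
import math
import re
from collections import Counter

def features(text: str) -> Counter:
    """Unigram and bigram counts; bigrams keep a little local word context."""
    words = re.findall(r"[a-z0-9+#]+", text.lower())
    bigrams = [f"{a} {b}" for a, b in zip(words, words[1:])]
    return Counter(words + bigrams)

def tfidf_vectors(docs: list[str]) -> list[dict[str, float]]:
    counts = [features(d) for d in docs]
    n = len(docs)
    df = Counter(term for c in counts for term in c)  # number of documents containing each term
    # Smoothed IDF: rarer terms weigh more, but shared terms never drop to zero.
    return [{t: tf * (math.log((1 + n) / (1 + df[t])) + 1) for t, tf in c.items()} for c in counts]

def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm = math.sqrt(sum(w * w for w in u.values())) * math.sqrt(sum(w * w for w in v.values()))
    return dot / norm if norm else 0.0

def rank_resumes(job_description: str, resumes: list[str]) -> list[tuple[int, float]]:
    """Return (resume index, similarity) pairs, most similar first."""
    vecs = tfidf_vectors([job_description] + resumes)
    scores = [(i, cosine(vecs[0], v)) for i, v in enumerate(vecs[1:])]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)

job = "Build embedded systems in C++ and lead code reviews"
resumes = ["Five years writing embedded systems firmware in C++",
           "Managed a retail store and scheduled staff"]
print(rank_resumes(job, resumes))
```

The firmware resume shares several terms and the "embedded systems" bigram with the posting, so it ranks first.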

The effectiveness of the resume screening algorithms depends on the ability of the algorithm to parse

Benefits for Companies

Time Efficient

Automating resume screening with AI algorithms reduces a company's time-to-hire. On average, a recruiter spends about 6 seconds manually scanning a resume [4]. AI algorithms can process far more applicants than a human in the same amount of time and can also extract more meaningful information from each resume in that time. This reduces the number of applicants recruiters have to evaluate manually before hiring someone for a specific position, which in turn reduces the time-to-hire.

Organized

Most resume screening programs come as part of an applicant tracking system (ATS). These systems store applicants' personal and contact information, categorized by their key skills and talents. If a candidate does not seem a good fit for a role at the moment, the system keeps their information and can surface it later if a relevant role opens that suits the candidate. This enlarges the pool of applicants and helps companies find better fits for their roles.
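The "keep candidates on file, indexed by skill" behavior can be sketched with an inverted index from skills to candidates. `Candidate` and `TalentPool` are hypothetical names; a real ATS would use a database rather than in-memory dictionaries.

```python
from collections import defaultdict
from dataclasses import dataclass, field

@dataclass
class Candidate:
    name: str
    email: str
    skills: set[str] = field(default_factory=set)

class TalentPool:
    """Hypothetical ATS talent pool: stores every applicant and indexes them by skill."""

    def __init__(self) -> None:
        self._by_skill: dict[str, set[str]] = defaultdict(set)  # skill -> candidate emails
        self._candidates: dict[str, Candidate] = {}

    def add(self, candidate: Candidate) -> None:
        self._candidates[candidate.email] = candidate
        for skill in candidate.skills:
            self._by_skill[skill.lower()].add(candidate.email)

    def match(self, required: set[str]) -> list[Candidate]:
        """Stored candidates who have every required skill, e.g. for a newly opened role."""
        if not required:
            return []
        matching = [self._by_skill.get(s.lower(), set()) for s in required]
        emails = set.intersection(*matching)
        return sorted((self._candidates[e] for e in emails), key=lambda c: c.name)

pool = TalentPool()
pool.add(Candidate("Ana", "ana@example.com", {"Python", "SQL"}))
pool.add(Candidate("Ben", "ben@example.com", {"Java"}))
print([c.name for c in pool.match({"python"})])
```

When a new Python role opens months later, Ana resurfaces without having to apply again.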

Outreach

Outreach is a time-consuming part of the recruiting process. Companies aim to attract as many applicants as possible in order to find the most appropriate fit for a role. More applicants mean more work for the recruiter in organizing, analyzing, and reviewing data, which is very inefficient when done manually. Automated resume screening and its accompanying applicant tracking systems speed up the processing of resumes and help recruiters consider both active applicants (applicants applying for the first time) and passive applicants (applicants who may have previously applied for a similar role). This makes it easier to source more applicants and build a bigger pool, and considering passive applicants also raises the overall quality of the pool.

Variants of resume screening algorithms can also be adapted to screen job profiles on social media groups and recruiting platforms such as LinkedIn [5]. Given the millions of users on these platforms worldwide, companies can greatly expand their outreach.

Ethical Implications

Machine learning and artificial intelligence algorithms have grown much more powerful and efficient over the years, but they are still not perfectly accurate in making decisions. These algorithms are stochastic, not deterministic [7]. They learn patterns from past successes and failures. For example, they learn the attributes of successful applicants and treat them as positives when evaluating new applicants, and they learn the attributes of unsuccessful applicants and treat them as negatives. This builds a preference for certain qualities and skills into the decision of who gets hired. At first glance this may seem ideal for companies, since they would only hire people who resemble past employees who have benefited them. However, if an algorithm picks up patterns that are genuinely present in the data but misleading (correlation is not causation [8]), the results can be unfair to many groups, including particular genders, minorities, and applicants in difficult circumstances. The same problem arises if past applicants or hires for a company or job posting had undesirable skills and qualifications, because an otherwise effective algorithm will still learn those patterns and trends.
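The "learn positives and negatives from past outcomes" mechanism can be illustrated with smoothed log-odds per word, in the style of naive Bayes. This is a generic sketch, not any specific company's model. It shows that any word more common among past hires gets a positive weight, whether or not that word says anything about ability.

```python
import math
from collections import Counter

def learn_token_weights(resumes: list[set[str]], hired: list[bool], alpha: float = 1.0) -> dict[str, float]:
    """Smoothed log-odds of each token appearing in hired vs. rejected resumes.

    Positive weight: the token was more common among past hires.
    Negative weight: it was more common among past rejections.
    """
    pos = Counter(t for r, h in zip(resumes, hired) if h for t in r)
    neg = Counter(t for r, h in zip(resumes, hired) if not h for t in r)
    n_pos = sum(hired)
    n_neg = len(hired) - n_pos
    vocab = set(pos) | set(neg)
    return {
        t: math.log((pos[t] + alpha) / (n_pos + 2 * alpha))
           - math.log((neg[t] + alpha) / (n_neg + 2 * alpha))
        for t in vocab
    }

def score(resume: set[str], weights: dict[str, float]) -> float:
    """Score a new resume by summing the learned weights of its tokens."""
    return sum(weights.get(t, 0.0) for t in resume)

history = [{"c++", "chess"}, {"c++"}, {"java"}, {"java", "chess"}]
outcomes = [True, True, False, False]
weights = learn_token_weights(history, outcomes)
```

In this toy history, "c++" gets a positive weight and "java" a negative one only because of who happened to be hired. If past hires skewed toward one group, words associated with that group would pick up weight in exactly the same way.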

Gender Bias

Gender bias refers to the tendency to prefer one gender over another. It is a form of unconscious bias, or implicit bias, which occurs when one individual unconsciously attributes certain attitudes and stereotypes to another person or group of people [9]. In the context of job applications, gender bias means discriminating against applicants based on their gender rather than their skills and abilities.

Evaluating new applicants based on what was learned from past applicants can lead to gender bias. If a company or a specific job role has previously, or stereotypically, been dominated by one gender, the algorithm may learn that as a trend and favor future applicants of that gender. Amazon's experience with its AI recruiting tool illustrates this [11]. Amazon was a male-dominated company in an industry that was itself dominated by men, and the recruiting tool it had been developing for four years learned to prefer male applicants over female applicants. It flagged keywords such as "women's club" and "president women's chess club" as negatives, even though such phrases would otherwise count as positives because they indicate leadership positions. The result was a bias against female applicants.

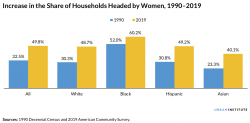

At a time when marriage rates are declining and more single women are becoming homeowners [10], such biases, whether intentional or unintentional, can lead to severe disparities in the financial conditions of many households.

Ethnic Bias

Ethnic bias can be defined as engaging in discriminatory behavior, holding negative attitudes toward, or otherwise having less favorable reactions toward people based on their ethnicity [12]. In the context of job applications, it means preferring or discriminating against applicants based on their racial or ethnic background rather than their skills and qualifications.

A company's workforce may not be racially or ethnically diverse. Throughout the world, countries, cities, and neighborhoods tend to be populated mostly by people of a similar ethnicity, with small minority populations. Machine learning algorithms trained on past applicants may then develop a bias against racial minorities, since past employees may not include people of those ethnicities. This in turn harms racial minority households, which may suffer from unemployment more than other groups while also being among those most in need. In this way, automated resume screening can result in ethnic bias.

While the screening process can reduce opportunities for racial minorities, it can also create opportunities for them, but only in specific roles, which raises its own ethical concerns. Many companies and applicant tracking systems partner with social media companies such as Facebook [13]. Facebook uses the similarity between social media profiles to recommend job postings that users with similar profiles have liked or worked in. A team led by Muhammad Ali and Piotr Sapiezynski at Northeastern University found that job postings for preschool teachers and secretaries, for example, were shown to a higher fraction of women, whereas postings for janitors and taxi drivers were shown to a higher proportion of minorities [14]. This pattern widens the salary gap between racial and ethnic groups, reinforcing the economic advantages of ethnic majorities and the disadvantages of ethnic minorities.
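A minimal sketch of generic "lookalike" delivery, assuming each user is represented by a numeric profile vector. The vectors and user names are hypothetical, and this is not Facebook's actual system. It only shows why similarity-based delivery reproduces whoever engaged with similar postings before. If past engagers skew toward one demographic group and profile features correlate with that group, the selected audience inherits the skew.

```python
import math

def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def lookalike_audience(engaged: list[list[float]], users: dict[str, list[float]], k: int) -> list[str]:
    """Return the k users whose profiles are, on average, most similar to past engagers."""
    def affinity(vec: list[float]) -> float:
        return sum(cosine(vec, e) for e in engaged) / len(engaged)
    return sorted(users, key=lambda name: affinity(users[name]), reverse=True)[:k]

past_engagers = [[1.0, 0.0], [0.9, 0.1]]           # people who engaged with a similar posting
candidates = {"a": [1.0, 0.05], "b": [0.0, 1.0], "c": [0.8, 0.2]}
print(lookalike_audience(past_engagers, candidates, k=2))
```

User "b", whose profile differs from the past engagers, is never shown the posting, regardless of qualifications.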

Circumstantial Bias

Circumstantial bias refers to discriminating against a group based on their circumstances. In the context of job applications, circumstantial bias can be thought of as a bias against applicants because of their lack of opportunities and experience, even when they may have the same potential and intellectual ability as other successful applicants.

While filtering out applicants who lack certain qualifications and skills, the automated resume screening process does not evaluate an applicant's learning potential. An applicant may not have received the same opportunities to gain experience or learn skills as other successful applicants, and will therefore be screened out early in the recruitment process. This has several ethical implications. Applicants from households in poor financial condition are affected by unemployment most severely and need a job most urgently to improve their finances. The screening process, however, creates a feedback loop in which unemployment falls hardest on those who are already unemployed. It also affects racial minorities who did not have the chance to gain the same skills and qualifications as other applicants because of a lack of eligibility and resources rather than a lack of aptitude. In 2017, there were only 4 African American CEOs (all men) of Fortune 500 companies, less than 1% of the list [4]. As a result, racial minorities can be filtered out early because of their circumstances rather than their aptitude.

Reducing Bias

Choosing the Correct Learning Model

To reduce bias in the decisions made during resume screening, it is important to choose an appropriate learning model. When building machine learning algorithms, companies can make sure that irrelevant attributes have no impact on how candidates are differentiated. If a company is looking for a specific skill for a job posting, gender and ethnicity should not be significant factors in the decision. The machine learning algorithm should learn to ignore such attributes while identifying patterns and correlations. Words like "nurse" and "teacher" should not be linked with any gender, and words like "janitor" and "driver" should not be associated with any race.
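A first step toward keeping irrelevant attributes out of the model is to strip them from the input before training or scoring. This is sometimes called "fairness through unawareness." The field names and word lists below are hypothetical and deliberately short. Removing explicit attributes does not remove proxies such as school names or neighborhoods, so this step alone does not guarantee unbiased results and should be paired with monitoring of outcomes.

```python
import re

# Hypothetical, deliberately short lists; a real deployment would need audited, much longer lists.
PROTECTED_FIELDS = {"gender", "race", "ethnicity", "age", "date_of_birth"}
GENDERED_TERMS = {"women's", "men's", "she", "he", "her", "his", "sorority", "fraternity"}

def strip_protected(applicant: dict) -> dict:
    """Drop protected fields and mask gendered words in the free-text resume before scoring."""
    cleaned = {k: v for k, v in applicant.items() if k not in PROTECTED_FIELDS}
    if "resume_text" in cleaned:
        cleaned["resume_text"] = re.sub(
            r"[A-Za-z']+",
            lambda m: "[MASKED]" if m.group(0).lower() in GENDERED_TERMS else m.group(0),
            cleaned["resume_text"],
        )
    return cleaned

print(strip_protected({"years_experience": 3, "gender": "F",
                       "resume_text": "President, Women's Chess Club"}))
```

With this masking, a phrase like the one Amazon's tool penalized no longer reaches the model, while the leadership signal ("President") remains.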

Account for Infrastructural Issues

During the decision-making process, the results of the algorithms can be given only partial weight. One difference between humans and computers is the human ability to use judgment informed by ethical and emotional considerations. After the algorithms produce their evaluations, applicants can receive further review based on their circumstances and other infrastructural and external factors that may have disadvantaged them compared to other applicants. These factors could be considered during the interview process, but by then most applicants have already been screened out. Therefore, these factors should be kept in mind during the screening decisions themselves.
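Giving the algorithm "partial weight" can be expressed as a weighted average of the automated score and a reviewer's context-aware score. The 0-1 scales, the default weight of 0.6, and the 0.5 cutoff are hypothetical choices for illustration.

```python
def blended_score(algorithm_score: float, reviewer_score: float, algorithm_weight: float = 0.6) -> float:
    """Weighted average of the automated score and a human reviewer's context-aware score.

    Both scores are assumed to lie on a 0-1 scale. An algorithm_weight below 1 means
    the algorithm's output counts only partially toward the final decision.
    """
    if not 0.0 <= algorithm_weight <= 1.0:
        raise ValueError("algorithm_weight must be between 0 and 1")
    return algorithm_weight * algorithm_score + (1.0 - algorithm_weight) * reviewer_score

def shortlist(applicants: dict[str, tuple[float, float]], threshold: float = 0.5,
              algorithm_weight: float = 0.6) -> list[str]:
    """Names of applicants whose blended (algorithm, reviewer) score meets the threshold."""
    return sorted(name for name, (algo, review) in applicants.items()
                  if blended_score(algo, review, algorithm_weight) >= threshold)

applicants = {"A": (0.4, 0.9), "B": (0.3, 0.2)}
print(shortlist(applicants))
```

On the algorithm's score alone (`algorithm_weight=1.0`), applicant A (0.4) falls below the 0.5 cutoff. With a 0.6 weight and a reviewer score of 0.9 reflecting A's circumstances, the blended score is about 0.6 and A stays in the pool.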

Monitoring Results

A company using the resume screening process can continually evaluate the results of its recruitment process using metrics such as employee performance, diversity, and work culture. If the statistics show a bias toward certain attributes, the company can revise its recruitment process to reduce those biases.
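One concrete diversity statistic is the selection rate of each group at the screening stage. A common rule of thumb is the "four-fifths rule" from the U.S. Uniform Guidelines on Employee Selection Procedures: a group whose selection rate is below 80% of the highest group's rate is flagged for possible adverse impact. The group labels and counts below are hypothetical.

```python
from collections import Counter

def selection_rates(groups: list[str], advanced: list[bool]) -> dict[str, float]:
    """Fraction of applicants in each group who passed the automated screen."""
    applied = Counter(groups)
    passed = Counter(g for g, a in zip(groups, advanced) if a)
    return {g: passed[g] / applied[g] for g in applied}

def adverse_impact_ratios(rates: dict[str, float]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    best = max(rates.values())
    return {g: (r / best if best else 1.0) for g, r in rates.items()}

def flag_groups(groups: list[str], advanced: list[bool], threshold: float = 0.8) -> list[str]:
    """Groups whose impact ratio falls below the four-fifths threshold."""
    ratios = adverse_impact_ratios(selection_rates(groups, advanced))
    return sorted(g for g, r in ratios.items() if r < threshold)

groups = ["A"] * 10 + ["B"] * 10
advanced = [True] * 5 + [False] * 5 + [True] * 2 + [False] * 8
print(selection_rates(groups, advanced), flag_groups(groups, advanced))
```

Group B's rate (0.2) is only 40% of group A's (0.5), well under four-fifths, so B is flagged for a closer look at the screening criteria.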

Companies can also use subpopulation analysis, which means selecting just a target subpopulation from the whole dataset and calculating the model's evaluation metrics for that subpopulation. This type of analysis can help identify whether the model favors or discriminates against a particular section of the population, such as a race, gender, or age group [16]. The technique helps companies analyze their recruitment process more thoroughly.
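Subpopulation analysis can be sketched by computing the same metrics separately for each group. This sketch assumes `y_true` records whether an applicant was actually qualified (for example, from a later independent review) and `y_pred` whether the model advanced them. Both labels and the group names are hypothetical.

```python
from collections import defaultdict

def subgroup_metrics(groups: list[str], y_true: list[int], y_pred: list[int]) -> dict[str, dict[str, float]]:
    """Accuracy and true positive rate (recall) computed separately for each subgroup."""
    stats = defaultdict(lambda: {"n": 0, "correct": 0, "tp": 0, "pos": 0})
    for g, t, p in zip(groups, y_true, y_pred):
        s = stats[g]
        s["n"] += 1
        s["correct"] += int(t == p)
        if t:  # applicant was actually qualified
            s["pos"] += 1
            s["tp"] += int(p)
    return {
        g: {
            "accuracy": s["correct"] / s["n"],
            "tpr": s["tp"] / s["pos"] if s["pos"] else float("nan"),
        }
        for g, s in stats.items()
    }

groups = ["F", "F", "F", "F", "M", "M", "M", "M"]
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 0, 1, 1, 0, 1]
print(subgroup_metrics(groups, y_true, y_pred))
```

Both groups have the same accuracy (0.75), but the model advances only half of the qualified "F" applicants versus all of the qualified "M" applicants. An overall accuracy figure would hide this difference.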

Data Security

The quality of data has a significant impact on the results of machine learning algorithms. Even slight or random changes to the data can lead the algorithms to learn unimportant trends or miss important ones. Companies can adopt strict policies to keep the data secure from unauthorized access and changes. Access levels can be assigned according to employees' roles and seniority to prevent any employee's personal bias from altering the data.
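Role-based access levels and tamper detection can be sketched together. The role names and permission map are hypothetical. The fingerprint is a SHA-256 digest of a canonical JSON encoding of the records, so any later edit to the training data changes the digest and can be noticed before retraining.

```python
import hashlib
import json

# Hypothetical role -> allowed actions on the hiring dataset.
PERMISSIONS = {
    "recruiter": {"read"},
    "hiring_manager": {"read"},
    "data_steward": {"read", "write"},
}

def authorize(role: str, action: str) -> None:
    """Raise PermissionError unless the role is allowed to perform the action."""
    if action not in PERMISSIONS.get(role, set()):
        raise PermissionError(f"role {role!r} may not {action} the training data")

def fingerprint(records: list[dict]) -> str:
    """SHA-256 of a canonical JSON encoding; key order does not matter, content does."""
    canonical = json.dumps(records, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

records = [{"id": 1, "hired": True}]
baseline = fingerprint(records)  # store this when the dataset is approved
```

Before retraining, comparing `fingerprint(current_records)` with the stored baseline shows whether anyone has changed the data since it was approved.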

Data on past hires should be representative of the applicant pool so that future decisions are appropriate. If a role differs from previously posted roles, companies can make sure to use only the part of their historical data that is relevant to that job posting.

References

- [1] Online Job Application Systems. MBA Crystal Ball. https://www.mbacrystalball.com/blog/2014/10/19/online-job-application-system/

- [2] AI For Recruiting - A Complete Guide. CVViZ. https://cvviz.com/ai-recruiting/

- [3] The Advantages of an Applicant Tracking System. ZAMP HR. https://blog.zamphr.com/the-advantages-of-an-applicant-tracking-system

- [4] Bart Turczynski. 2021 HR Statistics: Job Search, Hiring, Recruiting & Interviews. Zety. https://zety.com/blog/hr-statistics#resume-statistics

- [5] LinkedIn Scraper. Python Package Index. https://pypi.org/project/linkedin-scraper/

- [6] Avoiding shortcut solutions in artificial intelligence. MIT CSAIL. https://www.csail.mit.edu/news/avoiding-shortcut-solutions-artificial-intelligence

- [7] The Limitations of Machine Learning. Towards Data Science. https://towardsdatascience.com/the-limitations-of-machine-learning-a00e0c3040c6

- [8] Correlation is not causation. The Guardian. https://www.theguardian.com/science/blog/2012/jan/06/correlation-causation

- [9] What Is Gender Bias in the Workplace? Built In. https://builtin.com/diversity-inclusion/gender-bias-in-the-workplace

- [10] More Women Have Become Homeowners and Heads of Household. Could the Pandemic Undo That Progress? Urban Institute. https://www.urban.org/urban-wire/more-women-have-become-homeowners-and-heads-household-could-pandemic-undo-progress

- [11] Amazon scraps secret AI recruiting tool that showed bias against women. Reuters. https://www.reuters.com/article/us-amazon-com-jobs-automation-insight/amazon-scraps-secret-ai-recruiting-tool-that-showed-bias-against-women-idUSKCN1MK08G

- [12] Measuring Ethnic Bias. APA Dictionary of Psychology, American Psychological Association. https://dictionary.apa.org/ethnic-bias

- [13] Facebook Page Admins and Jobs Distribution/ATS Partners. Facebook. https://www.facebook.com/business/help/286846482350955

- [14] Karen Hao. Facebook's Ad-Serving Algorithm Discriminates by Gender and Race. MIT Technology Review, 2 Apr. 2020. https://www.technologyreview.com/2019/04/05/1175/facebook-algorithm-discriminates-ai-bias

- [15] Tackling bias in artificial intelligence (and in humans). McKinsey and Company. https://www.mckinsey.com/featured-insights/artificial-intelligence/tackling-bias-in-artificial-intelligence-and-in-humans

- [16] How to reduce machine learning bias. Medium. https://medium.com/atoti/how-to-reduce-machine-learning-bias-eb24923dd18e