Artificial Superintelligence

An artificial superintelligence (ASI) is a hypothetical form of artificial general intelligence (AGI) that would greatly exceed human performance in all cognitive domains. It is distinct from existing narrow artificial intelligences, which demonstrate superhuman performance only in a particular domain, such as IBM Watson's question answering or AlphaGo's Go playing. Philosophers speculate on whether an artificial superintelligence would be conscious and have subjective experiences. Unlike narrow artificial intelligence, any artificial general intelligence, including a superintelligence, would be expected to pass the Turing test. Ethical issues related to artificial superintelligence include the moral weight of artificial agents, existential risk, and problems of bias and value alignment.

Timeline

Expert opinion is divided on when artificial superintelligence might be developed. A 2013 poll of AI researchers found a median prediction of a 50% chance that artificial general intelligence would be developed by 2050 and a 90% chance that it would be developed by 2075. The median estimate of the time from artificial general intelligence to artificial superintelligence was 30 years.[1]

Opinions regarding the time from the development of artificial general intelligence to artificial superintelligence fall roughly into two camps: soft takeoff and hard takeoff. Soft takeoff posits a long interval between the two, on the order of years or decades. Hard takeoff, by contrast, holds that artificial general intelligence would develop into superintelligence rapidly, on the order of months, days, or even minutes. This is generally considered possible through recursive self-improvement, a concept first proposed by mathematician I. J. Good.[2] As Good and others predict, an artificial general intelligence with expert-level programming ability might be able to modify its own source code, producing a version of itself that is slightly smarter and more capable. That version could then modify itself further, setting off an intelligence explosion in which each iteration is more intelligent than the last, until the system reaches the level of superintelligence.
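The difference between the two takeoff scenarios can be illustrated with a toy model. The sketch below is not drawn from Good or any cited source: the growth rule, the function name steps_to_threshold, and the parameters gain and feedback are all hypothetical choices made for illustration. It assumes that each round of self-modification multiplies a system's capability by a factor that either stays fixed (a rough stand-in for soft takeoff) or grows with the system's current capability (a rough stand-in for hard takeoff).

```python
def steps_to_threshold(capability, threshold, gain, feedback, max_steps=10_000):
    """Count self-improvement rounds until capability reaches threshold.

    Hypothetical growth rule (an assumption, not an established model):
        capability <- capability * (1 + gain * capability ** feedback)
    feedback = 0 keeps the improvement rate fixed at every round.
    feedback > 0 lets a more capable system improve itself faster.
    """
    steps = 0
    while capability < threshold and steps < max_steps:
        capability *= 1 + gain * capability ** feedback
        steps += 1
    return steps


# Same starting point, target, and base gain; only the feedback term differs.
soft = steps_to_threshold(1.0, 1000.0, gain=0.1, feedback=0.0)
hard = steps_to_threshold(1.0, 1000.0, gain=0.1, feedback=1.0)

print("rounds with a fixed improvement rate:", soft)
print("rounds with capability-dependent improvement:", hard)
```

Under these assumptions, the version with feedback reaches the threshold in far fewer rounds, because most of its growth is compressed into the last few iterations. The model says nothing about how long a round would take in practice, which is the point on which the two camps disagree.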

Applications

Nick Bostrom describes three forms that ASI could take.[3] The first is a question-answering service, in which a user poses questions to a machine that answers them. Such a service would resemble Google but could handle far more complicated questions, including ones that humans cannot feasibly answer, such as “Is there life outside our galaxy?” The second is a service that carries out commands to produce tangible solutions to problems. An example would be instructing the ASI to “use stem cells to create a solution to solving cancer”. The third is an ASI that acts on its own to solve problems intelligently: it independently recognizes problems in daily life and uses its resources to produce solutions without any inquiry or involvement from humans.

Eliezer Yudkowsky, an AI researcher, states that “There are no hard problems, only problems that are hard to a certain level of intelligence. Move the smallest bit upwards in intelligence, and some problems will suddenly move from “impossible” to “obvious.” Move a substantial degree upwards, and all of them will become obvious.” Some proponents also believe that ASI could help humans become immortal: the machines would find cures for the deadliest diseases, reverse environmental destruction, help end hunger, and combine with biotechnology to create anti-aging treatments that prevent people from dying.

ASI could also lead the human race to a way of life that is experientially better than its current state. Bostrom states that a superintelligence would help humans generate opportunities to increase their utility through intellectual and emotional avenues, producing a world much more appealing than the current one. With the assistance of a superintelligence, humans could devote their lives to more enjoyable activities, such as playing games, developing human relationships, and living closer to their ideals.

Ethical Implications

Experts generally agree that the outcome of a world with ASI cannot be predicted. Bostrom claims that as machines get smarter, they do not merely score well on intelligence exams; they gain "superpowers". They would be able to make themselves even smarter than before. They would be able to persuade through social manipulation. They would also be able to prioritize tasks and to strategize, setting long-term goals and working out, step by step, how to accomplish them in the short term. An ASI would be significantly more capable than humans in all of these areas.

There will likely be a race among different groups to develop ASI first. These groups will probably include governments, technology firms, and black-market groups, and the outcome could depend heavily on which of them succeeds. Bostrom believes that the first group to develop ASI would hold a decisive strategic advantage, because it would be far enough ahead to suppress other ASIs as they emerge. This would concentrate power in the hands of whoever creates a successful ASI first. If the first ASI were backed by unethical individuals, it could be harmful.

Additionally, if ASI can help humans become immortal, is this ethical? In a sense, ASI and humans would be playing God, an idea that leaves people divided. Immortality would also have serious consequences. A world with no death rate but a continuing birth rate could seriously affect human living conditions and the other species around us. Could this lead to overpopulation? Or would ASI provide a solution to this? What if ASI concludes that certain humans or species pose a threat to society as a whole, and its solution is to eliminate them? What if we don’t like the answers that ASI has for humanity?

ASI could also face issues with bias. Because any ASI would initially need to be programmed by humans, it is extremely unlikely that one would be created without some level of bias. Almost all human records, including medical, housing, criminal, historical, and educational records, carry some degree of bias against minorities. This bias stems from human flaws and failures, and the question remains whether an ASI would perpetuate these shortcomings or correct humanity's mistakes. Whatever its design, an ASI would be supplied by humans with a vast library of bias-filled information, both current and historical. While it is not known how an ASI would act on this knowledge, it could lead the system to take biased actions.

Media

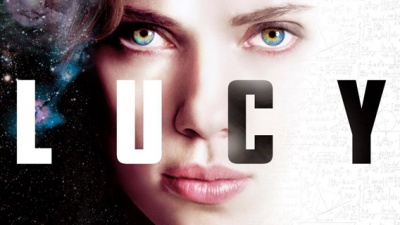

Lucy (film)

Lucy is a 2014 science-fiction film starring Scarlett Johansson. The plot follows Lucy, a woman who gains enhanced mental and physical abilities, such as telepathy and telekinesis, after being exposed to a cognitive enhancement drug. To prevent her body from disintegrating due to cellular degeneration, Lucy must continue taking the drug. The additional doses increase her cerebral capacity well beyond that of a normal human being and give her time-travel abilities. Her emotions are dulled, and she grows more stoic and robotic. Her body begins to change into a black, nanomachine-like substance that spreads over the computers and electronic objects in her lab. Eventually, Lucy transforms into a complex supercomputer and becomes an all-knowing entity, far beyond the intellectual capacity of any human being. On reaching 100% of her cerebral capacity, she transcends this plane of existence and enters the spacetime continuum. She leaves behind a flash drive containing all of her knowledge so that humans may learn from her insight about the universe.[4]

The story of Lucy can be likened to the concept of artificial superintelligence. Lucy is transformed into an all-knowing supercomputer with intelligence far greater than any human's. Although she is a superintelligent human rather than an artificial one, she gains the ability to solve problems that are unfathomable to the human mind. In a sense, Lucy loses her humanity and evolves into a machine whose intellect exceeds that of any human.

References

- ↑ Müller, Vincent C.; Bostrom, Nick (2016). "Future Progress in Artificial Intelligence: A Survey of Expert Opinion". Fundamental Issues of Artificial Intelligence. 376: 555-572. doi:10.1007/978-3-319-26485-1_33. Retrieved April 27, 2019.

- ↑ Good, I. J. (1965). "Speculations Concerning the First Ultraintelligent Machine". Advances in Computers. 6: 31-88. doi:10.1016/S0065-2458(08)60418-0. ISBN 9780120121069. Retrieved April 27, 2019.

- ↑ "Nick Bostrom on artificial intelligence". September 8, 2014. https://blog.oup.com/2014/09/interview-nick-bostrom-superintelligence/

- ↑ "Lucy". IMDb. https://www.imdb.com/title/tt2872732/. Retrieved April 20, 2019.

- https://nickbostrom.com/superintelligence.html

- The Singularity Is Near: When Humans Transcend Biology by Ray Kurzweil

- http://yudkowsky.net/