Difference between revisions of "Artificial Intelligence in the Music Industry"


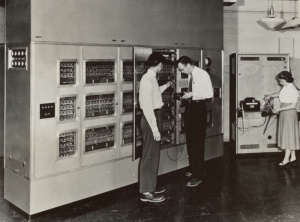

[[Image:Illiac.jpeg|300px|thumb|The Illinois Automatic Computer (ILLIAC)]]


Revision as of 14:42, 26 March 2021

Artificial Intelligence in the Music Industry is a recent phenomenon that has already had a significant impact on the way we conceive, produce, and consume music. Artificial intelligence (AI) systems work by learning from data and responding in ways that mimic human thought processes. Major corporations in the music industry have developed applications that can create, perform, and/or curate music by drawing on AI's simulation of human intelligence [1]. This segment of the music industry is relatively new but growing rapidly. Despite these advances, the use of AI in music could cause problems for artists, who may have to compete against machines for recognition. Potential legal disputes over copyright infringement are also a concern.


History

A Look Into AI

An understanding of the history of AI can help us better understand the ethics involved in any discussion of AI in music. AI as a concept came into popular consciousness in the 1950s with British mathematician Alan Turing's paper on how to build an "intelligent machine" and how to test its intelligence [2]. Since then, interest in AI as a field of study has waxed and waned. The growing use of AI as a subject in media such as books and movies raised awareness of it both in the mainstream and in scientific circles. Since its inception, AI has been implemented ever more widely in everyday life, from virtual assistants like Alexa and Siri to fraud detection in personal banking.

AI In Music

The first recorded instance of computer-generated music dates to 1951, when a BBC outside-broadcast unit recorded a variety of melodies played by a computer at Alan Turing's Computing Machine Laboratory in Manchester, England. The melodies were produced by programming individual musical notes into the computer. Artificial intelligence was first used to create music in 1957 by Lejaren Hiller and Leonard Isaacson of the University of Illinois at Urbana–Champaign [3]. Hiller and Isaacson programmed the ILLIAC (Illinois Automatic Computer) to generate music written start-to-finish by artificial intelligence. Around the same time, Russian researcher R. Kh. Zaripov published the first widely available paper on algorithmic music composition, using the historical Russian computer Ural-1 to do so [3] [1].

Since those milestones, research and software in AI-generated music have flourished. In 1974, the first International Computer Music Conference (ICMC) was hosted at Michigan State University in East Lansing, Michigan. The ICMC is now an annual event hosted by the International Computer Music Association (ICMA) for AI composers and researchers alike [1].

Applications

Music Production

One of the biggest breakthroughs in computer-generated music was the Experiments in Musical Intelligence (EMI) system. Developed by David Cope, an American composer and scientist at the University of California, Santa Cruz, EMI was able to analyze different types of music and create unique compositions by genre. It has since created more than a thousand musical works based on over 30 different composers [3] [1].

In 2016, Google, a leader in AI technology, released Magenta. Magenta's mission statement describes it as “an open-source research project exploring the role of machine learning as a tool in the creative process” [4]. Instead of following hard-coded rules, Magenta learns from examples created by humans. In this way, it acts as an assistant to humans in the creative process rather than as a mechanical replacement for them [5].

Recommendation Models

Another way AI is used in the music industry is in recommendation models. One of the most prominent users of this approach today is Spotify, which uses a host of machine learning techniques to predict and customize playlists for its users. One example is the Discover Weekly playlist, a collection of 30 songs curated for each user every week based on search history, listening patterns, and predictive models. One technique behind it is collaborative filtering, which compares users with similar behaviors to predict what a given user might enjoy or want to listen to next. Another is natural language processing (NLP), which analyzes human language in text form. The system scans the internet for articles and posts written about different artists and accumulates the words associated with each of them. It can then link artists who share similar "cultural vectors," or top terms, and recommend similar artists to users. A final tool Spotify uses to curate user-specific playlists is audio models, which are most useful when an artist is new and has few listeners or little written about them online. Audio models analyze raw audio tracks and group them with similar songs, which makes it possible to recommend lesser-known artists alongside popular tracks [6].
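
The collaborative filtering idea described above can be illustrated with a small sketch. This is a minimal user-based example in Python and is not Spotify's actual system: the user names, track names, play counts, and the helper functions `cosine_similarity` and `recommend` are all hypothetical and chosen only for illustration. It assumes that two users are "similar" when their play-count histories point in a similar direction (cosine similarity), and it scores tracks a user has not yet heard by the similarity-weighted play counts of other users.

```python
from math import sqrt


def cosine_similarity(a, b):
    """Cosine similarity between two sparse play-count dicts (track -> plays)."""
    shared = set(a) & set(b)
    dot = sum(a[t] * b[t] for t in shared)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(target, plays, top_n=3):
    """Rank tracks the target user has not played.

    Each candidate track is scored by summing, over all other users,
    (similarity to the target) * (that user's play count for the track).
    """
    scores = {}
    for user, history in plays.items():
        if user == target:
            continue
        sim = cosine_similarity(plays[target], history)
        if sim <= 0:
            continue  # users with nothing in common contribute nothing
        for track, count in history.items():
            if track not in plays[target]:
                scores[track] = scores.get(track, 0.0) + sim * count
    # Highest score first; ties broken alphabetically for determinism.
    return sorted(scores, key=lambda t: (-scores[t], t))[:top_n]


# Hypothetical listening data (an assumption, not real Spotify data).
plays = {
    "ana": {"song_a": 5, "song_b": 3},
    "ben": {"song_a": 4, "song_b": 2, "song_c": 6},
    "cat": {"song_d": 7, "song_e": 1},
}

print(recommend("ana", plays))
```

Here "ana" and "ben" share two tracks, so the one track only "ben" has played becomes a candidate for "ana", while "cat" has no overlap with anyone and contributes nothing. A production system would work with far larger and sparser data and would typically combine this signal with the text-based and audio-based models mentioned above.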

Ethical Implications

Commodification of Music

Several ethical concerns about the future of music and AI have arisen in recent years. One of these is the commodification of music. With the popularization of AI-generated music on the horizon, there is concern that music will be, or already is being, made and sold solely for profit. Music has always been an essentially human art medium that draws on emotion and experience, and it is natural to assume that no machine could recreate it. However, emotion and experience might not be key ingredients of a chart-topping song these days. By analyzing and remixing music that is already popular, AI technology can easily generate songs that are trendy and catchy. This also poses the threat of music becoming homogeneous and lacking variety. Ideally, people will continue to prefer human-made music, but as algorithms continue to advance and more data on our musical preferences is collected, it is impossible to say what the future holds [7].

Since its beginnings, AI has carried a negative association with large-scale unemployment. As AI is increasingly implemented, it has begun to take away jobs in the music industry and is likely to continue doing so. While it makes many jobs redundant, it can also create new opportunities [8]. As with many aspects of AI's future in music, exactly how its effect on employment will play out is unclear.

Copyright

One of the biggest ethical dilemmas facing AI-generated music is copyright infringement. Allowing an AI to listen to copyrighted music and then generate similar songs without compensating or citing the original artist could result in major legal complications. As laws currently stand, copyright infringement can occur only if an AI creates a song that sounds similar to an existing song and the song is claimed as original. This is a hard line to draw because the judgment depends on how similar the songs are: what does "similar" look like, and can it be defined in a legal context? The law was written without AI systems in mind, so its authors likely never considered scenarios such as a machine listening to and drawing from an artist's entire discography to create a single song. From an artist's point of view, it may seem unfair not to be credited or compensated for something like this [9] [10].


References

1. Freeman, Jeremy. “Artificial Intelligence and Music — What the Future Holds?” Medium, 24 Feb. 2020, medium.com/@jeremy.freeman_53491/artificial-intelligence-and-music-what-the-future-holds-79005bba7e7d.
2. Anyoha, Rockwell. “The History of Artificial Intelligence.” Harvard University, 28 Aug. 2017, sitn.hms.harvard.edu/flash/2017/history-artificial-intelligence/.
3. Li, Chong. “A Retrospective of AI + Music - Prototypr.” Medium, 25 Sept. 2019, blog.prototypr.io/a-retrospective-of-ai-music-95bfa9b38531.
4. Magenta. Google, magenta.tensorflow.org.
5. “How Google Is Making Music with Artificial Intelligence.” Science | AAAS, 8 Dec. 2017, www.sciencemag.org/news/2017/08/how-google-making-music-artificial-intelligence.
6. Sen, Ipshita. “How AI Helps Spotify Win in the Music Streaming World.” Outside Insight, 26 Nov. 2018, outsideinsight.com/insights/how-ai-helps-spotify-win-in-the-music-streaming-world.
7. Staff. “What Does Commodification Mean for Modern Musicians?” Dorico, 13 Mar. 2018, blog.dorico.com/2018/01/commodification-music-mean-modern-musicians.
8. Thomas, Mike. “Artificial Intelligence's Impact on the Future of Jobs.” Built In, 8 Apr. 2020, builtin.com/artificial-intelligence/ai-replacing-jobs-creating-jobs.
9. “How AI Is Benefiting The Music Industry?” Tech Stunt, 20 Aug. 2020, techstunt.com/how-ai-is-benefiting-the-music-industry.
10. “We’ve Been Warned About AI and Music for Over 50 Years, but No One’s Prepared.” The Verge, 17 Apr. 2019, www.theverge.com/2019/4/17/18299563/ai-algorithm-music-law-copyright-human.