Difference between revisions of "Artificial Intelligence in the Music Industry"

Revision as of 19:50, 13 March 2021

Artificial Intelligence in the Music Industry is a relatively new phenomenon that has already had a major impact on the way we produce, consume, and experience music. Artificial intelligence (AI) is essentially a system designed to learn and respond in ways similar to human beings. To some degree, AI technologies can simulate human intelligence, and many companies have developed applications that create, perform, and/or curate music using AI [1]. The industry is young but growing rapidly, which may create problems in the future: artists may have to compete against machines for recognition, and legal disputes may arise over copyrighted material used in the AI learning process.

History

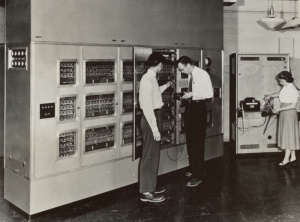

The first recorded instance of computer-generated music dates to 1951 and is associated with the British mathematician Alan Turing. A BBC outside-broadcast unit recorded a variety of melodies played by a computer at the Computing Machine Laboratory in Manchester, England; to produce them, individual musical notes had been programmed into the machine. Artificial intelligence was first used to create music in 1957 by Lejaren Hiller and Leonard Isaacson of the University of Illinois at Urbana–Champaign [2]. They programmed the ILLIAC (Illinois Automatic Computer) to generate music written entirely by artificial intelligence. Around the same time, the Russian researcher R. Kh. Zaripov published the first paper on algorithmic music composition that was available worldwide, using the historical Russian computer Ural-1 to do so [3] [4].

From then on, research and software around AI-generated music began to flourish. In 1974, the first International Computer Music Conference (ICMC) was hosted at Michigan State University in East Lansing, Michigan. It became an annual event, hosted by the International Computer Music Association (ICMA), for AI composers and researchers alike [5].

Applications

Music Production

One of the biggest breakthroughs in computer-generated music was the Experiments in Musical Intelligence (EMI) system. Developed by David Cope, an American composer and scientist at the University of California, Santa Cruz, EMI could analyze different types of music and create unique compositions by genre. It has since created more than a thousand musical works based on the styles of over 30 different composers [6] [7].

In 2016, Google, one of the leaders in AI technology, released Magenta. Magenta is “an open source research project exploring the role of machine learning as a tool in the creative process” [8]. Rather than following hard-coded rules, Magenta learns by example from music that humans have created. It can be thought of as an assistant to humans in the creative process, rather than a replacement [9].

Recommendation Models

Spotify Collaborative Modeling

Natural Language Processing

Convolutional Neural Networks

Ethical Implications

Commodification of Music

Big Data

Copyright

See Also

References

1. Freeman, Jeremy. “Artificial Intelligence and Music — What the Future Holds?” Medium, 24 Feb. 2020, medium.com/@jeremy.freeman_53491/artificial-intelligence-and-music-what-the-future-holds-79005bba7e7d.

2. Li, Chong. “A Retrospective of AI + Music - Prototypr.” Medium, 25 Sept. 2019, blog.prototypr.io/a-retrospective-of-ai-music-95bfa9b38531.

3. Li, Chong. “A Retrospective of AI + Music - Prototypr.” Medium, 25 Sept. 2019, blog.prototypr.io/a-retrospective-of-ai-music-95bfa9b38531.

4. Freeman, Jeremy. “Artificial Intelligence and Music — What the Future Holds?” Medium, 24 Feb. 2020, medium.com/@jeremy.freeman_53491/artificial-intelligence-and-music-what-the-future-holds-79005bba7e7d.

5. Freeman, Jeremy. “Artificial Intelligence and Music — What the Future Holds?” Medium, 24 Feb. 2020, medium.com/@jeremy.freeman_53491/artificial-intelligence-and-music-what-the-future-holds-79005bba7e7d.

6. Li, Chong. “A Retrospective of AI + Music - Prototypr.” Medium, 25 Sept. 2019, blog.prototypr.io/a-retrospective-of-ai-music-95bfa9b38531.

7. Freeman, Jeremy. “Artificial Intelligence and Music — What the Future Holds?” Medium, 24 Feb. 2020, medium.com/@jeremy.freeman_53491/artificial-intelligence-and-music-what-the-future-holds-79005bba7e7d.

8. Magenta. Google, magenta.tensorflow.org.

9. “How Google Is Making Music with Artificial Intelligence.” Science | AAAS, 8 Aug. 2017, www.sciencemag.org/news/2017/08/how-google-making-music-artificial-intelligence.