Artificial Agents

Artificial agents are bots or programs that autonomously collect information or perform a service based on user input or their environment.[1] Typical characteristics of agents include adapting based on experience, solving problems, analyzing success and failure rates, and using memory-based storage and retrieval.[1] Humans are responsible for the design and behavior of the agent; however, the agent itself can interact with its environment freely within the scope of its granted domain. Luciano Floridi explains that this autonomy allows agents to learn and adapt entirely on their own. Artificial agents differ from their human inventors in that they do not acquire feelings or emotions in achieving their goals. According to Floridi, however, artificial agents are still classified as moral agents. An artificial agent's morality, actions, embedded values, and biases are ethically controversial.[2]

Differentiation From Humans

Compared to humans, computers are highly efficient at processing complex calculations and completing repetitive tasks with minimal margins of error. This realization, combined with the need to increase productivity, led to the birth of artificial agents. This is where the differentiation lies: humans make artificial agents, but artificial agents are not human. Though artificial agents can adapt and learn, as humans can, they do so in a different way. Artificial agents do not experience emotion or feeling, which could lead to larger issues when they take on moral tasks. Human beings are able to comprehend the impact of their actions, but artificial agents tend to be goal-driven in the sense that they will do whatever is necessary to reach the desired outcome.

In 1950, Alan Turing proposed a test of a machine's ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human. This thought experiment is known today as the Turing Test. The test assesses only how human-like a machine's behavior appears; it does not show that a machine feels or is conscious. Consciousness is something that humans have while artificial agents, most likely, do not, and it is difficult to claim that an artificial agent is aware of its own being. Thus, artificial agents differ from humans because they can make their own decisions without being fully aware of how or why, while humans are able to make decisions consciously.[3]

Three Criteria of Artificial Agents

Floridi lays out three basic criteria of an agent:[2]

- Interactivity

- Autonomy

- Adaptability

Interactivity

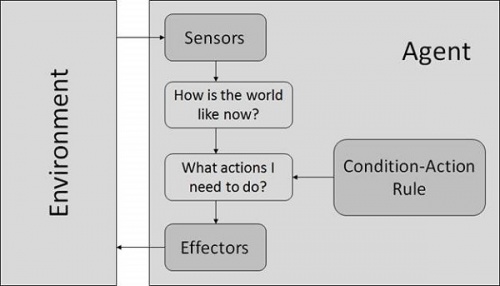

Interactivity refers to the idea that the agent and its environment can act upon each other. The input or output of a value is a common example of interactivity. Interactivity can also refer to agents and participants acting at the same time.[2]

Autonomy

An autonomous agent is one with a certain degree of intelligence so that it can act on the user's behalf. This means that, without direct intervention from an outside source, an agent can change its state. However, autonomy doesn't mean the agent can do whatever it pleases: when making decisions, it can only act within the degree of intelligence it has been given.[4] Autonomy also implies a degree of complexity. An agent is autonomous if it can perform internal transitions that change its state, which requires the agent to have two or more states.[2][5]

Adaptability

Adaptability refers to the idea that the agent's interactions can change the transition rules by which it changes state, so the agent can come to behave differently as a result of experience. Adaptability follows from interactivity and autonomy when the agent's transition rules are stored as part of its internal state.[2]
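The three criteria can be illustrated with a small state-transition sketch. This is only an illustrative toy, not Floridi's formalism: the class name `ToyAgent`, its states, and its stimuli are all hypothetical and chosen for the example.

```python
from dataclasses import dataclass, field


@dataclass
class ToyAgent:
    """Hypothetical agent modelled as a small state-transition system."""

    state: str = "idle"
    ticks_idle: int = 0
    # The transition rules are stored inside the agent's own state,
    # so later interactions are able to rewrite them.
    rules: dict = field(default_factory=lambda: {"ping": "active", "stop": "idle"})

    def interact(self, stimulus: str) -> str:
        """Interactivity: the environment acts on the agent, which responds."""
        self.state = self.rules.get(stimulus, self.state)
        self.ticks_idle = 0
        return f"state={self.state}"

    def tick(self) -> None:
        """Autonomy: an internal transition that needs no outside stimulus."""
        if self.state == "idle":
            self.ticks_idle += 1
            if self.ticks_idle >= 3:
                self.state = "sleeping"

    def learn(self, stimulus: str, new_state: str) -> None:
        """Adaptability: an interaction changes the transition rules themselves."""
        self.rules[stimulus] = new_state


agent = ToyAgent()
print(agent.interact("ping"))   # state=active
agent.interact("stop")
for _ in range(3):
    agent.tick()                # the agent changes state with no input
print(agent.state)              # sleeping
agent.learn("ping", "alert")
print(agent.interact("ping"))   # state=alert
```

Because `rules` is part of the agent's stored state, the same stimulus (`"ping"`) produces a different transition after `learn` is called, which is the sense in which adaptability builds on the other two criteria.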

Examples of Artificial Agents

Web Bots

Web bots are widely used as filters for users' email accounts. Web bots satisfy the criteria to be considered artificial agents in that they interact with their environment (in this case, the users' email) by blocking unwanted messages. This process is fully automated, so users do not have to delete unwanted emails manually. Web bots also continually learn and adapt to users' preferences in order to improve the accuracy of their filters.
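The idea of a filter that adapts to a user's preferences can be sketched as follows. This is a deliberately simplified toy that counts words; it is not how any particular email filter works, and the class `ToySpamFilter` and its methods are hypothetical names introduced for illustration.

```python
from collections import Counter


class ToySpamFilter:
    """Hypothetical word-counting filter that adapts to user feedback."""

    def __init__(self) -> None:
        self.spam_words = Counter()   # words seen in messages marked unwanted
        self.ham_words = Counter()    # words seen in messages marked wanted

    def feedback(self, message: str, is_spam: bool) -> None:
        """Learn from the user marking a message as wanted or unwanted."""
        target = self.spam_words if is_spam else self.ham_words
        target.update(message.lower().split())

    def blocks(self, message: str) -> bool:
        """Block a message when its words lean toward past unwanted mail."""
        words = message.lower().split()
        score = sum(self.spam_words[w] - self.ham_words[w] for w in words)
        return score > 0


bot = ToySpamFilter()
print(bot.blocks("free prize inside"))        # False: nothing learned yet
bot.feedback("win a free prize now", is_spam=True)
bot.feedback("lunch at noon", is_spam=False)
print(bot.blocks("free prize inside"))        # True
print(bot.blocks("lunch tomorrow at noon"))   # False
```

The filter's decisions change only because of the feedback it receives, with no change to its code, which mirrors the adaptation described above.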

Smart Thermostats

Smart thermostats maintain a comfortable residential temperature. The device interacts with the residential environment by heating or cooling it. It is designed to operate with minimal human interaction, which reduces the opportunity for human error, and it adapts to its environment through sensors that let it determine whether to suspend or engage heating or cooling.
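A minimal sketch of the sense-and-decide loop described above is shown here. The target temperature, the dead band, and the names `Thermostat` and `step` are assumptions made for illustration; real devices use more elaborate control and learning logic.

```python
from dataclasses import dataclass


@dataclass
class Thermostat:
    """Hypothetical thermostat that decides when to heat, cool, or stay off."""

    setpoint_c: float = 21.0   # assumed target temperature in Celsius
    band_c: float = 0.5        # assumed dead band to avoid rapid switching
    mode: str = "off"

    def step(self, sensed_c: float) -> str:
        """Read one sensor value and engage or suspend heating and cooling."""
        if sensed_c < self.setpoint_c - self.band_c:
            self.mode = "heat"
        elif sensed_c > self.setpoint_c + self.band_c:
            self.mode = "cool"
        elif self.mode == "heat" and sensed_c >= self.setpoint_c:
            self.mode = "off"   # target reached while heating
        elif self.mode == "cool" and sensed_c <= self.setpoint_c:
            self.mode = "off"   # target reached while cooling
        return self.mode


thermostat = Thermostat()
readings = [19.0, 20.8, 21.2, 22.0, 21.0]
print([thermostat.step(r) for r in readings])
# ['heat', 'heat', 'off', 'cool', 'off']
```

The dead band keeps the device from switching on and off around the target, which is one simple way to operate without a person adjusting it.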

Autonomous Vehicles

Autonomous vehicles remove the burden of driving from their human controllers and take on the various responsibilities involved in driving themselves. To function, autonomous vehicles use a variety of computer vision techniques to adapt to changes in their environment. Combined with complex algorithms that handle the incoming computer vision data, these artificial agents may in some cases outperform their human counterparts.

Learning and Intentionality

Human beings often learn from experience. This quality, however, extends to artificial agents as well. As artificial agents encounter different computational situations, they are able to modify their actions. This exhibits not only their ability to learn but also their ability to interact with their environment without human assistance. Frances Grodzinsky postulates that as an artificial agent learns and becomes more complex, its future behavior becomes harder to predict.[6] This becomes increasingly important as artificial agents are integrated into riskier domains. When artificial agents are able to learn and adapt on their own, they can surpass their human creators, which poses major problems should the designer lose control of the agent. The idea that an agent can break free from its creator's intent hinders the agent's ability to be considered intentional. Intentionality of an artificial agent requires that it be essentially predictable, but does not imply that it has consciousness. Therefore, an artificial agent has intentionality so long as it does not surpass its creator's desired outcomes. Once the human inventor loses control of the artificial agent, the agent loses intentionality and can become dangerous.[6]

Artificial Agents in Gaming

DeepMind is a company that has applied artificial agents to gaming. Its employees have created a system that aims to play Atari games. Demis Hassabis, CEO of DeepMind, explains that his staff has made an algorithm that is meant to learn on its own through experience. After a few hundred attempts at playing, the artificial agent learns how to win the games in the most efficient manner. Though the consequences of an artificial agent's actions in gaming are relatively minor, the growing use of artificial agents poses bigger issues. For example, artificial agents may one day be making medical, financial, and even governmental decisions.[8] This raises the stakes, especially because the creators can easily lose control of the agent's actions.

For example, the programmers at DeepMind made an artificial agent that completed a game in a way its human creators had never thought of themselves. The agent acted in a way that its creators were unable to predict.[6]

Ethics

The rise of revolutionary technologies demands a symmetric rise in information ethics, at least according to leading ICT ethicist Philip Brey.[9] The value that technologies can provide to humans makes their integration into our lives both consistent and ever-expanding. James Hogan (author of The Two Faces of Tomorrow) discusses how we must control the amount of power we give artificial agents. It is important to understand and prioritize the fact that artificial agents are autonomous. The more power they are given, the less certain we can be about how they will act.[10] Another major ethical concern is the proposition that artificial agents are moral agents, which has raised controversy.

Artificial Agents as Moral Agents

Morality is the ability to distinguish between right and wrong. Often, this implies an understood foundation of law, meaning that there are consequences for certain actions and praise for others. For an agent to be considered moral, it should be possible to punish or honor it. Moral agents can perform actions for good or for evil.[11] In the case of artificial agents, it is unclear whether repercussions and rewards are applicable.

An artificial agent can do things that have moral consequences.[2] This suggests that artificial agents can distinguish between right and wrong, or can at least produce a right or wrong outcome. Kenneth Himma does not go so far as to say that artificial agents are moral agents. Rather, he recognizes that artificial agents can only be moral agents if there is evidence that the artificial agent understands morality and is able to make decisions on its own. A better understanding of a moral agent comes down to who is responsible for the actions undertaken.[3]

Responsibility for Artificial Agent’s Actions

By nature, an artificial agent is just that: artificial, made by humans. Because of this, it is assumed that human beings are to blame for the actions of the agents they produce. To better understand this, it is important to know what responsibility entails. A responsible entity has intention and awareness of its actions.

Himma draws a clear comparison here, noting that society doesn't hold people with cognitive disabilities morally accountable in the same way that it holds other people accountable, because their disability interferes with their ability to comprehend moral consequences.[3] Likewise, we do not punish the artificial agent because it is unclear whether it understands the difference between right and wrong, even if it can produce a moral outcome. We must keep the people who design artificial agents accountable for the agents' actions; otherwise, no one can be held accountable.[8] Johnson recognizes that even though it may be the actions of the artificial agent that are deemed right or wrong, it is still the responsibility of the creator to understand the risk in designing an autonomous agent. Frances Grodzinsky echoes this sentiment, placing the responsibility for artificial agents' behavior on the designer.[12] When designing and planning the actions of artificial agents, it is important for designers to establish what their intentions are and to try to anticipate any behaviors that could result in immoral interactions. For this reason, designers must be careful when developing and designing an agent's interactions.[6]

Artificial Agents and Bias

Because artificial agents are human-made but still autonomous, major ethical issues arise when values are brought into play. Human beings innately have opinions, and as the creators of artificial agents, they can let these opinions slip into the technologies. As it has become a trend to apply artificial agents in the real world, the biases they carry inevitably affect certain groups of people.

Examples

COMPAS is an AI algorithm used by law enforcement departments to predict whether criminals are likely to commit another crime in the future. It was designed to take personal human bias out of the process of computing this sensitive metric, based on information including a criminal's age, gender, and previously committed crimes. However, tests of this system have found it to overestimate the likelihood of recidivism for black people at a much higher rate than it does for white people[14] because it was designed by people who have their own biases.

The recruiting AI adopted by Amazon to review resumes was found to be biased against female applicants.[15]

While the intention of these algorithms is to remove human bias from the equation, humans have moral consciousness, whereas it is unclear whether artificial agents do. When artificial agents have their creators' biases built in and then act on their own, these biases can be exploited and amplified.[16]

References

- [1] Rouse, M. "Intelligent Agent." SearchEnterpriseAI, TechTarget, 2019. https://searchenterpriseai.techtarget.com/definition/agent-intelligent-agent

- [2] Floridi, Luciano, and Sanders, J.W. "On the Morality of Artificial Agents." 2004.

- [3] Himma, Kenneth. "Artificial agency, consciousness, and the criteria for moral agency: what properties must an artificial agent have to be a moral agent?" 2009.

- [4] Chang, Chia-hao, and Yubao Chen. "Autonomous Intelligent Agent and Its Potential Applications." Computers & Industrial Engineering, vol. 31, no. 1–2, Oct. 1996, pp. 409–412.

- [5] "Artificial Intelligence." Stanford Encyclopedia of Philosophy, 2018.

- [6] Grodzinsky, Frances, Miller, Keith, and Wolf, Marty. "The ethics of designing artificial agents." 2008.

- [7] Hornyak, Tim. "Google's Powerful DeepMind AI Masters Classic Atari Video Games." 2015.

- [8] Johnson, Deborah, and Miller, Keith. "Un-making artificial moral agents." 2008.

- [9] Brey, Philip. "Anticipating ethical issues in emerging IT." Ethics and Information Technology, Dec. 2012. https://link.springer.com/article/10.1007/s10676-012-9293-y

- [10] Hogan, James. "The Two Faces of Tomorrow." 1979.

- [11] Floridi, Luciano. "On the Morality of Artificial Agents." Minds and Machines, Aug. 2004. https://link.springer.com/article/10.1023/B:MIND.0000035461.63578.9d

- [12] Grodzinsky, Frances S., et al. "The Ethics of Designing Artificial Agents." Ethics and Information Technology, vol. 10, no. 2–3, 2008, pp. 115–121. doi:10.1007/s10676-008-9163-9.

- [13] Torres, Monica. "Amazon Reportedly Scraps AI Recruiting Tool That Was Biased against Women." 2018.

- [14] Larson, J., Mattu, S., Kirchner, L., and Angwin, J. "How We Analyzed the COMPAS Recidivism Algorithm." May 2016.

- [15] Marr, Bernard. "Artificial Intelligence Has A Problem With Bias, Here's How To Tackle It." 2019.

- [16] Brey, Philip. "Values in technology and disclosure computer ethics." 2012.