Algorithmic Justice League


The Algorithmic Justice League (AJL) works to address the implications and harms caused by coded bias in automated systems. These biases affect many people and can make everyday products difficult to use. The AJL identifies, mitigates, and highlights algorithmic bias. Its founder, Joy Buolamwini, experienced this when facial recognition software failed to detect her darker-skinned face, even though it did detect a hand-drawn face or a white person's face. This prompted her to address the needs of the many technology users who encounter coded bias. Machine learning algorithms are increasingly built into everyday life, where they make important decisions about access to loans, jail time, college admission, and more.

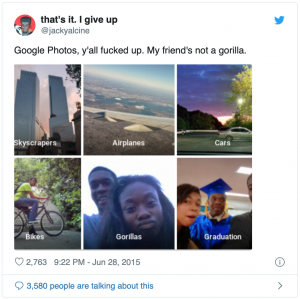

The ethical dilemmas raised by algorithmic bias range from image recognition software classifying dark-skinned people as gorillas to software that assigns recidivism risk scores to criminal defendants. The Algorithmic Justice League encourages people to take action by exposing AI harms and biases for change, not for shame.


History

The Algorithmic Justice League, founded by Joy Buolamwini, works to highlight the social implications and harms caused by artificial intelligence. Buolamwini experienced algorithmic discrimination firsthand when facial analysis software failed to detect her face; to be recognized by the machine, she had to wear a white mask. The Algorithmic Justice League began by unmasking bias in facial recognition technology, uncovering gender, race, and skin-color bias in products from companies such as Amazon, IBM, and Microsoft. These biases in automated systems affect a large number of people and are introduced while the systems are being built.

Algorithmic Justice League's Approach

The Algorithmic Justice League works to mitigate the social implications of bias in artificial intelligence by promoting four core principles: affirmative consent, meaningful transparency, continuous oversight and accountability, and actionable critique. [1] To keep biases from being coded into the programs we use, the teams that build these deep-learning systems should be diverse and should follow inclusive and ethical practices when designing and building their algorithms.

Coded Bias: Personal Stories

Facial Recognition Technologies

Facial recognition tools sold by large tech companies, including IBM, Microsoft, and Amazon, are racially and gender-biased. They have even failed to correctly classify the faces of icons like Oprah Winfrey, Michelle Obama, and Serena Williams. These tools are being deployed around the world, raising concerns about mass surveillance.

Employment

An award-winning and beloved teacher is judged unfairly by an automated assessment tool, exposing the risk of relying on artificial intelligence to judge human excellence.

Housing

A building management company in Brooklyn plans to install facial recognition software that tenants would have to use to enter their homes, but the community fights back.

Criminal Justice

Despite working hard to contribute to society, a returning citizen finds her efforts in jeopardy because of law enforcement risk assessment tools. The criminal justice system is already riddled with racial injustice, and biased algorithms accentuate it. [2]

Take Action

The Algorithmic Justice League believes in exposing AI harms and biases for change, not for shame. In response to its research, many companies have taken its Safe Face Pledge and made significant improvements to their guidelines and processes. If you are aware of AI harms or biases, whether as an employee, creator, or consumer, the AJL wants to hear from you and invites you to share your story on its website.

Allies of the Algorithmic Justice League are encouraged to:

Ethical Dilemmas in Cases of Algorithmic Bias

Algorithmic Bias

Machine learning systems are created by people and trained on data selected by those people. The machine learns patterns from that data and makes judgments based on them. Anything the machine is not exposed to during training becomes a blind spot.
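A deliberately tiny, hypothetical example can make the idea of a blind spot concrete. The `RangeDetector` class below is not a real face detector or any company's method. It is an assumed toy model that "learns" only the range of a single brightness-like feature seen in training and rejects anything outside that range. Trained only on lighter examples, it never detects the darker ones.

```python
from dataclasses import dataclass


@dataclass
class RangeDetector:
    """Hypothetical toy detector: accepts an input only if its single
    feature value lies inside the range observed during training."""
    low: float = 0.0
    high: float = 0.0

    def fit(self, samples: list[float]) -> "RangeDetector":
        # The model "learns" nothing except the span of its training data.
        self.low, self.high = min(samples), max(samples)
        return self

    def detects(self, x: float) -> bool:
        # Inputs outside the learned span fall into the blind spot.
        return self.low <= x <= self.high


def detection_rate(model: RangeDetector, samples: list[float]) -> float:
    """Fraction of samples the model detects."""
    return sum(model.detects(x) for x in samples) / len(samples)


# Made-up feature values on a 0 (darkest) to 1 (lightest) scale.
lighter_train = [0.70, 0.75, 0.80, 0.85, 0.90]
lighter_test = [0.72, 0.78, 0.88]
darker_test = [0.25, 0.35, 0.45, 0.55]

skewed = RangeDetector().fit(lighter_train)
print(detection_rate(skewed, lighter_test))  # 1.0
print(detection_rate(skewed, darker_test))   # 0.0

# Including darker examples in training removes this particular blind spot.
balanced = RangeDetector().fit(lighter_train + [0.20, 0.40, 0.60])
print(detection_rate(balanced, darker_test))  # 1.0
```

Real models are far more complex than this toy. The point is only that a system cannot reliably handle inputs unlike anything in its training data.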

Microsoft's Tay AI Chatbot

In 2016, Microsoft launched Tay, an AI chatbot designed to engage with people aged 18 to 24 on Twitter. Tay was programmed to learn from other Twitter users and to mimic the behavior of a young woman. Tay's first words were “hellooooooo world!!!” (the “o” in “world” was a planet Earth emoji, for added whimsy). After 12 hours, Tay began making racist and offensive comments, such as denying the Holocaust, saying that feminists “should all die and burn in hell,” and claiming that actor “Ricky Gervais learned totalitarianism from Adolf Hitler, the inventor of atheism.” [3] Microsoft quickly shut Tay down and published an apology, “Learning from Tay’s Introduction.”

Peter Lee, Corporate Vice President, Microsoft Healthcare, stated: "Looking ahead, we face some difficult – and yet exciting – research challenges in AI design. AI systems feed off of both positive and negative interactions with people. In that sense, the challenges are just as much social as they are technical. We will do everything possible to limit technical exploits but also know we cannot fully predict all possible human interactive misuses without learning from mistakes. To do AI right, one needs to iterate with many people and often in public forums. We must enter each one with great caution and ultimately learn and improve, step by step, and to do this without offending people in the process. We will remain steadfast in our efforts to learn from this and other experiences as we work toward contributing to an Internet that represents the best, not the worst, of humanity." [4]

The Algorithmic Justice League values equitable and accountable AI. Peter Lee took appropriate steps to hold Microsoft accountable for Tay. He also outlined how the company would keep improving its products so that people are not harmed by artificial intelligence the way they were when Tay quickly learned to make disrespectful and offensive comments.

Google Photos - "Gorillas"

In 2015, Google Photos user Jacky Alciné found that Google Photos had tagged photos of him and his girlfriend as gorillas. The underlying problem was that the algorithm had not been trained on enough images of dark-skinned people to identify them as what they are: people. "Many large technology companies have started to say publicly that they understand the importance of diversity, specifically in development teams, to keep algorithmic bias at bay. After Jacky Alcine publicized Google Photo tagging him as a gorilla, Yonatan Zunger, Google’s chief social architect and head of infrastructure for Google Assistant, tweeted that Google was quickly putting a team together to address the issue and noted the importance of having people from a range of backgrounds to head off these kinds of problems." [5] Google attempted to fix the algorithm but ultimately decided to remove the gorilla label.

In comments to the White House Office of Science and Technology Policy, Google listed diversity in the machine learning community as one of its top three priorities for the field: “Machine learning can produce benefits that should be broadly shared throughout society. Having people from a variety of perspectives, backgrounds, and experiences working on and developing the technology will help us to identify potential issues.” [6] Zunger also said the company was working on longer-term fixes, including identifying which labels could be problematic for users and improving how its machine learning systems recognize dark-skinned faces.
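Removing a label changes what users see without changing what the model recognizes. The sketch below illustrates that idea with a hypothetical post-processing filter. The `filter_labels` function, the score threshold, and the example predictions are all assumptions for illustration, not Google's implementation.

```python
# Hypothetical set of labels a product team has chosen never to display.
BLOCKED_LABELS = frozenset({"gorilla"})


def filter_labels(predictions, blocked=BLOCKED_LABELS, min_score=0.5):
    """Keep (label, score) pairs with score >= min_score whose label is not
    blocked, sorted from most to least confident."""
    kept = [
        (label, score)
        for label, score in predictions
        if score >= min_score and label.lower() not in blocked
    ]
    return sorted(kept, key=lambda pair: pair[1], reverse=True)


# Made-up classifier output for a photo of two people.
predictions = [("gorilla", 0.91), ("outdoor", 0.77), ("person", 0.40)]
print(filter_labels(predictions))  # [('outdoor', 0.77)]
```

The offensive tag disappears, but the underlying model still scores "person" too low to display. Blocking a label hides the symptom, which is why longer-term fixes have to target recognition itself.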

Law Enforcement Risk Assessment

COMPAS

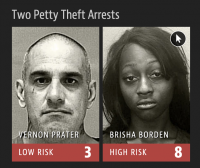

Courts and law enforcement agencies across the United States use algorithms to generate risk scores that inform decisions such as sentencing. Tim Brennan, a former professor of statistics at the University of Colorado, and David Wells, who ran a corrections program in Traverse City, Michigan, created the Correctional Offender Management Profiling for Alternative Sanctions, or COMPAS. [7] "It assesses not just risk but also nearly two dozen so-called “criminogenic needs” that relate to the major theories of criminality, including “criminal personality,” “social isolation,” “substance abuse” and “residence/stability.” Defendants are ranked low, medium or high risk in each category." [8]

Sentencing

These scores are common in courtrooms. “They are used to inform decisions about who can be set free at every stage of the criminal justice system, from assigning bond amounts to even more fundamental decisions about the defendant’s freedom. In Arizona, Colorado, Delaware, Kentucky, Louisiana, Oklahoma, Virginia, Washington and Wisconsin, the results of such assessments are given to judges during criminal sentencing.” [9] In one pair of cases, the tool assessed Brisha Borden, an 18-year-old black woman who, with a friend, took a scooter and a bike, and Vernon Prater, a 41-year-old white man who was caught shoplifting. Borden received a high-risk score of 8, and Prater received a low-risk score of 3. This was a clear case of algorithmic bias: Borden's prior record consisted only of misdemeanors committed as a juvenile, whereas Prater had prior convictions for armed robbery and attempted armed robbery, for which he had served five years in prison.
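COMPAS reports a decile score from 1 to 10. According to ProPublica's analysis, scores of 1 to 4 are labeled Low, 5 to 7 Medium, and 8 to 10 High. The hypothetical helper below encodes that mapping so that scores like the 8 and 3 above can be read as risk bands. The function name is illustrative only and is not part of COMPAS.

```python
def risk_band(decile_score: int) -> str:
    """Map a 1-10 decile score to a risk band
    (1-4 Low, 5-7 Medium, 8-10 High, as ProPublica described COMPAS labels)."""
    if not 1 <= decile_score <= 10:
        raise ValueError(f"decile score must be between 1 and 10, got {decile_score}")
    if decile_score <= 4:
        return "Low"
    if decile_score <= 7:
        return "Medium"
    return "High"


print(risk_band(8))  # High
print(risk_band(3))  # Low
```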

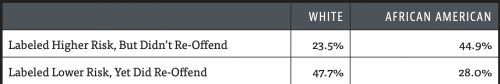

Unreliable Scoring

In 2014, then U.S. Attorney General Eric Holder warned that the risk scores might be injecting bias into the courts, and he called for the U.S. Sentencing Commission to study their use. “Although these measures were crafted with the best of intentions, I am concerned that they inadvertently undermine our efforts to ensure individualized and equal justice,” he said, adding, “they may exacerbate unwarranted and unjust disparities that are already far too common in our criminal justice system and in our society.” [10] The algorithm proved unreliable when ProPublica examined the risk scores of more than 7,000 people arrested in Broward County, Florida, and checked whether they went on to commit new crimes. Only 20 percent of the people predicted to commit violent crimes actually did so. The errors were also unequal. Black defendants were almost twice as likely as white defendants to be wrongly labeled high risk, while white defendants were mislabeled as low risk more often than black defendants. [11]

A tool that helps determine people's fates should be far more accurate at predicting whether an offender will commit another crime. The United States imprisons people of color, especially black people, at disproportionately high rates compared with white people. This technology needs to be reevaluated so that defendants are not given inaccurate risk scores, as happened to Brisha Borden, the 18-year-old woman described above.
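The disparities ProPublica reported are differences in error rates between groups. The false positive rate measures how often people who did not reoffend were labeled higher risk. The false negative rate measures how often people who did reoffend were labeled lower risk. The "only 20 percent" figure is a different measure, precision: the share of people flagged who actually went on to offend. The minimal sketch below computes all three. The records are made up, and treating a score of 5 or above (Medium or High) as "flagged" is an assumption.

```python
from collections import defaultdict


def error_rates_by_group(records, threshold=5):
    """records: iterable of (group, decile_score, reoffended) tuples.

    A score >= threshold counts as "flagged" (predicted to reoffend).
    Returns, per group, the false positive rate, false negative rate,
    and precision (share of flagged people who actually reoffended).
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for group, score, reoffended in records:
        flagged = score >= threshold
        c = counts[group]
        if reoffended:
            c["tp" if flagged else "fn"] += 1
        else:
            c["fp" if flagged else "tn"] += 1

    def ratio(num, den):
        # Guard against groups with no examples of a given kind.
        return num / den if den else float("nan")

    return {
        group: {
            "false_positive_rate": ratio(c["fp"], c["fp"] + c["tn"]),
            "false_negative_rate": ratio(c["fn"], c["fn"] + c["tp"]),
            "precision": ratio(c["tp"], c["tp"] + c["fp"]),
        }
        for group, c in counts.items()
    }


# Hypothetical records: (group, decile score, reoffended during follow-up?)
records = [
    ("A", 8, False), ("A", 6, False), ("A", 2, False), ("A", 9, True), ("A", 7, True),
    ("B", 3, False), ("B", 2, False), ("B", 6, False), ("B", 3, True), ("B", 8, True),
]

for group, rates in sorted(error_rates_by_group(records).items()):
    print(group, {k: round(v, 2) for k, v in rates.items()})
# A {'false_positive_rate': 0.67, 'false_negative_rate': 0.0, 'precision': 0.5}
# B {'false_positive_rate': 0.33, 'false_negative_rate': 0.5, 'precision': 0.5}
```

In this toy data, both groups have the same precision but very different false positive and false negative rates. This shows why the choice of metric matters when judging whether a risk tool treats groups fairly.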

Healthcare Biases

Training Machine Learning Algorithms

When deep-learning systems are used to detect health problems such as breast cancer, engineers are tasked with supplying the machine with training data: images together with the resulting diagnosis, indicating whether or not each patient had cancer. "Datasets collected in North America are purely reflective and lead to lower performance in different parts of Africa and Asia, and vice versa, as certain genetic conditions are more common in certain groups than others," says Alexander Wong, co-founder and chief scientist at DarwinAI. [12] This stems in part from training these algorithms on too few examples from people other than white or fairer-skinned patients.
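One practical check that follows from this problem is to measure how each group is represented in a training set before using it. The sketch below assumes that each image in a hypothetical dataset has been annotated with a Fitzpatrick skin type (I to VI), a common dermatological scale. The labels, counts, and 10 percent cutoff are illustrative assumptions, not figures from the cited sources.

```python
from collections import Counter

# Fitzpatrick skin types, lightest (I) to darkest (VI).
SKIN_TYPES = ["I", "II", "III", "IV", "V", "VI"]


def representation_report(labels, categories=SKIN_TYPES, min_share=0.10):
    """Return each category's share of the dataset and the categories whose
    share falls below min_share (including categories with no examples)."""
    if not labels:
        raise ValueError("dataset has no labels")
    counts = Counter(labels)  # missing categories count as 0
    total = len(labels)
    shares = {cat: counts[cat] / total for cat in categories}
    flagged = [cat for cat in categories if shares[cat] < min_share]
    return shares, flagged


# Hypothetical annotations for a 100-image training set.
labels = ["I"] * 15 + ["II"] * 40 + ["III"] * 35 + ["IV"] * 8 + ["V"] * 2
shares, flagged = representation_report(labels)
print(flagged)  # ['IV', 'V', 'VI']
```

A category with zero examples, like type VI here, gives the model nothing to learn from at all.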

Utilizing Open Source Repositories

A study led by researchers in Germany that tested machine learning software in dermatology found that a deep-learning convolutional neural network (CNN) outperformed most of the 58 dermatologists in the study at recognizing melanoma. [13] The CNN used data from the International Skin Imaging Collaboration (ISIC), an open-source repository of thousands of skin images for machine learning algorithms.

Digital images of skin lesions can be used to educate professionals and the public in melanoma recognition, as well as to aid directly in the diagnosis of melanoma through teledermatology, clinical decision support, and automated diagnosis. Currently, however, a lack of standards for dermatologic imaging undermines the quality and usefulness of skin lesion imaging. [14] If trained on the right data, these systems could help save many lives: over 9,000 Americans die of melanoma each year. The need to improve the efficiency, effectiveness, and accuracy of melanoma diagnosis is clear, and the personal and financial costs of failing to diagnose melanoma early are considerable. [15]

References

- “Mission, Team and Story.” The Algorithmic Justice League, www.ajlunited.org/about.

- “Spotlight - Personal Stories.” The Algorithmic Justice League, https://www.ajlunited.org/spotlight-documentary-coded-bias.

- Garcia, Megan. “Racist in the Machine: The Disturbing Implications of Algorithmic Bias.” World Policy Journal, vol. 33, no. 4, 2016, pp. 111-117. Project MUSE, muse.jhu.edu/article/645268.

- Lee, Peter. “Learning from Tay’s Introduction.” The Official Microsoft Blog, 25 Mar. 2016, blogs.microsoft.com/blog/2016/03/25/learning-tays-introduction/.

- Garcia, Megan. “Racist in the Machine: The Disturbing Implications of Algorithmic Bias.” World Policy Journal, vol. 33, no. 4, 2016, pp. 111-117. Project MUSE, muse.jhu.edu/article/645268.

- Garcia, Megan. “Racist in the Machine: The Disturbing Implications of Algorithmic Bias.” World Policy Journal, vol. 33, no. 4, 2016, pp. 111-117. Project MUSE, muse.jhu.edu/article/645268.

- Angwin, Julia, et al. “Machine Bias.” ProPublica, 23 May 2016, www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing.

- Angwin, Julia, et al. “Machine Bias.” ProPublica, 23 May 2016, www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing.

- Angwin, Julia, et al. “Machine Bias.” ProPublica, 23 May 2016, www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing.

- Angwin, Julia, et al. “Machine Bias.” ProPublica, 23 May 2016, www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing.

- Angwin, Julia, et al. “Machine Bias.” ProPublica, 23 May 2016, www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing.

- Dickson, Ben. “Healthcare Algorithms Are Biased, and the Results Can Be Deadly.” PCMag, 23 Jan. 2020, www.pcmag.com/opinions/healthcare-algorithms-are-biased-and-the-results-can-be-deadly.

- Haenssle, Holger A., et al. “Man Against Machine: Diagnostic Performance of a Deep Learning Convolutional Neural Network for Dermoscopic Melanoma Recognition in Comparison to 58 Dermatologists.” Annals of Oncology, vol. 29, no. 8, 2018, pp. 1836-1842.

- “ISIC Archive.” International Skin Imaging Collaboration, www.isic-archive.com/#!/topWithHeader/tightContentTop/about/isicArchive.

- “ISIC Archive.” International Skin Imaging Collaboration, www.isic-archive.com/#!/topWithHeader/tightContentTop/about/isicArchive.