Algorithmic Justice League


Abbreviation: AJL

Founder: Joy Buolamwini

Established: October 2016

Mission: The Algorithmic Justice League is leading a cultural movement towards equitable and accountable AI.

Website: https://www.ajlunited.org

Take Action: https://www.ajlunited.org/take-action

| − | |||

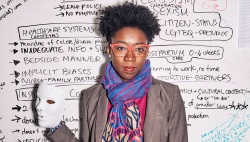

[[File:joyb.jpg|250px|thumb|MIT researcher, poet and computer scientist Joy Buolamwini]]


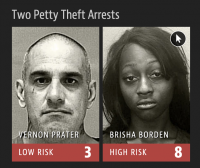

[[File:Borden.png|200px|thumb|Borden was rated high risk for future crime after she and a friend took a kid’s bike and scooter that were sitting outside. She did not reoffend.]]


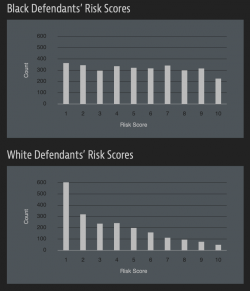


Revision as of 05:08, 27 March 2020


The Algorithmic Justice League works to address the harms caused by coded bias in automated systems. These biases affect many people and can make products difficult to use. The Algorithmic Justice League identifies, mitigates, and highlights algorithmic bias. Its founder, Joy Buolamwini, experienced such bias first-hand when facial recognition software failed to detect her darker-skinned face, even though it detected a hand-drawn face or a white person’s face. This prompted her to address the needs of the many technology users who encounter coded bias, since machine learning algorithms are increasingly part of everyday life, where they make important decisions about access to loans, jail time, college admission, and more.


History

The Algorithmic Justice League, founded by Joy Buolamwini, works to highlight the social implications and harms caused by artificial intelligence. Buolamwini experienced algorithmic discrimination first-hand when facial analysis software failed to detect her face. To be recognized by the machine, she had to wear a white mask over her face. The Algorithmic Justice League began by unmasking bias in facial recognition technology, uncovering gender, race, and skin-color bias in products from companies such as Amazon, IBM, and Microsoft. These biases in automated systems affect a large portion of the population and are introduced during the coding process.

Cases of Algorithmic Bias

Law Enforcement Risk Assessment

Sentencing

Law enforcement and courts across the United States use automated risk assessment tools to generate risk scores that inform decisions such as prison sentences. These scores are common in courtrooms. “They are used to inform decisions about who can be set free at every stage of the criminal justice system, from assigning bond amounts to even more fundamental decisions about the defendant’s freedom. In Arizona, Colorado, Delaware, Kentucky, Louisiana, Oklahoma, Virginia, Washington and Wisconsin, the results of such assessments are given to judges during criminal sentencing.”[1] One such tool was used to assess two cases: in one, an 18-year-old black woman and a friend took a scooter and a bike; in the other, a 41-year-old white man who had repeatedly shoplifted was caught again. The woman received a high-risk score of 8, while the man received a low-risk score of 3. This is a clear case of algorithmic bias: she had a far lighter record and did not go on to reoffend, yet received the higher score, while the man, a repeat offender since he was a juvenile, received the lower one.

Unreliable Scoring

In 2014, then U.S. Attorney General Eric Holder warned that the risk scores might be injecting bias into the courts and called for the U.S. Sentencing Commission to study their use. “Although these measures were crafted with the best of intentions, I am concerned that they inadvertently undermine our efforts to ensure individualized and equal justice,” he said. A tool that helps decide people’s fate should be more accurate at predicting whether an offender will commit another crime. The United States already imprisons people of color, especially black people, at disproportionately high rates compared to white people. This technology needs to be reevaluated so that defendants are not given incorrect risk scores, as happened to the 18-year-old woman described above.
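
Disparities like the one described above are often surfaced by comparing error rates across groups. The sketch below is a minimal illustration, assuming a hypothetical list of (group, labeled high risk, reoffended) records with invented values. It computes each group's false positive rate: the share of people who did not reoffend but were still labeled high risk. It is not a reproduction of any published analysis.

```python
from collections import defaultdict

# Hypothetical audit records: (group, labeled_high_risk, reoffended).
# The groups and values are invented for illustration; they are not real risk-score data.
RECORDS = [
    ("A", True, False),
    ("A", True, True),
    ("A", False, False),
    ("A", True, False),
    ("B", False, False),
    ("B", True, True),
    ("B", False, False),
    ("B", False, True),
]


def false_positive_rates(records):
    """Per group, the share of people who did not reoffend but were labeled high risk."""
    labeled_high = defaultdict(int)     # non-reoffenders who were labeled high risk
    non_reoffenders = defaultdict(int)  # everyone who did not reoffend
    for group, labeled_high_risk, reoffended in records:
        if not reoffended:
            non_reoffenders[group] += 1
            if labeled_high_risk:
                labeled_high[group] += 1
    return {group: labeled_high[group] / n for group, n in non_reoffenders.items()}


if __name__ == "__main__":
    for group, rate in sorted(false_positive_rates(RECORDS).items()):
        print(f"group {group}: false positive rate {rate:.2f}")
```

With these made-up records, two of the three non-reoffenders in group A are labeled high risk, while none in group B are: a miniature version of a person who did not reoffend being scored as high risk.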

Healthcare Biases

Training Machine Learning Algorithms

When deep-learning systems are used to diagnose health conditions such as breast cancer, engineers train them on data that pairs medical images with the resulting diagnoses, indicating whether or not each patient had cancer. "Datasets collected in North America are purely reflective and lead to lower performance in different parts of Africa and Asia, and vice versa, as certain genetic conditions are more common in certain groups than others," says Alexander Wong, co-founder and chief scientist at DarwinAI.[4] Part of the problem is that these algorithms are trained on few examples from people with skin tones other than white or lighter skin.
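
A single overall accuracy figure can hide this kind of gap. The sketch below assumes hypothetical (skin-tone group, true label, predicted label) evaluation records with invented values and reports accuracy separately for each group alongside the overall figure. Breaking results down by subgroup this way is often called disaggregated evaluation.

```python
from collections import defaultdict

# Hypothetical evaluation results: (skin_tone_group, true_label, predicted_label).
# Group names and outcomes are invented for illustration only.
RESULTS = [
    ("lighter", "cancer", "cancer"),
    ("lighter", "no_cancer", "no_cancer"),
    ("lighter", "no_cancer", "no_cancer"),
    ("lighter", "cancer", "cancer"),
    ("darker", "cancer", "no_cancer"),
    ("darker", "no_cancer", "no_cancer"),
    ("darker", "cancer", "cancer"),
    ("darker", "no_cancer", "cancer"),
]


def overall_accuracy(results):
    """Fraction of all predictions that match the true label."""
    correct = sum(1 for _, truth, predicted in results if truth == predicted)
    return correct / len(results)


def accuracy_by_group(results):
    """Fraction of correct predictions, computed separately for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in results:
        total[group] += 1
        if truth == predicted:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


if __name__ == "__main__":
    print(f"overall: {overall_accuracy(RESULTS):.2f}")
    for group, accuracy in sorted(accuracy_by_group(RESULTS).items()):
        print(f"{group}: {accuracy:.2f}")
```

Here the overall accuracy of 0.75 looks acceptable, but it averages a perfect score on the "lighter" group with a coin-flip 0.50 on the "darker" group.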

Utilizing Open Source Repositories

A study from Germany that tested machine learning software in dermatology found that a deep-learning convolutional neural network (CNN) outperformed most of the 58 dermatologists in the study at recognizing melanoma.[5] The CNN used data from the International Skin Imaging Collaboration (ISIC), an open-source repository of thousands of skin images for machine learning algorithms. Digital images of skin lesions can be used to educate professionals and the public in melanoma recognition, and to aid directly in diagnosing melanoma through teledermatology, clinical decision support, and automated diagnosis. Currently, however, a lack of standards for dermatologic imaging undermines the quality and usefulness of skin lesion images.[6] If trained on the right data, these systems could help save many lives. Over 9,000 Americans die of melanoma each year. The need to improve the efficiency, effectiveness, and accuracy of melanoma diagnosis is clear, and the personal and financial costs of failing to diagnose melanoma early are considerable.[7]
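
Comparisons between a classifier and clinicians, such as the one in this study, are commonly reported in terms of sensitivity (how many actual melanomas are caught) and specificity (how many benign lesions are correctly cleared). The sketch below computes both from hypothetical confusion-matrix counts; the numbers are invented and are not results from the cited study.

```python
def sensitivity(true_positives, false_negatives):
    """True positive rate: share of actual melanomas that are flagged."""
    return true_positives / (true_positives + false_negatives)


def specificity(true_negatives, false_positives):
    """True negative rate: share of benign lesions that are correctly cleared."""
    return true_negatives / (true_negatives + false_positives)


if __name__ == "__main__":
    # Hypothetical confusion-matrix counts for one reader (human or CNN);
    # these numbers are invented for illustration only.
    tp, fn, tn, fp = 18, 2, 64, 16
    print(f"sensitivity: {sensitivity(tp, fn):.2f}")
    print(f"specificity: {specificity(tn, fp):.2f}")
```

Reporting the two rates separately matters because one can be raised at the expense of the other: a system that flags every lesion as melanoma has perfect sensitivity but zero specificity.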

Algorithmic Justice League's Approach

The Algorithmic Justice League works to mitigate the social harms and biases of artificial intelligence by promoting four core principles: affirmative consent, meaningful transparency, continuous oversight and accountability, and actionable critique.[8] To keep biases from being coded into the programs we use, the teams building these deep-learning systems should be diverse and should follow inclusive, ethical practices when designing and building these algorithms.

References

1. Angwin, Julia, et al. “Machine Bias.” ProPublica, 9 Mar. 2019, www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing.

2. Angwin, Julia, et al. “Machine Bias.” ProPublica, 9 Mar. 2019, www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing.

3. Angwin, Julia, et al. “Machine Bias.” ProPublica, 9 Mar. 2019, www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing.

4. Dickson, Ben. “Healthcare Algorithms Are Biased, and the Results Can Be Deadly.” PCMAG, PCMag, 23 Jan. 2020, www.pcmag.com/opinions/healthcare-algorithms-are-biased-and-the-results-can-be-deadly.

5. Haenssle, Holger A., et al. "Man against machine: diagnostic performance of a deep learning convolutional neural network for dermoscopic melanoma recognition in comparison to 58 dermatologists." Annals of Oncology 29.8 (2018): 1836-1842.

6. “ISIC Archive.” ISIC Archive, www.isic-archive.com/#!/topWithHeader/tightContentTop/about/isicArchive.

7. “ISIC Archive.” ISIC Archive, www.isic-archive.com/#!/topWithHeader/tightContentTop/about/isicArchive.

8. “Mission, Team and Story - The Algorithmic Justice League.” Mission, Team and Story - The Algorithmic Justice League, www.ajlunited.org/about.