Algorithmic Audits

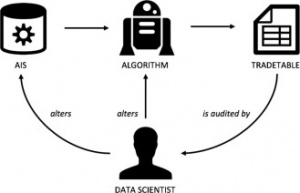

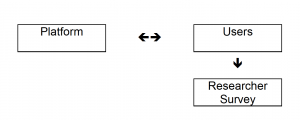

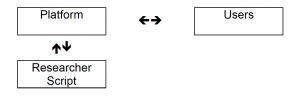

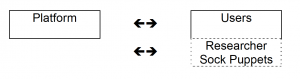

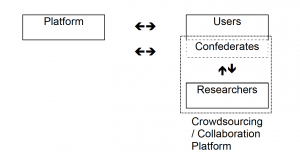

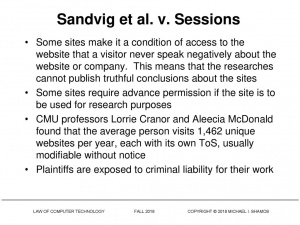

Latest revision as of 17:20, 10 April 2021

An algorithmic audit is the collection and analysis of data about an algorithm's behavior in a given context. Its purpose is to determine whether that behavior negatively affects the interests or rights of anyone the algorithm influences[1].

The internet runs on software that most people either do not understand or assume to be neutral (unbiased), which allows it to function relatively unchecked [2]. A Pew Research Center survey conducted in February 2021 found that 85% of U.S. adults use the internet daily [3].

In the U.S. alone, that share represents hundreds of millions of people. It underscores how pervasive algorithms are in daily life, despite a general lack of understanding of them. By treating an online platform as a single entity and analyzing the results it produces, researchers can learn more about algorithms and how they affect society[4].


Background

The rapid advancement of the tech industry has revolutionized the way humans work, interact, and communicate. Smart applications integrate a diverse array of features to maximize availability. At the core of these real-time digital services lie algorithms that provide essential functions such as sorting, segmentation, personalized recommendations, and information management[5].

In the last decade, researchers have identified numerous ethical breaches in online platforms and published their findings. Countries worldwide have rolled out their own regulations and laws to keep the Internet safe for their citizens. However, these national efforts do little to limit the international liberties afforded online, including access, anonymity, and privacy. Because technology and algorithms are so heavily integrated into daily life, ethical issues arise when algorithms are opaque to public scrutiny. Since the 2000s, the regulation of algorithms has become a concern for governments worldwide because of its data and privacy implications, though legislation has made progress in some essential areas[6].

Enrollment in computer science and similar higher-education programs has increased at both the undergraduate and graduate levels, alongside the tech industry's growth[7]. Awareness of algorithms and of the infrastructure behind digital platforms enables the public to identify ethical breaches in how these systems function. This awareness has sparked activism for ethics in technology. One example is Joy Buolamwini's identification of bias in facial recognition technologies. Another is the finding that SABRE used its recommendation algorithm to rank airlines that paid above their competitors[8].

Types of Audits

Code Audit[9]

A code audit is based on the concept of algorithmic transparency: researchers acquire a copy of the algorithm's code and vet it for unethical behavior. Code audits are uncommon because software is considered valuable intellectual property, and companies are reluctant to release their code, even to researchers.

Another problem with this method is that not all interest in a platform's code is benign. On many platforms, algorithm designers constantly battle people who try to game the software, so releasing code for review may cause more harm than good.
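With source access, part of a code audit can be mechanical. The Python sketch below (Python 3.9+) scans the source of a scoring function for places where sensitive attributes are read. The scoring function, the `find_sensitive_uses` helper, and the set of sensitive keys are all hypothetical. A real code audit must also trace indirect uses and proxy variables, which this string-literal check does not catch.

```python
import ast

# Hypothetical source code handed to auditors; not taken from any real platform.
SOURCE = '''
def score_listing(listing, user):
    score = listing["relevance"]
    if listing["owner"] == "HostAirline":
        score += 5.0  # undisclosed boost for the platform owner's own listings
    if user.get("zip_code") in {"00001", "00002"}:
        score -= 1.0
    return score
'''

# Keys the auditor treats as sensitive (an assumption made for this sketch).
SENSITIVE = {"owner", "zip_code", "race", "gender", "age"}


def find_sensitive_uses(source: str, sensitive: set) -> list:
    """Return sorted (line, key) pairs where a sensitive key is read as
    x["key"] or x.get("key"). Indirect uses (variables, proxies) are missed."""
    hits = []
    for node in ast.walk(ast.parse(source)):
        key = None
        if isinstance(node, ast.Subscript) and isinstance(node.slice, ast.Constant):
            key = node.slice.value  # e.g. listing["owner"] (Python 3.9+ AST layout)
        elif (isinstance(node, ast.Call) and isinstance(node.func, ast.Attribute)
              and node.func.attr == "get" and node.args
              and isinstance(node.args[0], ast.Constant)):
            key = node.args[0].value  # e.g. user.get("zip_code")
        if key in sensitive:
            hits.append((node.lineno, key))
    return sorted(hits)


for line, key in find_sensitive_uses(SOURCE, SENSITIVE):
    print(f"line {line}: score depends on {key!r}")
```

The check flags the owner-based boost and the zip-code penalty. Deciding whether either is unethical still requires human judgment about context.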

Noninvasive User Audit [9]

In a noninvasive user audit, researchers ask end users to share assorted input and output data so that the workings of an algorithm can be analyzed. Because this form of audit does not interact with the platform itself, the researcher cannot be accused of tampering with it. The most challenging aspect of a user audit is obtaining a truly random and representative sample. Without one, it is difficult to establish causality.
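A minimal sketch of the analysis step, assuming users have donated simple records that pair an attribute of interest with what the platform showed them. The records, field names, and `outcome_rates` helper are hypothetical. Because donors select themselves, a gap between groups here shows only a correlation in this sample, not that the algorithm causes it.

```python
from collections import defaultdict

# Hypothetical records voluntarily shared by end users: an input-side
# attribute ("group") and an output-side observation from the platform.
donated = [
    {"group": "A", "shown_premium_price": True},
    {"group": "A", "shown_premium_price": False},
    {"group": "A", "shown_premium_price": False},
    {"group": "A", "shown_premium_price": False},
    {"group": "B", "shown_premium_price": True},
    {"group": "B", "shown_premium_price": True},
    {"group": "B", "shown_premium_price": True},
    {"group": "B", "shown_premium_price": False},
]


def outcome_rates(records, group_key, outcome_key):
    """Share of records in each group whose outcome is truthy."""
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for r in records:
        counts[r[group_key]][0] += bool(r[outcome_key])
        counts[r[group_key]][1] += 1
    return {g: pos / total for g, (pos, total) in counts.items()}


rates = outcome_rates(donated, "group", "shown_premium_price")
print(rates)  # {'A': 0.25, 'B': 0.75}
```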

Scraping Audit [9]

In a scraping audit, researchers use programs to issue repeated queries to a platform and record the results. The data is collected through the platform's API or by scraping its web pages. This is an effective way to gather large quantities of data for analysis, but it still faces the problem of securing a random sample.

Another difficulty with this method is the risk of prosecution under the Computer Fraud and Abuse Act (CFAA). The law has been criticized as too vague because it broadly criminalizes unauthorized access to a computer [10]. Obtaining a platform operator's consent to perform a scraping audit is difficult.

Similarly, a website's Terms of Service may not have the force of law. Even so, some scholarly institutions hold that Internet researchers must not violate platforms' terms, which makes results from this form of research difficult to publish without proper permission.
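The repeated-query procedure can be sketched as follows. `query_platform` is a hypothetical stand-in for a real request; in an actual audit it would call an API or fetch and parse a page, with the legal constraints above in mind. Here it simulates a ranking with a built-in quirk (one item always placed first) so that the aggregation has something to find. Repeating queries averages out ranking noise, but it does not make the chosen query terms a representative sample.

```python
import random
from collections import defaultdict
from statistics import mean

RESULT_IDS = ["item_a", "item_b", "item_c", "item_d"]


def query_platform(term: str, rng: random.Random) -> list:
    """Hypothetical stand-in for one search request to the audited platform.
    Simulated ranking: item_c is always first, the rest are shuffled.
    `term` is unused by this simulation."""
    others = [r for r in RESULT_IDS if r != "item_c"]
    rng.shuffle(others)
    return ["item_c"] + others


def scrape_average_ranks(terms, repeats: int, seed: int = 0) -> dict:
    """Issue every query `repeats` times; return each result's mean rank (1 = top)."""
    rng = random.Random(seed)
    ranks = defaultdict(list)
    for term in terms:
        for _ in range(repeats):
            for position, item in enumerate(query_platform(term, rng), start=1):
                ranks[item].append(position)
    return {item: mean(positions) for item, positions in ranks.items()}


avg = scrape_average_ranks(["flights to denver", "flights to boston"], repeats=50)
for item, rank in sorted(avg.items(), key=lambda kv: kv[1]):
    print(f"{item}: mean rank {rank:.2f}")
```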

Sock Puppet Audit [9]

A sock puppet audit uses computer programs to impersonate users through false accounts. This method is effective for investigating sensitive topics in hard-to-access domains. Sock puppets can probe features of systems that are not public and reach groups that are otherwise difficult to identify. A large number of sock puppets is required to derive significant findings from the audit. Because the method relies on false accounts, it remains susceptible to CFAA violations, exposing the researchers conducting the study to legal risk.
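A minimal sketch of a paired sock puppet design, assuming the audit sends matched fake accounts that differ in one attribute through the same request and compares what each is shown. The `SockPuppet` class, the device attribute, and the simulated `quoted_price` response (base price, random noise, and a +10 surcharge) are all hypothetical. In a real audit, each call would be an interaction with the live platform.

```python
import random
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class SockPuppet:
    """A scripted fake account; only `device` differs between the two arms."""
    puppet_id: int
    device: str  # "mac" or "pc" (hypothetical attribute under test)


def quoted_price(puppet: SockPuppet, rng: random.Random) -> float:
    """Hypothetical stand-in for the platform's response to one puppet:
    a noisy base price plus a simulated +10 surcharge for one device type."""
    base = 100 + rng.gauss(0, 5)
    return base + (10 if puppet.device == "mac" else 0)


def run_paired_audit(n_pairs: int, seed: int = 1) -> float:
    """Send matched puppet pairs through the same request; return the mean price gap."""
    rng = random.Random(seed)
    gaps = []
    for i in range(n_pairs):
        mac = SockPuppet(2 * i, "mac")
        pc = SockPuppet(2 * i + 1, "pc")
        gaps.append(quoted_price(mac, rng) - quoted_price(pc, rng))
    return mean(gaps)


print(round(run_paired_audit(3), 1), round(run_paired_audit(500), 1))
```

With only a handful of pairs, the random noise can swamp the simulated surcharge. With hundreds of pairs, the estimated gap settles near the true value, which is why a large number of sock puppets is needed.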

Collaborative or Crowdsourced Audit [9]

A crowdsourced audit is similar to a sock puppet audit, but it employs real people instead of computer programs imitating users. This change avoids CFAA problems in most cases, as long as the platform being audited allows anyone to create an account. This form of audit can combine the strengths of the four designs described above. With a large enough pool of testers and semi-automated tactics, obtaining a random, representative sample becomes more feasible. Amazon's Mechanical Turk, for example, makes it possible to recruit a group of testers large enough to produce significant results. The main drawback is that a crowdsourced audit requires a large budget to pay the participants.

Audits in Practice

The purpose of algorithmic auditing is to ensure that a given piece of software does not violate the legal or moral codes that exist to protect people who use the internet. Only a handful of historical and modern cases of transparent algorithm audits exist.

In 1984, the U.S. Civil Aeronautics Board (CAB) declared that the workings of SABRE's airline sorting algorithm must be made known to participating airlines. This requirement fell under the Display of Information provision of the Code of Federal Regulations (14 CFR 255.4). It resulted from a bias that airlines using SABRE's software identified, known as "screen science": ranking flights according to private criteria and financial incentives. The airlines noticed that American Airlines, which owned SABRE, received priority in SABRE's search results. This led to an investigation by the CAB and the Department of Justice (DOJ).

One case that an algorithm audit might have caught earlier is the Volkswagen emissions scandal, which came to light in 2015 [2]. Wagner presents it as a prime example of why a standardized, scalable system for governing algorithms is necessary. Wagner also argues that technology is certainly not a neutral player in modern social infrastructure, and that regulation will have to improve to avoid harm to society.

Ethical Implications

Because of the scale of technology companies, regulation cannot keep pace, and what exists consists mostly of general guidelines and laws for digital interactions. As the infrastructure continues to grow, new ways to exploit the system keep emerging. As a result, algorithmic audits have become one of the most practical ways to assess the ethical impact of digital platforms and providers on their consumers and users. Audits offer direct ways to check digital platforms and have prompted protections for auditors. However, these methods are difficult to employ and fall short in many areas of regulation. [11]

Research Protections

Researchers who audit a platform or provider can face legal action, both from the companies they study and from prosecutors enforcing the CFAA. An important protection came not from new legislation but from a court ruling. In the 2018 case Sandvig et al. v. Sessions, four university researchers who planned to scrape data to study discrimination on online housing websites challenged the CFAA against the U.S. Attorney General.

Sandvig et al. v. Sessions[12]

In March 2018, the U.S. District Court for the District of Columbia ruled that such research activities should not be viewed as criminal under the statute. The court declined to decide whether the researchers' conduct would be protected under the First Amendment. U.S. District Judge John Bates wrote in his opinion: "Without reaching this constitutional question, the Court concludes that the CFAA does not criminalize mere terms-of-service violations on consumer websites and, thus, that plaintiffs' proposed research plans are not criminal under the CFAA."[13]

Privacy Policies & Anonymity

Any audit that requires interacting with a platform carries risk, because technology companies' policies restrict how users may engage with their services. Researchers can be flagged for violating these policies and banned from the platform. Detection can halt an audit of a large tech company and can lead to lawsuits and other complications when the research is published. Large companies monitor data traffic and can identify a user from their access point or device metadata, which makes many audit types hard even to attempt.

Future of Algorithmic Audits

Jack Bandy's systematic literature review examines past algorithm audits in order to chart the field's future direction. Bandy outlines four types of problematic behavior revealed by the audits studied: discrimination, distortion, exploitation, and misjudgment. Discrimination has been the most frequent target of investigation. Bandy notes that very few audits examined the exploitation of personal information, even though many scholars have voiced concern about it. Despite the substantial number of studies reviewed (N = 62), Bandy concludes that there is considerable room for improvement.

Drawing on these prior audits, Bandy concludes that research into every type of problematic behavior could benefit from greater specificity. For example, audits of discriminatory pricing focused heavily on detecting the practice but did little beyond that. Bandy suggests that each distinct way an algorithm can misbehave deserves its own line of research.

The review also makes recommendations on methodology. Bandy points to a skew in how many audits target each influential company and argues that audits should be distributed more evenly. Google was audited most often, with 30 audits, while similarly powerful companies such as Twitter and Spotify underwent only 3. Finally, Bandy emphasizes replication. Because algorithms can change frequently and drastically, open science practices are vital to future auditing results [14].
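Because algorithms change often, a replication needs some way to measure how much results have shifted between runs. One common choice is the Jaccard similarity of the two result sets: the size of their intersection divided by the size of their union. It is used here only as an illustration and is not a method taken from Bandy's review. The result lists below are hypothetical.

```python
def jaccard(run_a, run_b) -> float:
    """Jaccard similarity of two result lists: |A & B| / |A | B| (order ignored)."""
    a, b = set(run_a), set(run_b)
    union = a | b
    return len(a & b) / len(union) if union else 1.0


# Hypothetical top-5 results for the same query, collected in two audit runs.
original_run = ["n1", "n2", "n3", "n4", "n5"]
replication = ["n1", "n3", "n6", "n7", "n2"]

print(round(jaccard(original_run, replication), 3))  # 0.429
```

Jaccard similarity ignores ordering. Where rank position matters, as in search audits, a rank-aware measure would be the more appropriate choice.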

References

1. Brown, S., Davidovic, J., & Hasan, A. (2021). The algorithm audit: Scoring the algorithms that score us. Big Data & Society, 8. SAGE Publishing.

2. Wagner, B. (2016). Algorithmic regulation and the global default: Shifting norms in Internet technology. Etikk i Praksis - Nordic Journal of Applied Ethics, 10(1), 5-13. https://doi.org/10.5324/eip.v10i1.1961

3. Perrin, A., & Atske, S. (2021, March 26). About three-in-ten U.S. adults say they are 'almost constantly' online. Pew Research Center. www.pewresearch.org/fact-tank/2021/03/26/about-three-in-ten-u-s-adults-say-they-are-almost-constantly-online/

4. Sandvig, C., et al. (2014). An algorithm audit. In Data and Discrimination: Collected Essays (pp. 6-10). Washington, DC: New America Foundation.

5. Kotras, B. (2020). Mass personalization: Predictive marketing algorithms and the reshaping of consumer knowledge. Big Data & Society, 7(2), 2053951720951581.

6. Goldsmith, J. (2007). Who controls the Internet? Illusions of a borderless world. Strategic Direction, 23(11). https://doi.org/10.1108/sd.2007.05623kae.001

7. U.S. Department of Education, National Center for Education Statistics. Higher Education General Information Survey (HEGIS), "Degrees and Other Formal Awards Conferred" surveys, 1970-71 through 1985-86; Integrated Postsecondary Education Data System (IPEDS), "Completions Survey" (IPEDS-C:87-99); and IPEDS Fall 2000 through Fall 2011. https://nces.ed.gov/programs/digest/d12/tables/dt12_349.asp

8. Copeland, D. G., Mason, R. O., & McKenney, J. L. (1995). Sabre: The development of information-based competence and execution of information-based competition. IEEE Annals of the History of Computing, 17(3), 30-57.

9. Sandvig, C., et al. (2014). Auditing algorithms: Research methods for detecting discrimination on internet platforms. Data and Discrimination: Converting Critical Concerns into Productive Inquiry, 22, 4349-4357. pp. 9-15.

10. Whitehouse, S. (2016). Hacking into the Computer Fraud and Abuse Act: The CFAA at 30. The George Washington Law Review, 84(6), 1437.

11. O'Neil, C. (2016). Weapons of math destruction: How big data increases inequality and threatens democracy. Crown.

12. Lee, A. (2019). Online research and competition under the CFAA. Available at SSRN 3259701.

13. Sandvig v. Sessions, No. 1:16-cv-01368 (D.D.C.), memorandum opinion. https://cases.justia.com/federal/district-courts/district-of-columbia/dcdce/1:2016cv01368/180080/24/0.pdf?ts=1522488415

14. Bandy, J. (2021). Problematic machine behavior: A systematic literature review of algorithm audits.