Generative Media

Generative media (also known as AI-generated media,[1][2] synthetic media,[3] and personalized media[4]) is an umbrella term for art, photographs, music, literature, and any other forms of creative communication that are generated algorithmically and autonomously. Such media is constructed with complex systems of mathematical formulas that can magnify or mimic human ingenuity. Generative media is also called "organic media" because it is created either with mathematical tools or autonomously (i.e., without human intervention).[3] Producing creative material usually takes a significant amount of time; generative media streamlines the process by drastically shortening creation time while enhancing precision. The term “synthetic media” denotes artificially generated or manipulated media, such as synthesized audio, virtual reality, and advanced digital image creation, which today can be highly believable and “true to life.”[5] However, generative media's integration of technology with art raises new ethical issues concerning technological manipulation as well as the copyright, ownership, and authorship of the media.

History

The ancient Greeks used algorithms and mathematical proportions to generate art. The Greek sculptor Polykleitos, who lived in the 5th century BCE, fixed proportions based on the ratio 1:√2 to construct what was thought to embody a physical god (the epitome of the human physique).[6] The notion of autonomously generating media was also popular among Greek inventors such as the legendary Daedalus and Hero of Alexandria, who designed machines capable of generating media without human intervention.[7]

In the eighth century CE, the Islamic world began using complex geometric patterns to avoid figurative images becoming objects of worship. A recurring pattern is the 8-pointed star, often seen in Islamic tilework; it is made of two squares, one rotated 45 degrees with respect to the other. Another basic shape is the polygon, including pentagons and octagons. All of these can be combined and repeated to generate a wide range of geometric symmetries built from transformations such as shears, shifts, reflections, and rotations.[8]
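
The two-square construction of the 8-pointed star can be illustrated with a single rotation, one of the transformations named above. The Python sketch below is a minimal illustration under our own assumptions (a square centered on the origin, and hypothetical helper names `rotate`, `square`, and `star_vertices`); it is not taken from the cited source. It rotates a copy of the square by 45 degrees and collects the corners of both squares, which turn out to be eight points evenly spaced around a circle.

```python
import math

def rotate(point, angle_deg):
    """Rotate a 2-D point counter-clockwise about the origin by angle_deg degrees."""
    theta = math.radians(angle_deg)
    x, y = point
    return (x * math.cos(theta) - y * math.sin(theta),
            x * math.sin(theta) + y * math.cos(theta))

def square(half_side=1.0):
    """Corners of an axis-aligned square centered on the origin."""
    s = half_side
    return [(s, s), (-s, s), (-s, -s), (s, -s)]

def star_vertices(half_side=1.0):
    """Outer points of an 8-pointed star: a square plus a copy rotated by 45 degrees."""
    base = square(half_side)
    turned = [rotate(p, 45) for p in base]
    # Sort by angle around the origin so the points can be traced in order.
    return sorted(base + turned, key=lambda p: math.atan2(p[1], p[0]) % (2 * math.pi))

points = star_vertices()
```

Because consecutive points are 45 degrees apart, rotating the finished star by another 45 degrees maps it onto itself, which is the star's eightfold rotational symmetry.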

During the Renaissance, Italian artists used mathematical perspective to compose their paintings. In 1415, the Italian architect Filippo Brunelleschi demonstrated geometric linear perspective in Florence, using the geometry formulated by the Greek mathematician Euclid to determine the apparent dimensions of distant objects.[9]
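
Linear perspective rests on similar triangles: an object's image on the picture plane shrinks in proportion to its distance from the viewer. The Python sketch below uses a modern, simplified pinhole-style model as an assumption for illustration; it is not a reconstruction of Brunelleschi's own procedure, and the function names and units are hypothetical.

```python
def project(point, plane_distance):
    """Project a 3-D point (x, y, z) onto a picture plane plane_distance in front of the eye.

    By similar triangles, x' / plane_distance = x / z, so x' = plane_distance * x / z.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("the point must lie in front of the viewer (z > 0)")
    return (plane_distance * x / z, plane_distance * y / z)

def apparent_height(true_height, distance, plane_distance):
    """Height of an upright object's image on the picture plane."""
    return true_height * plane_distance / distance

# Three identical columns, 3 units tall, at increasing distances; picture plane 1 unit away.
heights = [apparent_height(3.0, z, 1.0) for z in (10.0, 20.0, 40.0)]
far_point = project((1.0, -1.5, 1e9), 1.0)  # a point very far down a receding line
```

Doubling an object's distance halves its drawn size, and points receding along lines parallel to the line of sight converge toward a single vanishing point (here, the origin of the picture plane).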

Digital Age

Desmond Paul Henry was a pioneer of generative media: his "Drawing Machine 1", an analogue machine built from a bombsight computer and exhibited in 1962, produced some of the first works of art created by a machine. The machine could create complex, abstract, asymmetrical, curvilinear, but repetitive line drawings.[10] In 2014, computer scientist Ian Goodfellow invented a new class of machine learning model, the generative adversarial network (GAN), which significantly expanded computers' creative capabilities. A GAN pits two neural networks against each other: a generator that produces candidate media and a discriminator that tries to tell those candidates apart from real training examples. Through this competition, the system learns from training data to mimic the work of established human creators. Today, GAN-based models pretrained on large collections of video can generate a seemingly authentic video of a person from as little as a single photograph. Generative media is expanding rapidly and has given rise to several distinct subsets.
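
For reference, the original GAN formulation (Goodfellow et al., 2014) can be written as a two-player minimax game. A generator G turns random noise z into a candidate sample, and a discriminator D outputs the probability that its input came from the real training data rather than from G:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

D is trained to push V up by labeling real and generated samples correctly, while G is trained to push V down by producing samples that D mistakes for real ones. In the idealized case where both networks have unlimited capacity, the game reaches equilibrium when the generator's distribution matches the data distribution and D outputs 1/2 everywhere; practical training only approximates this.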

Generative Modeling Techniques

There are many different ways to produce generative media, but most rely on statistical modeling methods that use probability analysis to “approximate complicated, high-dimensional probability distributions using a large number of samples.”[11] Most methods combine generative and discriminative approaches to carry out this approximation.[12]
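
The pattern of approximating a distribution from samples and then drawing new samples from that approximation can be shown in its simplest possible form. The Python sketch below is a deliberately tiny stand-in, our own assumption rather than a method from the cited sources: it fits a one-dimensional Gaussian to training data by maximum likelihood and then generates new synthetic values. Deep generative models replace the Gaussian with a neural network that can represent far more complicated, high-dimensional distributions, but the fit-then-sample pattern is the same. The data, parameter values, and function names are hypothetical.

```python
import random
import statistics

def fit_gaussian(samples):
    """Maximum-likelihood estimates of a 1-D Gaussian's mean and standard deviation."""
    mu = statistics.fmean(samples)
    sigma = statistics.pstdev(samples, mu)  # the population std. dev. is the ML estimate
    return mu, sigma

def generate_samples(mu, sigma, n, rng):
    """Draw n new, synthetic values from the fitted model."""
    return [rng.gauss(mu, sigma) for _ in range(n)]

rng = random.Random(0)
training_data = [rng.gauss(170.0, 8.0) for _ in range(10_000)]  # stand-in for "real" data
mu, sigma = fit_gaussian(training_data)
synthetic = generate_samples(mu, sigma, 5, rng)
```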

The most common methods are autoregressive models, variational autoencoders (VAEs), and generative adversarial networks (GANs).[11] Of the three, GANs are the most widely used in generative media, and their popularity has risen sharply in recent years thanks to their widely shared results.[13] The GAN architecture has been modified many times over the years to make it easier to train, to generate different types of media, and to suit various applications. The most notable of these modifications are conditional GANs (cGANs), StyleGAN, DCGAN, and Pix2Pix.[13]
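
An autoregressive model generates media one piece at a time, with each new piece conditioned on what it has already produced. The Python sketch below shows the idea with a character-level bigram model, one of the simplest autoregressive models: it conditions only on the single previous character, whereas modern autoregressive models use deep networks that condition on long contexts. The toy corpus and function names are hypothetical.

```python
import random
from collections import Counter, defaultdict

def train_bigram(text):
    """Count how often each character follows each other character."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts

def sample_next(counts, prev, rng):
    """Sample the next character in proportion to how often it followed prev."""
    followers = counts[prev]
    chars = list(followers)
    weights = [followers[c] for c in chars]
    return rng.choices(chars, weights=weights, k=1)[0]

def generate_text(counts, start, length, rng):
    """Autoregressive generation: each new character is conditioned on the previous output."""
    out = [start]
    while len(out) < length:
        if not counts[out[-1]]:  # dead end: this character was never followed by anything
            break
        out.append(sample_next(counts, out[-1], rng))
    return "".join(out)

corpus = "the cat sat on the mat. the dog sat on the log."
model = train_bigram(corpus)
sample = generate_text(model, "t", 40, random.Random(42))
```

Every character pair in the output also occurs somewhere in the corpus, which is why the text looks locally plausible but globally incoherent; longer contexts are what let larger models produce coherent media.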

Due to the success of deep learning, most of these algorithms now integrate deep neural networks with many hidden layers. There is currently a trend toward ever deeper networks, since scaling up existing networks has tended to produce better results.[14]

Recently, generative modeling has seen another paradigm shift through the use of transformers. Transformers are a type of neural network architecture that originated in natural language processing but is increasingly applied in computer vision and generative modeling.[15] Prime examples are OpenAI’s CLIP and DALL-E, both of which use transformer architectures. DALL-E is based on GPT-3, a large transformer language model trained on a vast collection of internet text: “DALL-E is a 12-billion parameter version of GPT-3 trained to generate images from text descriptions, using a dataset of text–image pairs. We’ve found that it has a diverse set of capabilities, including creating anthropomorphized versions of animals and objects, combining unrelated concepts in plausible ways, rendering text, and applying transformations to existing images.”[16]
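
The core operation inside a transformer is attention, in the scaled dot-product form introduced by Vaswani et al. (2017): each query vector is compared with every key vector, the scaled similarity scores are turned into weights with a softmax, and the output is the corresponding weighted average of the value vectors. The pure-Python sketch below shows a single attention head without the learned projection matrices, masking, or multiple heads that real models use; it is an illustration, not OpenAI's implementation, and the example vectors are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1 (shifted by the max for stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, one query row at a time."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

keys = [[10.0, 0.0], [0.0, 10.0]]
values = [[1.0, 2.0], [3.0, 4.0]]
blended = attention([[0.0, 0.0]], keys, values)  # query matches neither key: plain average
focused = attention([[1.0, 0.0]], keys, values)  # query aligns with the first key
```

A query that resembles no key in particular returns an even blend of the values, while a query that aligns with one key returns almost exactly that key's value; this soft, content-based lookup is what lets transformers relate distant words, or words and image regions.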

Subsets of Generative Media

Generative Art

Generative art refers to art that is generated algorithmically and autonomously, a practice that has expanded greatly with advances in machine learning and neural networks. These algorithmic systems can be trained in a wide range of contexts. For example, an artist might feed such a system data, such as Leonardo da Vinci’s entire catalog of paintings, while fine-tuning the neural network to make the decisions the artist deems satisfactory, and then have it generate a painting to the given specifications. The line that once separated fabrication from originality is blurred in contemporary pieces such as The Next Rembrandt. In 2016, a new Rembrandt painting was designed by a computer and produced by a 3D printer 347 years after the painter’s death.[18] Microsoft collaborated with other companies to create the artwork.[19] The algorithms behind The Next Rembrandt created a data set of topographical maps representing brushstrokes and layers of paint, which were used to reproduce the painting's lifelike depth and texture.[19]

Generative Poetry

Generative poetry is the product of algorithms that attempt to mimic the meaning, phrasing, structure, and rhyme of poetry. Algorithmic poetry generation has succeeded in a number of cases. Zackary Scholl of Duke University, for example, created generative poetry that was received positively and that readers could not reliably distinguish from poetry written by humans.[21] Other engineers have developed systems that use case-based reasoning to generate poetic reformulations of a given input text by composing fragments retrieved from a case base of existing poems. The fragments are then arranged according to metrical rules, which govern what counts as a well-formed poem. An earlier example of computer-generated poetry is Racter, a program whose prose and poetry were published in 1984 as The Policeman’s Beard Is Half Constructed.[20]
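
The retrieve-then-constrain pipeline of case-based poetry generation can be sketched in a few lines. In the Python below, everything is an illustrative assumption rather than a reconstruction of any published system: the case base is a handful of made-up fragments, retrieval ranks fragments by word overlap with the input, and the only "metrical rule" is a target syllable count estimated by a crude vowel-group heuristic. Real systems use richer similarity measures and model stress patterns, not just syllable counts.

```python
import re

# A toy case base of poetic fragments (made-up examples, not a real corpus).
CASE_BASE = [
    "the moon is bright on the sea",
    "a cold wind on the hill",
    "the sea will hold its storm",
    "and morning light on open water",
    "the moon and the sea at night",
]

def syllables(word):
    """Crude syllable estimate: count runs of consecutive vowels (y included)."""
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))

def line_syllables(line):
    return sum(syllables(w) for w in line.split())

def retrieve(query, case_base, k):
    """Rank fragments by how many distinct words they share with the input text."""
    query_words = set(query.lower().split())
    ranked = sorted(case_base, key=lambda f: len(query_words & set(f.split())), reverse=True)
    return ranked[:k]

def compose(query, case_base, target_syllables, k=3):
    """Keep only retrieved fragments that satisfy the metrical rule (a fixed syllable count)."""
    return [f for f in retrieve(query, case_base, k) if line_syllables(f) == target_syllables]

poem = compose("the moon over the sea", CASE_BASE, target_syllables=7)
```

Here retrieval surfaces three fragments about the moon and the sea, and the metrical filter discards the six-syllable one, leaving two seven-syllable lines.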

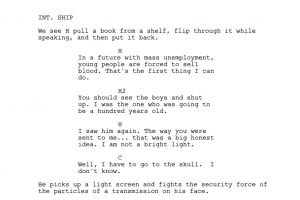

Generative Screenplay

Generative media can also take the form of generative filmmaking, in which neural networks analyze, write, and generate films and screenplays. These machine learning models learn from existing text and film in order to produce new text and film. They consider not only the plot, characters, and dialogue, but also the behavioral cues of characters and the background elements (e.g., environment and music) associated with scenes.[23] This allows them to analyze the emotional content of films and gain insight into which types of scenes elicit which feelings in an audience.

In 2016, Sunspring, a science fiction short film written entirely by an artificial intelligence bot named Benjamin, was created for the Sci-Fi London film festival’s 48-hour challenge.[24] Director Oscar Sharp and NYU AI researcher Ross Goodwin developed the original idea. Benjamin learned to mimic the structure of films by using a type of recurrent neural network called long short-term memory (LSTM) to analyze dozens of science fiction subtitle files and film scripts.[24][25] After being fed seeds of information (mostly props and lines that had to appear in the film under the contest guidelines), Benjamin produced stage directions and well-formatted character lines.[24] However, the AI had difficulty generating character names, which are highly unpredictable and unlike other words, so Goodwin changed the names of all the characters to letters (H, H2, and C).[24] After learning from a collection of 30,000 pop songs, Benjamin also composed a pop song that served as a musical interlude in the film.[24] Sharp and Goodwin edited the script for length but preserved the content Benjamin created.
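
A long short-term memory (LSTM) network processes a sequence one step at a time and carries a "cell state" forward, using gates to decide what to forget, what to write, and what to output. That memory is what lets such a model keep track of earlier context while generating later text. The Python sketch below is a toy, single-unit LSTM step with hand-picked, hypothetical weights; a practical LSTM, including the one behind Sunspring, uses vectors, weight matrices, and trained parameters. It shows how a nearly open forget gate and a nearly closed input gate preserve a stored value across several unrelated inputs.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x, h_prev, c_prev, params):
    """One time step of a single-unit (scalar) LSTM cell.

    params maps each gate name to a hypothetical (input weight, recurrent weight, bias).
    Returns the new hidden state h and cell state c.
    """
    def gate(name, activation):
        w, u, b = params[name]
        return activation(w * x + u * h_prev + b)

    i = gate("input", sigmoid)        # how much new information to write
    f = gate("forget", sigmoid)       # how much of the old cell state to keep
    o = gate("output", sigmoid)       # how much of the cell state to expose
    g = gate("candidate", math.tanh)  # the new information itself
    c = f * c_prev + i * g            # updated long-term memory
    h = o * math.tanh(c)              # new hidden state (the cell's output)
    return h, c

# Hand-picked weights: forget gate almost fully open, input gate almost fully shut.
remember = {
    "input": (0.0, 0.0, -10.0),
    "forget": (0.0, 0.0, 10.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (1.0, 0.0, 0.0),
}
h, c = 0.0, 1.0              # start with the value 1.0 stored in memory
for x in [5.0, -3.0, 2.0]:   # feed in unrelated inputs
    h, c = lstm_step(x, h, c, remember)
```

After three steps the cell state is still very close to 1.0. In a trained network, the gate weights are learned so that the cell holds on to useful context and overwrites it when it stops being relevant.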

Deepfakes

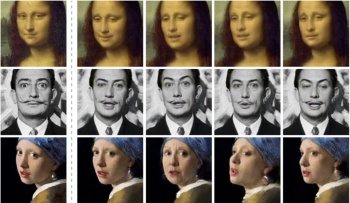

[Wikipedia: Deepfake | Deepfakes], the subset of generative media that raises the most concern today,[27] emerged in late 2017, when artificial intelligence algorithms were used to insert famous actresses' faces into adult videos.[27] Deepfakes often use GANs and have been the fastest-evolving subset of generative media, primarily because their building blocks (the computer source code) are openly and freely available. One well-known example of this public code being put to use is a deepfake impersonating Barack Obama, the 44th president of the United States, that portrayed him speaking out of character. [Wikipedia: Jordan Peele | Jordan Peele], the comedian behind the demonstration, quickly revealed himself and explained the danger of such technology in the hands of questionable individuals. Deepfake algorithms continue to progress quickly: some recent systems can generate fabricated video from a single picture of a person, whereas early systems required hundreds of pictures.[28]

Poor-quality deepfakes can often be identified quickly by their flaws: poor skin texture mapping, misaligned audio, flickering transposed faces, and unnatural hair movement, which is particularly difficult for computers to render. Computers can also be used to examine video content and spot deepfakes.[29] In a 2018 study aimed at exposing AI-generated fake faces by analyzing eye blinking, researchers Yuezun Li, Ming-Ching Chang, and Siwei Lyu created a method to detect abnormal blinking patterns.[30] Although their results were promising, developers soon introduced higher-quality deepfakes with improved blinking that could pass this kind of detection. In a 2020 paper, Mittal et al. described a deep learning network that uses emotional cues to identify deepfakes in video content; the method uses audio and video from real and fake videos to establish a baseline for the emotions expressed through faces and speech. Tested against two large deepfake detection datasets, it achieved an AUC (area under the ROC curve) of 84.4% on the DFDC dataset and 96.6% on the DF-TIMIT dataset.[31] Because algorithms are used both to create deepfakes and to detect them, every advance in detection is likely to be met by deepfakes that evade it. For this reason, some major platforms have turned to outright bans; Facebook, for example, banned deepfake videos in the run-up to the 2020 US election.[32]
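
The intuition behind blink-based detection is that early deepfakes blinked far less often than real people, because the face images they were trained on rarely show closed eyes. The Python sketch below is a deliberately simple heuristic, not the neural-network method Li et al. actually used. It assumes that some upstream face-tracking step (hypothetical, not shown) has already reduced each video frame to a single "eye openness" number; it counts blinks as short runs of low values and flags clips whose blink rate falls below a cutoff. The threshold values and the synthetic clips are illustrative assumptions.

```python
def count_blinks(openness, threshold=0.2, min_frames=2):
    """Count runs of at least min_frames consecutive frames where the eye looks closed."""
    blinks = 0
    run = 0  # length of the current stretch of "closed" frames
    for value in openness:
        if value < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # the clip ended mid-blink
        blinks += 1
    return blinks

def blinks_per_minute(openness, fps, **kwargs):
    minutes = len(openness) / fps / 60
    return count_blinks(openness, **kwargs) / minutes

def looks_suspicious(openness, fps, min_rate=5.0, **kwargs):
    """Flag a clip whose blink rate is implausibly low (min_rate is a hypothetical cutoff)."""
    return blinks_per_minute(openness, fps, **kwargs) < min_rate

# Synthetic one-minute clips at 30 frames per second (made-up data).
fps = 30
real_clip = [0.3] * (60 * fps)           # eyes open...
for start in range(45, len(real_clip), 90):
    for i in range(start, start + 3):    # ...with a 3-frame blink every 3 seconds
        real_clip[i] = 0.1
fake_clip = [0.3] * (60 * fps)           # eyes never close
```

A rule this simple is exactly what better deepfakes learned to defeat: once generated faces blink at a natural rate, the signal disappears, which illustrates the arms race described above.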

Ethical Implications of Deepfakes

While deepfakes can offer benefits in assistive technology and education, they can also “challenge aural and visual authenticity and enable the production of disinformation by bad actors” [33]. This ability to change the audio and visual elements of videos or recordings has the potential to “destabilize” areas such as news reporting, a domain where audio and video are treated as evidence [33]. Because the technology is so powerful, deepfakes can be thought of as a new and unique form of visual disinformation [34]. Research has found that people correctly identify deepfakes in only about half of presented cases, a rate statistically equivalent to random guessing [34]. Because deepfakes can depict people so convincingly, they could undermine fields that rely on video and audio as evidence and could place celebrities or public figures in fabricated, compromising situations. Combining voice mimicry with face swapping has the potential to spread misinformation on a very large scale [35]. As a result, people may believe they are taking in information from sources they trust, such as certain reporters or political figures, when in reality they are watching or listening to an impersonation.
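
Why is "about half" equivalent to guessing? If viewers had no ability to spot deepfakes, each judgment would be a coin flip, and the number of correct answers would follow a binomial distribution with success probability 0.5. The Python sketch below computes an exact two-sided binomial test; the counts in the example are hypothetical and are not taken from the cited study.

```python
from math import comb

def chance_p_value(correct, trials):
    """Exact two-sided binomial test against pure guessing (success probability 0.5).

    The null distribution is symmetric, so the p-value is twice the probability of a
    result at least as far from trials / 2 as the one observed, capped at 1.
    """
    extreme = max(correct, trials - correct)
    upper_tail = sum(comb(trials, i) for i in range(extreme, trials + 1)) / 2 ** trials
    return min(1.0, 2 * upper_tail)

# Hypothetical counts: 52 of 100 judgments correct versus 70 of 100 correct.
near_chance = chance_p_value(52, 100)
well_above_chance = chance_p_value(70, 100)
```

With 100 judgments, 52 correct gives a p-value far above the conventional 0.05 cutoff, so the result cannot be distinguished from coin-flipping, whereas 70 correct gives a p-value far below 0.001.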

As deepfakes become more popular through widely available apps, they raise a number of legal, social, and ethical questions [36]. Deep fakes enable the creation of an alternative reality “where celebrities and public leaders say and do things they never did, thus deceiving an unaware public. This makes a saying like ‘seeing is believing’ no longer valid” [36]. The technology also raises concerns about harm to vulnerable groups such as women and children [35]. Deepfake videos have deprived people of consent and control over how they are represented in the public eye [35].

Solutions to the ethical implications of harmful deepfakes have been proposed. The fact that this technology can be used for “socially harmful purposes has prompted governments and market players to develop deep fake detection technologies” [36]. This is a difficult task given how accurately the technology can represent individuals. As detection technologies are deployed, deepfake technology may become more sophisticated in response, forcing detection technology to advance as well [36]. As a result, detection technology may never become sophisticated enough to catch the evolving harmful uses of deepfakes [36].

Ethical Dilemmas

Manipulation and credit/copyright issues are some of the ethical dilemmas surrounding generative media.[5]

Manipulation

Several studies have examined, and continue to examine, how humans perceive generative media and how they feel about algorithms in the world of fine arts and literature. One such study, comparing American and Chinese participants, is reported in the academic paper "Investigating American and Chinese Subjects’ explicit and implicit perceptions of AI-Generated artistic work".[37] By gauging public opinion of artificial intelligence in two of the world's leading technology nations, the paper gives a sense of how much attitudes vary from one society to the next. The study reports that in China, the risks and dangers of fully autonomous systems have not been thoroughly articulated and debated, and that most Chinese citizens therefore welcome and celebrate the technology, in sharp contrast to Western nations, which are more cautious about its research and development. Because the government heavily influences public opinion in China, generative media could become an efficient outlet for misleading and misinforming the public. Societies that show blind trust in novel technology may overlook the dangerous capabilities of manipulated media.[38]

Credit, Copyright, & Ownership

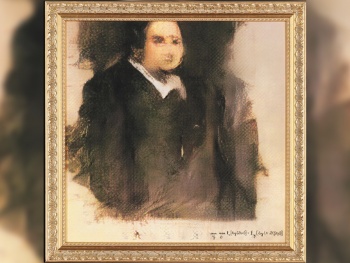

The rise of generative media has raised new ethical concerns about how ownership and copyright of the media are shared among human collaborators. In 2018, the prestigious auction house Christie's New York sold Edmond de Belamy, widely described as the first work of art generated by artificial intelligence to be sold at a major auction house, for $432,500, far above its pre-sale estimate of up to $10,000.[39] To generate the piece, its creators, the Paris-based collective Obvious, trained their algorithms extensively on a large collection of paintings from the WikiArt database so that they would learn to paint like a master. However, Robbie Barrat, the artist and programmer who wrote the code used to generate the Renaissance-style images, was not among those compensated for the sale. The relationships formed when generating a synthetic piece of art can become so complex that each party's share of involvement is obscured, which can lead to the reward being split unfairly and unethically.[40]

There have also been copyright concerns surrounding AI’s potential authorship of media. In Sunspring, the AI bot Benjamin is credited as both an author of and a tool for the film.[41] Some argue that to claim authorship or ownership of media, a party must have influenced the creative process that produced it.[42] The filmmakers who collaborated with Benjamin hold mixed opinions about its creative abilities; those who oppose crediting Benjamin as an author argue that because its screenplay is based on its ability to imitate the screenplays it had been fed, it did not create genuinely original media.[25]

References

- [1] Goodstein, Anastasia. "Will AI Replace Human Creativity?" https://www.adlibbing.org/2019/10/07/will-ai-replace-human-creativity/ (30 January 2020)

- [2] Waddell, Kaveh. "Welcome to our new synthetic realities." Axios. https://www.axios.com/synthetic-realities-fiction-stories-fact-misinformation-ed86ce3b-f1a5-4e7b-ba86-f87a918d962e.html (30 January 2020)

- [3] Lastovich, Tyler. "Why Now Is The Time to Be a Maker in Generative Media." ProductHunt, n.d. https://www.producthunt.com/stories/why-now-is-the-time-to-be-a-maker-in-generative-media

- [4] Ignatidou, Sophia. "AI-driven Personalization in Digital Media: Political and Societal Implications." International Security Department. https://www.chathamhouse.org/sites/default/files/021219%20AI-driven%20Personalization%20in%20Digital%20Media%20final%20WEB.pdf (30 January 2020)

- [5] Daniele, Antonio, & Song, Yi-Zhe. "AI+Art = Human." AAAI/ACM Conference on AI, Ethics, and Society (2019). https://dl.acm.org/doi/10.1145/3306618.3314233

- [6] Stewart, Andrew. "One Hundred Greek Sculptors: Their Careers and Extant Works." Journal of Hellenic Studies (November 1978).

- [7] Brett, Gerard. "The Automata in the Byzantine 'Throne of Solomon'." Speculum, 29 (3): 477–487 (July 1954). doi:10.2307/2846790, ISSN 0038-7134, JSTOR 2846790.

- [8] Bouaissa, Malikka. "The crucial role of geometry in Islamic art." Al Arte Magazine. http://www.alartemag.be/en/en-art/the-crucial-role-of-geometry-in-islamic-art/ (1 December 2015) (27 July 2013)

- [9] Vasari, Giorgio. Lives of the Artists. Torrentino (1550). Chapter on Brunelleschi.

- [10] Beddard, Honor. "Computer art at the V&A." Victoria and Albert Museum (22 September 2015).

- [11] Ruthotto, Lars, & Haber, Eldad. "An Introduction to Deep Generative Modeling." (10 March 2021). https://arxiv.org/pdf/2103.05180.pdf

- [12] OpenAI. "Generative Models." openai.com (16 June 2016). https://openai.com/blog/generative-models/

- [13] Ahirwar, Kailash. "The Rise of Generative Adversarial Networks." medium.com (28 March 2019). https://blog.usejournal.com/the-rise-of-generative-adversarial-networks-be52d424e517

- [14] Li, Chunyuan, & Gao, Jianfeng. "A deep generative model trifecta: Three advances that work towards harnessing large-scale power." Microsoft Research (9 April 2020). https://www.microsoft.com/en-us/research/blog/a-deep-generative-model-trifecta-three-advances-that-work-towards-harnessing-large-scale-power/

- [15] Maxime. "What is a Transformer?" medium.com (4 January 2019). https://medium.com/inside-machine-learning/what-is-a-transformer-d07dd1fbec04

- [16] OpenAI. "DALL·E: Creating Images from Text." openai.com (5 January 2021). https://openai.com/blog/dall-e/

- [17] The Next Rembrandt. https://www.nextrembrandt.com

- [18] United Nations Educational, Scientific and Cultural Organization. "The Next Rembrandt." (March 2021). https://en.unesco.org/artificial-intelligence/ethics/cases

- [19] "The Next Rembrandt." nextrembrandt.com, ING. https://www.nextrembrandt.com/

- [20] Racter. "The Policeman’s Beard is Half Constructed: Computer Prose and Poetry." (1984). http://www.101bananas.com/poems/racter.html

- [21] Merchant, Brian. "The Poem That Passed the Turing Test." Vice (5 February 2015). https://www.vice.com/en/article/vvbxxd/the-poem-that-passed-the-turing-test

- [22] LA LA Film. "Sunspring: a short film written by an algorithm." https://lalafilmltd.wordpress.com/2016/06/11/sunspring-a-short-film-written-by-an-algorithm/ (11 June 2016)

- [23] Chu, Eric, & Roy, Deb. "Audio-Visual Sentiment Analysis for Learning Emotional Arcs in Movies." https://arxiv.org/pdf/1712.02896.pdf (8 December 2017)

- [24] Newitz, Annalee. "Movie written by algorithm turns out to be hilarious and intense." Ars Technica. https://arstechnica.com/gaming/2016/06/an-ai-wrote-this-movie-and-its-strangely-moving/ (9 June 2016)

- [25] Frohlick, Alex. "The possibility of an AI Auteur? AI Authorship In the AI Film." https://www.researchgate.net/publication/342591536_The_Possibility_of_an_AI_Auteur_AI_Authorship_In_the_AI_Film (July 2020)

- [26] Zakharov, Egor, & Shysheya, Aliaksandra. "Deepfakes generated from a single image." Wired. https://www.wired.com/story/deepfakes-getting-better-theyre-easy-spot/ (26 May 2019)

- [27] Whittaker, L., Kietzmann, T. C., Kietzmann, J., & Dabirian, A. "'All Around Me Are Synthetic Faces': The Mad World of AI-Generated Media." IT Professional, vol. 22, no. 5, pp. 90-99 (September-October 2020). doi:10.1109/MITP.2020.2985492

- [28] Zakharov, Egor, Shysheya, Aliaksandra, Burkov, Egor, & Lempitsky, Victor. "Few-Shot Adversarial Learning of Realistic Neural Talking Head Models." arXiv preprint arXiv:1905.08233 (2019). https://arxiv.org/pdf/1905.08233.pdf

- [29] Norton. "How To Spot Deepfake Videos: 15 Signs To Watch For." us.norton.com (2021). https://us.norton.com/internetsecurity-emerging-threats-how-to-spot-deepfakes.html

- [30] Li, Yuezun, Chang, Ming-Ching, & Lyu, Siwei. "In Ictu Oculi: Exposing AI Generated Fake Face Videos by Detecting Eye Blinking." arXiv preprint arXiv:1806.02877 (2018).

- [31] Mittal, Trisha, et al. "Emotions Don't Lie: A Deepfake Detection Method using Audio-Visual Affective Cues." arXiv preprint arXiv:2003.06711 (2020).

- [32] Hern, Alex. "Facebook Bans 'Deepfake' Videos in Run-Up To US Election." The Guardian (7 January 2020). https://www.theguardian.com/technology/2020/jan/07/facebook-bans-deepfake-videos-in-run-up-to-us-election

- [33] Diakopoulos, N., & Johnson, D. "Anticipating and addressing the ethical implications of deepfakes in the context of elections." New Media & Society, 1-27 (2020). https://doi.org/10.1177/1461444820925811

- [34] Vaccari, C., & Chadwick, A. "Deepfakes and disinformation: Exploring the impact of synthetic political video on deception, uncertainty, and trust in news." Social Media + Society, 1-13 (2020). https://doi.org/10.1177/2056305120903408

- [35] Yadlin-Segal, A., & Oppenheim, Y. "Whose dystopia is it anyway? Deepfakes and social media regulation." Convergence: The International Journal of Research into New Media Technologies, 27(1), 36-51 (2020). https://doi.org/10.1177/1354856520923963

- [36] Meskys, E., et al. "Regulating deep fakes: Legal and ethical considerations." Journal of Intellectual Property Law & Practice, 15(1), 24-31 (2020). https://doi.org/10.1093/jiplp/jpz167

- [37] Wu, Yuheng, Mou, Yi, Li, Zhipeng, & Xu, Kun. "Investigating American and Chinese Subjects’ explicit and implicit perceptions of AI-Generated artistic work." Computers in Human Behavior, vol. 104, 106186 (2020). ISSN 0747-5632. https://doi.org/10.1016/j.chb.2019.106186. Retrieved from https://www.sciencedirect.com/science/article/pii/S074756321930398X

- [38] Wu, Yuheng, et al. (2020). Same source as [37].

- [39] Bryner, Jeanna. "Creepy AI-Created Portrait Fetches $432,500 at Auction." Live Science. https://www.livescience.com/63929-ai-created-painting-sells.html (25 October 2018)

- [40] Epstein, Ziv, et al. "Who Gets Credit for AI-Generated Art?" iScience, vol. 23, no. 9, p. 101515 (2020). Elsevier Inc. doi:10.1016/j.isci.2020.101515

- [41] Arizona State University Center for Science and the Imagination. "Machines Who Write and Edit." https://csi.asu.edu/ideas/machines-who-write-and-edit/ (16 June 2016)

- [42] Ravid, Shlomit Yanisky. "Generating Rembrandt: Artificial Intelligence, Copyright, and Accountability in the 3A Era: The Human-like Authors are Already Here: A New Model." Michigan State Law Review (2017). https://ir.lawnet.fordham.edu/faculty_scholarship/956/