Difference between revisions of "Content moderation"

Revision as of 15:05, 23 April 2019

Content moderation is the process of monitoring, filtering and removing online user-generated content according to the rules of a private organization or the regulations of a government. It is used to restrict illegal or obscene content, spam, and content considered offensive or incongruous with the values of the moderator. When applied to dominant platforms with significant influence, content moderation may be conflated with censorship. Ethical issues involving content moderation include the psychological effects on content moderators, human and algorithmic bias in moderation, the trade-off between free speech and free association, and the impact of content moderation on minority groups.

Overview

Most types of moderation involve a top-down approach, where a moderator or small group of moderators is given discretionary power by a platform to approve or disapprove user-generated content. These moderators may be paid contractors or unpaid volunteers. A moderation hierarchy may exist, or each moderator may have independent and absolute authority to make decisions.

In general, content moderation can be broken down into 6 major categories:[1]

- Pre-moderation screens each submission before it is visible to the public. This creates a bottleneck in user engagement, and the delay may frustrate the user base. However, it ensures maximum protection against undesired content, eliminating the risk of exposure to unsuspecting users. It is only practical for small user communities; otherwise the flow of content would be slowed down too much. It was common in moderated newsgroups on Usenet.[2] Pre-moderation provides a high degree of control over what ends up visible to the public. This method is suited to communities where child protection is vital.

- Post-Moderation screens each submission after it is visible to the public. While preventing the bottleneck problem, it is still impractical for large user communities due to the vast number of submissions. Furthermore, as the content is often reviewed in a queue, undesired content may remain visible for an extended period of time, drowned out by benign content ahead of it, which must still be reviewed. This method is preferable to pre-moderation from a user-experience perspective, since the flow of content has not been slowed down by waiting for approval.

- Reactive moderation reviews only content that has been flagged by users. It retains the benefits of both pre- and post-moderation, allowing for real-time user engagement and the immediate review of only potentially undesired content. However, it is reliant on user participation and is still susceptible to benign content being falsely flagged. Therefore it is most practical for large user communities with a lot of user activity. Most modern social media platforms, including Facebook and YouTube, rely on this method. It makes the users themselves accountable for the information available and for determining what should or should not be taken down, and it scales more easily to a large number of users than either pre- or post-moderation.

- Distributed moderation is an exception to the top-down approach. It instead gives the power of moderation to the users, often making use of a voting system. This is common on Reddit and Slashdot, the latter also using a meta-moderation system, in which users also rate the decisions of other users.[3] This method scales well across user-communities of all sizes, but also relies on users having the same perception of undesired content as the platform. It is also susceptible to groupthink and malicious coordination, also known as brigading.[4]

- Automated moderation is the use of software to automatically assess content for desirability. It can be used in conjunction with any of the above moderation types. Its accuracy is dependent on the quality of its implementation, and it is susceptible to algorithmic bias and adversarial examples.[5] Copyright detection software on YouTube and spam filtering are examples of automated moderation.[6]

- No moderation is the lack of moderation entirely. Such platforms are often hosts to illegal and obscene content, and typically operate outside the law, such as The Pirate Bay and Dark Web markets. Spam is a perennial problem for unmoderated platforms, but may be mitigated by other methods, such as limited posting frequency and monetary barriers to entry. However, small communities with shared values and few bad actors can also thrive under no moderation, like unmoderated Usenet newsgroups.
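The difference between pre-, post-, and reactive moderation comes down to when a submission becomes visible and which submissions enter the review queue. The following is a minimal illustrative sketch of that distinction, not a description of how any real platform is implemented. All names in it (`Mode`, `Post`, `Platform`, `flag_threshold`, `no_spam`) are hypothetical, and the acceptability check stands in for a human moderator's judgment.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    PRE = "pre"            # reviewed before it becomes visible
    POST = "post"          # visible at once, every item reviewed afterwards
    REACTIVE = "reactive"  # visible at once, reviewed only if users flag it


@dataclass
class Post:
    text: str
    visible: bool = False
    flags: int = 0
    reviewed: bool = False


class Platform:
    """Hypothetical platform used only to contrast the three modes."""

    def __init__(self, mode, is_acceptable, flag_threshold=1):
        self.mode = mode
        self.is_acceptable = is_acceptable    # stand-in for a moderator's decision
        self.flag_threshold = flag_threshold  # assumed number of flags that triggers review
        self.posts = []

    def submit(self, text):
        # Pre-moderation holds the post back; the other modes publish immediately.
        post = Post(text, visible=(self.mode is not Mode.PRE))
        self.posts.append(post)
        return post

    def flag(self, post):
        post.flags += 1

    def review_queue(self):
        pending = [p for p in self.posts if not p.reviewed]
        if self.mode is Mode.REACTIVE:
            # Only flagged content ever reaches a moderator.
            pending = [p for p in pending if p.flags >= self.flag_threshold]
        return pending

    def run_review(self):
        # Review everything currently queued and return how many items were reviewed.
        queue = self.review_queue()
        for post in queue:
            post.reviewed = True
            post.visible = self.is_acceptable(post.text)
        return len(queue)


def no_spam(text):
    # Toy rule for the example; real decisions are far more nuanced.
    return "spam" not in text.lower()
```

Under these assumptions, a platform built with `Mode.PRE` shows nothing until `run_review()` approves it, one built with `Mode.POST` shows everything and later hides what fails review, and one built with `Mode.REACTIVE` never reviews a post that no user has flagged, which is why its review workload grows with the number of flags rather than the number of submissions.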

History

Pre-1993: Usenet and the Open Internet

Usenet emerged in the early 1980s as a network of university and private computers, and quickly became the world's first Internet community. The decentralized platform hosted a collection of message boards known as newsgroups. These newsgroups were small communities by modern standards, and consisted of like-minded, technologically inclined users sharing the hacker ethic. This collection of principles, including "access to computers should be unlimited", "mistrust authority: promote decentralization", and "information wants to be free", created a culture that was resistant to moderation and free of top-down censorship.[7] The default assumptions were that users acted in good faith and that new users could be gradually assimilated into the shared culture. As a result, only a minority of newsgroups were moderated, most allowing anyone to post however they pleased, as long as they followed the community's social norms, known as "netiquette."[8] Furthermore, the Internet, in general, was considered separate and distinct from the physical space in which its servers were located, existing in its own "cyberspace" not subject to the will of the state. Throughout this era of the Open Internet, online activity mostly escaped the notice of government regulators, creating a policy gap that only began to close in the late 1990s.[9]

1994 - 2005: Eternal September and Growth

In September 1993, AOL began offering Usenet access to its subscribers. The resulting flood of users arrived too quickly and in too great a number to be assimilated into the existing culture, and the shared values that had allowed unmoderated newsgroups to flourish were lost. This became known as the Eternal September, and the resulting growth transformed the Internet from a high-trust to a low-trust community.[10] The consequences of this transformation were first seen in 1994, when the first large-scale commercial spam was posted across Usenet.[11] The spam outraged Usenet users, and the first anti-spam bot was created in response, ushering in the era of content moderation.[12]

With the rise of the World Wide Web, users began to drift away from Usenet, while thousands of forums and blogs emerged as replacements. These small communities were often overseen by single individuals or small teams who exercised total moderating control over their domains. In response to the growth of spam and other bad actors, they often had much stricter rules than early Usenet groups. However, the marketplace of available forums and places of discussion was so vast that a user who did not like the moderation policies of one platform could easily move to another.

As corporate platforms matured, they began to adopt limited content policies as well, though in a more ad-hoc manner. In 2000, CompuServe was the first platform to develop an "Acceptable Use" policy, which banned racist speech.[13] eBay soon followed in 2001, banning the sale of hate memorabilia and propaganda.[14]

2006 - 2010: Social Media and Early Corporate Moderation

In the mid-2000s, social media platforms such as YouTube, Twitter, Tumblr, Reddit, and Facebook began to emerge, and quickly became dominant, centralized platforms that gradually displaced the multitude of blogs and message boards as a place for user discussion. These platforms initially struggled with content moderation. YouTube in particular developed ad-hoc policies from individual cases, gradually building up an internal set of rules that was opaque, arbitrary, and difficult for moderators to apply.[13][15]

Other platforms, such as Twitter and Reddit, adopted the unmoderated, free speech ethos of old, with Twitter claiming to be the "free speech wing of the free speech party" and Reddit stating that "distasteful" subreddits would not be removed, "even if we find it odious or if we personally condemn it."[16][17]

2010 - Present: Centralization and Expanded Moderation

Throughout the 2010s, as social media platforms became ubiquitous, the ethics of their moderation policies were brought into question. As these centralized platforms began to have significant influence over national and international discourse, concerns were raised over both the presence of offensive content and the stifling of expression.[18][19] Internet infrastructure providers also began to remove content hosted on their platforms.

In 2010, WikiLeaks leaked the US Diplomatic Cables and hosted the documents on Amazon Web Services. Amazon later removed them for violating its content policies. WikiLeaks' DNS provider also decided to drop the site, effectively removing WikiLeaks from the Internet until an alternative host could be found.[20]

In 2012, Reddit user /u/violentacrez was doxxed by Gawker Media for moderating several controversial subreddits, including /r/Creepshots. The subsequent media spotlight caused Reddit to reconsider its minimalist approach to content moderation.[21] This set a precedent that was used to ban more subreddits over the next few years. In 2015, Reddit banned /r/FatPeopleHate, which marked a turning point at which Reddit no longer considered itself a "bastion of free speech."[22] In 2019, Reddit banned /r/WatchPeopleDie in an effort to suppress the spread of the Christchurch mass shooting video, a move widely regarded as censorship.[23]

In 2015, Instagram came under fire for moderating female nipples, but not male nipples. It was later revealed that this decision was in turn due to the content moderation policies for apps in Apple's App Store.[24]

In 2016, in the aftermath of Gamergate and its associated harassment, Twitter instituted the Trust and Safety Council and began enforcing stricter moderation policies on its users.[25] In 2019, Twitter was heavily criticized for political bias, inconsistency, and lack of transparency in its moderation practices.[26]

In 2018, Tumblr banned all adult content from its platform. This resulted in the mass removal of LGBT and GSM support groups and communities.[27]

In 2019, politicians pushed for regulation of content on Google and Facebook, specifically regarding online hate speech. The increase in terrorist attacks and real-world violence has caused concern that these "tech giants have become digital incubators for some of the most deadly, racially motivated attacks around the world," including the white-supremacist attack in Virginia and the synagogue shooting in Pennsylvania.[28] The mosque attack in Christchurch, New Zealand, which the attacker livestreamed on Facebook, is an example of why the push for regulation has become so prominent. In response, Facebook said it would reexamine its procedures for identifying violent content and quickly removing it.[29]

Ethical Issues

The ethical issues regarding content moderation include how it is carried out, the possible bias of those who carry it out, and the negative effects this kind of job has on moderators. The problem lies in the fact that content moderation cannot be carried out entirely by an autonomous program, since many cases are highly nuanced and can be judged only by knowing the context and the way humans might perceive the content. Not only is this job often ill-defined in terms of policy, but content moderators are also expected to make very difficult judgments while being afforded little to no margin for error.

Virtual Sweatshops

Often, companies outsource their content moderation tasks to third parties. This work cannot be done by computer algorithms because it is often very nuanced, which is where virtual sweatshops enter the picture. Virtual sweatshops enlist workers to complete mundane tasks for which they receive a small monetary reward. While some view this as a new market for human labor with extreme flexibility, there are also concerns about labor laws. No policy yet regulates internet labor, which allows companies to rely on teams of people overseas who are underpaid for the labor they perform. Companies overlook, and often choose not to acknowledge, the hands-on effort they require. Human error is inevitable, which raises concerns about privacy and trust when information is sent to these third-party moderators.[30]

Google's Content Moderation & the Catsouras Scandal

Google is home to a practically endless amount of content and information, most of which is not regulated. In 2006, Nikki Catsouras, an 18-year-old in Southern California, crashed her car and was killed and decapitated. Members of the police force at the scene were tasked with photographing it. However, as a Halloween joke, a few of the members who took the photos sent them around to various friends and family members. The pictures of Nikki's mutilated body were then passed around the internet and were easily accessible via Google. The Catsouras family was devastated that pictures of their daughter were being viewed by millions, and desperately attempted to get them removed from the Google platform. However, Google refused to comply with the family's plea. This is a clear ethical dilemma involving content moderation: the pictures were never meant to be released to the public and their spread was very painful for the family, but because Google did not want to begin moderating specific content on its platform, it did nothing. This raises the ethical question of whether people have "The Right to Be Forgotten".[31]

Another major ethical issue with the moderation of content online is that the owners of the content or platform decide what is and is not moderated. Numerous people and companies have claimed that Google purposefully moderates content that directly competes with its own products. Shivaun Moeran and Adam Raff are two computer scientists who together created a powerful search platform called Foundem.com. The website was helpful for finding many kinds of information, and was particularly helpful for finding the cheapest possible items being sold on the internet. The key to the site was a vertical search algorithm, a complex algorithm that focuses on searching within a specific domain. This vertical search algorithm was significantly more powerful than Google's horizontal search algorithm. The couple launched their site and within the first few days experienced great success and many site visitors. After a few days, however, the visitor rate decreased significantly. They discovered that their site had been pushed multiple pages back in Google's results, which they attributed to the fact that it directly competed with Google Shopping, Google's own service. Moeran and Raff filed numerous lawsuits and met with people at Google and other large companies to figure out what the issue was and how they could get it fixed, but were met with silence or ambiguity. Foundem.com never became the next big search engine, partly because of the ethical issues seen in content moderation by Google.[32]

Psychological Effects on Moderators

Content moderation can have significant negative effects on the individuals tasked with carrying out the moderation. Because most content must be reviewed by a human, professional content moderators spend hours every day reviewing disturbing images and videos, including pornography (sometimes involving children or animals), gore, executions, animal abuse, and hate speech. Viewing such content repeatedly, day after day, can be stressful and traumatic, with moderators sometimes developing PTSD-like symptoms. Others, after continuous exposure to fringe ideas and conspiracy theories, develop intense paranoia and anxiety, and begin to accept those fringe ideas as true.[13][33][34]

Further negative effects are brought on by the stress of applying the subjective and inconsistent rules regarding content moderation. Moderators are often called upon to make judgment calls regarding ambiguously-objectionable material or content that is offensive but breaks no rules. However, the performance of their moderation decisions is strictly monitored and measured against the subjective judgment calls of other moderators. A few mistakes are all it takes for a professional moderator to lose their job.[34]

A report detailing the lives of Facebook content moderators described the poor conditions these workers are subject to.[35] Even though content moderators have an emotionally intense, stressful job, they are often underpaid. Facebook does provide forms of counseling to its moderators, but many are dissatisfied with the service.[35] The employees review a significant amount of traumatizing material daily, but it is their responsibility to seek therapy if needed, which is difficult for many. They are also required to oversee content constantly and are allotted only two 15-minute breaks and a half-hour lunch break. In cases where they review particularly horrifying content, they are given only a nine-minute break to recover.[35] Facebook is often criticized for its treatment of its content moderators.

Information Transparency

Information transparency is the degree to which information about a system is visible to its users.[36] By this definition, content moderation is not transparent at any level. First, content moderation is often not transparent to the public, those it is trying to moderate. While a platform may have public rules regarding acceptable content, these are often vague and subjective, allowing the platform to enforce them as broadly or as narrowly as it chooses. Furthermore, such public documents are often supplemented by internal documents accessible only to the moderators themselves.[13]

Secondly, content moderation is not transparent at the level of moderators either. The internal documents are often as vague as the public ones and contain significantly more internal inconsistencies and judgment calls that make them difficult to apply fairly. Furthermore, such internal documents are often contradicted by statements from higher-ups, which in turn may be contradicted by similar statements.[34]

Finally, even at the corporate level where policy is set, moderation is not transparent. Moderation policies are often created by an ad-hoc, case-by-case process and applied in the same manner. Some content that would normally be removed by moderation rules will be accepted for special circumstances, such as "newsworthiness". For example, videos of violent government suppression could be displayed or not, depending on the whims of moderation policy-makers and moderation QAs at the time.[13]

Bias

Due to its inherently subjective nature, content moderation can suffer from various kinds of bias. Algorithmic bias is possible when automated tools are used to remove content. For example, YouTube's automated Content ID tools may flag reviews of films or games that feature clips or gameplay as copyright violations, even though such use for the purpose of criticism is Fair Use.[6] Moderation may also suffer from cultural bias, when something considered objectionable by one group may be considered fine by another. For example, moderators tasked with removing content that depicts minors engaging in violence may disagree over what constitutes a minor. Classification of obscenity is also culturally biased, with different societies around the world having different standards of modesty.[13][15] Moderation, whether performed by humans or by automated systems, may be inherently flawed in that the subjective judgment of what is right versus what is wrong is difficult to lay out in concrete terms. While there is no uniform solution to issues of bias in content moderation, some have suggested that a utilitarian approach may serve as a guiding ethical standard.[37]

Free Speech and Censorship

Content moderation often finds itself in conflict with the principles of free speech, especially when the content it moderates is of a political, social, or controversial nature.[15] On the one hand, internet platforms are private entities with full control over what they allow their users to post. On the other hand, large, dominant social media platforms like Facebook and Twitter have significant influence over public discourse and act as effective monopolies on audience engagement. In this sense, centralized platforms act as modern-day agoras, where John Stuart Mill's "marketplace of ideas" allows good ideas to be separated from the bad without top-down influence.[19] When corporations are allowed to decide with impunity what is or isn't allowed to be discussed in such a space, they circumvent this process and stifle free speech on the primary channel individuals use to express themselves.[18]

Anonymous Social Media

Social media sites created with the intention of keeping users anonymous so that they may post freely raise ethical concerns. Formspring, which is now defunct, was a platform that allowed anonymous users to ask selected individuals questions. Ask.fm, a similar site, has outlived its rival. However, some of the content submitted by anonymous users on these sites consists of hateful comments that contribute to cyberbullying. There have been two suicides linked to cyberbullying on Formspring.[39][40] In 2013, when Formspring shut down, Ask.fm began taking a more active approach to content moderation.

Other, similar anonymous apps include Yik Yak, Secret (now defunct), and Whisper. Learning from their predecessors and competition, Yik Yak and Whisper have also taken a more active approach to content moderation, employing not just people but also algorithms to moderate content.[41]

Bots

Although a lot of content moderation cannot be handled by computer algorithms and must be outsourced to "virtual sweatshops", a lot of content is still moderated through the use of computer bots. The use of these bots naturally comes with many ethical concerns.[42] The largest concern lies among academics, an increasing portion of whom are worried that auto-moderation cannot be effectively implemented on a global scale.[43] UCLA Assistant Professor Sarah Roberts said in an interview with Motherboard regarding Facebook's attempt at global auto-moderation, "it’s actually almost ridiculous when you think about it... What they’re trying to do is to resolve human nature fundamentally."[44] In arguing that auto-moderation is not feasible, the article also reports that Facebook CEO Mark Zuckerberg and COO Sheryl Sandberg often have to weigh in on content moderation themselves, a testament to how situational and subjective the job is.[44]

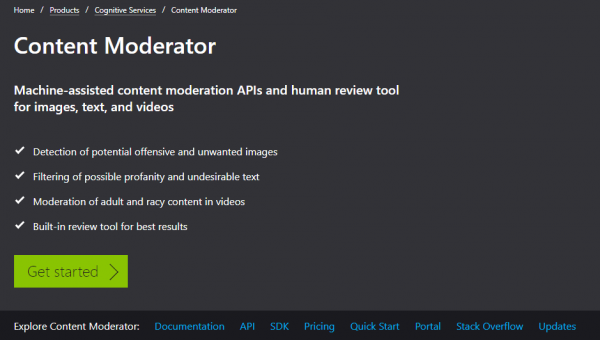

Tech companies such as Microsoft, through its Azure cloud service, have begun offering automated content moderation packages for purchase by other companies.[38] The Microsoft Azure Content Moderator advertises image moderation, text moderation in over 100 languages that monitors for profanity and contextualized offensive language, and video moderation including recognition of "racy" content, as well as a human review tool for situations where the automated moderator is unsure of what to do.[38]

See Also

References

- ↑ Grime-Viort, Blaise (December 7, 2010). "6 Types of Content Moderation You Need to Know About". Social Media Today. Retrieved March 26, 2019.

- ↑ Big-8.org. August 4, 2012. "Moderated Newsgroups". Archived from the original on August 4, 2012. Retrieved March 26, 2019.

- ↑ "Moderation and Metamoderation". Slashdot. Retrieved March 26, 2019.

- ↑ "Reddiquette: In Regard to Voting". Reddit. January 18, 2018. Retrieved March 26, 2019.

- ↑ Goodfellow, Ian; Papernot, Nicolas; et al (February 24, 2017). "Attacking Machine Learning with Adversarial Examples". OpenAI. Retrieved March 26, 2019.

- ↑ 6.0 6.1 Tassi, Paul (December 19, 2013). "The Injustice of the YouTube Content ID Crackdown Reveals Google's Dark Side". Forbes. Retrieved March 26, 2019.

- ↑ Levy, Steven (2010). "Chapter 2: The Hacker Ethic". Hackers: Heroes of the Computer Revolution. pp. 27-31. ISBN 978-1-449-38839-3. Retrieved March 26, 2019.

- ↑ Kehoe, Brendan P. (January 1992). "4. Usenet News". Zen and the Art of the Internet. Retrieved March 26, 2019.

- ↑ Palfrey, John (2010). "Four Phases of Internet Regulation". Social Research. 77 (3): 981-996. Retrieved from http://www.jstor.org/stable/40972303 on March 26, 2019.

- ↑ Koebler, Jason (September 30, 2015). "It's September Forever". Motherboard. Retrieved March 26, 2019.

- ↑ Everett-Church, Ray (April 13, 1999). "The Spam That Started It All". Wired. Retrieved March 26, 2019.

- ↑ Gulbrandsen, Arnt (October 12, 2009). "Canter & Siegel: What actually happened". Retrieved March 26, 2019.

- ↑ 13.0 13.1 13.2 13.3 13.4 13.5 Buni, Catherine; Chemaly, Soraya (March 13, 2016). "The Secret Rules of the Internet". The Verge. Retrieved March 26, 2019.

- ↑ Cox, Beth (May 3, 2001). "eBay Bans Nazi, Hate Group Memorabilia". Internet News. Retrieved March 26, 2019.

- ↑ 15.0 15.1 15.2 Rosen, Jeffrey (November 28, 2008). "Google's Gatekeepers". New York Times. Retrieved March 26, 2019.

- ↑ Halliday, Josh (March 22, 2012). "Twitter's Tony Wang: 'We are the free speech wing of the free speech party'". The Guardian. Retrieved March 26, 2019.

- ↑ "Reddit will not ban 'distasteful' content, chief executive says". BBC News. October 17, 2012. Retrieved March 26, 2019.

- ↑ 18.0 18.1 Masnick, Mike (August 9, 2018). "Platforms, Speech and Truth: Policy, Policing and Impossible Choices". Techdirt. Retrieved March 26, 2019.

- ↑ 19.0 19.1 Jeong, Sarah (2018). The Internet of Garbage. ISBN 978-0-692-18121-8. Retrieved March 26, 2019.

- ↑ Arthur, Charles; Halliday, Josh (December 3, 2010). "WikiLeaks fights to stay online after US company withdraws domain name". The Guardian. Retrieved March 26, 2019.

- ↑ Boyd, Danah (October 29, 2012). "Truth, Lies and 'Doxing': The Real Moral of the Gawker/Reddit Story". Wired. Retrieved March 26, 2019.

- ↑ "Removing Harassing Subreddits". June 10, 2015. Reddit. Retrieved March 26, 2019.

- ↑ Hatmaker, Taylor (March 15, 2019). "After Christchurch, Reddit bans communities infamous for sharing graphic videos of death". TechCrunch. Retrieved March 26, 2019.

- ↑ Kleeman, Sophie (October 1, 2015). "Instagram Finally Revealed the Reason It Banned Nipples - It's Apple". Mic. Retrieved March 26, 2019.

- ↑ Cartes, Patricia (February 9, 2016). "Announcing the Twitter Trust & Safety Council". Twitter. Retrieved March 26, 2019.

- ↑ Rogan, Joe (March 5, 2019). "Jack Dorsey, Vijaya Gadde, & Tim Pool". Joe Rogan Experience (Podcast). Retrieved March 26, 2019.

- ↑ Ho, Vivian (December 4, 2018). "Tumblr's adult content ban dismays some users: 'It was a safe space'". The Guardian. Retrieved March 26, 2019.

- ↑ Romm, T. (2019) The Washington Post. https://www.washingtonpost.com/technology/2019/04/08/facebook-google-be-quizzed-white-nationalism-political-bias-congress-pushes-dueling-reasons-regulation/?noredirect=on&utm_term=.e0dfe2d9dea7

- ↑ Shaban, H. (2019). Facebook to reexamine how live stream videos are flagged after Christchurch shooting. The Washington Post. https://www.washingtonpost.com/technology/2019/03/21/facebook-reexamine-how-recently-live-videos-are-flagged-after-christchurch-shooting/?utm_term=.a604cc1428b8

- ↑ Zittrain, Jonathan. "The Internet Creates a New Kind of Sweatshop." Newsweek. December 7, 2009. https://www.newsweek.com/internet-creates-new-kind-sweatshop-75751

- ↑ Toobin, Jeffrey. “The Solace of Oblivion.” The New Yorker, 22 Sept. 2014, www.newyorker.com/.

- ↑ Duhigg, Charles. “The Case Against Google.” The New York Times, The New York Times, 20 Feb. 2018, www.nytimes.com/.

- ↑ Chen, Adrian (October 23, 2014). "The Laborers Who Keep Dick Pics and Beheadings Out of Your Facebook Feed". Wired. Retrieved March 26, 2019.

- ↑ 34.0 34.1 34.2 Newton, Casey (February 25, 2019). "The Trauma Floor: The Secret Lives of Facebook Moderators in America". The Verge. Retrieved March 26, 2019.

- ↑ 35.0 35.1 35.2 Simon, Scott, and Emma Bowman. “Propaganda, Hate Speech, Violence: The Working Lives Of Facebook's Content Moderators.” NPR, NPR, 2 Mar. 2019, www.npr.org/2019/03/02/699663284/the-working-lives-of-facebooks-content-moderators.

- ↑ Turilli, Matteo; Floridi, Luciano (March 10, 2009). "The ethics of information transparency". Ethics and Information Technology. 11 (2): 105-112. doi:10.1007/s10676-009-9187-9. Retrieved March 26, 2019.

- ↑ Mandal, Jharna, et al. “Utilitarian and Deontological Ethics in Medicine.” Tropical Parasitology, Medknow Publications & Media Pvt Ltd, 2016, www.ncbi.nlm.nih.gov/pmc/articles/PMC4778182/.

- ↑ 38.0 38.1 38.2 "Content Moderator." Microsoft Azure. https://azure.microsoft.com/en-us/services/cognitive-services/content-moderator/

- ↑ James, Susan Donaldson. “Jamey Rodemeyer Suicide: Police Consider Criminal Bullying Charges.” ABC News, ABC News Network, 22 Sept. 2011, abcnews.go.com/.

- ↑ “Teenager in Rail Suicide Was Sent Abusive Message on Social Networking Site.” The Telegraph, Telegraph Media Group, 22 July 2011, www.telegraph.co.uk/.

- ↑ Deamicis, Carmel. “Meet the Anonymous App Police Fighting Bullies and Porn on Whisper, Yik Yak, and Potentially Secret.” Gigaom – Your Industry Partner in Emerging Technology Research, Gigaom, 8 Aug. 2014, gigaom.com/.

- ↑ Bengani, Priyanjana. “Controlling the Conversation: The Ethics of Social Platforms and Content Moderation.” Columbia University in the City of New York, Apr. 2018, www.columbia.edu/content/.

- ↑ Newton, Casey. "Facebook’s content moderation efforts face increasing skepticism." The Verge. 24 August 2018. https://www.theverge.com/2018/8/24/17775788/facebook-content-moderation-motherboard-critics-skepticism

- ↑ 44.0 44.1 Koebler, Jason. "The Impossible Job: Inside Facebook’s Struggle to Moderate Two Billion People." Motherboard. 23 August 2018. https://motherboard.vice.com/en_us/article/xwk9zd/how-facebook-content-moderation-works