Difference between revisions of "Virtual Reality Data Practices"


Revision as of 06:47, 28 January 2022

Virtual Reality (VR) is a fully immersive software-generated, artificial, digital environment. VR is a simulation of three-dimensional images, experienced by users via special electronic equipment, such as a Head Mounted Display (HMD). VR can create or enhance characteristics such as presence, embodiment, and agency.[1] VR technologies are a collection of sensors and displays that work together to create an immersive experience for the user. VR technologies create the illusion of virtual elements in three-dimensional physical space. These technologies require certain basic user-provided information as a starting point, and then a constant stream of new feedback data that users generate while interacting with their virtual environments. This baseline and ongoing feedback information could include biographical, demographic, location, and movement data, as well as biometrics. Advanced functions, such as gaze-tracking and even brain-computer interface (BCI) technologies that interpret neural signals, continue to introduce new consumer data collection practices largely unique to VR devices and applications.[2]


VR Device Categories

Standalone devices have all necessary components to provide virtual reality experiences integrated into the headset. Mainstream standalone VR platforms include Oculus Mobile SDK, developed by Oculus VR for its standalone headsets, and the Samsung Gear VR.[3]

Tethered headsets act as a display for another device, such as a PC or a video game console, to provide a virtual reality experience. Mainstream tethered VR platforms include SteamVR, part of the Steam service by Valve; the Oculus PC SDK for Oculus Rift and Oculus Rift S; Windows Mixed Reality (also referred to as "Windows MR" or "WMR"), developed by Microsoft Corporation for Windows 10 PCs; PlayStation VR, developed by Sony Computer Entertainment for use with the PlayStation 4 home video game console; and Open Source Virtual Reality (also referred to as "OSVR").[4]

Other categories include mobile headsets, which combine a smartphone with a mount, and hybrid solutions like the Oculus Quest with the Oculus Link feature, which allows the standalone device to also serve as a tethered headset.[5]

VR Related Terminology

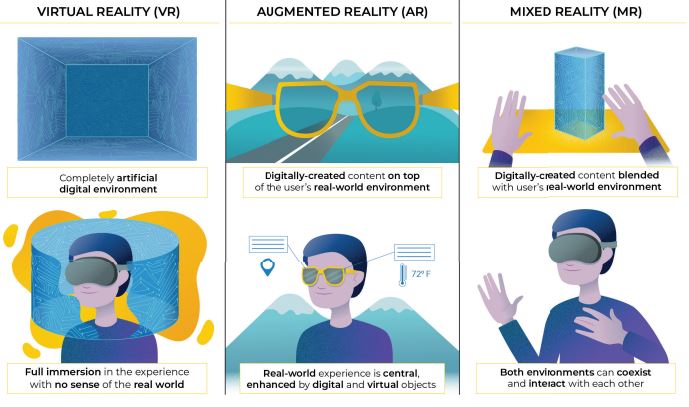

Extended Reality (XR) is a fusion of all the realities – including Augmented Reality (AR), Virtual Reality (VR), and Mixed Reality (MR). These technologies are mediated experiences enabled via a wide spectrum of hardware and software, including sensory interfaces, applications, and infrastructures. XR is often referred to as immersive video content, enhanced media experiences, as well as interactive and multi-dimensional human experiences.[6]

“XR does not refer to any specific technology. It’s a bucket for all of the realities.” Jim Malcolm, Humaneyes[7]

Augmented Reality (AR) overlays digitally-created content on top of the user’s real-world environment, viewed through a device that incorporates real-time inputs to create an enhanced version of reality. Digital and virtual objects, such as graphics and sounds, are superimposed on an existing environment to create an AR experience.[8]

Mixed Reality (MR) blends the user’s real-world environment with digitally-created content, where both environments can coexist and interact with each other. In MR, the virtual objects behave in all aspects as if they are present in the real world, e.g., they are occluded by physical objects, their lighting is consistent with the actual light sources in the environment, and they sound as though they are in the same space as the user. As the user interacts with the real and virtual objects, the virtual objects will reflect the changes in the environment as would any real object in the same space.[9]

Volumetric Video Capture is a technique that captures a three-dimensional space, including depth data, so that it can be later viewed from any angle at any moment in time.[10]

360 Degree Video is an immersive video format consisting of a video – or series of images – mapped to a portion of a sphere that allows viewing in multiple directions from a fixed central point. The mapping is usually carried out using equirectangular projection, where the horizontal coordinate is simply longitude, and the vertical coordinate is simply latitude, with no transformation or scaling applied. Other possible projections are Cube Map (that uses the six faces of a cube as the map shape), Equi-Angular Cubemap – EAC (detailed by Google in 2017 to distribute pixels as evenly as possible across the sphere so that the density of information is consistent, regardless of which direction the viewer is looking), and Pyramid (defined by Facebook in 2016). This type of video content is typically viewable through a head-mounted display, mobile device, or personal computer and allows for three degrees of freedom.[11]
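The equirectangular mapping described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the function name `direction_to_equirect`, the axis convention (+y up, -z forward), and the choice of placing longitude 0 at the center of the frame are all assumptions made for the example.

```python
import math


def direction_to_equirect(x, y, z, width, height):
    """Map a 3D view direction to pixel coordinates in an equirectangular frame.

    Assumed convention (not from the source): +y is up, -z is forward,
    longitude 0 is the horizontal center of the frame, and v = 0 is the top row.
    """
    norm = math.sqrt(x * x + y * y + z * z)
    if norm == 0:
        raise ValueError("direction must be non-zero")
    x, y, z = x / norm, y / norm, z / norm

    lon = math.atan2(x, -z)  # longitude in [-pi, pi]
    lat = math.asin(y)       # latitude in [-pi/2, pi/2]

    # Horizontal pixel is linear in longitude, vertical pixel is linear in latitude.
    u = ((lon / (2 * math.pi) + 0.5) * width) % width
    v = (0.5 - lat / math.pi) * height
    return u, v
```

Because both pixel coordinates are linear in the angles, regions near the poles are stretched across many more pixels than regions near the equator, which is the uneven density that projections such as the Equi-Angular Cubemap aim to reduce.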

Field of View (FOV) defines an observable area or the range of vision seen via an XR device, such as an HMD, when the user is static within a given XR environment. The standard human FOV is approximately 200 degrees, but in an immersive experience, it may vary. The higher the FOV, the more immersive the feeling.[12]

Degrees of Freedom (DoF) describes the position and orientation of an object in space. DoF is defined by three components of translation and three components of rotation.

An experience with three degrees of freedom (3DoF) allows for:

• Swiveling left and right (yawing);
• Tilting forward and backward (pitching);
• Pivoting side to side (rolling).

An experience with six degrees of freedom (6DoF) allows for:

• Moving up and down (elevating/heaving, Y translation);
• Moving left and right (strafing/swaying, X translation);
• Moving forward and backward (walking/surging, Z translation);
• Swiveling left and right (yawing);
• Tilting forward and backward (pitching);
• Pivoting side to side (rolling).[13]
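One way to picture the difference between 3DoF and 6DoF is as a data structure with three translation components and three rotation components. The sketch below is illustrative only; the class name `Pose6DoF`, its field names, and the choice of degrees as the rotation unit are assumptions, not part of any particular SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose6DoF:
    """Hypothetical pose record: three translations plus three rotations."""
    x: float = 0.0      # left/right (sway)
    y: float = 0.0      # up/down (heave)
    z: float = 0.0      # forward/backward (surge)
    yaw: float = 0.0    # swivel left/right, in degrees
    pitch: float = 0.0  # tilt forward/backward, in degrees
    roll: float = 0.0   # pivot side to side, in degrees

    def as_3dof(self) -> "Pose6DoF":
        """Keep only orientation, as a 3DoF experience would."""
        return Pose6DoF(yaw=self.yaw, pitch=self.pitch, roll=self.roll)
```

A 3DoF headset effectively reports only the last three fields, so walking across the room changes nothing in the virtual scene, whereas a 6DoF headset updates all six.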

Head Mounted Display (HMD) usually refers to a device with a small display, such as projection technology, integrated into eyeglasses or mounted on a helmet or hat. It’s typically in the form of goggles or a headset, either standalone or combined with a mobile phone (e.g., Gear VR).[14]

Haptics is a mechanism or technology used for tactile feedback to enhance the experience of interacting with onscreen interfaces via vibration, touch, or force feedback. While an HMD can create a virtual sense of sight and sound, haptic controllers create a virtual sense of touch.[15]

Growth of VR

In the 1920s, the Link Flight Trainer was developed to improve the safety of trainee pilots. Grounded in ‘safety’, this kick-started the development of the technologies we now know as XR. The development of VR technologies has accelerated significantly since the rapid growth of cheap computing power in the 1990s. This acceleration in availability and adoption can be attributed to many factors, principally a reduction in hardware cost, increases in the availability of high-speed, high-quality connectivity, and, most recently, shifts in society brought on by the global pandemic. According to the market analysts Fortune Business Insights, the size of the VR market in 2019 was USD 3.10 billion and is forecast to grow to USD 120.5 billion by 2026.[16]

Leveraging its 2014 acquisition of Oculus, Facebook launched a closed-beta test of its VR social network, Horizon, early in 2020. Horizon is only part of a broader project known as the “Metaverse”. The Metaverse Facebook proposes will consist of experiences that leverage fully immersive VR technologies, as well as augmented reality devices, nascent technologies such as holographic displays, and traditional information systems including billboards, televisions, shop windows, and transport information screens.[17]

VR Data Practices

Collection: VR devices rely on information from multiple sources to deliver an optimal user experience and achieve functions other consumer devices cannot.[18] The data they collect can be categorized into four types:

Observable: Information about an individual that VR technologies, as well as other third parties, can both observe and replicate, such as digital media the individual produces or their digital communications. A user’s avatar, or virtual representation of themselves, may be considered observable personal information. In addition to virtual representations of the user’s physical self, a VR device also collects certain observable data about user social interactions and affiliations in-world. As with other technologies, certain forms of communication such as instant messages constitute observable data. Video, images, or screenshots that identify an individual participating in certain activities are also observable data.[19]

Observed: Information an individual provides or generates, which third parties can observe but not replicate, such as biographical information or location data. A significant amount of VR data falls into this category due to the reliance on sensors to replicate physical experiences in virtual spaces. This includes information about the user’s position in physical space, such as Global Positioning System (GPS), inertial measurement unit (IMU), and gyroscope or accelerometer data, as well as information about their surroundings collected through a mobile device’s camera or external-facing cameras on a head-mounted or heads-up display, lidar, and other spatial sensors. VR devices also track certain movements and collect observed biometric data to replicate a user’s actions in virtual space.[20]

Computed: New information that AR/VR technologies infer by manipulating observable and observed data, such as biometric identification or advertising profiles. Descriptive information about users, such as demographic information, location, and in-app/in-world behavior or activities, can be combined and analyzed to tailor advertisements, recommendations, and content to individuals. VR devices can also generate computed data from the various sensors they include. Hand-tracking technologies use observed images of a user’s hand and machine-learning technologies to estimate important information such as the size, shape, and positioning of users’ hands and fingers. Biometric identification, such as iris scanning or facial recognition, can also be computed data. In the future, computed data could also result from the interpretation of neural signals into actionable commands.[21]

Associated: Information that, on its own, does not provide descriptive details about an individual, such as a username or IP address. User-provided associated data also includes details such as login information (i.e., usernames and passwords) for applications and services; user contact information, such as email, phone number, and home address; and user payment information, such as credit card and bank account numbers.[22]
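The four categories above can be treated as a simple classification scheme, for example when auditing which fields an application stores. The sketch below is only an illustration: the enum `DataCategory`, the field names in `EXAMPLE_FIELDS`, and the helper `group_by_category` are hypothetical, and the assignments simply mirror the examples given in the text.

```python
from collections import defaultdict
from enum import Enum


class DataCategory(Enum):
    OBSERVABLE = "observable"  # others can observe and replicate it
    OBSERVED = "observed"      # others can observe but not replicate it
    COMPUTED = "computed"      # inferred from observable and observed data
    ASSOCIATED = "associated"  # not descriptive of the person on its own


# Hypothetical field names, mapped according to the examples in the text.
EXAMPLE_FIELDS = {
    "avatar": DataCategory.OBSERVABLE,
    "instant_message": DataCategory.OBSERVABLE,
    "gps_location": DataCategory.OBSERVED,
    "imu_reading": DataCategory.OBSERVED,
    "hand_pose_estimate": DataCategory.COMPUTED,
    "advertising_profile": DataCategory.COMPUTED,
    "username": DataCategory.ASSOCIATED,
    "ip_address": DataCategory.ASSOCIATED,
}


def group_by_category(fields):
    """Group known field names by category; unknown names are skipped."""
    groups = defaultdict(list)
    for name in fields:
        category = EXAMPLE_FIELDS.get(name)
        if category is not None:
            groups[category].append(name)
    return dict(groups)
```

In practice the boundaries are less tidy than a lookup table suggests, since the same sensor stream can be observed data in raw form and computed data once a model has interpreted it.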

VR Data Tracking Practices

Positional Tracking (Head/Eye/Full Body/Inside-Out/Outside-In) is a technology that allows a device to estimate its position relative to the environment around it. It uses a combination of hardware and software to detect its absolute position. Positional tracking is an essential technology for XR, making it possible to track movement with six degrees of freedom (6DoF). Some VR headsets require a user to stay in one place or to move using a controller, but headsets with positional tracking allow a user to move in virtual space by moving around in real space.[23]

Head tracking refers to software detection of, and response to, the movement of the user’s head. Typically, it’s used to move the images being displayed so that they match the position of the head. Most VR headsets have some form of head tracking to adjust their visual output to the user’s point of view. Orientation tracking uses accelerometers, gyroscopes, and magnetometers to determine how the user’s head is turned. Some position tracking systems, such as the one used by the HTC Vive, require extra hardware set up around the room.[24]
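To make the orientation-tracking idea concrete, the sketch below fuses a gyroscope rate with an accelerometer reading using a complementary filter, a common textbook technique. It is not taken from the cited source and is not how any specific headset is known to work; the function names, the axis convention (z pointing up at rest), and the blend factor `alpha` are assumptions.

```python
import math


def accel_pitch(ax, ay, az):
    """Pitch angle in radians implied by the direction of gravity.

    Assumes the accelerometer reads roughly (0, 0, +g) when the head is level.
    """
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_filter(pitch, gyro_rate, accel, dt, alpha=0.98):
    """Update a pitch estimate from one gyroscope and accelerometer sample.

    pitch:     previous estimate in radians
    gyro_rate: angular velocity about the pitch axis in radians per second
    accel:     (ax, ay, az) accelerometer sample
    dt:        time since the previous sample in seconds
    alpha:     weight given to the integrated gyroscope estimate
    """
    gyro_estimate = pitch + gyro_rate * dt  # responsive, but drifts over time
    return alpha * gyro_estimate + (1 - alpha) * accel_pitch(*accel)
```

The gyroscope term follows fast head motion, while the small accelerometer term slowly pulls the estimate back toward the gravity reference, which limits drift.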

Eye-tracking enables software to capture which way the user’s eyes are looking and respond accordingly. Infrared light is directed toward the participant’s pupils, causing reflections in both the pupil and the cornea that a camera records. These reflections, a technique known as pupil center corneal reflection (PCCR), can provide information about the movement and direction of the eyes. Eye position can also be used as input. Eye-tracking can be used to capture and analyze visual attention using gaze points, fixations, heatmaps, and areas of interest (AOI). Eye-tracking can also provide output metrics, such as time to first fixation, time spent, and fixation sequences.[25]
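The output metrics mentioned above can be derived from a list of fixations and an area of interest. The following is a minimal sketch under stated assumptions: the `Fixation` and `AreaOfInterest` types, the rectangular AOI, and millisecond timestamps are all hypothetical choices for illustration rather than the format of any particular eye tracker.

```python
from typing import NamedTuple, Optional, Sequence


class Fixation(NamedTuple):
    start_ms: float     # when the fixation began
    duration_ms: float  # how long the gaze stayed put
    x: float            # gaze point, in screen or scene coordinates
    y: float


class AreaOfInterest(NamedTuple):
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, fixation: Fixation) -> bool:
        return (self.left <= fixation.x <= self.right
                and self.top <= fixation.y <= self.bottom)


def time_to_first_fixation(fixations: Sequence[Fixation],
                           aoi: AreaOfInterest) -> Optional[float]:
    """Start time of the earliest fixation inside the AOI, or None if never viewed."""
    hits = [f.start_ms for f in fixations if aoi.contains(f)]
    return min(hits) if hits else None


def time_spent(fixations: Sequence[Fixation], aoi: AreaOfInterest) -> float:
    """Total duration of all fixations that fall inside the AOI."""
    return sum(f.duration_ms for f in fixations if aoi.contains(f))
```

Even this small example shows why gaze data is sensitive: two numbers per on-screen element are enough to rank what a person noticed first and what held their attention longest.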

Full-body tracking is the process of tracing the humanlike movements of the virtual subject within the immersive environments. The location coordinates of moving objects are recorded in real-time via head-mounted displays (HMDs) and multiple motion controller peripherals to fully capture the movement of the entire body of the subject and represent them and their movements inside the virtual space.[26]

Inside-Out tracking is a method of positional tracking commonly used in VR technologies, specifically for tracking the position of head-mounted displays (HMDs) and motion controller accessories. It differs from outside-in tracking in the location of the cameras or other sensors that are used to determine the object’s position in space. For inside-out positional tracking, the camera or sensors are located on the device being tracked (e.g., an HMD), while for outside-in positional tracking, the sensors are placed in stationary locations. A VR device using inside-out tracking looks out to determine how its position changes relative to the environment. When the headset moves, the sensors readjust the user’s place in the room and the virtual environment responds accordingly in real time. This type of positional tracking can be achieved with or without markers placed in the environment. The latter is called markerless inside-out tracking.[27]

Outside-In tracking is a form of positional tracking where fixed external sensors placed around the viewer are used to determine the position of the headset and any associated tracked peripherals. Various methods of tracking can be used, including, but not limited to, optical and infrared.[28]

Biometric Tracking complements VR technology by monitoring and feeding back the user’s heart rate, respiration rate, pulse oximetry, and blood pressure. In traditional VR systems, tracking is used to provide a more immersive experience and ranges from head position and angle to hand movements, eye tracking, and full-body tracking. Biometric tracking enables even more personalized data collection, which can potentially be used for both beneficial and harmful purposes.[29]

Gaze: Using eye-tracking technology, one can track the direction (ray) in which the user is looking in the virtual scene and potentially infer the user’s emotional response from accompanying signals, such as increased heart rate or dilated pupils.[30]
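Treating gaze as a ray makes it straightforward to test which virtual object the user is looking at. The sketch below checks a gaze ray against a sphere that stands in for an object's bounding volume. The function name `gaze_hits_sphere` and the use of plain tuples for vectors are assumptions for illustration; real engines provide their own ray-casting utilities.

```python
import math


def gaze_hits_sphere(origin, direction, center, radius):
    """Return True if a gaze ray intersects a sphere.

    origin, direction, center: 3-tuples of floats; direction need not be unit length.
    """
    length = math.sqrt(sum(c * c for c in direction))
    if length == 0:
        raise ValueError("gaze direction must be non-zero")
    d = tuple(c / length for c in direction)

    # Vector from the eye to the sphere center.
    oc = tuple(c - o for c, o in zip(center, origin))
    dist_sq = sum(c * c for c in oc)
    if dist_sq <= radius * radius:
        return True  # the eye is inside the sphere

    # Distance along the ray to the point of closest approach.
    t = sum(a * b for a, b in zip(oc, d))
    if t < 0:
        return False  # the sphere is behind the viewer

    closest_sq = dist_sq - t * t
    return closest_sq <= radius * radius
```

Logging the result of such a test every frame, together with timestamps, is enough to reconstruct what a user looked at and for how long.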

Sharing

The privacy aspects of VR platforms are currently not well understood. OVRseen: Auditing Network Traffic and Privacy Policies in Oculus VR (OVR) was a large-scale study that measured and characterized the privacy aspects of OVR apps and the platform from a combined network and privacy policy point of view. By comparing the data flows found in the network traffic with statements made in the apps’ privacy policies, the study found that approximately 70% of OVR data flows were not properly disclosed. Using additional context from the privacy policies, it found that 69% of the data flows were used for purposes unrelated to the core functionality of the apps. Data flows extracted from the network traffic of the 140 OVR apps showed that 33 and 70 apps send PII data types (e.g., Device ID, User ID, and Android ID) to first- and third-party destinations, respectively. Notably, 58 apps expose VR sensory data (e.g., physical movement, play area) to third parties. Unlike other popular platforms (e.g., Android and Smart TVs), OVR exposes data primarily to tracking and analytics services and has a less diverse tracking ecosystem. No data exposure to advertising services was found, as ads on OVR were still in an experimental phase.[31]
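The core comparison in such an audit, observed data flows versus flows disclosed in a privacy policy, can be illustrated with set operations. This is a deliberately simplified sketch and not the study's actual pipeline: the `Flow` record, its fields, and the exact-match rule are assumptions, and real audits must handle vague or generic policy language that exact matching ignores.

```python
from typing import NamedTuple, Set


class Flow(NamedTuple):
    app: str          # hypothetical app identifier
    data_type: str    # e.g. "device_id", "play_area"
    destination: str  # e.g. "first_party", "analytics.example"


def undisclosed_flows(observed: Set[Flow], disclosed: Set[Flow]) -> Set[Flow]:
    """Flows seen in network traffic that no policy statement covers."""
    return observed - disclosed


def undisclosed_share(observed: Set[Flow], disclosed: Set[Flow]) -> float:
    """Fraction of observed flows that are undisclosed (0.0 if none were observed)."""
    if not observed:
        return 0.0
    return len(undisclosed_flows(observed, disclosed)) / len(observed)
```

With four observed flows of which only one appears in the policy, `undisclosed_share` returns 0.75, the same kind of ratio as the headline figure reported by the study.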

See also

References

1. “The XRSI Definitions of Extended Reality (XR) - XRSI – XR Safety Initiative.” XRSI, XR Safety Initiative, 3 May 2021, https://xrsi.org/publication/the-xrsi-definitions-of-extended-reality-xr.

2. Dick, Ellysse. “Balancing User Privacy and Innovation in Augmented and Virtual Reality.” Balancing User Privacy and Innovation in Augmented and Virtual Reality, Information Technology and Innovation Foundation, 4 Mar. 2021, https://itif.org/publications/2021/03/04/balancing-user-privacy-and-innovation-augmented-and-virtual-reality.

3. “Comparison of Virtual Reality Headsets.” Wikipedia, Wikimedia Foundation, 6 Jan. 2022, https://en.wikipedia.org/wiki/Comparison_of_virtual_reality_headsets.

4. “Comparison of Virtual Reality Headsets.” Wikipedia, Wikimedia Foundation, 6 Jan. 2022, https://en.wikipedia.org/wiki/Comparison_of_virtual_reality_headsets.

5. “Comparison of Virtual Reality Headsets.” Wikipedia, Wikimedia Foundation, 6 Jan. 2022, https://en.wikipedia.org/wiki/Comparison_of_virtual_reality_headsets.

6. “The XRSI Definitions of Extended Reality (XR) - XRSI – XR Safety Initiative.” XRSI, XR Safety Initiative, 3 May 2021, https://xrsi.org/publication/the-xrsi-definitions-of-extended-reality-xr.

7. “The XRSI Definitions of Extended Reality (XR) - XRSI – XR Safety Initiative.” XRSI, XR Safety Initiative, 3 May 2021, https://xrsi.org/publication/the-xrsi-definitions-of-extended-reality-xr.

8. “The XRSI Definitions of Extended Reality (XR) - XRSI – XR Safety Initiative.” XRSI, XR Safety Initiative, 3 May 2021, https://xrsi.org/publication/the-xrsi-definitions-of-extended-reality-xr.

9. “The XRSI Definitions of Extended Reality (XR) - XRSI – XR Safety Initiative.” XRSI, XR Safety Initiative, 3 May 2021, https://xrsi.org/publication/the-xrsi-definitions-of-extended-reality-xr.

10. “The XRSI Definitions of Extended Reality (XR) - XRSI – XR Safety Initiative.” XRSI, XR Safety Initiative, 3 May 2021, https://xrsi.org/publication/the-xrsi-definitions-of-extended-reality-xr.

11. “The XRSI Definitions of Extended Reality (XR) - XRSI – XR Safety Initiative.” XRSI, XR Safety Initiative, 3 May 2021, https://xrsi.org/publication/the-xrsi-definitions-of-extended-reality-xr.

12. “The XRSI Definitions of Extended Reality (XR) - XRSI – XR Safety Initiative.” XRSI, XR Safety Initiative, 3 May 2021, https://xrsi.org/publication/the-xrsi-definitions-of-extended-reality-xr.

13. “The XRSI Definitions of Extended Reality (XR) - XRSI – XR Safety Initiative.” XRSI, XR Safety Initiative, 3 May 2021, https://xrsi.org/publication/the-xrsi-definitions-of-extended-reality-xr.

14. “The XRSI Definitions of Extended Reality (XR) - XRSI – XR Safety Initiative.” XRSI, XR Safety Initiative, 3 May 2021, https://xrsi.org/publication/the-xrsi-definitions-of-extended-reality-xr.

15. “The XRSI Definitions of Extended Reality (XR) - XRSI – XR Safety Initiative.” XRSI, XR Safety Initiative, 3 May 2021, https://xrsi.org/publication/the-xrsi-definitions-of-extended-reality-xr.

16. “An Imperative - Developing Standards for Safety and Security in XR Environments - XRSI – XR Safety Initiative.” XRSI, XR Safety Initiative, 25 Feb. 2021, https://xrsi.org/publication/an-imperative-developing-standards-for-safety-and-security-in-xr-environments.

17. “An Imperative - Developing Standards for Safety and Security in XR Environments - XRSI – XR Safety Initiative.” XRSI, XR Safety Initiative, 25 Feb. 2021, https://xrsi.org/publication/an-imperative-developing-standards-for-safety-and-security-in-xr-environments.

18. Dick, Ellysse. “Balancing User Privacy and Innovation in Augmented and Virtual Reality.” Balancing User Privacy and Innovation in Augmented and Virtual Reality, Information Technology and Innovation Foundation, 4 Mar. 2021, https://itif.org/publications/2021/03/04/balancing-user-privacy-and-innovation-augmented-and-virtual-reality.

19. Dick, Ellysse. “Balancing User Privacy and Innovation in Augmented and Virtual Reality.” Balancing User Privacy and Innovation in Augmented and Virtual Reality, Information Technology and Innovation Foundation, 4 Mar. 2021, https://itif.org/publications/2021/03/04/balancing-user-privacy-and-innovation-augmented-and-virtual-reality.

20. Dick, Ellysse. “Balancing User Privacy and Innovation in Augmented and Virtual Reality.” Balancing User Privacy and Innovation in Augmented and Virtual Reality, Information Technology and Innovation Foundation, 4 Mar. 2021, https://itif.org/publications/2021/03/04/balancing-user-privacy-and-innovation-augmented-and-virtual-reality.

21. Dick, Ellysse. “Balancing User Privacy and Innovation in Augmented and Virtual Reality.” Balancing User Privacy and Innovation in Augmented and Virtual Reality, Information Technology and Innovation Foundation, 4 Mar. 2021, https://itif.org/publications/2021/03/04/balancing-user-privacy-and-innovation-augmented-and-virtual-reality.

22. Dick, Ellysse. “Balancing User Privacy and Innovation in Augmented and Virtual Reality.” Balancing User Privacy and Innovation in Augmented and Virtual Reality, Information Technology and Innovation Foundation, 4 Mar. 2021, https://itif.org/publications/2021/03/04/balancing-user-privacy-and-innovation-augmented-and-virtual-reality.

23. “The XRSI Definitions of Extended Reality (XR) - XRSI – XR Safety Initiative.” XRSI, XR Safety Initiative, 3 May 2021, https://xrsi.org/publication/the-xrsi-definitions-of-extended-reality-xr.

24. “The XRSI Definitions of Extended Reality (XR) - XRSI – XR Safety Initiative.” XRSI, XR Safety Initiative, 3 May 2021, https://xrsi.org/publication/the-xrsi-definitions-of-extended-reality-xr.

25. “The XRSI Definitions of Extended Reality (XR) - XRSI – XR Safety Initiative.” XRSI, XR Safety Initiative, 3 May 2021, https://xrsi.org/publication/the-xrsi-definitions-of-extended-reality-xr.

26. “The XRSI Definitions of Extended Reality (XR) - XRSI – XR Safety Initiative.” XRSI, XR Safety Initiative, 3 May 2021, https://xrsi.org/publication/the-xrsi-definitions-of-extended-reality-xr.

27. “The XRSI Definitions of Extended Reality (XR) - XRSI – XR Safety Initiative.” XRSI, XR Safety Initiative, 3 May 2021, https://xrsi.org/publication/the-xrsi-definitions-of-extended-reality-xr.

28. “The XRSI Definitions of Extended Reality (XR) - XRSI – XR Safety Initiative.” XRSI, XR Safety Initiative, 3 May 2021, https://xrsi.org/publication/the-xrsi-definitions-of-extended-reality-xr.

29. “The XRSI Definitions of Extended Reality (XR) - XRSI – XR Safety Initiative.” XRSI, XR Safety Initiative, 3 May 2021, https://xrsi.org/publication/the-xrsi-definitions-of-extended-reality-xr.

30. “The XRSI Definitions of Extended Reality (XR) - XRSI – XR Safety Initiative.” XRSI, XR Safety Initiative, 3 May 2021, https://xrsi.org/publication/the-xrsi-definitions-of-extended-reality-xr.

31. R. Trimananda, H. Le, H. Cui, J. T. Ho, A. Shuba, A. Markopoulou, “Auditing Network Traffic and Privacy Policies in Oculus VR”, arXiv preprint arXiv:2106.05407, Technical Report (extended paper). June 2021.