Difference between revisions of "Fact Checking"

Revision as of 15:16, 27 January 2022

Fact-checking is the process of investigating an issue or piece of information in order to verify the facts. It has become increasingly relevant to the discussion of the role that major social media companies play as news sources. According to a 2018 survey, over two-thirds of Americans get some of their news from social media. The survey reported that 43% of Americans get their news from Facebook, 21% from YouTube, and 12% from Twitter [1]. While checking the information you are exposed to has always been part of the discussion (e.g. using reliable sources, researching the author(s), etc.), there is now debate over who is responsible for fact-checking information online. Is it the responsibility of users to ensure their information comes from a reliable source, or is it the responsibility of the platforms that share this information (e.g. Facebook, Twitter, Google)? Fact-checking is important because exposure to misinformation can greatly influence people’s opinions and, in turn, their actions.

There are different types of information on the internet that might be fact-checked. Misinformation is incorrect or misleading information[2]. What distinguishes misinformation is that it is spread by people who do not know the information is false. An example of misinformation is “fake news”: many people share fake news without knowing that it is fake, because it catches their attention and is interesting. Disinformation, by contrast, is the spreading of false information by someone who knows that it is false[3].

History

Rise of Fact-Checking

The rise of the internet in the early 2000s allowed people to get their information from a wide variety of sources. People no longer had to rely on thoroughly edited newspapers or on radio to get their news. The growth of online platforms created many concerns, such as the concentration of power in the hands of a few large media companies and the growing likelihood of users getting trapped in echo chambers or filter bubbles. An echo chamber or filter bubble occurs when people become isolated from information that opposes their own perspectives and viewpoints. People tend to look for information that confirms their current beliefs, not information that challenges them. This means that either people need to fact-check the information they are exposed to for accuracy, or the distributors of the information (i.e. social media platforms) are responsible for monitoring the accuracy of information posted on their sites or notifying users that it might not be correct. Fact-checking becomes an especially prevalent topic of conversation around elections and political events such as the 2016 and 2020 US Presidential elections and the January 6th, 2021 Capitol Insurrection.

2016 US Presidential Election

The term "fake news" surged in popularity during the 2016 US Presidential Election. After the election and Donald Trump's victory, there was much debate about the impact that social media as a news platform, and the spread of "fake news" and misinformation on it, had on the results of the election. Research following the election concluded that fake news stories were shared more frequently than mainstream news stories, that many people who were exposed to fake news reported that they believed it, and that most fake news stories favored Donald Trump over his competitor Hillary Clinton[4]. These findings drove leaders in tech, government officials, and users of these platforms to look for a solution before the 2020 Presidential election.

Responsibility of Platforms

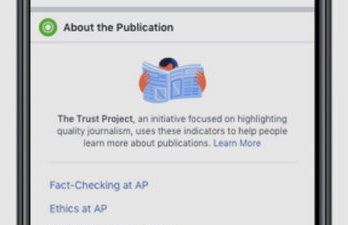

Third parties such as major social media platforms and government officials are facing pressure to combat the spread of fake news and misinformation. The United States of America aims both to protect the First Amendment right to free speech and to ensure its people have access to correct information. Until the last couple of years, users have generally borne the responsibility of fact-checking their news and evaluating it for truth. Below is a cross-analysis of different third parties' policies regarding fact-checking.

Facebook is an online social media and social networking service founded in 2004 by Mark Zuckerberg, who remains its CEO. Facebook is part of the technology conglomerate known as Meta, which also includes Instagram and WhatsApp. The policies outlined below apply to all Meta-owned applications, but Facebook is at the center of the discussion about fact-checking information.

Twitter is a social networking and microblogging platform founded in 2006 by Jack Dorsey. Its CEO is currently Parag Agrawal. 80% of Twitter's user base lies outside the U.S. [5]

YouTube is an online video sharing and social media platform owned by Google. It was founded in 2005. Its CEO is Susan Wojcicki.

Fact-Check Policies

| Policy | Facebook/Meta | YouTube/Google | |
|---|---|---|---|
| Misinformation Policy | Yes | Yes | No |
| Hate Speech | | | |
| Anonymous Accounts | | | |
| Recommendation Algorithms | | | |
| Hacked Content | | | |