Machine Learning Underlying Technology and Ethical Issues

Machine learning is a subfield of computer science that has its roots in the 1950s and gained recognition in academia during the 1970s. The field has grown significantly since then due to the rapid increase in computational power and the falling cost of computation. At a basic level, machine learning algorithms process and learn from a set of data called training data, and the model they build can then be used to make predictions about new data that they have not seen before[2]. Machine learning allows for analysis, pattern detection, and prediction on massive amounts of data, often far faster and more efficiently than a human or team of humans could. This has brought about many benefits, including image analysis for medical purposes, data analysis in business, and object recognition for autonomous systems, to name a few[3]. Despite the practicality of machine learning, unintended negative effects in the form of bias and unfairness have emerged from models. Harmful societal stereotypes have been unintentionally perpetuated through the use of machine learning, which has negatively impacted various groups of people. One reported incident involved the Apple Card, which was reported to have offered smaller lines of credit to women when an algorithm was used to determine creditworthiness. Conversations about the ethics of machine learning are becoming more widespread, and there has been an increase in research into mitigation strategies. Some common ways of reducing model bias are more frequent model and feature analysis, adversarial de-biasing, and regularization[4].

History

One of the first strides in the realm of machine learning was taken by Frank Rosenblatt at Cornell in 1957. Rosenblatt was a psychologist who developed one of the first machine learning algorithms, the perceptron. As a psychologist, his work on the perceptron was motivated by the human abilities of perception, recognition, concept formation, and generalization of experiences. With these abilities in mind, he developed the perceptron as an algorithm that could “learn” and then predict with probabilistic methods[5]. A basic perceptron algorithm operates on two assumptions: the data can be binary classified, and the data is linearly separable. Binary classification is where a data point can only be classified into one of two groups, for example, positive or negative. Data is called linearly separable if a straight line (or, in more than two dimensions, a hyperplane), called a decision boundary, can be drawn to separate the two groups. The perceptron algorithm is very simple but effective. It looks at one data point at a time and classifies it; if the classification is correct it does nothing, and if it is incorrect it updates the decision boundary. It continues to do this over all the data points until they are all classified correctly, which is guaranteed to happen only when the data is linearly separable, and then it stops. If the model is given a test point to predict, the prediction is determined by which side of the decision boundary the point falls on[6]. The perceptron can be viewed as a single-layer neural network that takes in a single data point and predicts its class, laying the groundwork for algorithms to come.
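The update loop described above can be sketched in a few lines of Python. This is a minimal illustration rather than Rosenblatt's original formulation: the function names, the +1/-1 label encoding, the `max_epochs` safety limit, and the toy data points are all assumptions made for the example.

```python
def train_perceptron(points, labels, max_epochs=100):
    """Learn weights and a bias for points labeled +1 or -1."""
    weights = [0.0] * len(points[0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in zip(points, labels):
            activation = sum(w * xi for w, xi in zip(weights, x)) + bias
            if y * activation <= 0:
                # Point is on the wrong side of (or exactly on) the boundary:
                # shift the boundary so that x moves toward the correct side.
                weights = [w + y * xi for w, xi in zip(weights, x)]
                bias += y
                mistakes += 1
        if mistakes == 0:
            break  # every training point is now classified correctly
    return weights, bias


def predict(weights, bias, x):
    """Classify x by which side of the decision boundary it falls on."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else -1


# Hypothetical, linearly separable toy data (invented for illustration).
points = [(2.0, 3.0), (3.0, 4.0), (-1.0, -2.0), (-3.0, -1.0)]
labels = [1, 1, -1, -1]
weights, bias = train_perceptron(points, labels)
```

Calling `predict(weights, bias, (4.0, 5.0))` then classifies a point the model has not seen before. The `max_epochs` limit is there because the loop would never terminate on data that is not linearly separable.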

Data Representation

Features

Machine learning algorithms are only as good as the training data they are given. Similar to a human, if given bad information the algorithm will make bad predictions when shown new information. The measurable properties of the data given to a machine learning algorithm are known as features. A single data point is represented as a feature vector, with each dimension of the vector being a different feature. Feature vectors can represent many different types of data, such as text data, image data, stock market data, and more[7]. Two- and three-dimensional feature vectors can be visualized by humans on a plot and can give some idea of how the algorithm is making its decisions. However, feature vectors can contain many more dimensions, which makes it extremely difficult for a human to find patterns. That is where machine learning algorithms are useful. The name of a feature doesn’t mean much to the computer; instead, the values and how they compare to other feature values help the algorithm construct models. This ability to recognize patterns in extremely large, high-dimensional datasets brings benefits, but it can also lead to bias, as it becomes hard to discern what is influencing the model[8].
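As a small illustration of the idea, the sketch below turns a record into a feature vector and compares two vectors by distance. The feature names and the values are hypothetical and chosen only for the example.

```python
import math

# Hypothetical features; each one becomes a dimension of the vector.
FEATURE_NAMES = ["height_cm", "weight_kg", "age_years"]


def to_feature_vector(record):
    """Convert a dict of named measurements into an ordered list of numbers."""
    return [float(record[name]) for name in FEATURE_NAMES]


def euclidean_distance(a, b):
    """Compare two feature vectors purely by their numeric values."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


dog_a = to_feature_vector({"height_cm": 60, "weight_kg": 30, "age_years": 4})
dog_b = to_feature_vector({"height_cm": 63, "weight_kg": 34, "age_years": 4})
distance = euclidean_distance(dog_a, dog_b)
```

Note that the algorithm only ever sees the numbers in `dog_a` and `dog_b`; the names in `FEATURE_NAMES` carry meaning for the human, not for the computation.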

Training Data

Training data is the initial data that is used as input for the algorithm, and it is what the features are drawn from. Because the machine learning model is only as good as the data it learns from, data collection, data augmentation, and data processing are a very important part of the role of a machine learning engineer. Data is now, more than ever, a valuable and competitive resource for making more informed decisions. Because data carries so much power, unethical practices in data collection have also become prevalent, which has led to various privacy and security concerns. Data is also being used by individuals, companies, and governments in harmful ways, either intentionally or unintentionally through bias[9].

Subcategories of Machine Learning

Supervised learning

Supervised learning is a subset of machine learning. It contains algorithms that use labeled training data in order to classify data they have not seen before. For example, if you were trying to predict dog breeds from an image, you would train the algorithm with images of dogs that have been labeled with the breed of dog displayed in the picture. In many cases, the data has to be labeled by humans, who can easily make mistakes. This can unintentionally introduce bias and error into models that are used for important tasks. Commonly used supervised learning algorithms include Support Vector Machines, Linear and Logistic Regression, K-nearest neighbors, and Neural Networks[10].
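To make the role of labels concrete, here is a minimal sketch of one of the algorithms listed above, K-nearest neighbors. The two-dimensional measurements, breed labels, and function name are assumptions invented for the example; a real image classifier would work with far larger feature vectors.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Label a query point by majority vote among its k nearest labeled points."""
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Hypothetical labeled training data: (height in cm, weight in kg) -> breed.
train_points = [(25, 5), (23, 4), (27, 6), (60, 30), (62, 32), (58, 28)]
train_labels = ["chihuahua", "chihuahua", "chihuahua",
                "labrador", "labrador", "labrador"]

small_dog = knn_predict(train_points, train_labels, (24, 5))
large_dog = knn_predict(train_points, train_labels, (61, 31))
```

Because the prediction is a vote over labeled examples, a mislabeled training point directly affects the predictions made near it, which is one way labeling mistakes propagate into a model.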

Unsupervised Learning

Unsupervised learning is a subset of machine learning. It contains algorithms that use unlabeled training data to find patterns, clusters, and structures within the data. New data points can then be assigned to whichever pattern or cluster they fit best in the model. An example of this can be seen with product recommendations. If two people buy the same product, and one of them goes on to purchase various other items, the system might recommend similar items to the other person. Some popular unsupervised learning algorithms include K-means Clustering, Spectral Clustering, Hierarchical Clustering, Gaussian Mixture Models, and Expectation-Maximization[11].
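The following is a minimal sketch of K-means clustering, one of the algorithms named above. It makes simplifying assumptions for illustration: the initial centroids are just the first `k` points, the number of iterations is fixed, and the data points are invented.

```python
import math


def kmeans(points, k, iterations=10):
    """Group unlabeled points into k clusters; returns (centroids, assignments)."""
    centroids = [points[i] for i in range(k)]  # naive initialization
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its cluster's points.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                centroids[c] = tuple(
                    sum(coords) / len(members) for coords in zip(*members)
                )
    return centroids, assignments


# Hypothetical unlabeled data with two visually obvious groups.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5),
          (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
centroids, assignments = kmeans(points, k=2)
```

No labels are supplied anywhere; the grouping in `assignments` comes only from how close the points are to each other.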

Other Categories of Algorithms

There are other subsets of machine learning, although supervised and unsupervised learning are the most popular. Other categories include semi-supervised learning and reinforcement learning. In semi-supervised learning, some of the training data is labeled and some is not. In reinforcement learning, the model uses the information it learns, as well as the actions it takes, in order to maximize a reward[12]. Reinforcement learning problems are commonly modeled as Markov decision processes, and a popular algorithm is Q-learning.

Popular Machine Learning Algorithms

Support Vector Machines

Support vector machines (SVMs) are a very popular and effective statistical learning method. SVMs address shortcomings of the perceptron: the data does not have to be linearly separable, and SVMs can also perform non-linear classification through a method called the kernel trick. This greatly expands the use cases of the algorithm and is what led to it being popular in industry, unlike the perceptron, which was mainly theoretical. SVMs are similar to logistic regression and are likewise used for classification. As with the perceptron, there is a decision boundary, or separating hyperplane, that is used to classify points. The difference is that the hyperplane is flanked by two parallel boundaries that form a margin, and the training points that lie closest to the hyperplane, which determine the margin, are called “support vectors”. In the soft-margin formulation, points inside the margin or on the wrong side of the hyperplane are penalized rather than forbidden, which is what allows for data that is not linearly separable and is where the method resembles logistic regression. In order to choose the separating hyperplane, an optimization problem must be solved, typically with quadratic programming or a gradient-based method. The goal of this optimization problem is to choose the hyperplane for which the margin, the distance between the hyperplane and the closest point, is maximized[13].
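The penalty on points that fall inside the margin or on the wrong side of the hyperplane is often written as the hinge loss. The sketch below trains a linear soft-margin SVM with simple subgradient steps on that loss. It is one possible approach under stated assumptions, not necessarily the solver described in the cited source: the regularization strength `reg`, the learning rate `lr`, the epoch count, and the toy data are all hypothetical.

```python
def hinge_loss(weights, bias, x, y):
    """Zero when x is outside the margin on the correct side, positive otherwise."""
    margin = y * (sum(w * xi for w, xi in zip(weights, x)) + bias)
    return max(0.0, 1.0 - margin)


def train_linear_svm(points, labels, reg=0.01, lr=0.1, epochs=200):
    """Minimize regularized hinge loss with per-sample subgradient steps."""
    weights = [0.0] * len(points[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(points, labels):
            margin = y * (sum(w * xi for w, xi in zip(weights, x)) + bias)
            # Regularization step: shrinking the weights widens the margin.
            weights = [w - lr * reg * w for w in weights]
            if margin < 1:
                # Point is inside the margin or misclassified: penalize it.
                weights = [w + lr * y * xi for w, xi in zip(weights, x)]
                bias += lr * y
    return weights, bias


def svm_predict(weights, bias, x):
    """Classify x by the side of the separating hyperplane it falls on."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else -1


# Hypothetical toy data with labels +1 and -1.
points = [(2.0, 3.0), (3.0, 4.0), (-1.0, -2.0), (-3.0, -1.0)]
labels = [1, 1, -1, -1]
weights, bias = train_linear_svm(points, labels)
```

Unlike the perceptron, which stops updating as soon as every point is on the correct side, this loop keeps adjusting the hyperplane whenever a point is correctly classified but still closer than the margin allows.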

Kernel Trick and Unfairness

SVMs’ ability to perform non-linear classification stems from the kernel trick. With this method, a non-linear function maps the feature vectors into a higher dimensional space, where the points are more spread out and therefore more likely to be linearly separable. The expensive computations do not need to be carried out explicitly in this higher dimensional space, because a kernel function computes the required inner products directly from the original feature vectors. This leads to better performance of the SVM, and a linear boundary in the higher dimensional space corresponds to a non-linear decision boundary in the original, lower dimensional space. The kernel trick has its benefits, enabling SVMs to handle very complex classification problems. However, there are downsides that come from the kernel trick as well. Mapping data to a higher dimensional space transforms it in a way where it can look very different from the original feature vectors. In the original data it is easy to see which dimension corresponds to which feature, and you can even tell to an extent how the SVM is making its decisions. Once the data is transformed, you lose knowledge of what the dimensions correspond to, so it becomes unclear what the SVM is basing its decisions on. This is an issue because if the final model classifies things in a way you didn’t expect, it is hard to know how to fix it. If the model unfairly favors a certain group of people, this can be a serious problem[14].
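A small numerical check can make the kernel trick more concrete. The sketch below uses the degree-2 polynomial kernel on two-dimensional inputs, chosen here only as an illustrative example, and shows that evaluating the kernel on the original vectors gives the same value as an inner product in a three-dimensional mapped space. The input values are arbitrary.

```python
import math


def dot(a, b):
    """Inner product of two vectors of equal length."""
    return sum(ai * bi for ai, bi in zip(a, b))


def explicit_map(x):
    """Map a 2D vector into the 3D space implied by the kernel below."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)


def poly_kernel(x, z):
    """Degree-2 polynomial kernel, computed in the original 2D space."""
    return dot(x, z) ** 2


x = (1.0, 2.0)
z = (3.0, 0.5)
kernel_value = poly_kernel(x, z)
explicit_value = dot(explicit_map(x), explicit_map(z))
```

The two values agree up to floating-point rounding, yet `poly_kernel` never constructs the mapped vectors. Notice also that the mapped dimensions, such as the product of the two original features, no longer correspond to a single original feature, which is the loss of interpretability described above.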

Artificial Neural Networks

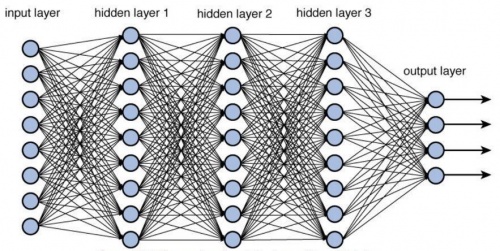

Artificial neural networks are one of the most popular machine learning algorithms within the software industry. They are very versatile, which makes them a first choice for many when using machine learning in an application. Neural networks are based on a simplified model of the human brain, hence their name. In the basic structure of a neural network, a set of input data is passed through input nodes. There is then a “hidden layer”, or many hidden layers, of nodes called neurons, where each node in a hidden layer connects to every node in the previous layer. Each node in the last hidden layer then connects to the output layer. The connections between the nodes carry the weights. Each node computes the weighted sum of the outputs of the nodes feeding into it and passes this sum through an activation function. There are many different types of activation functions, but they essentially determine how strongly that node is on or off for a given input. When training the neural network, the weights are updated as the data is fed through the network. The goal is to minimize the error of the neural network by optimizing the loss function through gradient descent. A loss function is a function that quantifies the error of the system, and this error needs to be minimized for better performance. It is the job of the engineer to design the architecture of the neural network, such as the number of hidden layers and the activation functions used. The engineer also controls hyperparameters for the training process, such as the learning rate and the number of epochs to run for[15]. There are different types of neural networks as well. Convolutional neural networks are good for image recognition, as they take spatial aspects of the image into account. Recurrent neural networks can remember state across time steps of execution, which can be useful for stock prediction, as it is important to remember previous trends because they influence the present[16].
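The layer-by-layer computation described above can be sketched as a forward pass through a small fully connected network. This is only an illustration: the sigmoid activation, the squared-error loss, the layer sizes, and the weight values are assumptions, and training by gradient descent is not shown.

```python
import math


def sigmoid(z):
    """Activation function that squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases):
    """Each neuron takes a weighted sum of all inputs, then applies the activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Feed the inputs through every layer in order and return the outputs."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


def squared_error(predicted, target):
    """A simple loss function that quantifies the error of the outputs."""
    return sum((p - t) ** 2 for p, t in zip(predicted, target))


# Hypothetical network: 2 inputs -> 2 hidden neurons -> 1 output neuron.
layers = [
    ([[0.5, -0.5], [1.0, 1.0]], [0.0, -1.0]),  # hidden layer weights, biases
    ([[1.0, -1.0]], [0.0]),                    # output layer weights, biases
]
output = forward([1.0, 0.0], layers)
loss = squared_error(output, [1.0])
```

Training would repeatedly adjust the numbers in `layers` to reduce `loss`, while the structure of `layers` itself (how many layers and neurons) is the architecture chosen by the engineer.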

Bias in Neural Networks

Neural networks can seem magical to the user and, to an extent, to the programmer. This is because they act like a black box and, unlike some other algorithms, are not transparent when making decisions. The programmer knows how they designed the architecture, but they don’t know what the neural network will learn from a given set of inputs. The network will then be used to predict new data, and it could do this in a way that is very unexpected to the programmer[17]. In order to better understand which features are used to make decisions, researchers created Grad-CAM for convolutional neural networks. This method displays a heatmap over the input image, highlighting through gradient-based localization which parts of the image influenced the decision the most. One example where Grad-CAM revealed bias was in classifying dog breeds. For certain breeds, unexpected parts of the image were highlighted; for one breed, only the grass around the dog was highlighted. This indicates that the neural network was using the grass, not the dog itself, as a feature to make its prediction. Thus, when predicting new dogs, the model didn’t perform well, because it could see grass behind a different breed. This is a simple example; the problem is usually not as clear, and even if the issue is known it is not an easy one to solve[18]. One of the most common ways bias is introduced into neural networks is through overfitting. Overfitting occurs when a model fits its training data too closely, for example because it was trained for too long, so that it has a hard time generalizing to data it has not seen before.

Methods to Limit Bias in Neural Networks

There are steps a programmer can take to limit small-scale bias. Cross-validation of hyperparameters means trying out different hyperparameters of the neural network on different slices of the training data, using some slices for training and holding out the rest for validation. The programmer can decide which performance metric is the most important to maximize, as there are many; one very useful metric is AUROC. The area under the receiver operating characteristic curve (AUROC) measures the ability of the classifier to rank positive examples above negative ones. An AUROC of 0.5 corresponds to a random classifier, while a score of 1 is perfect. Cross-validation is also a great way of dealing with overfitting, as you can use it to tune how many epochs you train for. Data augmentation, such as converting images to grayscale, and transfer learning are other ways of curbing bias and improving performance. Transfer learning uses what was learned on a previous problem to solve a current one. So instead of training the network to classify a certain dog breed only on pictures of that exact breed, you would mainly train it on a different breed and then apply that learning to the breed you are trying to classify. The idea is that the algorithm will focus on the more important features that are unique to one breed and not just the fur color, for example, as there can be many breeds with the same color fur[19].
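The two evaluation ideas above, AUROC and cross-validation splits, can be sketched without any libraries. The AUROC function below uses the pairwise-ranking interpretation of the metric (the fraction of positive/negative pairs in which the positive example receives the higher score). The function names, the fold-splitting scheme, and the example scores are assumptions made for illustration.

```python
def auroc(labels, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count half."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


def k_fold_indices(n_samples, k):
    """Yield (training, validation) index lists, one pair per fold."""
    folds = [list(range(i, n_samples, k)) for i in range(k)]
    for i in range(k):
        validation = folds[i]
        training = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield training, validation


# Hypothetical classifier scores for four examples (1 = positive class).
example_auroc = auroc([1, 0, 1, 0], [0.9, 0.8, 0.4, 0.1])
splits = list(k_fold_indices(n_samples=6, k=3))
```

In a cross-validation run, a model would be trained once per entry in `splits` on the training indices and scored, for example with `auroc`, on the held-out validation indices, and the hyperparameters with the best average score would be kept.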

Real-World Impacts of Bias and Unfairness in Machine Learning

Machine learning algorithms are now used ubiquitously in society to make decisions that affect people's lives, under the false notion that they are less biased than humans. Proprietary algorithms are used to predict many facets of people's lives: for example, a person's ethnicity, who is considered a terrorist, whether you will get a loan, whether you should be fired, whether you are paroled, and how you are sentenced[20]. A very common belief is that algorithms are objective, and developers continue to claim that algorithms are neutral while people are biased and don’t make fair decisions. However, research during the 2010s showed that this is not always the case. Most people, including developers, put blind trust in the black box that many machine learning algorithms are. A popular algorithm, COMPAS, used in sentencing and parole hearings to estimate the likelihood of a person committing a future crime, has been under scrutiny due to ethical concerns. The nonprofit newsroom ProPublica investigated COMPAS and found that only 20% of people predicted to commit violent crimes actually went on to do so. It also found large racial disparities in the algorithm's errors: black defendants were wrongly labeled as “future criminals” at almost twice the rate of white defendants, and white defendants were mislabeled as low risk more often than black defendants. Scores like these can lead to longer sentences for black defendants and to more lasting negative effects after incarceration. COMPAS is run by a for-profit company, so the algorithm is proprietary, and people in the justice system don’t really know how it is making life-changing decisions. Because many algorithms are proprietary and how they work is kept secret, their results often go unquestioned. In the case of sentencing, insurance, loans, etc., when a human makes a decision there is usually research and a report that goes into the decision. The details of a decision made by a proprietary algorithm are usually secret and, most of the time, unclear, because for neural networks and other algorithms that make decisions in higher dimensional feature spaces it often cannot be deduced why a particular decision was made[21].
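One way disparities of this kind can be measured is by comparing error rates between groups, for instance the false positive rate: the share of people who did not reoffend but were still labeled high risk. The sketch below shows the calculation only. The records are invented purely for illustration and are not COMPAS data, and the group names and record layout are hypothetical.

```python
def false_positive_rate(records, group):
    """Share of a group's non-reoffenders who were labeled high risk.

    Each record is (group, predicted_high_risk, actually_reoffended).
    Returns None if the group has no non-reoffenders.
    """
    non_reoffenders = [r for r in records if r[0] == group and not r[2]]
    if not non_reoffenders:
        return None
    false_positives = [r for r in non_reoffenders if r[1]]
    return len(false_positives) / len(non_reoffenders)


# Invented toy records, NOT real data.
records = [
    ("A", True, False), ("A", True, False), ("A", False, False),
    ("A", False, False), ("A", True, True),
    ("B", True, False), ("B", False, False), ("B", False, False),
    ("B", False, False), ("B", False, True),
]
fpr_a = false_positive_rate(records, "A")
fpr_b = false_positive_rate(records, "B")
```

A model can have similar overall accuracy for two groups and still produce very different values of `fpr_a` and `fpr_b`, which is why error rates are usually inspected per group rather than only in aggregate.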

Accountability

There is not much accountability when it comes to algorithms. When a human makes a decision, they are held accountable for what they choose. Because of the societal notion that algorithms are unbiased and make decisions completely independently of humans, people don’t question the lack of accountability. In most industries, companies are liable for their products and decisions, but this hasn’t really been the case for developers of algorithms. There has been a recent push to place more responsibility on companies that develop algorithms in order to curb bias. Governing bodies and guidelines for algorithms have been proposed, similar to those that many other industries have in place to protect people[22].

References

- [1] https://towardsdatascience.com/training-deep-neural-networks-9fdb1964b964

- [2] P. Louridas and C. Ebert, "Machine Learning," in IEEE Software, vol. 33, no. 5, pp. 110-115, Sept.-Oct. 2016, doi: 10.1109/MS.2016.114.

- [3] P. Louridas and C. Ebert, "Machine Learning," in IEEE Software, vol. 33, no. 5, pp. 110-115, Sept.-Oct. 2016, doi: 10.1109/MS.2016.114.

- [4] Kumar, M., Roy, R., & Oden, K. D. (2020, September). Identifying Bias in Machine Learning Algorithms: CLASSIFICATION WITHOUT DISCRIMINATION. The RMA Journal, 103(1), 42. https://link.gale.com/apps/doc/A639368819/ITOF?u=umuser&sid=bookmark-ITOF&xid=277baa63

- [5] Rosenblatt, Frank (January 1957). "The Perceptron: A Perceiving and Recognizing Automaton (Project PARA)" (PDF). Report (85–460–1).

- [6] Daume, Hal (January 2017). “A Course in Machine Learning”. http://ciml.info/

- [7] Google Developers, Machine Learning Crash Course, https://developers.google.com/machine-learning/crash-course/framing/ml-terminology

- [8] Daume, Hal (January 2017). “A Course in Machine Learning”. http://ciml.info/

- [9] Mohanty, Soumendra, et al. Big Data Imperatives: Enterprise Big Data Warehouse, BI Implementations and Analytics. Apress, 2013.

- [10] IBM Cloud Education, Supervised Learning, Aug 2020, https://www.ibm.com/cloud/learn/supervised-learning

- [11] IBM Cloud Education, Unsupervised Learning, Sep 2020, https://www.ibm.com/cloud/learn/unsupervised-learning

- [12] Daume, Hal (January 2017). “A Course in Machine Learning”. http://ciml.info/

- [13] Guenther, N., & Schonlau, M. (2016). Support vector machines. Stata Journal, 16(4), 917+. https://link.gale.com/apps/doc/A573713862/AONE?u=umuser&sid=bookmark-AONE&xid=1bbf92e6

- [14] Guenther, N., & Schonlau, M. (2016). Support vector machines. Stata Journal, 16(4), 917+. https://link.gale.com/apps/doc/A573713862/AONE?u=umuser&sid=bookmark-AONE&xid=1bbf92e6

- [15] Shufflebarger, Charles, “What is a Neural Network?”, November 1992.

- [16] Daume, Hal (January 2017). “A Course in Machine Learning”. http://ciml.info/

- [17] https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7968615/

- [18] https://openaccess.thecvf.com/content_ICCV_2017/papers/Selvaraju_Grad-CAM_Visual_Explanations_ICCV_2017_paper.pdf

- [19] Daume, Hal (January 2017). “A Course in Machine Learning”. http://ciml.info/

- [20] Kumar, M., Roy, R., & Oden, K. D. (2020, September). Identifying Bias in Machine Learning Algorithms: CLASSIFICATION WITHOUT DISCRIMINATION. The RMA Journal, 103(1), 42.

- [21] Martin, K. Ethical Implications and Accountability of Algorithms. J Bus Ethics 160, 835–850 (2019). https://doi.org/10.1007/s10551-018-3921-3

- [22] Martin, K. Ethical Implications and Accountability of Algorithms. J Bus Ethics 160, 835–850 (2019). https://doi.org/10.1007/s10551-018-3921-3